Large Language Models Experiments Using Google Colab

In addition to using LLM APIs from OpenAI, Cohere, etc., you can also run smaller LLMs locally on your laptop if you have enough memory (and optionally a good GPU). When I experiment with self-hosted LLMs, I usually run them in the cloud using either Google Colab or a leased GPU server from Lambda Labs.

We will use the tiiuae/falcon-7b model from Hugging Face. You can read the documentation for this model on the Hugging Face site.

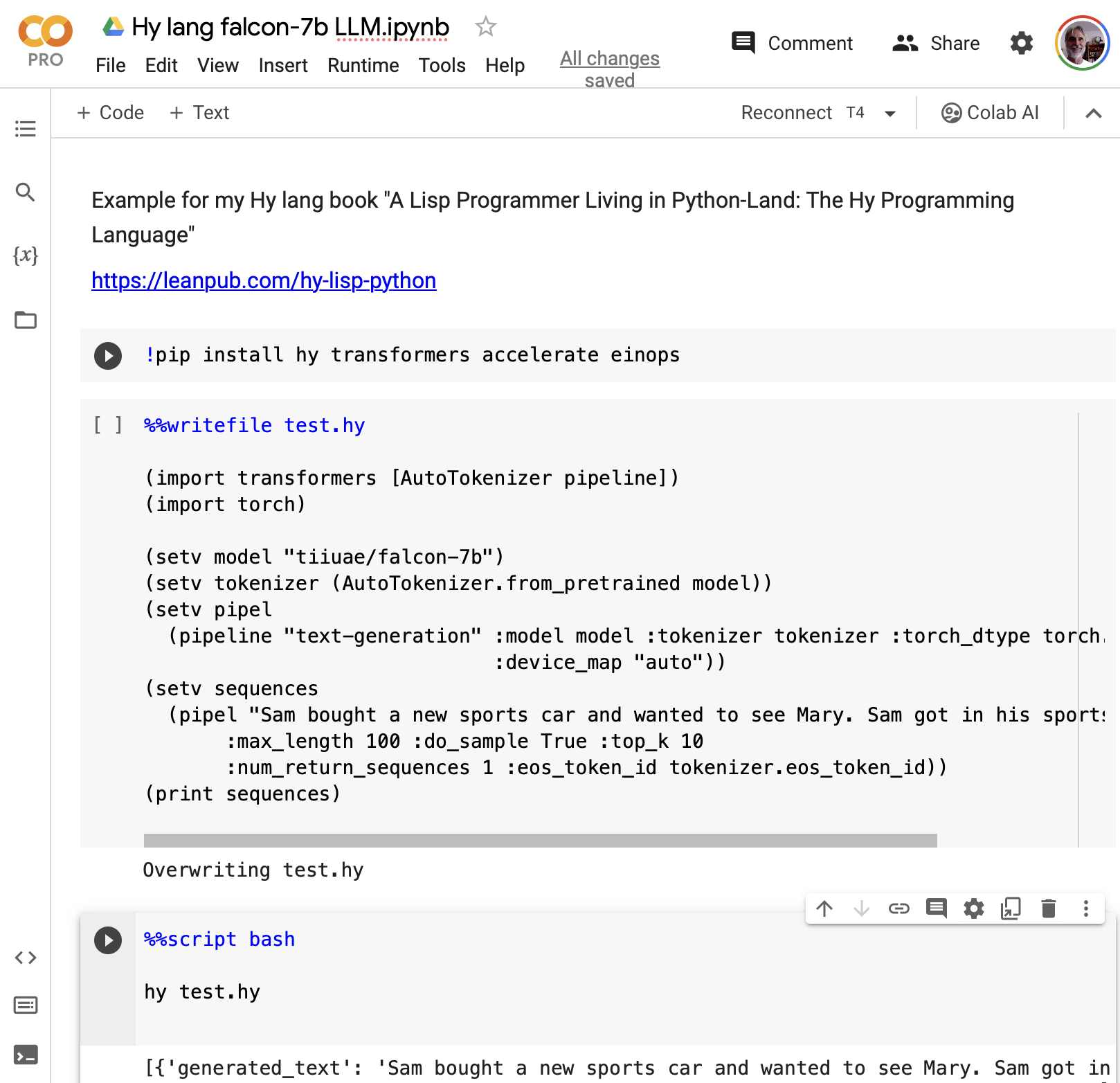

Google Colab directly supports only the Python and R languages. We can still use Hy: the cell magic %%writefile test.hy writes the contents of a notebook cell, in this case Hy source code, to a local file. For interactive development we will use the cell magic %%script bash to run hy test.hy because this will use the same process when we re-evaluate the notebook cell. If we instead ran our Hy script using !hy test.hy, then each time we evaluated the cell we would get a fresh Linux process, so work such as caching model files would be repeated.
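As a minimal sketch, the two notebook cells described above might look like the following. The one-line Hy program is only a placeholder, and the sketch assumes that hy has already been installed in the Colab runtime (for example with pip from an earlier cell). The first cell writes the Hy source to test.hy:

```
%%writefile test.hy
(print "Hello from Hy")
```

The second cell runs the file with the hy command-line tool:

```
%%script bash
hy test.hy
```

Note that a cell magic such as %%writefile must be the first line of its cell, so each of these fragments needs a cell of its own.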

Here we use the Colab notebook that is shown here:

If you have a laptop that can run this example, you can also run it locally by installing the dependencies:

    pip install hy transformers accelerate einops

Here is the code example:

    (import transformers [AutoTokenizer pipeline])
    (import torch)

    ;; Model ID on the Hugging Face Hub; the files are downloaded on first use.
    (setv model "tiiuae/falcon-7b")
    (setv tokenizer (AutoTokenizer.from_pretrained model))

    ;; Build a text-generation pipeline. With device_map "auto" the accelerate
    ;; library decides where to place the model (GPU if available, else CPU).
    (setv pipel
      (pipeline "text-generation" :model model :tokenizer tokenizer
                :torch_dtype torch.bfloat16
                :device_map "auto"))

    ;; Sample one continuation of the prompt; max_length counts prompt tokens too.
    (setv sequences
      (pipel "Sam bought a new sports car and wanted to see Mary. Sam got in his sports car and"
             :max_length 100 :do_sample True :top_k 10
             :num_return_sequences 1 :eos_token_id tokenizer.eos_token_id))
    (print sequences)

The generated text varies for each run. Here is example output:

    Sam bought a new sports car and wanted to see Mary. Sam got in his sports car and drove the 20 miles to Mary’s house. The weather was perfect and the road was nice and smooth. It didn’t take long to get there and Sam had a lot of time to relax on his way. The car was a lot of fun to drive because it had all kinds of new safety features, and Sam really felt in control of his sports car.
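The text-generation pipeline returns a list of dictionaries, one per returned sequence, with the text stored under the "generated_text" key, so printing the result directly shows that wrapper as well. The following sketch pulls out just the text. The helper name generated-texts is hypothetical, and the sequences value here is a hand-written stand-in so that the snippet runs without downloading the model:

```hy
;; Hand-written stand-in for the value returned by the pipeline (an assumption
;; for illustration; a real result has the same list-of-dictionaries shape).
(setv sequences
  [{"generated_text" "Sam got in his sports car and drove to Mary's house."}])

;; Hypothetical helper: collect the generated text from each result dictionary.
(defn generated-texts [results]
  (lfor r results (get r "generated_text")))

;; Print each generated sequence on its own line.
(for [text (generated-texts sequences)]
  (print text))
```

With num_return_sequences set to 1, as in the example above, the list contains a single string.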

As I write this chapter in September 2023, more small LLMs are being released that can run on laptops. If you use an M1 or M2 Mac with at least 16 GB of shared memory, it is now also easier to run LLMs locally. Macs with 64 GB or more of shared memory are very capable of both local self-hosted fine-tuning and inference. While it is certainly simpler to use APIs from OpenAI and other vendors, there are privacy and control advantages to running self-hosted models.
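To get a rough feel for these memory figures, the following back-of-the-envelope sketch estimates the memory needed for model weights alone: the number of parameters multiplied by the bytes per parameter. The function name weight-memory-gb and the round figure of 7 billion parameters are assumptions for illustration, and the estimate ignores activations, the generation cache, and other runtime overhead, so real usage is higher:

```hy
;; Bytes used to store one parameter in some common numeric formats.
(setv BYTES-PER-PARAM {"float32" 4 "bfloat16" 2 "int8" 1})

;; Hypothetical helper: approximate weight memory in GB (10^9 bytes).
(defn weight-memory-gb [n-params dtype]
  (/ (* n-params (get BYTES-PER-PARAM dtype)) 1e9))

;; Assumes roughly 7 billion parameters for a "7b" model.
(for [dtype ["float32" "bfloat16" "int8"]]
  (print dtype (weight-memory-gb 7e9 dtype) "GB"))
```

Under these assumptions a 7-billion-parameter model needs about 14 GB for its weights in bfloat16, the format used in the example above, which is why 16 GB of memory is close to the practical minimum without further size reduction.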