Semantic Navigator App Using Gradio

Even though this is a book on using local LLMs, I thought, dear reader, that it would be fun to add a complete web app example. It is also an effective application of LLMs to natural language processing (NLP) tasks that, a decade ago, would have required a major development effort and still would not have been as effective as the LLM-based solution we use here.

Overview of Semantic Web and Linked Data

The Semantic Web, often referred to as Web 3.0 or the Web of Data, represents an ambitious vision originally proposed by Tim Berners-Lee, the inventor of the World Wide Web. While the traditional web is a collection of documents designed for human consumption—where computers essentially act as mailmen delivering pages they cannot “understand”—the Semantic Web aims to make the underlying data machine-readable. By providing a common framework that allows data to be shared and reused across application, enterprise, and community boundaries, it transforms the internet from a library of isolated silos into a massive, interconnected global database.

At the heart of this transformation is a specific technology stack designed to categorize and link information. The Resource Description Framework (RDF) serves as the standard data model, breaking down information into “triples” (subject, predicate, and object) that describe relationships between entities. To ensure these entities are unique and discoverable, they are identified using Uniform Resource Identifiers (URIs), which function like permanent, global addresses for concepts rather than just web pages. The full stack is often visualized as a “Layer Cake,” progressing from basic syntax to complex logic and trust protocols.
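To make the triple idea concrete, here is a minimal sketch in plain Python. It does not use an RDF library. The `Triple` type, the `objects_of` helper, and the `http://example.org/` URIs are all hypothetical names chosen for illustration.

```python
from typing import List, NamedTuple


class Triple(NamedTuple):
    """One RDF-style statement: subject, predicate, object."""
    subject: str
    predicate: str
    obj: str


# Hypothetical namespace; example.org is reserved for documentation use.
EX = "http://example.org/"

triples = [
    Triple(EX + "Alice", EX + "worksFor", EX + "Acme"),
    Triple(EX + "Acme", EX + "locatedIn", EX + "Paris"),
    Triple(EX + "Alice", EX + "livesIn", EX + "Paris"),
]


def objects_of(subject: str, predicate: str) -> List[str]:
    """Return every object linked to `subject` by `predicate`."""
    return [t.obj for t in triples
            if t.subject == subject and t.predicate == predicate]


print(objects_of(EX + "Alice", EX + "worksFor"))
```

Because every entity is named by a full URI, two datasets that use the same URI for Alice can be merged by simply concatenating their triple lists.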

Linked Data provides the practical set of best practices required to realize this vision. It is governed by four core principles: using URIs as names for things, using HTTP URIs so people can look up those names, providing useful information via standards like RDF or SPARQL (a semantic query language), and including links to other URIs to facilitate discovery. When data is published according to these rules, it becomes part of the Linked Open Data (LOD) Cloud, a vast network of interlinked datasets, such as DBpedia or GeoNames, that allows machines to traverse the web of knowledge much like humans browse a web of pages.

In other books I have written examples of transforming text into RDF data (e.g., https://leanpub.com/lovinglisp and https://leanpub.com/racket-ai). In this chapter we identify entities and relationships between entities, storing this extracted data in JSON rather than RDF.
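As a rough illustration of how close the JSON representation is to RDF triples, the sketch below uses the `entities` and `links` keys that this chapter's extraction prompt asks for. The sample data and the `links_to_triples` helper are hypothetical and are not part of the app.

```python
import json

# Sample data in the shape requested by the extraction prompt
# (values are made up for illustration).
extracted = {
    "entities": [
        {"name": "Alice", "type": "person"},
        {"name": "Acme Corp", "type": "organization"},
    ],
    "links": [
        {"source": "Alice", "target": "Acme Corp",
         "relationship": "works for"},
    ],
}


def links_to_triples(data):
    """Convert each link into a (subject, predicate, object) tuple."""
    return [(link["source"], link["relationship"], link["target"])
            for link in data.get("links", [])]


triples = links_to_triples(extracted)
print(json.dumps(extracted, indent=2))
print(triples)
```

Each link already carries a subject, a predicate, and an object, so moving from this JSON to RDF later would mostly be a matter of assigning URIs to the names.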

Design Goals for the Semantic Navigator App

We develop an example web app that allows a user to paste in large blocks of text and extracts entities and relations between the identified entities.

I originally wrote this web app for the Hugging Face Spaces platform using a Hugging Face inference endpoint. I later modified the web app to run locally on my laptop using Ollama.

This web app can easily be hosted on a Linux server, with a reverse proxy such as nginx exposing it as a public endpoint on port 80.

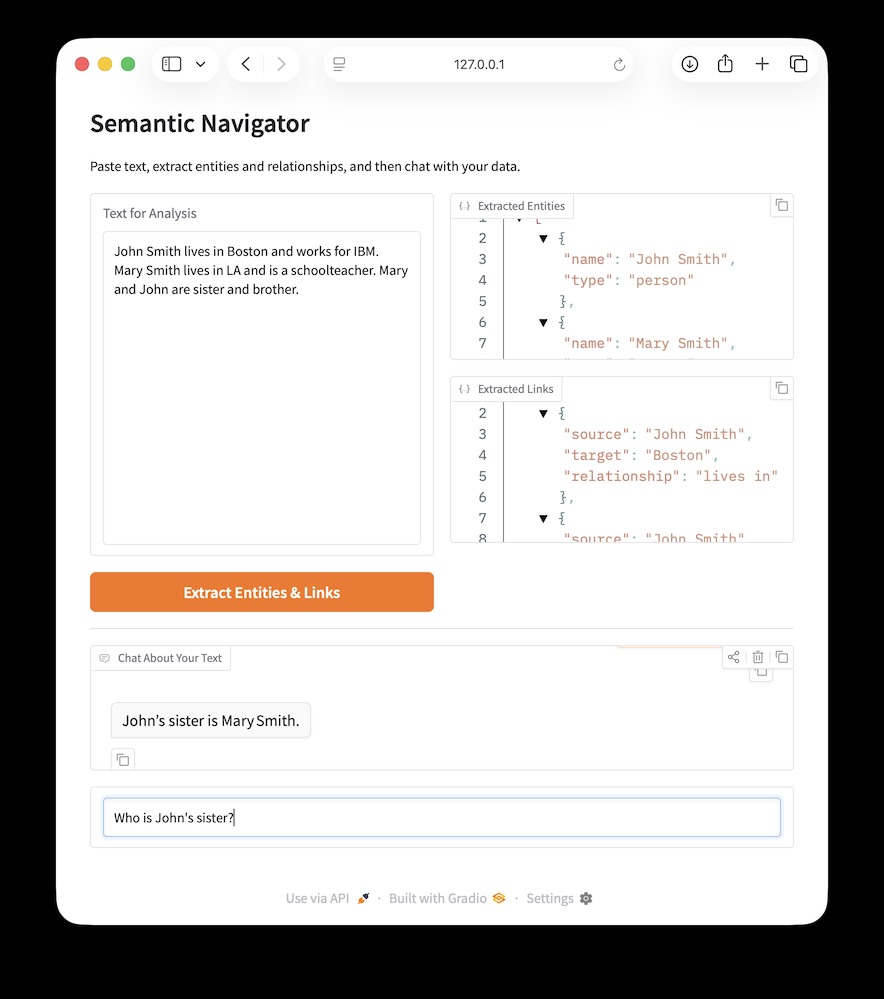

Before looking at the code, here is what the finished product looks like:

Implementation of the Semantic Navigator App Using Gradio

We will use the Gradio toolkit for creating interactive web apps. You can find detailed documentation here: https://www.gradio.app/docs.

The following program builds the “Semantic Navigator,” a Gradio web application that uses a Large Language Model (LLM) to transform unstructured prose into structured knowledge. Using the Ollama Python client library, the application sends requests to the gpt-oss:20b-cloud model to perform two natural language processing tasks: named entity recognition (NER) and relationship extraction. The code implements a two-stage workflow. Users first submit raw text for analysis, which triggers a system prompt that requests a specific JSON structure identifying persons, places, and organizations and the links between them. Users then explore that data through a context-aware chatbot. The implementation illustrates several common patterns: handling structured LLM output, managing state within a reactive UI, and a “RAG-lite” approach, loosely modeled on Retrieval-Augmented Generation, that constrains assistant responses to the extracted dataset.

```python
import gradio as gr
import os
import json
from ollama import Client

# This listing targets Ollama's hosted endpoint and reads the API key
# from the environment. A local Ollama server normally listens on
# http://localhost:11434 and needs no key.
client = Client(
    host="https://ollama.com",
    headers={'Authorization': os.environ.get("OLLAMA_API_KEY")}
)

MODEL = "gpt-oss:20b-cloud"

def extract_entities_and_links(text: str):
    """Uses an LLM to extract entities and links from text."""
    print("\n* Entered extract_entities_and_links function *\n")

    system_message = (
        "You are an expert in information extraction. From the given "
        "text, extract entities of type 'person', 'place', and "
        "'organization'. Also, identify links between these entities. "
        "Output the result as a single JSON object with two keys: "
        "'entities' and 'links'.\n"
        "- 'entities': list of objects, each with 'name' and 'type'.\n"
        "- 'links': list of objects, each with 'source', 'target', "
        "and 'relationship'.\n"
        "Example output format:\n"
        "{\n"
        "  \"entities\": [{\"name\": \"A\", \"type\": \"person\"}],\n"
        "  \"links\": [{\"source\": \"A\", \"target\": \"B\", "
        "\"relationship\": \"works for\"}]\n"
        "}"
    )

    messages = [
        {"role": "system", "content": system_message},
        {"role": "user", "content": text}
    ]

    try:
        response = client.chat(MODEL, messages=messages, stream=False)
        content = response['message']['content'].strip()

        # Strip Markdown formatting if present
        if content.startswith("```json"):
            content = content[7:-3].strip()
        elif content.startswith("```"):
            content = content[3:-3].strip()

        print("\n* Raw model output: *\n", content)
        data = json.loads(content)
        entities = data.get("entities", [])
        links = data.get("links", [])

        # Returned twice: once for the visible JSON panels and once
        # for the hidden gr.State components.
        return entities, links, entities, links

    except Exception as e:
        print(f"Error during extraction: {e}")
        raise gr.Error(f"Extraction failed. Details: {e}")

def chat_responder(message, history, entities, links):
    """Streaming chatbot using extracted entities as context."""
    print("\n* Entered chat_responder function *\n")

    if not entities and not links:
        system_message = (
            "You are a helpful-but-skeptical assistant. The user has "
            "not extracted any information yet. Politely ask them to "
            "paste text above and click 'Extract' first."
        )
    else:
        system_message = (
            "You are a helpful assistant. Use ONLY the following extracted "
            "entities and links to answer questions. If the answer is "
            "not in this data, state that clearly.\n"
            f"Entities: {json.dumps(entities, indent=2)}\n"
            f"Links: {json.dumps(links, indent=2)}"
        )

    # history is a list of {"role": ..., "content": ...} dicts
    messages = [{"role": "system", "content": system_message}]
    messages.extend(history)
    messages.append({"role": "user", "content": message})

    response_text = ""
    new_history = history + [
        {"role": "user", "content": message},
        {"role": "assistant", "content": ""}
    ]

    # Show the user's message right away and clear the input box
    yield new_history, ""

    # Append each streamed chunk to the assistant's reply
    for part in client.chat(MODEL, messages=messages, stream=True):
        if 'message' in part and 'content' in part['message']:
            token = part['message']['content']
            response_text += token
            new_history[-1]["content"] = response_text
            yield new_history, ""

# --- Gradio UI ---
with gr.Blocks(fill_height=True) as demo:
    gr.Markdown(
        "# Semantic Navigator\n"
        "Paste text, extract relationships, and chat with your data."
    )

    with gr.Row(scale=1):
        with gr.Column(scale=1):
            text_input = gr.Textbox(
                scale=1, lines=15, label="Text for Analysis",
                placeholder="Paste paragraphs of text here to analyze..."
            )
            extract_button = gr.Button("Extract Entities & Links",
                                       variant="primary")
        with gr.Column(scale=1):
            entities_out = gr.JSON(label="Entities", max_height="20vh")
            links_out = gr.JSON(label="Links", max_height="20vh")

    gr.Markdown("---")

    with gr.Column(scale=1):
        chatbot_display = gr.Chatbot(label="Chat Context",
                                     max_height="20vh")
        chat_input = gr.Textbox(
            show_label=False, lines=1,
            placeholder="Ask a question about the extracted data..."
        )

    # Hidden state shared between the two callbacks
    entity_state = gr.State()
    link_state = gr.State()

    extract_button.click(
        fn=extract_entities_and_links,
        inputs=[text_input],
        outputs=[entities_out, links_out, entity_state, link_state]
    )

    chat_input.submit(
        fn=chat_responder,
        inputs=[chat_input, chatbot_display, entity_state, link_state],
        outputs=[chatbot_display, chat_input]
    )

if __name__ == "__main__":
    demo.launch()
```

The core logic of this application resides in the extract_entities_and_links function, which serves as the bridge between raw text and structured data. It uses a system message to “program” the LLM to act as an information extraction expert and to return a single JSON object containing separate lists for entities and their relationships. For robustness, the function strips the Markdown code block wrappers that models often add around JSON responses before parsing. It returns the entities and links twice: one copy populates the visible gr.JSON panels and the other is stored in gr.State components, which persist the structured data across user interactions without cluttering the visible interface.
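The fence-stripping step can be made somewhat more forgiving. The sketch below is an alternative technique, not the approach used in the listing: it locates the outermost braces in the model output, so it also tolerates a prose preamble or a missing closing fence. The `parse_extraction` helper is a hypothetical name, and the sketch assumes the model returns exactly one JSON object.

```python
import json
from typing import List, Tuple


def parse_extraction(raw: str) -> Tuple[List[dict], List[dict]]:
    """Pull the entities and links lists out of raw model output.

    Assumes one JSON object in the text; everything before the first
    '{' and after the last '}' is ignored.
    """
    start = raw.find("{")
    end = raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw[start:end + 1])
    return data.get("entities", []), data.get("links", [])


# Build a fenced sample reply without writing a literal fence here.
FENCE = "`" * 3
sample = (
    "Here is the result:\n" + FENCE + "json\n"
    '{"entities": [{"name": "A", "type": "person"}], "links": []}\n'
    + FENCE
)

entities, links = parse_extraction(sample)
print(entities, links)
```

A helper like this could replace the slicing logic inside the `try` block. The trade-off is that it would misparse output containing more than one top-level JSON object.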

The interactivity is rounded out by the chat_responder function, a streaming chatbot that treats the previously extracted entities and links as its primary source of truth. By injecting the JSON data into the system prompt, the assistant is instructed to answer only from the provided context, which reduces (but cannot fully rule out) hallucinated outside information. The Gradio layout combines gr.Blocks, gr.Row, and gr.Column to place text input and the structured JSON output side by side, with the chat area below them. This web app is responsive and adjusts for mobile web browsers.
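The streaming pattern in chat_responder can be studied without a running model. The sketch below reproduces the accumulation loop against a hand-written list of chunks shaped like the streamed responses the listing reads (`part['message']['content']`). The `accumulate_stream` function and the `fake_parts` data are hypothetical stand-ins, not part of the app or the Ollama library.

```python
from typing import Dict, Iterable, Iterator, List

Message = Dict[str, str]


def accumulate_stream(history: List[Message], message: str,
                      parts: Iterable[dict]) -> Iterator[List[Message]]:
    """Yield a growing chat history as streamed chunks arrive."""
    response_text = ""
    new_history = history + [
        {"role": "user", "content": message},
        {"role": "assistant", "content": ""},
    ]
    for part in parts:
        if "message" in part and "content" in part["message"]:
            response_text += part["message"]["content"]
            new_history[-1]["content"] = response_text
            # Yield copies so earlier snapshots are not mutated later.
            yield [dict(m) for m in new_history]


# Stand-in for the chunks a streaming chat call would produce.
fake_parts = [{"message": {"content": text}}
              for text in ["Alice ", "works for ", "Acme."]]

snapshots = list(accumulate_stream([], "Where does Alice work?", fake_parts))
for snap in snapshots:
    print(snap[-1]["content"])
```

Each yielded snapshot corresponds to one UI update, which is why the assistant's reply appears to grow in place in the chat window.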