Table of Contents

Foreword

- Audience

- Structure of the book

- Non-Technical section

- Technical details follow

- Typographical conventions

- Where to find the code

- Modification Log

- Use of footnotes and external linking

| NOTE | |
|---|---|
| | Hello! I’m a Technology Wizard. You will see me whenever I have information of particular importance to the experienced technology audience. |

| NOTE | |
|---|---|
| | Hi! I’m Newbie. I’ll be buzzing around when I need to point out something to our novice readers who are new to all this technology stuff. |

Modification Log

| Seq | Date | Description |
|---|---|---|
| 1 | 2015-09-22 | Expanded the Introduction’s “So What Now …” section |
| | | Added the “What Will It Do?” section |
| | | Added the “A Change in Plans” section |
| 2 | 2015-09-23 | Expanded bullets in the “Familiar Turf” section |
| | | Moved the “What Will It Do” section to the “My Subject Application” chapter |

Introduction

Preface to My Moment

As a professional software architect, one of the enjoyable parts of my job is attending user conferences. Typically these are large events where knowledge can be acquired and professional contacts made. Lest I forget, both the hosting company and many of the exhibiting vendors are usually prepared to wine, dine, and generally schmooze customers, since conferences are a wonderful sales cultivation opportunity for them. Suffice it to say, a user conference is a place to learn, meet people, and generally have a ball in the process.

Recently I attended one of these conferences, during which I came to one very uncomfortable conclusion. I’m not nearly as smart as I thought.

There it is, my ugly moment of truth. I had allowed myself to become technically complacent and had fallen out-of-date in my own field of technology. If you happen to be in the business of information systems, then you are probably aware that being out-of-date is as professionally deadly as the Ebola virus and simply not to be tolerated.

Nothing but the Truth!

During the conference, I attended session after session filled with technical references to topics that I understood conceptually but had never used or laid my hands on. I knew very well what the phrase “Continuous Integration” referred to, and I could define it and even use it in a white paper. But I had never actually built or personally worked with a continuous integration process. The same was true of:

- Continuous Delivery

- Microservices

- Container-based Deployment

… and to my uncomfortable chagrin this list could have been much longer.

The session descriptions at this conference were filled with these somewhat familiar words and phrases, all of which are considered vital to the future of information services technology.

Currently, the evolutionary direction of information services involves the integration and adoption of “cloud computing” or, to use popular tech-speak, ‘moving to the Cloud’1. For those of you who are just salivating for a clean, precise, textbook definition, how about …

“Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction”2. Now that is one fine example of the tech-speak mumbo jumbo for which computer folks are so famous. (Although I think medical folks are just as guilty.)

For the rest of us, I should simply explain that my little bit of corporate America is currently consumed with the idea of ‘the Cloud’, and our best and brightest all agree that working in the Cloud involves all of the concepts mentioned above. So being able to spell them and use them in a sentence, regardless of how long that sentence may be, is just NOT good enough!

But how did a hip, young (OK, kidding myself there) technologist like me get into this situation? Hmmmmmmmmmmm. Let’s think about that. Not because I haven’t already, but because I suspect a few others out there are in a similar situation.

Familiar Turf

My primary role does not involve developing software. I’m no longer a developer by profession, heaven forbid! After years in corporate IT, I’ve successfully ascended the corporate ladder to the lofty position of Software Architect. So my colleagues find it absolutely unseemly that I continue to dirty my hands writing code. After all, that’s for developers!

However, as a Software Architect, I am still expected to have knowledge of the development process and its associated skill sets. I have always felt the best way to achieve and maintain this knowledge is to stay ‘hands-on’ with development tools and the overall SDLC3 process. For a number of years now I have happily coded in Java whenever I could conjure up an excuse. Occasionally I produced batch tools and web-based applications using J2EE4 concepts. This means that I’ve worked primarily with command-line applications and online applications built from servlets, JavaServer Pages, and relational databases.

My development pattern has been to use the Eclipse IDE5 to create and unit test my Java code. Since I work alone, using a source code control system has usually felt like overkill to me. Instead, I make periodic copies of my project folder and include the source code in the various Java Archive files I generate. This is definitely a low-tech means of protecting myself from inadvertently deleted or damaged source code, but it has worked quite effectively for my team of one for years.

For testing, my normal pattern is to use the main method of each class as a test harness from which I can construct and run unit tests. Once I’m ready for subsequent testing, I generate the appropriate form of Java Archive (JAR6 or WAR7) and manually deploy it, along with any other required archives, to the destination environment. Then I can perform various types of testing on the application.
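To make the “main method as test harness” habit concrete, here is a minimal sketch. The class NameFormatter and its formatName method are hypothetical, invented only for this illustration; the point is that the class carries its own checks in main() and fails loudly when one of them breaks.

```java
/**
 * Hypothetical example of the "main method as test harness" habit:
 * the class carries its own quick checks in main().
 */
class NameFormatter {

    /** Formats a name as "Surname, Given", or just the given name if there is no surname. */
    static String formatName(String given, String surname) {
        if (surname == null || surname.isBlank()) {
            return given;
        }
        return surname + ", " + given;
    }

    /** Test harness: run this class directly to exercise formatName. */
    public static void main(String[] args) {
        check("Creager, Donald".equals(formatName("Donald", "Creager")), "full name");
        check("Frances".equals(formatName("Frances", "")), "blank surname");
        check("Frances".equals(formatName("Frances", null)), "missing surname");
        System.out.println("All NameFormatter checks passed.");
    }

    // Fails loudly on the first broken expectation, otherwise reports progress.
    private static void check(boolean condition, String label) {
        if (!condition) {
            throw new AssertionError("Check failed: " + label);
        }
        System.out.println("ok: " + label);
    }
}
```

Running the class directly runs the checks. The same checks could later move into a test framework such as JUnit, where a build or continuous integration server runs them automatically instead of someone launching each class by hand.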

In the last few years, I have branched out from J2EE web applications into creating Ajax8 applications using the jQuery9 library and REST10 based web services on the back end. I felt this indicated that I was staying up-to-date and was still with it. Was I ever wrong!

How Did I Get Here?

As I learned at the conference, many of my design principles, development processes, and tools reflected the way I have been working: predominantly in isolation! Most of the time I create applications by myself. That means I have never personally used the techniques and products necessary to produce software on a larger scale in a team-based environment.

So What Now …

The cure for being out-of-date is well known: Retool or Retire! I’m not ready for a rocking chair yet, so I guess my choice is clear. The question is how to go about it.

My answer is going to be very consistent with my career path. I’m going to get my hands very, very dirty. I’m going to build a website! But much more than that, I’m going to build a website based on nonexistent requirements such as:

- It must be scalable up to many thousands of transactions per second

- It can effectively be coded by a team of developers

- It supports an effective DevOps environment

- It can be continuously updated and redeployed without interruption

By imposing these artificial requirements on my application, I will create a situation in which I must learn and use most of the technologies in which I currently lack personal proficiency.

A Change in Plans

So, I’ve been planning on creating a family website. However, up to this time it was largely a means to an end. As such, I planned on using a rather old-fashioned, tried-and-true approach: a standard J2EE website. Yup! Time to get the job done and stay in my comfort zone doing it. In fact, I already have a relational schema implemented in a cloud-based instance of MySQL for just that purpose.

But now I’ve had my Moment of Truth, and those plans will have to change. I’m still going to create my website, but based on my new made-up requirements, it is going to be very different from what I was planning a week or so ago. As I mentioned above, I’ve done some work already. So the best next step is to describe the application I’m going to build.

My Application

One of my interests is genealogy. We have a book11, prepared by my ancestors, on my family tree, but it is getting out of date. I want to extend it with more current information. I want to gather the current genealogical information for both my generation and subsequent living generations and update our family tree with this new data.

I am also aware of a variety of other branches of the Creager family. A simple Google search of our family name turns up countless members of our clan that I’ve never heard of. My hope is to discover our common ancestry and integrate these folks, as appropriate, into our family records.

Justifications

I actually have multiple motivations for doing this.

- I want to integrate current technology to produce a relatively easy mechanism for continuous maintenance of our family tree data.

- I’m hoping to enable the online generation of future Creager Family Books utilizing eBooks and self-publishing technology.

If I can achieve this, I can not only capture the growing number of family members in my particular branch of our tree, but also expand our scope to try to discover other family branches. This would allow us to ultimately tie together what we know to be a rather expansive family that has become fragmented over our years in America.

Approaches

At present I can think of three approaches to this problem.

- Use an existing online service to capture our information.

- Implement existing open source software on a custom website for our family.

- Create a custom application for our family.

Use an Existing Online Service

The cost of the existing online services is an issue. There are numerous online services available (e.g., ancestry.com, familysearch.org, etc.), some of which are subscription-based while others are advertised as free. However, in my experience, after about 5 minutes on most sites it is easy to see how they plan on getting paid, and it usually comes from the user. I don’t want my family members to have to subscribe in order to keep these records up-to-date or retrieve a copy of our family tree.

Limited access to our family data is also an issue. While most of these websites support GEDCOM12 exports, there are multiple implementations of the GEDCOM spec, and it is old and lacks many of the characteristics of a more current data specification. My plans require better access to our family’s data.

I’m also somewhat concerned about the long-term viability of some of these sites. We need an approach that can stand for many years.

Implement Open Source Software13

There is a plethora of software available on the subject of genealogy, and a great deal of it is open source. Take a quick look at GenSoft Reviews and you will quickly see what I mean. I reviewed several of these packages and had various issues with them all. Then I widened my search to open source AND reasonably priced software, which led me to Genealone. I implemented a Creager Family instance of Genealone and soon started to discover shortcomings. I could simply say it does what it does well, but it didn’t do what I wanted. In reality, I rejected this approach for two reasons:

- I don’t want to settle for an 80/20 solution.

- I want the experience of developing a very modern, highly scalable, cloud-based solution.

Create a Custom Application

I’ve settled on developing a custom website. Tell anyone who knows me professionally that I’ve decided to write my own application, and you will see no surprise on their face at all. You are more likely to see them smirk and giggle if you suggest that I would ever have done anything else. Well, enough said on that subject. The more relevant topic is what the custom application will do or, to use the appropriate professional jargon, what are its functional requirements?

Dictation Segment 1

For those of you who are not familiar with the term “functional requirements”, it refers to the general capabilities of the system or, to put it another way, what the application needs to do. For those who would like a bit more detail, I’ve written up the various types of requirements for this application, at a reasonably high level, in Appendix A.

The functional requirements of this application are:

- To capture the people and relationships that constitute a family over multiple generations.

- To enable the production of family records such as a family history

- To produce family trees

- You may not think of family trees as coming in various types, but they do.

Consider the following:

- If I ask “Who are my descendants?”, the answer is a family tree where my spouse and I are the root, and it extends down to everyone who comes after us.

- If I ask “Who are my ancestors?”, the answer is a family tree where I am the root, but it extends upward to all of the people who contributed to my creation, back multiple generations.
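The two kinds of tree differ only in which direction you walk the parent/child links. The following Java sketch assumes, purely for illustration, that those links live in two in-memory maps keyed by a person identifier (Me, Spouse, Son, and so on are placeholder names); the real application would read the links from the database.

```java
import java.util.*;

/**
 * Sketch: descendant and ancestor trees computed from parent/child links.
 * The Map-based representation is an illustrative assumption, not the
 * application's actual schema.
 */
class FamilyTreeQueries {

    // parent id -> ids of that parent's children
    private final Map<String, List<String>> childrenOf = new HashMap<>();
    // child id -> ids of that child's parents
    private final Map<String, List<String>> parentsOf = new HashMap<>();

    /** Records that child is a child of parent (stored in both directions). */
    void addParentChild(String parent, String child) {
        childrenOf.computeIfAbsent(parent, k -> new ArrayList<>()).add(child);
        parentsOf.computeIfAbsent(child, k -> new ArrayList<>()).add(parent);
    }

    /** Everyone below the given root(s): children, grandchildren, and so on. */
    Set<String> descendants(String... roots) {
        return walk(childrenOf, roots);
    }

    /** Everyone above the given person: parents, grandparents, and so on. */
    Set<String> ancestors(String person) {
        return walk(parentsOf, person);
    }

    // Breadth-first walk along one direction of the links; the "found" set
    // keeps each person once even if reachable by more than one path.
    private static Set<String> walk(Map<String, List<String>> links, String... start) {
        Set<String> found = new LinkedHashSet<>();
        Deque<String> queue = new ArrayDeque<>(Arrays.asList(start));
        while (!queue.isEmpty()) {
            String current = queue.poll();
            for (String next : links.getOrDefault(current, List.of())) {
                if (found.add(next)) {
                    queue.add(next);
                }
            }
        }
        return found;
    }

    public static void main(String[] args) {
        FamilyTreeQueries tree = new FamilyTreeQueries();
        tree.addParentChild("Grandpa", "Me");
        tree.addParentChild("Grandma", "Me");
        tree.addParentChild("Me", "Son");
        tree.addParentChild("Spouse", "Son");
        tree.addParentChild("Son", "Grandson");
        System.out.println(tree.descendants("Me", "Spouse")); // [Son, Grandson]
        System.out.println(tree.ancestors("Son"));            // [Me, Spouse, Grandpa, Grandma]
    }
}
```

Storing the links in both directions lets each query be a simple walk without scanning every person.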


Beyond family trees, there are documents whose generation I would like to automate. For example, we have what we call the “Book of Creager”, which consists of a series of stories about members of my family from previous generations along with their genealogical data. I would like to automate re-creating this book and extend it with new information as we gather it in our database.

One other necessary capability is uploading media. Now, what do I mean by media? The obvious things would be pictures and/or videos. However, in addition to the obvious media, there are documents such as:

- birth certificates

- marriage certificates

- divorce records

- death certificates

- adoption records

- immigration records

- military service records

Any number of forms of documentation could be captured.

The reason we need documentation is to establish the validity of the database. In order for our database to constitute a valid genealogical record, we have to substantiate the declarations that we make within it. Yes, you and I know we are telling the truth! But after our generations have passed away, when the third and/or fourth subsequent generations start looking at this data, there will be a need for proof.

I have thoughts about other features that I would like to include, but those are longer-term, lower-priority requirements. For now I consider them outside the scope of this book.

That covers a high-level view of the requirements and the rationale behind them. So now, let’s move on to the next step of the process, which is to begin development.

Beginning Development

I’m an ex-database administrator, so the logical place for me to start on an application is to consider the data that it will manage. There are other starting points, such as constructing the pattern of processing in the code, but I prefer to start with the data.

I naturally gravitate toward a relational database, which dictates the approach for creating the structure of the data.

| NOTE | |
|---|---|
| | I will be describing how we create the database schema through the process of logical database design. If you already have these skills, please skip ahead. |

| NOTE | |
|---|---|
| | (WN) Describe a relational database and why we care. |

- (WN) Add external link to definition of database schema, and add external link to definition of third normal form

The first step in designing a database is called logical design.

During logical design we seek to organize the data we care about into a series of logical groupings called entities. The entities are described by data elements known as attributes. Attributes are organized into instances of the entity, and the instances are collected into a set, which is called a relation.

Later, in a process called physical design, these logical constructs will be mapped to physical constructs. The physical counterparts are frequently much more recognizable.

| Logical | Physical |
|---|---|
| Relation | Table |
| Instance | Row |
| Attribute | Column |

Mapping Database constructs from Logical to Physical

So let’s think about the basic elements that we will have to capture in our database in order to create our family tree system. Considering the requirements stated above, we will need to represent a:

- Person

- Couple

- Family

- Event

- Media

Each of these elements is an entity in the design. To get a better understanding of entities consider the following excerpt from Jan L. Harrington’s book “Relational Database Design: Clearly Explained” …

“An entity is something about which we store data. A customer is an entity, as is a merchandise item stocked by Lasers Only. Entities are not necessarily tangible. For example, an event such as a concert is an entity; an appointment to see the doctor is an entity.”14

Based on these descriptions we have …

| Entity | Description |
|---|---|
| Person | An individual about whom we store data. |
| Couple | A pair of individuals who have bonded together. |
| Family | A couple and their offspring as a set of people. |
| Event | An action that has occurred and is worth documenting. |
| Media | A pre-defined component that we store and associate with other entities within our database. |
| Location | A place where events in our family tree have taken place. |

Logical Entities for our Application

“Entities have data that describe them (their attributes). For example, a customer entity is usually described by a customer number, first name, last name, street, city, state, zip code, and phone number. A concert entity might be described by a title, date, location and name of the performer.”15

Now, let’s start defining the attributes which describe our Person entity.

| Person | Description |
|---|---|
| id | An assigned number which uniquely identifies this person. |
| given | The person’s given (first) name. |
| surname | The person’s current family name. |
| birth_surname | The family name given at birth (a.k.a. maiden name). |
| gender | The gender or sex of the individual. |
| adopted | Indicates whether this person was adopted. |
| birthDate | The date of this person’s birth. |
| deathDate | The date of this person’s death. |
| birthloc | The location of this person’s birth. |
| deathloc | The location of this person’s death. |
| internment | The location where this person was buried. |
| occupation | The primary occupation of this person. |
| notations | Notes concerning this person. |

Entity/Attribute Definition for Person
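As a rough sketch of how the attribute table could carry over into code, the Person entity can be written as a Java record with one component per attribute. The Java types (LocalDate for the dates, String for locations) and the camel-case name birthSurname for birth_surname are assumptions for illustration only, not part of the design. One Person object then corresponds to one instance (a row), and a list of them plays the role of the relation (the table).

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;

/**
 * Sketch: the Person entity's attributes as a Java record.
 * Types are illustrative assumptions; null stands for "unknown".
 */
record Person(
        int id,                  // assigned number that uniquely identifies the person
        String given,            // given (first) name
        String surname,          // current family name
        String birthSurname,     // birth_surname: family name given at birth
        String gender,
        boolean adopted,
        LocalDate birthDate,
        LocalDate deathDate,     // null if living or unknown
        String birthloc,
        String deathloc,
        String internment,       // burial location
        String occupation,
        String notations) {
}

class PersonRelationDemo {
    public static void main(String[] args) {
        List<Person> personRelation = new ArrayList<>();   // the relation (table)
        // One instance (row); values not shown in the sample table are left null.
        personRelation.add(new Person(1, "Donald", "Creager", null, "Male",
                false, null, null, null, null, null, null, null));
        System.out.println(personRelation.size() + " instance(s); first given name: "
                + personRelation.get(0).given());
    }
}
```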

“When we represent entities in a database we actually store only the attributes. Each group of attributes that describes a single real-world occurrence of an entity acts to represent an instance of an entity.”16

Person

| id | given | surname | birthDate | deathDate | gender |
|---|---|---|---|---|---|
| 1 | Donald | Creager | 6/12 | 6/1 | Male |
| 2 | Virginia | Creager | 11/8 | 1/3 | Female |
| 3 | Charles | Creager | 10/25 | | Male |
| 4 | Frederick | Creager | 6/7 | | Male |
| 5 | Daniel | Creager | 7/16 | | Male |
| 6 | Kenneth | Creager | 2/18 | | Male |
| 7 | Richard | Creager | 3/6 | | Male |
| 8 | Michael | Creager | 4/11 | | Male |
| 9 | Frances | Lieffers | 10/10 | 7/7 | Female |

So we understand that entities are anything about which we store data in the form of attributes. Attributes about a single entity are gathered together to form a single instance of an entity. Multiple instances of an entity are grouped together to form a relation, which physical design turns into a table.

To begin with, we will have an entity known as Person.

(WN) Insert External link to definition of entity

There are other entities that we can immediately recognize in this particular application. There will be a family. There will be relationships between families, and relationships between people and their families. There will be various forms of media, including documents, videos, images, and/or audio recordings. All of these types of media will have relationships to people and to the various types of family units; by family units I mean a couple as opposed to a family. Each of these entities then has a collection of attributes that must be specified. If I consider the Person entity, we will have to create attributes for the person’s name, birth date, and death date. We could include a marriage date, but if you think about it, that makes more sense as an attribute of a couple, because an individual does not get married in isolation. We could have a list of children, but children belong to a couple or family as much as to an individual person.

I’m not going to take the time to do a full analysis of the data structure, because that gets into techniques that are outside the scope of what we’re actually discussing in this book. Please refer to Appendix B to see the results of this process, and if you want to investigate the process more deeply, you might consult books on data normalization and relational database design. (WN) Insert references here.

Dictation Segment 2

(Writing note) Focus on process rather than techniques. Exclusive use of open-source software. References to be added to the Foreword.

Once you have your schema (you can find ours in Appendix B), the interesting question is: how do you apply that schema and create an actual database in the cloud? In our case, we’re going to use the Amazon cloud service, the MySQL open source relational database engine, and MySQL Workbench, an open source tool for designing and administering MySQL databases.

(Writing note) Insert illustrations in this section, with specific process instructions on how to create a database using Amazon and how to install MySQL Workbench, then illustrate using Workbench to populate a database instance with the schema provided in Appendix B.

So now we have a relational database, and it has been populated with our particular schema for a family tree application. Some validation steps are necessary at this point to make sure that the schema works, because there’s no point in developing code around a data schema that is flawed. It’s also good practice to create some views of the database to simplify its use and keep the complexity of its use as compartmentalized as possible.

For those of you who are not familiar with database views: a schema will frequently refer to the contents of a foreign entity. Boy, this is starting to sound very jargon-filled, isn’t it? Let’s see if we can break this down more simply. We have an entity called Person. That person may well get to know another person, and they might form a couple. In a relational database, the way we’ll see that is we’ll have a Person entity that has two records in it, one for him and one for her. Next, we’ll have a Couple entity, and this will have one record that represents the two of them together. It would be a waste of space to list all of the information we know about each person in the couple record all over again: their birthdates, their names, anything we know about them would be duplicated. That’s very inefficient and causes problems in databases. To work around that problem, we’re going to store all of the individual knowledge about each person in the Person entity, and the Couple entity will simply refer to two person records. So if you think of him as record number three and her as record number one, the couple record is a single record that says record number one got together with record number three, and if you want to know any details, such as their names, you have to go over to the Person entity and pull the details from there. As you can see at this point, dealing with raw data tables in a relational database can lead to a lot of very unusual or unfriendly displays. The resolution of that situation is frequently the use of views.

So we will create a view for the Couple entity, and when you reference the view, instead of getting back the numbers of other person records, you’ll actually get something meaningful, like the name of the man, the name of the woman, and perhaps their wedding or anniversary date. That is far more useful than the native data tables.

To create a view, you write a query that produces the results you want and then save that query in the database.

Creating a view in this manner allows you to subsequently reference the view as if it were a table. The more complicated query is executed behind the scenes, and you get back the desired results without having to deal with the complexity of the underlying query.

As a best practice, I recommend that you create views which return lists rather than single rows. A list can always be qualified by a secondary WHERE clause to return only a single row, which makes it far more reusable. A view that returns a single row, by contrast, is much more limited in its usage.
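Here is a hedged sketch of what a couple view does. The comment shows how such a view might be declared in MySQL over hypothetical person and couple tables (the table and column names, including wedding_date, are assumptions, not the schema in Appendix B); the Java method performs the same join in memory so the behavior can be run and inspected. The view returns a list, and main then narrows that list to a single row, mirroring the best practice just described. The people and dates are made-up sample data.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

/*
 * In-memory sketch of a "couple view". A MySQL version over hypothetical
 * person and couple tables might look like:
 *
 *   CREATE VIEW couple_view AS
 *     SELECT c.id,
 *            CONCAT(h.given, ' ', h.surname) AS husband_name,
 *            CONCAT(w.given, ' ', w.surname) AS wife_name,
 *            c.wedding_date
 *     FROM couple c
 *     JOIN person h ON h.id = c.husband
 *     JOIN person w ON w.id = c.wife;
 */
class CoupleViewDemo {

    record PersonRow(int id, String given, String surname) {}
    // husband and wife hold the ids of rows in the person "table"
    record CoupleRow(int id, int husband, int wife, String weddingDate) {}
    record CoupleViewRow(int coupleId, String husbandName, String wifeName, String weddingDate) {}

    /** Plays the role of the view: resolves person ids into readable names for every couple. */
    static List<CoupleViewRow> coupleView(List<PersonRow> persons, List<CoupleRow> couples) {
        Map<Integer, PersonRow> byId = persons.stream()
                .collect(Collectors.toMap(PersonRow::id, Function.identity()));
        List<CoupleViewRow> rows = new ArrayList<>();
        for (CoupleRow c : couples) {
            PersonRow h = byId.get(c.husband());
            PersonRow w = byId.get(c.wife());
            if (h == null || w == null) {
                continue; // like an inner JOIN, skip couples whose ids match no person
            }
            rows.add(new CoupleViewRow(c.id(), h.given() + " " + h.surname(),
                    w.given() + " " + w.surname(), c.weddingDate()));
        }
        return rows;
    }

    public static void main(String[] args) {
        List<PersonRow> persons = List.of(
                new PersonRow(1, "Mary", "Jones"),
                new PersonRow(2, "Ann", "Lee"),
                new PersonRow(3, "John", "Smith"),
                new PersonRow(4, "Paul", "Lee"));
        // The raw couple rows only say "record 3 got together with record 1", etc.
        List<CoupleRow> couples = List.of(
                new CoupleRow(1, 3, 1, "1950-06-10"),
                new CoupleRow(2, 4, 2, "1962-09-01"));

        List<CoupleViewRow> all = coupleView(persons, couples);  // like SELECT * FROM couple_view
        System.out.println(all.size());                           // 2

        // Like SELECT * FROM couple_view WHERE id = 1: the list view narrowed to one row.
        all.stream()
                .filter(row -> row.coupleId() == 1)
                .findFirst()
                .ifPresent(row -> System.out.println(row.husbandName() + " & " + row.wifeName()));
        // prints: John Smith & Mary Jones
    }
}
```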

(Writing note) Insert an example of creating a view, with illustrations.

(Writing note) We need to insert a description of the use of foreign keys in relational databases, without the jargon.

(Writing note) Insert a section defining the characteristics of a relational database in industry terms.

Dictation Segment 3

(Writing note) Augment the description of starting with the database with the possibility of starting with the infrastructure of the web app code.

- We could also have started by establishing the application architecture within our web application. There are multiple ways to accomplish this; I will not review each of the possibilities but will go directly to my preferred method.

- My preference: the Model-View-Controller (MVC) pattern using JavaBeans

(Writing note) Insert reference to documentation of this pattern.

- With Struts and without Struts
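A minimal sketch of the Model-View-Controller split using a JavaBean, without any framework. In a J2EE application the controller role would typically be played by a servlet and the view by a JSP; here plain classes stand in for both so the example runs on its own. PersonBean, PersonView, and PersonController are hypothetical names chosen for this illustration.

```java
import java.io.Serializable;

/* Minimal MVC sketch without a framework; all names are hypothetical. */

/** Model: a JavaBean (public no-arg constructor, private fields, getters/setters). */
class PersonBean implements Serializable {
    private static final long serialVersionUID = 1L;
    private String given;
    private String surname;

    public PersonBean() { }

    public String getGiven() { return given; }
    public void setGiven(String given) { this.given = given; }
    public String getSurname() { return surname; }
    public void setSurname(String surname) { this.surname = surname; }
}

/** View: turns a bean into markup; in a web app this is usually a JSP. */
class PersonView {
    String render(PersonBean person) {
        return "<p>" + person.getGiven() + " " + person.getSurname() + "</p>";
    }
}

/** Controller: handles a request, fills the model, and hands it to the view. */
class PersonController {
    private final PersonView view = new PersonView();

    String handle(String given, String surname) {
        PersonBean bean = new PersonBean();   // a real app would load this from the database
        bean.setGiven(given);
        bean.setSurname(surname);
        return view.render(bean);
    }

    public static void main(String[] args) {
        System.out.println(new PersonController().handle("Donald", "Creager"));
        // prints <p>Donald Creager</p>
    }
}
```

A framework such as Struts provides much of the controller plumbing (mapping requests to handlers, populating form beans), so the “with Struts” variant mainly changes where this controller code lives.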

(WN) Insert a section about testing and validation at the database level.

(WN) Insert references to master-detail presentation and the common usage of selection lists, noting that views don’t need to contain all of the information, as they will typically be used to present selection lists.

Relational Databases

Relational databases are very frequently used in data processing applications. While this is not new technology by any stretch of the imagination, it is very effective technology. A relational database can be thought of as a collection of tables, and the tables can be related together in order to show information. Let’s take this from the beginning, and it’ll probably make more sense.

When we talked about analyzing our data we briefly discussed an entity was going to that a little further. An entity is one concept it is one something that we might want to keep information about all of the information for that particular something should be stored in one table which represents the entity. For example if the entity is person then we would probably store attributes such as name age maybe birthdate haircolor anything that is information about that single person. Notice I said that single person that’s because each role in the table call person represents one person the table itself may represent hundred 2000s of people with each person being represented by information in one room. One of the interesting concepts that everyone should understand with the relational database is that these Rose have a special column which is referred to as its primary key. A primary key is the special column which uniquely identifies that person from every other person in the person table. As I said there may be many many people with their information stored in this table but if the key is the primary key knowing its value allows me to isolate one person separate from all others.(Writing out) this description lost hang out we need to find some way to make it more straightforward. So it’s taking example I have a person table and it may have many rows in at each role in the person table will contain information for one and only one person. That’s a very important point I need to say that again each row contains information for one person only. Earlier we talked about relational database as we were doing the analysis of our data needs. In the analysis phase we talked about an obstruction called an entity. An entity represents a single thing or a single concept frequently entities can be described using now owns a car a person house there one single thing. 
We can put multiple entities together into a table when we do that by convention that table will have the name of the entity so our table name would be person let’s say when we start that are person table has 10 rows each row represents one individual of a group of 10 the table represents the entire group the individual roll represents one person. Now in order to enable the database to locate the record for a single person we have to have a special column designated in that role. This column is called the primary key. The primary key is a special value which will uniquely identify every person and separate them from every other person that might be in the same table. Let’s imagine for a second that we use the Social Security number as the primary key. Because we know that within our country Social Security numbers are not shared between people it’s a valid value to use as the primary key in a person table. It does come with some risks but those have to do with the character characteristics of that particular date element it’s probably not a good choice but it did illustrate my point so what we need to remember is that a table is a collection of Rose and everyone of those rose contains a different entity but they all contain the same type of entity they all contain in our example a person and that there is a primary key that allows us to separate a person from any other person in that table The important point that we’ve just discuss is the notion of the boundaries of the table what can go in it and what camps and the fact that each row must be uniquely identified by a primary key. 
Each row must be identified by primary key if member if if your primary key is going to be made up of Social Security number each role as to have a different social security number it has to have a unique value underline you need OK so now we have a table and we have its primary key and it contains a variety of other columns all of which have to do with the entity a person and those other columns when you’re designing a referred to as attributes when you implement the table of course they become columns now is when it gets interesting. The value of a relational database is that you can make relations across tables for example if I have a person table I could have a couple table and that would show me the relationship it’s up to Persons I know it’s supposed to be people but I’m sick with me here that exist in the person table but they’re in a relationship so they are a couple in the way we do that is with something called a foreign key. A foreign key is a column in an outside table that points to a column in a primary entity for example we talked about a person table and now I’ve brought up the notion of a couples table the couples table might have a column called father it might have a column called mother strike that go back it might have a column called husband it might have a column called wife The value that I put in those columns will be the primary key from the person table that represents those people. Remember a minute ago I suggested you might use a Social Security number as the primary key so what would you expect to find in the couple table? You’re going to find one row because it represents one entity that being a couple and a column named husband that has the Social Security number of the man who is the husband and another column called wife that contains the Social Security number or the primary key value from the person table this is called a relation that table couple tells us about a relationship of two different people. 
The value of a relational database is that it allows us to recognize that relationship without making a copy of all of the information in the person table. With earlier technologies, it would have been necessary to copy the name, the birth date and the various other columns for the husband and the wife into the couple table. Relational database technology avoids that, so you also avoid the problems of keeping the same information in sync and up-to-date in multiple places. (Writer’s note: insert a section before the relational database discussion that helps the novice understand why this information is important, so they’re willing to stick with it and get through it.)
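The husband and wife example can be sketched in the same hypothetical in-memory style. In this Java sketch, the class and method names are illustrative assumptions and not part of any database API. The couple row stores only the two person keys, and the names are looked up in the person table when they are needed. A change to a person’s name therefore shows up through the couple without anything being copied. The check in `addCouple` imitates a foreign-key constraint by refusing keys that are not in the person table.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical couple row: holds foreign keys only, with no copied person data.
final class CoupleRow {
    final String husbandKey;  // foreign key -> person table primary key
    final String wifeKey;     // foreign key -> person table primary key

    CoupleRow(String husbandKey, String wifeKey) {
        this.husbandKey = husbandKey;
        this.wifeKey = wifeKey;
    }
}

// Hypothetical two-table store illustrating foreign keys.
final class FamilyDatabase {
    // Person table: primary key -> name (other attributes omitted).
    private final Map<String, String> personNames = new HashMap<>();
    // Couple table.
    private final List<CoupleRow> couples = new ArrayList<>();

    // Inserts a person, or replaces the name stored under an existing key.
    void putPerson(String key, String name) {
        personNames.put(key, name);
    }

    // Mimics a foreign-key constraint: both keys must already exist in the person table.
    boolean addCouple(String husbandKey, String wifeKey) {
        if (!personNames.containsKey(husbandKey) || !personNames.containsKey(wifeKey)) {
            return false;
        }
        couples.add(new CoupleRow(husbandKey, wifeKey));
        return true;
    }

    // Resolves the foreign keys back to names at read time, much like a join.
    List<String> describeCouples() {
        List<String> out = new ArrayList<>();
        for (CoupleRow c : couples) {
            out.add(personNames.get(c.husbandKey) + " & " + personNames.get(c.wifeKey));
        }
        return out;
    }
}
```

If you rename a person with `putPerson`, the output of `describeCouples()` changes too, because the couple row never held a copy of the name.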

- Technology

- Java

- J2EE (Servlets, JavaServer Pages)

- MySQL Relational Database

- Eclipse

Steps Along the Journey

Introduced Asynchronous Messaging

- Introduced ActiveMQ

- Inserted a layer between microservice and client

Switched Interfaces

- Switched the internal interface between the REST API Gateway and the Microservice to Java Objects using serialization

Added a SrvcMgr

- Introduced a Service Manager (SrvcMgr) to handle the load balancing of the microservices.

Designated Group ID as Service Type

- Implemented ActiveMQ group IDs by service type.

Switch to Listeners

- Implemented Listener interface for microservices.

The Story of Containers

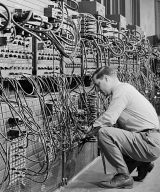

Have you ever heard computer people talk about containers as though the idea were something new and different? Well, that is probably because for them, strangely enough, it is!

Computers

As long as we’ve been using general-purpose computers to process data, a computer has been a physical machine. In the early days, computers filled rooms and required many special things, such as temperature-controlled clean rooms, to function properly.

Now we’ve gotten to the point that they are on a chip that lives in your phone, on your credit card, or on your wrist.

Virtual Machines

Since the turn of the century, we’ve heard more and more about another type of computer: the virtual computer. Virtualization is the process of separating the various components that make up a computer (memory, storage, processors, etc.) so that they become shareable. With virtualization, multiple computers can share a single pool of resources.

On a physical machine, all of the resources are permanently allocated, and that allocation can change only when the machine is upgraded. Virtual machines operate more dynamically and can change their resource allocations as needed. When a virtual machine needs more memory, it simply requests it, uses what it needs, and gives it back when it’s done so that another machine can use it. No visit by an upgrade technician is required!

Virtualization has done some fantastic things for managing large data centers and for provisioning new computers very quickly. However, whether you have a physical or a virtual machine, you still get a single dedicated operating system and everything that comes with it. In most cases, starting one of these machines takes anywhere from a few minutes to a quarter of an hour.

Containers

Characteristics

- Lightweight

- Isolated

- Potentially Portable

Opportunities

- Create a Reference Implementation of each Tech Stack

- Develop a matrix for qualifying enterprise applications for:

- Containers

- Cloud Deployment

- CD/CI could be a Pre-requisite

Comparative Characteristics

| Pets | Cattle |
|---|---|
| Isolated | Isolated |
| Heavy Client | Light Client |
| Hypervisor | Either Hypervisor OR Bare Metal |
| Slow Startup (Minutes) | Quick Startup (Seconds) |
| Composite Image | Simple Image |
| Compound OS Images | Single OS |
| Managed | Versioned |
| Documentation Optional | Explicitly Required Documentation |
| Configured by Admins | Configured by DevOps |

Business Reporting

Hosted Private Cloud

Facilitating Migration

Lessons Learned

Using Temporary Queues was a Mistake

- Sought a best practice for the request/response pattern in an asynchronous environment and found the temporary response queue solution. MISTAKE!

Expect Refactoring

- Most things will not be right on the first try, expect refactoring

- Plan time for refactoring in your schedules

- Remember that refactoring requires re-testing

Appendix A: Requirements

Data Requirements

- The ability to store information about:

- person

- family

- locations

- dates

- relationships

- Generations

- parents

- siblings

- birth (biological, social adopted, foster, assimilated)

- media

- relate that media to:

- people

- events

- families

- events

- relate events to:

- people

- media

- families

Input Requirements

- Data

- Import data

- GEDCOM

- JSON

- CSV

Output Requirements

- Data

- Generate a Family Tree data structure from a designated entry

- Ancestors

- Descendants

- Export data

- GEDCOM

- JSON

- CSV

- Hardcopy

- Generate Family History Book
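One way to build a Family Tree data structure from a designated entry is to walk parent links. The following Java sketch rests on assumptions that the requirements do not state: each person is identified by a string key, the only stored relationship is child to parent, and the class and method names are hypothetical. Ancestors are found by following parent links upward, and descendants by following the reverse links downward. Both walks are breadth-first and use a visited set, so nobody is listed twice.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical family graph: child -> parents, plus the reverse index parent -> children.
final class FamilyGraph {
    private final Map<String, Set<String>> parentsOf = new HashMap<>();
    private final Map<String, Set<String>> childrenOf = new HashMap<>();

    // Records one parent link in both directions.
    void addParent(String child, String parent) {
        parentsOf.computeIfAbsent(child, k -> new LinkedHashSet<>()).add(parent);
        childrenOf.computeIfAbsent(parent, k -> new LinkedHashSet<>()).add(child);
    }

    // Every ancestor of the designated entry.
    Set<String> ancestors(String entry) {
        return walk(entry, parentsOf);
    }

    // Every descendant of the designated entry.
    Set<String> descendants(String entry) {
        return walk(entry, childrenOf);
    }

    // Breadth-first traversal along the given links, skipping people already seen.
    private static Set<String> walk(String start, Map<String, Set<String>> links) {
        Set<String> seen = new LinkedHashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        queue.add(start);
        while (!queue.isEmpty()) {
            String current = queue.poll();
            for (String next : links.getOrDefault(current, Set.of())) {
                if (seen.add(next)) {
                    queue.add(next);
                }
            }
        }
        return seen;
    }
}
```

The two indexes are kept so that both directions of the tree can be walked without scanning every person.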

Process Requirements

- Perform CRUD17 processing on all stored data

- Maintain and access data exclusively via REST API based services
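The requirement does not say how CRUD operations map onto the REST API. A widely used convention, assumed here, maps Create to POST, Read to GET, Update to PUT and Delete to DELETE. A small Java enum can record that mapping. The enum name `CrudOperation` is hypothetical.

```java
import java.util.Locale;

// Hypothetical mapping of CRUD operations to conventional HTTP methods (assumed convention).
enum CrudOperation {
    CREATE("POST"),
    READ("GET"),
    UPDATE("PUT"),
    DELETE("DELETE");

    final String httpMethod;

    CrudOperation(String httpMethod) {
        this.httpMethod = httpMethod;
    }

    // Reverse lookup: which CRUD operation does an incoming HTTP method request?
    static CrudOperation fromHttpMethod(String method) {
        String normalized = method.toUpperCase(Locale.ROOT);
        for (CrudOperation op : values()) {
            if (op.httpMethod.equals(normalized)) {
                return op;
            }
        }
        throw new IllegalArgumentException("No CRUD operation for HTTP method " + method);
    }
}
```

Many APIs also use PATCH for partial updates. The sketch leaves it out so that the mapping stays one-to-one.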

Operational Requirements

- Dynamically resizable

- Self-healing

- Auto-configuration based upon load

Technical Requirements

- Cloud Deployable

- Deployable on multiple cloud service providers

- Easily scalable

Security Requirements

- Unauthenticated users may Retrieve data.

- Only authenticated users may Create, Update, and/or Delete data.
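Taken together, the two security requirements reduce to a single rule: Retrieve is open to everyone, while Create, Update and Delete require an authenticated user. Below is a minimal Java sketch of that check. The enum and method names are hypothetical, and the sketch does not cover how a user becomes authenticated.

```java
// Hypothetical operations, named after the security requirements.
enum DataOperation { CREATE, RETRIEVE, UPDATE, DELETE }

final class AccessPolicy {
    private AccessPolicy() {}

    // Unauthenticated users may only Retrieve; every other operation needs authentication.
    static boolean isAllowed(DataOperation op, boolean authenticated) {
        return op == DataOperation.RETRIEVE || authenticated;
    }
}
```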

Introduction

The Coca-Cola Company’s (TCCC) code repository for its SalesForce-related code components has been relocated from CenturyLink to Amazon Web Services (AWS). This document provides the environment-specific information needed to use and administer this new instance of the SalesForce-related code.

Audience

The audience for this document is presumed to already possess a working knowledge of both AWS and Subversion. Such individuals include programmers, integrators, technical managers, and administrators.

Configuration

The SalesForce code components are segregated into five different repositories. These repositories are designated for the use of five different projects:

<table>
<tr><th>Project</th><th>System Identifier</th></tr>
<tr><td>Common Bottler System</td><td>CBS</td></tr>
<tr><td>Coca-Cola North America</td><td>CCNA</td></tr>
<tr><td>Coca-Cola North America FS</td><td>CCNAFS</td></tr>
<tr><td>Coca-Cola Refreshments</td><td>CCR</td></tr>
<tr><td><i>&lt;Fill in the Project Name&gt;</i></td><td>HERE</td></tr>
</table>

These separations reflect the management routines of the associated code bases, as well as the separation of access rights along project group boundaries.

Access

## AWS Console Access

Accessing the AWS Console is as easy as:

1. Enter the TCCC/AWS Portal

2. Login to AWS

3. Select a Role

Note: If this procedure is not familiar to you, please refer to Appendix A of this document for a more detailed description.

Via the Web or SVN Clients

Web access is provided by the Apache Httpd web server, which is configured with the dav_svn module. For more detailed information concerning this component kindly refer to the Subversion documentation.

The web access is available to appropriately authorized users via the base URL of https://prod-2-01-elasticl-1aegzkpnlqtuu-174822828.us-east-1.elb.amazonaws.com.

Security Note

The preexisting set of authorized user ids has been re-established and issued freshly generated passwords.

Details follow in subsequent sections of this document.

To utilize a specific repository, append the associated system identifier to the base URL as follows:

https://prod-2-01-elasticl-1aegzkpnlqtuu-174822828.us-east-1.elb.amazonaws.com/ccr
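The rule of appending the system identifier to the base URL can be written in a few lines. This Java sketch assumes that the identifier is appended in lowercase. That assumption comes from the `/ccr` example and the lowercase repository links under `/san/svn`. The class and method names are hypothetical.

```java
import java.net.URI;
import java.util.Locale;
import java.util.Set;

// Hypothetical helper that builds a repository URL from a system identifier.
final class RepoUrls {
    // Base URL as given in this document.
    static final String BASE_URL =
        "https://prod-2-01-elasticl-1aegzkpnlqtuu-174822828.us-east-1.elb.amazonaws.com";

    // System identifiers from the Configuration table.
    static final Set<String> IDENTIFIERS = Set.of("CBS", "CCNA", "CCNAFS", "CCR", "HERE");

    private RepoUrls() {}

    // Appends the identifier, lowercased (an assumption, see above), to the base URL.
    static URI repositoryUrl(String systemIdentifier) {
        String id = systemIdentifier.toUpperCase(Locale.ROOT);
        if (!IDENTIFIERS.contains(id)) {
            throw new IllegalArgumentException("Unknown system identifier: " + systemIdentifier);
        }
        return URI.create(BASE_URL + "/" + id.toLowerCase(Locale.ROOT));
    }
}
```

With this helper, `RepoUrls.repositoryUrl("CCR")` yields the `/ccr` URL shown above. An unknown identifier fails fast instead of producing a URL that does not exist.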

Technical Note

The web server is accessed via a load balancer listening on the HTTPS protocol. This load balancer has been configured using a “self-signed” certificate. Until this certificate is replaced with a “domain specific certificate”, as described later, browsers will issue warnings that the connection is not secure.

Via SSH Clients to the Web Server

All administrative access to the AWS web server must be directed through the assigned AWS bastion server.

The process for making an administrative connection is:

1. Connect to the TCCC Network

2. Connect to the Bastion server

3. Connect to the Target server.

Note:

If this procedure is not familiar to you, please refer to Appendix B of this document for a more detailed description.

AWS Configuration

## Computational Resources

As shown above, the SalesForce repository is supported by four small EC2 instances.

<table>
<tr><th>Name</th><th>Functional Description</th></tr>
<tr><td>bastion</td><td><strong>Bastion</strong> server, used to provide Secure Shell (SSH) access to the AWS SalesForce environments. All SSH access must be through this server.</td></tr>
<tr><td>nas01</td><td><strong>Network Accessible Storage (NAS)</strong> server, used to provide persistent, independently connectable disk storage for all AWS SalesForce environments.</td></tr>
<tr><td>nat</td><td><strong>Network Address Translation (NAT)</strong> server, used to provide safe, secure, external network access to the AWS SalesForce environment.</td></tr>
<tr><td>Web</td><td><strong>Web</strong> server, running the Apache Httpd web server with the dav_svn module to provide web and SVN client access to the SalesForce repositories. Administrative SSH access to this server must go through the bastion server.</td></tr>
</table>

Storage Resources

<pre>
Filesystem                1K-blocks    Used Available Use% Mounted on
/dev/xvda1                  8123812 1892724   6130840  24% /
devtmpfs                    1016808      60   1016748   1% /dev
tmpfs                       1025820       0   1025820   0% /dev/shm
10.0.1.177:/pool1/volume1  28075008 1530368  26544640   6% /san

/san
├── bin
│   ├── checkUrl
│   ├── file2svn
│   ├── initsvn
│   ├── installsvn
│   ├── loadNewUsers
│   ├── loadSalesForce
│   ├── loadUser
│   ├── migrate
│   ├── s32svn
│   ├── s3cleanup
│   ├── svn2git
│   └── svn2s3
├── etc
│   ├── 10-subversion.conf
│   ├── backup
│   │   ├── 10-subversion.conf
│   │   ├── subversion.conf
│   │   ├── svn-acl
│   │   ├── svn-auth-file
│   │   └── svn.conf
│   ├── subversion.01.conf
│   ├── subversion.conf
│   ├── svn-acl
│   ├── svn-auth-file
│   └── svn.conf
└── svn
    ├── 007428
    │   ├── conf
    │   ├── db
    │   ├── format
    │   ├── hooks
    │   ├── locks
    │   └── README.txt
    ├── 008610
    │   ├── conf
    │   ├── db
    │   ├── format
    │   ├── hooks
    │   ├── locks
    │   └── README.txt
    ├── 008619
    │   ├── conf
    │   ├── db
    │   ├── format
    │   ├── hooks
    │   ├── locks
    │   └── README.txt
    ├── 008621
    │   ├── conf
    │   ├── db
    │   ├── format
    │   ├── hooks
    │   ├── locks
    │   └── README.txt
    ├── cbs -> /san/svn/008621
    ├── ccna -> /san/svn/008610
    ├── ccnafs -> /san/svn/008619
    ├── ccr -> /san/svn/007428
    └── here -> /san/svn/008620
</pre>

Next Steps

- Simplify the access URL

- Reserve a Domain Name

- Create a DNS Entry

- Obtain a Certificate reflecting this Domain name

- Update the new certificate into the Load Balancer

Administration

Password Management

All users of the previous CenturyLink environment have been assigned access to the AWS based repositories. Each user account has the same user id as before, but a newly generated, individually assigned password has been created for each user. A list of these assignments is available to project managers and team leads for their technicians.

To request the assigned passwords.

Email a request for your team’s passwords. Be sure to include a list of all of your team’s user ids.

To change a specific user’s password.

Sign on to the web server and issue the following command:

htpasswd -bm /san/etc/svn-auth-file <userId> '<new password>'

To use the root account.

To act as root temporarily, use the sudo command, which has been configured to support most common commands.

If you need to log in as root:

1. Log on as ec2-user

2. sudo su -

Procedural Samples

## Retrieve Repository Directory

wget --http-user=****** --http-password=******** --no-check-certificate https://prod-2-01-elasticl-1aegzkpnlqtuu-174822828.us-east-1.elb.amazonaws.com/cbs

Check out a working copy

svn checkout https://prod-2-01-elasticl-1aegzkpnlqtuu-174822828.us-east-1.elb.amazonaws.com/cbs /san/svn/cbs/work

<Source code downloads> ...

- - -

# Appendix A

## AWS Console Access Procedure

### Enter the TCCC/AWS Portal

Entry to the AWS Console for TCCC work must be through the TCCC/AWS Access Portal.

### Login to AWS

After gaining initial access you must perform a user sign-in.

This very familiar dialog box can be deceptive. In this dialog box you must use your regular TCCC userid and password.

Access Note

If you have been assigned an AWS Administrator role for any reason, you will be presented with a second dialog box that looks very much like this one. However, pay particular attention to the labels on the two input fields. This dialog box requires different input and can be distinguished only by these labels.

If you are presented with this Administrative Login dialog box, you must provide your standard TCCC user id along with a freshly generated RSA Passcode. You obtain this code with the same procedure you may already use when you establish a VPN connection to the TCCC Network.

### Select a Role

After completing the login steps you may encounter an additional selection screen.

If you have multiple AWS Roles assigned, you will have to select the SalesForce Administrator role, as illustrated, to work with the SalesForce Repositories configuration.

- - -

# Appendix B

## Administrative Connection Procedure to AWS Servers

All administrative access to the AWS web server must be directed through the assigned AWS bastion server. Most readers are expected to use PuTTY as their SSH client. Accessing AWS with PuTTY requires a specific setup. For more details on this process, kindly refer to Connecting to Your Linux Instance from Windows Using PuTTY in the AWS documentation library.

Additional Administrative Setup

In addition to the steps documented above, you will need to securely upload a copy of the root private key to the Bastion server. This can be done from a Windows workstation with the PuTTY Secure Copy client (pscp) as follows:

pscp.exe -i 011074_bastion.ppk "011074_root.pem" ec2-user@<bastion server ip address>:/home/ec2-user

Access Note

1. Due to IP address whitelisting restrictions, you must be connected to the TCCC network to access the bastion server.

2. The host you must connect to is the Bastion server.

3. The Bastion server is running the Linux operating system and the user name is ec2-user.

Web Server and/or Repository Administration

- Connect to the Bastion server using PuTTY as described above.

- Connect to the Web server from the Bastion server using ssh.

ssh -i ./011074_root.pem ec2-user@<web server ip address>

# Appendix C

## Technical Notes on Restoring the SAN

### References

- Restoring an Amazon Volume

- Creating a SAN volume using CLI

Procedure

- Log on to the AWS Console using the Salesforce Account

- Locate the most current Snapshots

- Enter the EC2 Service

- From the navigation pane, select Elastic Block Store > Snapshots

- Identify and capture the two most current Snapshots

- There are two volumes that are backed up (RAID 1)

- You must work with both volumes

- Restore the SAN volumes

- Enter the CloudFormation service

- Select the prod-2-011074-lampstack

- Select the Update Stack action

- Select NEXT using the Current template

- Provide the Snapshot identifiers

- NAS EBS 1 Snapshotid = <snap-0c6c9bc2fcff2922c>

- NAS EBS 2 Snapshotid = <snap-0f35865693505ad21>

Note: These values are variables and will change each time.

- Click NEXT on this and the following screen

- Scroll to the bottom and click on the I acknowledge that ... option

- Click Update to restore the SAN storage

- Next Steps

- Research the pre-conditions

- Can we do a hot restore?

- Do we need to stop the web instance first?

- Do we need to stop the SAN instance first?

Notes

1CRUD is an acronym for Create, Read, Update, and Delete, which are the four basic operations on persistent storage.↩

2CRUD is an acronym for Create, Read, Update, and Delete, which are the four basic operations on persistent storage.↩

3The systems development life cycle (SDLC) is a conceptual model that describes the stages involved in an information system development project, Margaret Rouse↩

4Short for Java 2 Platform Enterprise Edition. J2EE is a platform-independent, Java-centric environment for developing, building and deploying Web-based enterprise applications online. The J2EE platform consists of a set of services, APIs, and protocols that provide the functionality for developing multi-tiered, Web-based applications.↩

5An integrated development environment (IDE) is a software application that provides comprehensive facilities to computer programmers for software development ↩

6In software, JAR (Java Archive) is a package file format typically used to aggregate many Java class files and associated metadata and resources (text, images, etc.) into one file to distribute application software or libraries on the Java platform.↩

7In software engineering, a WAR file, or Web application ARchive, is a JAR file used to distribute a collection of JavaServer Pages, Java Servlets, Java classes, XML files, tag libraries, static web pages (HTML and related files) and other resources that together constitute a web application.↩

8Open source software is software that can be freely used, changed, and shared (in modified or unmodified form) by anyone.↩

9[Relational Database Design Clearly Explained (The Morgan Kaufmann Series in Data Management Systems), Jan L. Harrington, Academic Press, 2002](http://www.amazon.com/Relational-Database-Explained-Kaufmann-Management/dp/1558608206 "Amazon Book")↩

10In computing, Representational State Transfer (REST) is a software architecture style for building scalable web services. REST gives a coordinated set of constraints to the design of components in a distributed hypermedia system that can lead to a higher-performing and more maintainable architecture. Architectural Styles and the Design of Network-based Software Architectures, Chapter 5: Representational State Transfer (REST), Fielding, Roy Thomas, Ph.D., 2000, University of California, Irvine.↩

11CRUD is an acronym for Create, Read, Update, and Delete, which are the four basic operations on persistent storage.↩

12Short for Java 2 Platform Enterprise Edition. J2EE is a platform-independent, Java-centric environment for developing, building and deploying Web-based enterprise applications online. The J2EE platform consists of a set of services, APIs, and protocols that provide the functionality for developing multi-tiered, Web-based applications.↩

13Open source software is software that can be freely used, changed, and shared (in modified or unmodified form) by anyone.↩

14[Relational Database Design Clearly Explained (The Morgan Kaufmann Series in Data Management Systems), Jan L. Harrington, Academic Press, 2002](http://www.amazon.com/Relational-Database-Explained-Kaufmann-Management/dp/1558608206 "Amazon Book")↩

15[Relational Database Design Clearly Explained (The Morgan Kaufmann Series in Data Management Systems), Jan L. Harrington, Academic Press, 2002](http://www.amazon.com/Relational-Database-Explained-Kaufmann-Management/dp/1558608206 "Amazon Book")↩

16[Relational Database Design Clearly Explained (The Morgan Kaufmann Series in Data Management Systems), Jan L. Harrington, Academic Press, 2002](http://www.amazon.com/Relational-Database-Explained-Kaufmann-Management/dp/1558608206 "Amazon Book")↩

17CRUD is an acronym for Create, Read, Update, and Delete, which are the four basic operations on persistent storage.↩