Table of Contents

- Front Matter

- Part 1: Just the basics

- Motivation – the first step to learning TDD

- The essential tools

- It’s not (only) a test

- Statement-first programming

- Practicing what we have already learned

- Sorting out the bits

- How to start?

- How is TDD about analysis and what does “GIVEN-WHEN-THEN” mean?

- What is the scope of a unit-level Statement in TDD?

- Developing a TDD style and Constrained Non-Determinism

- Specifying functional boundaries and conditions

- Driving the implementation from Specification

- Part 2: Object-Oriented World

- On Object Composability

- Telling, not asking

- The need for mock objects

- Why do we need composability?

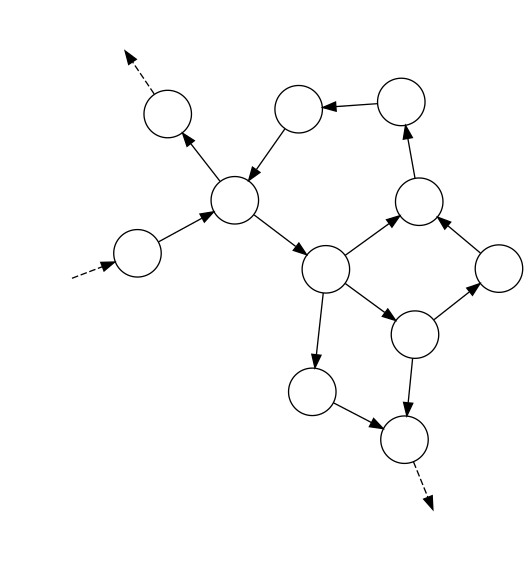

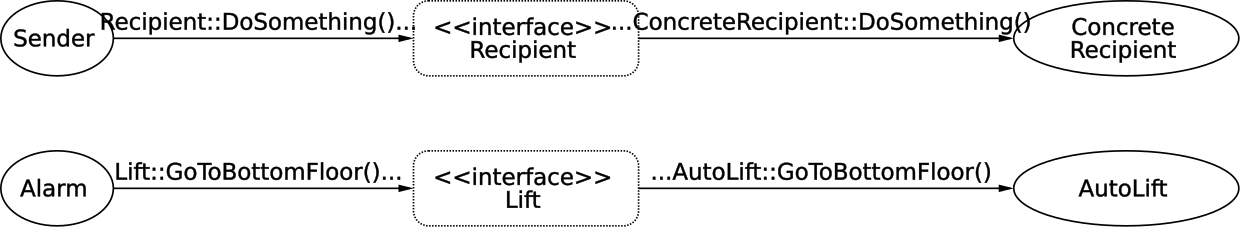

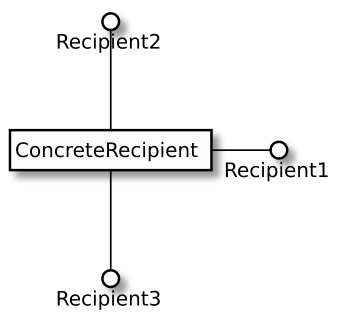

- Web, messages and protocols

- Composing a web of objects

- When are objects composed?

- How does a sender obtain a reference to a recipient (i.e. how connections are made)?

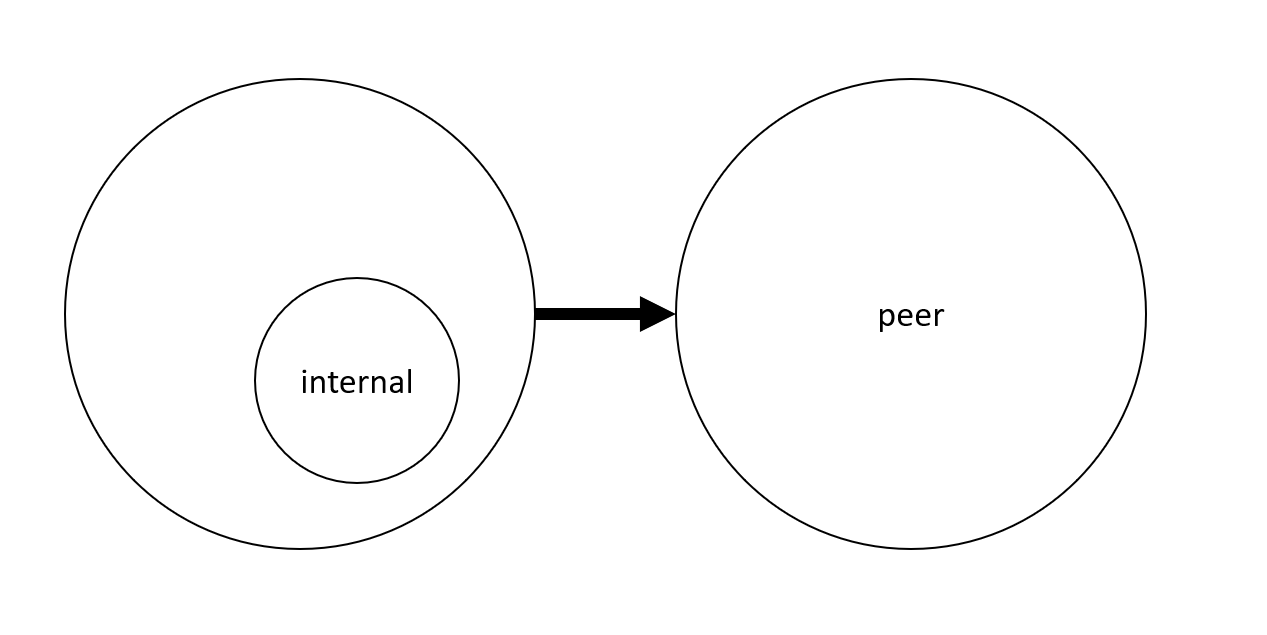

- Where are objects composed?

- Interfaces

- Protocols

- Protocols exist

- Protocol stability

- Craft messages to reflect the sender’s intention

- Model interactions after the problem domain

- Message recipients should be told what to do, instead of being asked for information

- Most of the getters should be removed, return values should be avoided

- Protocols should be small and abstract

- Summary

- Classes

- Object Composition as a Language

- Value Objects

- Value object anatomy

- Aspects of value objects design

- Part 3: TDD in Object-Oriented World

- Mock Objects as a testing tool

- Test-first using mock objects

- Test-driving at the input boundary

- Test-driving at the input boundary – a retrospective

- Test-driving object creation

- Test-driving object creation – a retrospective

- Test-driving application logic

- Test-driving value objects

- Reaching the web of objects boundaries

- What’s inside the object?

- Design smells visible in the Specification

- THIS IS ALL I HAVE FOR NOW. WHAT FOLLOWS IS RAW, UNORDERED MATERIAL THAT’S NOT YET READY TO BE CONSUMED AS PART OF THIS TUTORIAL

- Mock objects as a design tool

- Guidance of test smells

- Revisiting topics from chapter 1

- Maintainable mock-based Statements

- Refactoring mock code

- Part 4: Application architecture

- Part 5: TDD on application architecture level

- Notes

Front Matter

Dedications

Ad Deum qui laetificat iuventutem meam.

To my beloved wife Monika and our lovely son Daniel.

Thanks!

I would like to thank the following people (listed alphabetically by name) for valuable feedback, suggestions, typo fixes and other contributions:

- Brad Appleton

- Borysław Bobulski

- Chris Kucharski

- Daniel Dec

- Daniel Żołopa (cover image)

- Donghyun Lee

- Łukasz Maternia

- Marek Radecki

- Martin Moene

- Michael Whelan

- Polina Kravchenko

- Rafał Bigaj

- Reuven Yagel

- Rémi Goyard

- Robert Pająk

- Wiktor Żołnowski

This book is not original at all. It presents various topics that others invented and I just picked up. Thus, I would also like to thank my mentors and authorities on test-driven development and object-oriented design that I gained most of my knowledge from (listed alphabetically by name):

- Amir Kolsky

- Dan North

- Emily Bache

- Ken Pugh

- Kent Beck

- Mark Seemann

- Martin Fowler

- Nat Pryce

- Philip Schwarz

- Robert C. Martin

- Scott Bain

- Steve Freeman

About code examples

Notes for C# users

The language of choice for the code examples is C#. However, I made some exceptions to the typical C# coding conventions.

Dropping “I” from interface names

I am not a big fan of using ISomething as an interface name, so I decided to drop the I even though most C# developers expect to see it. I hope you can forgive me for this one.

Idiomatic C#

Most of the code in this book is not idiomatic C#. I tried to avoid properties, events, and most modern features. My goal is to allow users of other languages (especially Java) to benefit from the book.

Using underscore in field names

Some people like it, some do not. I decided to stick to the convention of putting an underscore (_) before a class field name.

Notes for Java users

The language of choice for the code examples is C#. That said, I wanted the book to be as technology-agnostic as possible, to allow especially Java programmers to benefit from it. I tried using a minimum number of C#-specific features and in several places even made remarks targeted at Java users to make it easier for them. Still, there are some things I could not avoid. That’s why I wrote up a list containing several of the differences between Java and C# that Java users could benefit from knowing when reading the book.

Naming conventions

Most languages have their default naming conventions. For example, in Java, class names are written in Pascal case (e.g. UserAccount), methods and fields are written in camel case (e.g. payTaxes), and constants/read-only fields are typically written in underscored upper case (e.g. CONNECTED_NODES).

C# uses pascal case for both classes and methods (e.g. UserAccount, PayTaxes, ConnectedNodes). For fields, there are several naming conventions. I chose the one starting with underscore (e.g. _myDependency). There are other minor differences, but these are the ones you are going to encounter quite often.

var keyword

For the sake of brevity, I chose to use the var keyword in the examples. This keyword tells the compiler to infer the type of a variable from the expression that initializes it, e.g.

    var x = 123; // x is inferred as int

Of course, this is not dynamic typing by any means – everything is resolved at compile time.

One more thing – the var keyword can only be used when the type can be inferred, so occasionally you will see me declaring types explicitly, as in:

    List<string> list = null; // the type cannot be inferred from null

string as keyword

C# has a String type, similar to Java. However, it also allows writing this type name as a keyword, i.e. string instead of String. This is only syntactic sugar, and the C# community uses it by default.

Attributes instead of annotations

In C#, attributes are used for the same purpose as annotations in Java. So, whenever you see:

    [Whatever]
    public void DoSomething()

think:

    @Whatever
    public void doSomething()

readonly and const instead of final

Where Java uses final for constants (together with static) and read-only fields, C# uses two keywords: const and readonly. Without going into details, whenever you see something like:

    public class User
    {
        // a constant with literal:
        private const int DefaultAge = 15;

        // a "constant" object:
        private static readonly TimeSpan DefaultSessionTime
            = TimeSpan.FromDays(2);

        // a read-only instance field:
        private readonly List<int> _marks = new List<int>();
    }

think:

    public class User {
        // a constant with literal:
        private static final int DEFAULT_AGE = 15;

        // a "constant" object:
        private static final Duration
            DEFAULT_SESSION_TIME = Duration.ofDays(2);

        // a read-only instance field:
        private final List<Integer> marks = new ArrayList<>();
    }

A List<T>

If you are a Java user, note that in C#, List<T> is not an interface, but a concrete class. It is typically used where you would use an ArrayList.

Generics

One of the biggest differences between Java and C# is how they treat generics. First of all, C# allows using primitive types as generic type arguments, so you can write List<int> in C# where in Java you have to write List<Integer>.

The other difference is that in C# there is no type erasure as there is in Java. C# code retains all the generic information at runtime. This impacts how most generic APIs are declared and used in C#.
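To make the lack of type erasure a bit more tangible, here is a minimal sketch. The GenericsDemo class and its methods are hypothetical names made up for this illustration; only typeof and List<T> come from the standard library. It shows that a generic method can ask about its type argument at runtime, so it needs no parameter that carries the type.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical demo class, invented for this illustration only.
public static class GenericsDemo
{
    // The type argument T is still known at runtime,
    // so the method can inspect it via typeof(T).
    public static List<T> CreateListOf<T>()
    {
        Console.WriteLine("Creating a list of " + typeof(T).Name);
        return new List<T>();
    }

    public static void Main()
    {
        List<int> ints = CreateListOf<int>();          // prints "Creating a list of Int32"
        List<string> strings = CreateListOf<string>(); // prints "Creating a list of String"

        // List<int> and List<string> are distinct types at runtime:
        Console.WriteLine(ints.GetType() == strings.GetType()); // prints "False"
    }
}
```

In Java, the equivalent comparison of the two lists' runtime classes would yield true, because both are plain ArrayList objects once the generic information is erased.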

A generic class definition and creation in Java and C# are roughly the same. There is, however, a difference on a method level. A generic method in Java is typically written as:

    public <T> List<T> createArrayOf(Class<T> type) {
        ...
    }

and called like this:

    List<Integer> ints = createArrayOf(Integer.class);

whereas in C# the same method would be defined as:

    public List<T> CreateArrayOf<T>()
    {
        ...
    }

and called as such:

    List<int> ints = CreateArrayOf<int>();

These differences are visible in the design of the library that I use throughout this book for generating test data. While in the C# version, one generates test data by writing:

    var data = Any.Instance<MyData>();

the Java version of the library is used like this:

    MyData data = Any.instanceOf(MyData.class);

Part 1: Just the basics

Without going much into advanced aspects, such as applying TDD to object-oriented systems where multiple objects collaborate (which is a topic of part 2), I introduce the basic TDD philosophy and practices. In terms of design, most of the examples will be about methods of a single object being exercised. The goal is to focus on the core of TDD before going into its specific applications and to slowly introduce some concepts in an easy to grasp manner.

After reading part 1, you will be able to effectively develop classes that have no dependencies on other classes (and on operating system resources) using TDD.

Motivation – the first step to learning TDD

I’m writing this book because I’m an enthusiast of Test-Driven Development (TDD). I believe that TDD is a major improvement over other software development methodologies that I have used to deliver quality software. I also believe that this is true not only for me, but for many other software developers. This raises the question: why don’t more people learn and use TDD as their software delivery method of choice? In my professional life, I haven’t seen the adoption rate to be high enough to justify the claim that TDD is now mainstream.

I have to respect you for deciding to pick up a book, rather than building your understanding of TDD on the foundation of urban legends and your imagination. I am honored and happy that you chose this one, no matter if this is your first book on TDD or one of many you have opened up in your learning endeavors. As much as I hope you will read this book from cover to cover, I am aware that this doesn’t always happen. That makes me want to ask you an important question that may help you decide whether you want to read on: why do you want to learn TDD?

By questioning your motivation, I’m not trying to discourage you from reading this book. Rather, I’d like you to reconsider the goal you want to achieve by reading it. Over time, I have noticed that some of us (myself included) may think we need to learn something (as opposed to wanting to learn something) for various reasons, such as getting a promotion at work, gaining a certificate, adding something to our CV, or just “staying up to date” with recent hypes. Unfortunately, my observation is that Test-Driven Development tends to fall into this category for many people. Such motivation may be difficult to sustain over the long term.

Another source of motivation may be imagining TDD as something it is not. Some of us may only have a vague knowledge of what the real costs and benefits of TDD are. Knowing that TDD is valued and praised by others, we may conclude that it has to be good for us as well. We may have a vague understanding of the reasons, such as “the code will be more tested” for example. As we don’t know the real “why” of TDD, we may make up some reasons to practice test-first development, like “to ensure tests are written for everything”. Don’t get me wrong, these statements might be partially true, but they miss a lot of the essence of TDD. If TDD does not bring the benefits we imagine it might bring, disappointment may creep in. I have heard such disappointed practitioners saying “I don’t need TDD, because I need tests that give me confidence on a broader scope” or “Why do I need unit tests1 when I already have integration tests, smoke tests, sanity tests, exploration tests, etc…?” Many times, I have seen TDD abandoned before it is even understood.

Is learning TDD a high priority for you? Are you determined to try it out and learn it? If you’re not, hey, I heard the new series of Game of Thrones is on TV, why don’t you check it out instead? Okay, I’m just teasing, but as some say, TDD is “easy to learn, hard to master”2, so without some grit to move on, it will be difficult. Especially since I plan to introduce the content slowly and gradually so that you can get a better explanation of some of the practices and techniques.

What TDD feels like

My brother and I liked to play video games in our childhood – one of the most memorable being Tekken 3 – a Japanese tournament beat ’em up for the Sony PlayStation. Beating the game with all the warriors and unlocking all hidden bonuses, mini-games, etc. took about a day. Some could say the game had nothing more to offer after that. Why is it then that we spent more than a year on it?

It is because each fighter in the game had a lot of combos, kicks, and punches that could be mixed in a variety of ways. Some of them were only usable in certain situations, others were something I could throw at my opponent almost anytime without a big risk of being exposed to counterattacks. I could side-step to evade an enemy’s attacks and, most of all, I could kick another fighter up in the air where they could not block my attacks and I was able to land some nice attacks on them before they fell. These in-the-air techniques were called “juggles”. Some magazines published lists of new juggles each month and the hype stayed in the gaming community for well over a year.

Yes, Tekken was easy to learn – I could put one hour into training the core moves of a character and then be able to “use” this character, but I knew that what would make me a great fighter was the experience and knowledge of which techniques were risky and which were not, which ones could be used in which situations, which ones, if used one after another, gave the opponent little chance to counterattack, etc. No wonder that soon many tournaments sprang up, where players could clash for glory, fame, and rewards. Even today, you can watch some of those old matches on YouTube.

TDD is like Tekken. You probably heard the mantra “red-green-refactor” or the general advice “write your test first, then the code”, maybe you even did some experiments on your own where you were trying to implement a bubble-sort algorithm or other simple stuff by starting with a test. But that is all like practicing Tekken by trying out each move on its own on a dummy opponent, without the context of real-world issues that make the fight challenging. And while I think such exercises are very useful (in fact, I do a lot of them), I find an immense benefit in understanding the bigger picture of real-world TDD usage as well.

Some people I talk to about TDD sum up what I say to them as, “This is demotivating – there are so many things I have to watch out for, that it makes me never want to start!”. Easy, don’t panic – remember the first time you tried to ride a bike – you might have been far back then from knowing traffic regulations and following road signs, but that didn’t keep you away, did it?

I find TDD very exciting and it makes me excited about writing code as well. Some guys of my age already think they know all about coding, are bored with it and cannot wait until they move to management or requirements or business analysis, but hey! I have a new set of techniques that makes my coding career challenging again! And it is a skill that I can apply to many different technologies and languages, making me a better developer overall! Isn’t that something worth aiming for?

Let’s get it started!

In this chapter, I tried to provoke you to rethink your attitude and motivation. If you are still determined to learn TDD with me by reading this book, which I hope you are, then let’s get to work!

The essential tools

Ever watched Karate Kid, either the old version or the new one? The thing they have in common is that when the “kid” starts learning karate (or kung-fu) from his master, he is given a basic, repetitive task (like taking off a jacket and putting it on again), not knowing yet where it would lead him. Or look at the first Rocky film (yeah, the one starring Sylvester Stallone), where Rocky chases a chicken to train agility.

When I first tried to learn how to play guitar, I found two pieces of advice on the web: the first was to start by mastering a single, difficult song. The second was to play with a single string, learn how to make it sound in different ways and try to play some melodies by ear just with this one string. Do I have to tell you that the second piece of advice worked better?

Honestly, I could dive right into the core techniques of TDD, but I feel this would be like putting you in a ring with a demanding opponent – you would most probably be discouraged before gaining the necessary skills. So, instead of explaining how to win a race, in this chapter we will take a look at what shiny cars we will be driving.

In other words, I will give you a brief tour of the three tools we will use throughout this book.

In this chapter, I will oversimplify some things just to get you up and running without getting into the philosophy of TDD yet (think: physics lessons in primary school). Don’t worry about it :-), I will make up for it in the coming chapters!

Test framework

The first tool we’ll use is a test framework. A test framework allows us to specify and execute our tests.

Let’s assume for the sake of this introduction that we have an application that accepts two numbers from the command line, multiplies them and prints the result on the console. The code is pretty straightforward:

    public static void Main(string[] args)
    {
        try
        {
            int firstNumber = Int32.Parse(args[0]);
            int secondNumber = Int32.Parse(args[1]);

            var result =
                new Multiplication(firstNumber, secondNumber).Perform();

            Console.WriteLine("Result is: " + result);
        }
        catch(Exception e)
        {
            Console.WriteLine("Multiplication failed because of: " + e);
        }
    }

Now, let’s assume we want to check whether this application produces correct results. The most obvious way would be to invoke it from the command line manually with some exemplary arguments, then check the output to the console and compare it with what we expected to see. Such a testing session could look like this:

    C:\MultiplicationApp\MultiplicationApp.exe 3 7
    Result is: 21
    C:\MultiplicationApp\

As you can see, our application produces a result of 21 for the multiplication of 3 by 7. This is correct, so we assume the application has passed the test.

Now, what if the application also performed addition, subtraction, division, calculus, etc.? How many times would we have to invoke the application manually to make sure every operation works correctly? Wouldn’t that be time-consuming? But wait, we are programmers, right? So we can write programs to do the testing for us! For example, here is the source code of a program that uses the Multiplication class, but in a slightly different way than the original application:

    public static void Main(string[] args)
    {
        var multiplication = new Multiplication(3,7);

        var result = multiplication.Perform();

        if(result != 21)
        {
            throw new Exception("Failed! Expected: 21 but was: " + result);
        }
    }

It looks simple, doesn’t it? Now, let’s use this code as a basis to build a very primitive test framework, just to show the pieces that such frameworks consist of. As a step in that direction, we can extract the verification of the result into a reusable method – after all, we will be adding division in a second, remember? So here goes:

    public static void Main(string[] args)
    {
        var multiplication = new Multiplication(3,7);

        var result = multiplication.Perform();

        AssertTwoIntegersAreEqual(expected: 21, actual: result);
    }

    //extracted code:
    public static void AssertTwoIntegersAreEqual(
        int expected, int actual)
    {
        if(actual != expected)
        {
            throw new Exception(
                "Failed! Expected: "
                + expected + " but was: " + actual);
        }
    }

Note that I started the name of this extracted method with “Assert” – we will get back to the naming soon, for now just assume that this is a good name for a method that verifies that a result matches our expectation. Let’s take one last round and extract the test itself so that its code is in a separate method. This method can be given a name that describes what the test is about:

    public static void Main(string[] args)
    {
        Multiplication_ShouldResultInAMultiplicationOfTwoPassedNumbers();
    }

    //static, so that Main() can call it without creating an object:
    public static void
    Multiplication_ShouldResultInAMultiplicationOfTwoPassedNumbers()
    {
        //Assuming...
        var multiplication = new Multiplication(3,7);

        //when this happens:
        var result = multiplication.Perform();

        //then the result should be...
        AssertTwoIntegersAreEqual(expected: 21, actual: result);
    }

    public static void AssertTwoIntegersAreEqual(
        int expected, int actual)
    {
        if(actual != expected)
        {
            throw new Exception(
                "Failed! Expected: " + expected + " but was: " + actual);
        }
    }

And we’re done. Now if we need another test, e.g. for division, we can just add a new method call to the Main() method and implement it. Inside this new test, we can reuse the AssertTwoIntegersAreEqual() method, since the check for division would also be about comparing two integer values.
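As a rough sketch of that next step, the listing below adds a division test to the primitive approach. Note the assumptions: the text never shows the source of Multiplication, so its implementation here is a guess, and the Division class together with its test method are hypothetical names invented for this illustration.

```csharp
using System;

// Assumed implementation: the text uses this class but never shows its source.
public class Multiplication
{
    private readonly int _first;
    private readonly int _second;

    public Multiplication(int first, int second)
    {
        _first = first;
        _second = second;
    }

    public int Perform()
    {
        return _first * _second;
    }
}

// Hypothetical class, invented for this sketch only.
public class Division
{
    private readonly int _dividend;
    private readonly int _divisor;

    public Division(int dividend, int divisor)
    {
        _dividend = dividend;
        _divisor = divisor;
    }

    public int Perform()
    {
        return _dividend / _divisor; // integer division
    }
}

public static class PrimitiveTests
{
    // The hand-maintained list of tests: every new test needs a call here.
    public static void Main(string[] args)
    {
        Multiplication_ShouldResultInAMultiplicationOfTwoPassedNumbers();
        Division_ShouldResultInADivisionOfTwoPassedNumbers();
        Console.WriteLine("All tests passed");
    }

    public static void
    Multiplication_ShouldResultInAMultiplicationOfTwoPassedNumbers()
    {
        var multiplication = new Multiplication(3, 7);

        var result = multiplication.Perform();

        AssertTwoIntegersAreEqual(expected: 21, actual: result);
    }

    public static void
    Division_ShouldResultInADivisionOfTwoPassedNumbers()
    {
        var division = new Division(21, 7);

        var result = division.Perform();

        // the same assertion is reused for the new test
        AssertTwoIntegersAreEqual(expected: 3, actual: result);
    }

    public static void AssertTwoIntegersAreEqual(int expected, int actual)
    {
        if (actual != expected)
        {
            throw new Exception(
                "Failed! Expected: " + expected + " but was: " + actual);
        }
    }
}
```

Forgetting the second call in Main() would not cause any error. The division test would simply never run.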

As you see, we can easily write automated checks like this, using our primitive methods. However, this approach has some disadvantages:

- Every time we add a new test, we have to update the Main() method with a call to the new test. If we forget to add such a call, the test will never be run. At first, it isn’t a big deal, but as soon as we have dozens of tests, an omission will become hard to notice.

- Imagine your system consists of more than one application – you would have some problems trying to gather summary results for all of the applications that your system consists of.

- Soon you’ll need to write a lot of other methods similar to AssertTwoIntegersAreEqual() – the one we already have compares two integers for equality, but what if we wanted to check a different condition, e.g. that one integer is greater than another? What if we wanted to check the equality not for integers, but characters, strings, floats, etc.? What if we wanted to check some conditions on collections, e.g. that a collection is sorted or that all items in the collection are unique?

- When a test fails, it would be hard to navigate from the command line output to the corresponding line of the source in your IDE. Wouldn’t it be easier if you could click on the error message to take you immediately to the code where the failure occurred?
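To get a feel for how quickly such helper methods pile up, here is a minimal sketch of a few more hand-written assertions. All names in it (PrimitiveAssertions and its methods) are hypothetical and exist only for this illustration. Real test frameworks ship far more complete equivalents.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper class, invented for this illustration only.
public static class PrimitiveAssertions
{
    // Equality for any type, not only integers.
    public static void AssertAreEqual<T>(T expected, T actual)
    {
        if (!EqualityComparer<T>.Default.Equals(expected, actual))
        {
            throw new Exception(
                "Failed! Expected: " + expected + " but was: " + actual);
        }
    }

    // A different condition: actual must be strictly greater than threshold.
    public static void AssertIsGreaterThan<T>(T actual, T threshold)
        where T : IComparable<T>
    {
        if (actual.CompareTo(threshold) <= 0)
        {
            throw new Exception(
                "Failed! Expected a value greater than: "
                + threshold + " but was: " + actual);
        }
    }

    // A condition on a collection: no item may appear twice.
    public static void AssertAllItemsAreUnique<T>(IEnumerable<T> items)
    {
        var seen = new HashSet<T>();
        foreach (var item in items)
        {
            if (!seen.Add(item)) // Add() returns false for a duplicate
            {
                throw new Exception("Failed! Duplicate item: " + item);
            }
        }
    }
}

public static class PrimitiveAssertionsDemo
{
    public static void Main()
    {
        PrimitiveAssertions.AssertAreEqual("abc", "abc");
        PrimitiveAssertions.AssertIsGreaterThan(5, 3);
        PrimitiveAssertions.AssertAllItemsAreUnique(new[] { 1, 2, 3 });
        Console.WriteLine("All checks passed");
    }
}
```

Even this small set leaves out sorted collections, floating-point tolerance and readable failure messages for complex objects, which is part of the reason to reach for an existing framework.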

For these and other reasons, advanced automated test frameworks were created, such as CppUnit (for C++), JUnit (for Java) or NUnit (for C#). In principle, such frameworks are based on the very idea that I sketched above, and they also make up for the deficiencies of our primitive approach. They derive their structure and functionality from Smalltalk’s SUnit and are collectively referred to as the xUnit family of test frameworks.

To be honest, I can’t wait to show you what the test we just wrote looks like when a test framework is used. But first, let’s recap what we’ve got in our straightforward approach to writing automated tests and introduce some terminology that will help us understand how automated test frameworks solve our issues:

- The Main() method serves as a Test List – a place where it is decided which tests to run.

- The Multiplication_ShouldResultInAMultiplicationOfTwoPassedNumbers() method is a Test Method.

- The AssertTwoIntegersAreEqual() method is an Assertion – a condition that, when not met, ends a test with failure.

To our joy, those three elements are present as well when we use a test framework. Moreover, they are far more advanced than what we have. To illustrate this, here is (finally!) the same test we wrote above, now using the xUnit.net test framework:

    [Fact] public void
    Multiplication_ShouldResultInAMultiplicationOfTwoPassedNumbers()
    {
        //Assuming...
        var multiplication = new Multiplication(3,7);

        //when this happens:
        var result = multiplication.Perform();

        //then the result should be...
        Assert.Equal(21, result);
    }

Looking at the example, we can see that the test method itself is the only thing that’s left – the two methods (the test list and assertion) that we previously had are gone now. Well, to tell you the truth, they are not literally gone – it’s just that the test framework offers far better replacements, so we used them instead. Let’s reiterate the three elements of the previous version of the test that I promised would be present after the transition to the test framework:

- The Test List is now created automatically by the framework from all methods marked with a [Fact] attribute. There’s no need to maintain one or more central lists anymore, so the Main() method is no more.

- The Test Method is present and looks almost the same as before.

- The Assertion takes the form of a call to the static Assert.Equal() method – the xUnit.net framework is bundled with a wide range of assertion methods, so I used one of them. Of course, no one stops you from writing your custom assertion if the built-in assertion methods don’t offer what you are looking for.
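To demystify the automatically created Test List a little, here is a rough sketch of how methods marked with an attribute can be discovered and run through reflection. This is a simplification for illustration and not how xUnit.net is actually implemented. PrimitiveTestAttribute, MultiplicationSpecification and PrimitiveRunner are hypothetical names, with the attribute standing in for [Fact].

```csharp
using System;
using System.Reflection;

// Hypothetical marker attribute, standing in for a framework's [Fact].
[AttributeUsage(AttributeTargets.Method)]
public class PrimitiveTestAttribute : Attribute
{
}

// Hypothetical class with two tests and one ordinary method.
public class MultiplicationSpecification
{
    [PrimitiveTest]
    public void ShouldMultiplyTwoNumbers()
    {
        if (3 * 7 != 21) throw new Exception("Failed! Expected: 21");
    }

    [PrimitiveTest]
    public void ShouldFailOnPurpose()
    {
        throw new Exception("Failed on purpose");
    }

    // Not marked with the attribute, so the runner ignores it.
    public void NotATest()
    {
    }
}

public static class PrimitiveRunner
{
    // Runs every marked public method and returns the number of failures.
    public static int RunAllIn(Type testClass)
    {
        int failures = 0;
        foreach (MethodInfo method in testClass.GetMethods())
        {
            if (method.GetCustomAttribute<PrimitiveTestAttribute>() == null)
            {
                continue; // not a test method
            }

            // a fresh instance per test keeps tests independent of each other
            object instance = Activator.CreateInstance(testClass);
            try
            {
                method.Invoke(instance, null);
                Console.WriteLine("PASSED: " + method.Name);
            }
            catch (TargetInvocationException e)
            {
                // exceptions thrown by the invoked method arrive wrapped
                failures++;
                Console.WriteLine(
                    "FAILED: " + method.Name
                    + " (" + e.InnerException.Message + ")");
            }
        }
        return failures;
    }

    public static void Main()
    {
        int failures = RunAllIn(typeof(MultiplicationSpecification));
        Console.WriteLine("Failures: " + failures);
    }
}
```

With discovery done this way, adding a test only requires writing a method and marking it. There is no central list to keep in sync, which addresses the first disadvantage of the primitive approach.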

Phew, I hope I made the transition quite painless for you. Now the last thing to add – as there is no Main() method anymore in the last example, you surely must wonder how we run those tests, right? Ok, the last big secret unveiled – we use an external application for this (we will refer to it using the term Test Runner) – we tell it which assemblies to run and then it loads them, runs them, reports the results, etc. A Test Runner can take various forms, e.g. it can be a console application, a GUI application or a plugin for an IDE. One example is the test runner provided by ReSharper, a plugin for the Visual Studio IDE.

Mocking framework

When we want to test a class that depends on other classes, we may think it’s a good idea to include those classes in the test as well. This, however, does not allow us to test a single object or a small cluster of objects in isolation, where we would be able to verify that just a small part of the application works correctly. Thankfully, if we make our classes depend on interfaces rather than other classes, we can easily implement those interfaces with special “fake” classes that can be crafted in a way that makes our testing easier. For example, objects of such classes may contain pre-programmed return values for some methods. They can also record the methods that are invoked on them and allow the test to verify whether the communication between our object under test and its dependencies is correct.

Nowadays, we can rely on tools to generate such a “fake” implementation of a given interface for us and let us use this generated implementation in place of a real object in tests. How this happens depends on the language. Sometimes, the interface implementations can be generated at runtime (like in Java or C#), sometimes we have to rely more on compile-time generation (e.g. in C++).

Narrowing it down to C# – a mocking framework is just that – a mechanism that allows us to create objects (called “mock objects” or just “mocks”), that adhere to a certain interface, at runtime. It works like this: the type of the interface we want to have implemented is usually passed to a special method which returns a mock object based on that interface (we’ll see an example in a few seconds). Aside from the creation of mock objects, such a framework provides an API to configure the mocks on how they behave when certain methods are called on them and allows us to inspect which calls they received. This is a very powerful feature, because we can simulate or verify conditions that would be difficult to achieve or observe using only production code. Mocking frameworks are not as old as test frameworks so they haven’t been used in TDD since the very beginning.

I’ll give you a quick example of a mocking framework in action now and defer further explanation of their purpose to later chapters, as the full description of mocks and their place in TDD is not so easy to convey.

Let’s pretend that we have a class that allows placing orders and then puts these orders into a database (using an implementation of an interface called OrderDatabase). In addition, it handles any exception that may occur by writing it into a log. The class itself does not do anything important, but let’s try hard to imagine that this is some serious domain logic. Here’s the code for this class:

    public class OrderProcessing
    {
        OrderDatabase _orderDatabase; //OrderDatabase is an interface
        Log _log;

        //we get the database object from outside the class:
        public OrderProcessing(
            OrderDatabase database,
            Log log)
        {
            _orderDatabase = database;
            _log = log;
        }

        //other code...

        public void Place(Order order)
        {
            try
            {
                _orderDatabase.Insert(order);
            }
            catch(Exception e)
            {
                _log.Write("Could not insert an order. Reason: " + e);
            }
        }

        //other code...
    }

Now, imagine we need to test it – how do we do that? I can already see you shake your head and say: “Let’s just create a database connection, invoke the Place() method and see if the record is added properly into the database”. If we did that, the first test would look like this:

    [Fact] public void
    ShouldInsertNewOrderToDatabaseWhenOrderIsPlaced()
    {
        //GIVEN
        var orderDatabase = new MySqlOrderDatabase(); //uses real database
        orderDatabase.Connect();
        orderDatabase.Clean(); //clean up after potential previous tests
        var orderProcessing = new OrderProcessing(orderDatabase, new FileLog());
        var order = new Order(
            name: "Grzesiek",
            surname: "Galezowski",
            product: "Agile Acceptance Testing",
            date: DateTime.Now,
            quantity: 1);

        //WHEN
        orderProcessing.Place(order);

        //THEN
        var allOrders = orderDatabase.SelectAllOrders();
        Assert.Contains(order, allOrders);
    }

At the beginning of the test, we open a connection to the database and clean all existing orders in it (more on that shortly), then create an order object, insert it into the database and query the database for all orders it contains. At the end, we assert that the order we tried to insert is among all orders in the database.

Why do we clean up the database at the beginning of the test? Remember that a database provides persistent storage. If we don’t clean it up before executing the logic of this test, the database may already contain the item we are trying to add, e.g. from previous executions of this test. The database might not allow us to add the same item again and the test would fail. Ouch! It hurts so bad, because we wanted our tests to prove something works, but it looks like it can fail even when the logic is coded correctly. Of what use would such a test be if it couldn’t reliably tell us whether the implemented logic is correct or not? So, to make sure that the state of the persistent storage is the same every time we run this test, we clean up the database before each run.

Now that the test is ready, did we get what we wanted from it? I would be hesitant to answer “yes”. There are several reasons for that:

- The test will most probably be slow because accessing the database is relatively slow. It is not uncommon to have more than a thousand tests in a suite and I don’t want to wait half an hour for results every time I run them. Do you?

- Everyone who wants to run this test will have to set up a special environment, e.g. a local database on their machine. What if their setup is slightly different from ours? What if the schema gets outdated – will everyone manage to notice it and update the schema of their local databases accordingly? Should we re-run our database creation script only to ensure we have got the latest schema available to run our tests against?

- There may be no implementation of the database engine for the operating system running on our development machine if our target is an exotic or mobile platform.

- Note that the test we wrote is only one out of two. We still have to write another one for the scenario where inserting an order ends with an exception. How do we set up the database in a state where it throws an exception? It is possible, but requires significant effort (e.g. deleting a table and recreating it after the test, for use by other tests that might need it to run correctly), which may lead some to the conclusion that it is not worth writing such tests at all.

Now, let’s try to approach this problem differently. Let’s assume that the MySqlOrderDatabase that queries a real database is already tested (this is because I don’t want to get into a discussion on testing database queries just yet – we’ll get to it in later chapters) and that the only thing we need to test is the OrderProcessing class (remember, we’re trying to imagine really hard that there is some serious domain logic coded here). In this situation we can leave the MySqlOrderDatabase out of the test and instead create another, fake implementation of the OrderDatabase that acts as if it were a connection to a database but does not write to a real database at all – it only stores the inserted records in a list in memory. The code for such a fake connection could look like this:

    public class FakeOrderDatabase : OrderDatabase
    {
        // the most recently inserted order
        private Order _receivedOrder;

        public void Insert(Order order)
        {
            _receivedOrder = order;
        }

        public List<Order> SelectAllOrders()
        {
            return new List<Order>() { _receivedOrder };
        }
    }

Note that the fake order database is an instance of a custom class that implements the same interface as MySqlOrderDatabase. Thus, if we try, we can make the tested code use our fake without it knowing.

Let’s replace the real implementation of the order database with the fake instance in the test:

    [Fact] public void
    ShouldInsertNewOrderToDatabaseWhenOrderIsPlaced()
    {
        //GIVEN
        var orderDatabase = new FakeOrderDatabase();
        var orderProcessing = new OrderProcessing(orderDatabase, new FileLog());
        var order = new Order(
            name: "Grzesiek",
            surname: "Galezowski",
            product: "Agile Acceptance Testing",
            date: DateTime.Now,
            quantity: 1);

        //WHEN
        orderProcessing.Place(order);

        //THEN
        var allOrders = orderDatabase.SelectAllOrders();
        Assert.Contains(order, allOrders);
    }

Note that we do not clean the fake database object as we did with the real database since we create a fresh object each time the test is run and the results are stored in a memory location different for each instance. The test will also be much quicker now because we are not accessing the database anymore. What’s more, we can now easily write a test for the error case. How? Just make another fake class, implemented like this:

    public class ExplodingOrderDatabase : OrderDatabase
    {
        public void Insert(Order order)
        {
            throw new Exception();
        }

        public List<Order> SelectAllOrders()
        {
            // not used in the error scenario, but a value must be
            // returned for the class to compile
            return new List<Order>();
        }
    }
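To make this concrete, here is a minimal sketch of how the error-case test could look with such an exploding fake. The Log interface, the RecordingLog class and the empty Order class are my assumptions made only so that the example compiles on its own; OrderProcessing is reduced to the Place() method discussed in this chapter.

```csharp
using System;
using System.Collections.Generic;
using Xunit;

// Minimal stand-ins for types defined elsewhere (assumed shapes).
public class Order { }

public interface OrderDatabase
{
    void Insert(Order order);
    List<Order> SelectAllOrders();
}

public interface Log
{
    void Write(string message);
}

public class OrderProcessing
{
    private readonly OrderDatabase orderDatabase;
    private readonly Log log;

    public OrderProcessing(OrderDatabase orderDatabase, Log log)
    {
        this.orderDatabase = orderDatabase;
        this.log = log;
    }

    public void Place(Order order)
    {
        try
        {
            this.orderDatabase.Insert(order);
        }
        catch (Exception e)
        {
            this.log.Write("Could not insert an order. Reason: " + e);
        }
    }
}

// Fake database whose Insert() always fails.
public class ExplodingOrderDatabase : OrderDatabase
{
    public void Insert(Order order) { throw new Exception("insert failed"); }
    public List<Order> SelectAllOrders() { return new List<Order>(); }
}

// Hypothetical fake log that remembers every message written to it.
public class RecordingLog : Log
{
    public readonly List<string> Messages = new List<string>();
    public void Write(string message) { Messages.Add(message); }
}

public class OrderProcessingSpecification
{
    [Fact] public void
    ShouldWriteToLogWhenInsertingOrderFails()
    {
        //GIVEN
        var log = new RecordingLog();
        var orderProcessing = new OrderProcessing(new ExplodingOrderDatabase(), log);

        //WHEN
        orderProcessing.Place(new Order());

        //THEN
        Assert.Single(log.Messages);
        Assert.StartsWith("Could not insert an order.", log.Messages[0]);
    }
}
```

Note that the log needs a fake of its own here, which already hints at how quickly hand-written fakes multiply.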

Ok, so far so good, but now we have two classes of fake objects to maintain (and chances are we will need even more). Any method added to the OrderDatabase interface must also be added to each of these fake classes. We can spare some coding by making our mocks a bit more generic so that their behavior can be configured using lambda expressions:

    public class ConfigurableOrderDatabase : OrderDatabase
    {
        public Action<Order> doWhenInsertCalled;
        public Func<List<Order>> doWhenSelectAllOrdersCalled;

        public void Insert(Order order)
        {
            doWhenInsertCalled(order);
        }

        public List<Order> SelectAllOrders()
        {
            return doWhenSelectAllOrdersCalled();
        }
    }

Now, we don’t have to create additional classes for new scenarios, but our syntax becomes awkward. Here’s how we configure the fake order database to remember and yield the inserted order:

    var db = new ConfigurableOrderDatabase();
    Order gotOrder = null;
    db.doWhenInsertCalled = o => {gotOrder = o;};
    db.doWhenSelectAllOrdersCalled = () => new List<Order>() { gotOrder };

And if we want it to throw an exception when anything is inserted:

    var db = new ConfigurableOrderDatabase();
    db.doWhenInsertCalled = o => {throw new Exception();};

Thankfully, some smart programmers created libraries that provide further automation in such scenarios. One such library is NSubstitute. It provides an API in the form of C# extension methods, which is why it might seem a bit magical at first, especially if you’re not familiar with C#. Don’t worry, you’ll get used to it.

Using NSubstitute, our first test can be rewritten as:

    [Fact] public void
    ShouldInsertNewOrderToDatabaseWhenOrderIsPlaced()
    {
        //GIVEN
        var orderDatabase = Substitute.For<OrderDatabase>();
        var orderProcessing = new OrderProcessing(orderDatabase, new FileLog());
        var order = new Order(
            name: "Grzesiek",
            surname: "Galezowski",
            product: "Agile Acceptance Testing",
            date: DateTime.Now,
            quantity: 1);

        //WHEN
        orderProcessing.Place(order);

        //THEN
        orderDatabase.Received(1).Insert(order);
    }

Note that we don’t need the SelectAllOrders() method on the database connection interface anymore. It was there only to make writing the test easier – no production code used it. We can delete the method and get rid of some more maintenance trouble. Instead of the call to SelectAllOrders(), mocks created by NSubstitute record all calls received and allow us to use a special method called Received() on them (see the last line of this test), which is actually a camouflaged assertion that checks whether the Insert() method was called exactly once with the order object as a parameter.
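The error scenario can be handled by NSubstitute as well. Below is a rough sketch of how it might look, using the When(...).Do(...) syntax to make Insert() throw. The Log interface and the empty Order class are assumptions I made to keep the example self-contained, and OrderProcessing is reduced to its Place() method.

```csharp
using System;
using NSubstitute;
using Xunit;

// Minimal stand-ins for types defined elsewhere (assumed shapes).
public class Order { }

public interface OrderDatabase
{
    void Insert(Order order);
}

public interface Log
{
    void Write(string message);
}

public class OrderProcessing
{
    private readonly OrderDatabase orderDatabase;
    private readonly Log log;

    public OrderProcessing(OrderDatabase orderDatabase, Log log)
    {
        this.orderDatabase = orderDatabase;
        this.log = log;
    }

    public void Place(Order order)
    {
        try
        {
            this.orderDatabase.Insert(order);
        }
        catch (Exception e)
        {
            this.log.Write("Could not insert an order. Reason: " + e);
        }
    }
}

public class OrderProcessingErrorSpecification
{
    [Fact] public void
    ShouldWriteToLogWhenInsertingOrderFails()
    {
        //GIVEN
        var orderDatabase = Substitute.For<OrderDatabase>();
        var log = Substitute.For<Log>();
        var orderProcessing = new OrderProcessing(orderDatabase, log);
        var order = new Order();

        // configure the mock to throw when this order is inserted
        orderDatabase
            .When(db => db.Insert(order))
            .Do(callInfo => { throw new Exception("insert failed"); });

        //WHEN
        orderProcessing.Place(order);

        //THEN
        log.Received(1).Write(Arg.Any<string>());
    }
}
```

No additional fake classes are needed this time: both the database and the log are created by the library and configured inside the test itself.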

This explanation of mock objects is very shallow and its purpose is only to get you up and running. We’ll get back to mocks later as we’ve only scratched the surface here.

Anonymous values generator

Looking at the test data in the previous section we see that many values are specified literally, e.g. in the following code:

    var order = new Order(
        name: "Grzesiek",
        surname: "Galezowski",
        product: "Agile Acceptance Testing",
        date: DateTime.Now,
        quantity: 1);

the name, surname, product, date, and quantity are very specific. This might suggest that the exact values are important from the perspective of the behavior we are testing. On the other hand, when we look at the tested code again:

    public void Place(Order order)
    {
        try
        {
            this.orderDatabase.Insert(order);
        }
        catch(Exception e)
        {
            this.log.Write("Could not insert an order. Reason: " + e);
        }
    }

we can spot that these values are not used anywhere – the tested class does not use or check them in any way. These values are important from the database point of view, but we already took the real database out of the picture. Doesn’t it trouble you that we fill the order object with so many values that are irrelevant to the test logic itself and that clutter the structure of the test with needless details? To remove this clutter let’s introduce a method with a descriptive name to create the order and hide the details we don’t need from the reader of the test:

    [Fact] public void
    ShouldInsertNewOrderToDatabase()
    {
        //GIVEN
        var orderDatabase = Substitute.For<OrderDatabase>();
        var orderProcessing = new OrderProcessing(orderDatabase, new FileLog());
        var order = AnonymousOrder();

        //WHEN
        orderProcessing.Place(order);

        //THEN
        orderDatabase.Received(1).Insert(order);
    }

    public Order AnonymousOrder()
    {
        return new Order(
            name: "Grzesiek",
            surname: "Galezowski",
            product: "Agile Acceptance Testing",
            date: DateTime.Now,
            quantity: 1);
    }

Now, that’s better. Not only did we make the test shorter, we also provided a hint to the reader that the actual values used to create an order don’t matter from the perspective of tested order-processing logic. Hence the name AnonymousOrder().

By the way, wouldn’t it be nice if we didn’t have to provide the anonymous objects ourselves, but could rely on another library to generate these for us? Surprise, surprise, there is one! It’s called AutoFixture. It is an example of a so-called anonymous values generator (although its creator likes to say that it is also an implementation of the Test Data Builder pattern, but let’s skip this discussion here).

After changing our test to use AutoFixture, we arrive at the following:

    private Fixture any = new Fixture();

    [Fact] public void
    ShouldInsertNewOrderToDatabase()
    {
        //GIVEN
        var orderDatabase = Substitute.For<OrderDatabase>();
        var orderProcessing = new OrderProcessing(orderDatabase, new FileLog());
        var order = any.Create<Order>();

        //WHEN
        orderProcessing.Place(order);

        //THEN
        orderDatabase.Received(1).Insert(order);
    }

In this test, we use an instance of a Fixture class (which is a part of AutoFixture) to create anonymous values for us via a method called Create(). This allows us to remove the AnonymousOrder() method, thus making our test setup shorter.

Nice, huh? AutoFixture has a lot of advanced features, but to keep things simple I like to hide its use behind a static class called Any. The simplest implementation of such a class would look like this:

    public static class Any
    {
        private static Fixture any = new Fixture();

        public static T Instance<T>()
        {
            return any.Create<T>();
        }
    }

In the next chapters, we’ll see many different methods from the Any type, plus the full explanation of the philosophy behind it. The more you use this class, the more it grows with other methods for creating customized objects.
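To give you a rough idea of how such a class might grow, here is a sketch with two extra convenience methods. The names String() and Integer() are hypothetical and chosen only for illustration; the only AutoFixture call used is Create<T>(), which we have already seen. The using directive assumes a recent AutoFixture version, where the types live in the AutoFixture namespace.

```csharp
using AutoFixture;

public static class Any
{
    private static readonly Fixture any = new Fixture();

    // creates an anonymous instance of any type AutoFixture can build
    public static T Instance<T>()
    {
        return any.Create<T>();
    }

    // hypothetical shortcut for "I need a string, any string"
    public static string String()
    {
        return any.Create<string>();
    }

    // hypothetical shortcut for "I need an integer, any integer"
    public static int Integer()
    {
        return any.Create<int>();
    }
}
```

With such methods in place, a line like var name = Any.String(); tells the reader that the exact value does not matter for the behavior being described.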

Summary

This chapter introduced the three tools we’ll use in this book that, when mastered, will make your test-driven development flow smoother. If this chapter leaves you with insufficient justification for their use, don’t worry – we will dive into the philosophy behind them in the coming chapters. For now, I just want you to get familiar with the tools themselves and their syntax. Go on, download these tools, launch them, try to write something simple with them. You don’t need to understand their full purpose yet, just go out and play :-).

It’s not (only) a test

Is the role of a test only to “verify” or “check” whether a piece of software works? Surely, this is a significant part of its runtime value, i.e. the value that we get when we execute the test. However, when we limit our perspective on tests only to this, it could lead us to a conclusion that the only thing that is valuable about having a test is to be able to execute it and view the result. Such acts as designing a test or implementing a test would only have the value of producing something we can run. Reading a test would only have value when debugging. Is this really true?

In this chapter, I argue that the acts of designing, implementing, compiling and reading a test are all very valuable activities. And they let us treat tests as something more than just “automated checks”.

When a test becomes something more

I studied in Łódź, a large city in the center of Poland. As probably all other students in all other countries, we had lectures, exercises and exams. The exams were pretty difficult. As my computer science group was at the faculty of electronic and electric engineering, we had to take a lot of classes that didn’t have anything to do with programming. For instance: electrotechnics, solid-state physics or electronic and electrical metrology.

Knowing that exams were difficult and that it was hard to learn everything during the semester, the lecturers would sometimes give us exemplary exams from previous years. The questions were different from the actual exams that we were to take, but the structure and kinds of questions asked (practice vs. theory etc.) were similar. We would usually get these exemplary questions before we started learning really hard (which was usually at the end of a semester). Guess what happened then? As you might suspect, we did not use the tests we received just to “verify” or “check” our knowledge after we finished learning. Quite the contrary – examining those tests was the very first step of our preparation. Why was that so? What use were the tests when we knew we wouldn’t know most of the answers?

I guess my lecturers would disagree with me, but I find it quite amusing that what we were really doing back then was similar to “lean software development”. Lean is a philosophy where, among other things, there is a rigorous emphasis on eliminating waste. Every feature or product that is produced but is not needed by anyone is considered waste. That’s because if something is not needed, there is no reason to assume it will ever be needed. In that case, the entire feature or product adds no value. Even if it ever will be needed, it very likely will require rework to fit the customer’s needs at that time. In such a case, the work that went into the parts of the original solution that had to be reworked is waste – it had a cost, but brought no benefit (I am not talking about such things as customer demos, but finished, polished features or products).

So, to eliminate waste, we usually try to “pull features from demand” instead of “pushing them” into a product, hoping they can become useful one day. In other words, every feature is there to satisfy a concrete need. If not, the effort is considered wasted and the money drowned.

Going back to the exams example, why can the approach of first looking through the exemplary tests be considered “lean”? That’s because, when we treat passing an exam as our goal, then everything that does not put us closer to this goal is considered wasteful. Let’s suppose the exam concerns theory only – why then practice the exercises? It would probably pay off a lot more to study the theoretical side of the topics. Such knowledge could be obtained from those exemplary tests. So, the tests were a kind of specification of what was needed to pass the exam. They allowed us to pull the value (i.e. our knowledge) from the demand (information obtained from realistic tests) rather than push it from the implementation (i.e. learning everything in a coursebook chapter after chapter).

So the tests became something more. They proved very valuable before the “implementation” (i.e. learning for the exam) because:

- they helped us focus on what was needed to reach our goal

- they brought our attention away from what was not needed to reach our goal

That was the value of a test before learning. Note that the tests we would usually receive were not exactly what we would encounter at the time of the exam, so we still had to guess. Yet, the role of a test as a specification of a need was already visible.

Taking it to the software development land

I chose this lengthy metaphor to show you that writing a “test” is really another way of specifying a requirement or a need and that it’s not counterintuitive to think about it this way – it occurs in our everyday lives. This is also true in software development. Let’s take the following “test” and see what kind of needs it specifies:

    var reporting = new ReportingFeature();
    var anyPowerUser = Any.Of(Users.Admin, Users.Auditor);
    Assert.True(reporting.CanBePerformedBy(anyPowerUser));

(In this example, we used Any.Of() method that returns any enumeration value from the specified list. Here, we say “give me a value that is either Users.Admin or Users.Auditor”.)
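If you are curious how such a method could work, here is a minimal sketch that does not depend on any library. It is only my assumption of one possible implementation: it picks one of the supplied values at random, which is enough to express “any of these”.

```csharp
using System;

public static class Any
{
    private static readonly Random random = new Random();

    // Returns one of the supplied values, chosen at random.
    public static T Of<T>(params T[] possibleValues)
    {
        if (possibleValues.Length == 0)
        {
            throw new ArgumentException("At least one value must be supplied");
        }

        // Next(n) returns a value from 0 (inclusive) to n (exclusive)
        return possibleValues[random.Next(possibleValues.Length)];
    }
}
```

Because the method is generic, it works with enumeration values such as Users.Admin and Users.Auditor as well as with numbers or strings.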

Let’s look at those (only!) three lines of code and imagine that the production code that makes this “test” pass does not exist yet. What can we learn from these three lines about what this production code needs to supply? Count with me:

- We need a reporting feature.

- We need to support the notion of users and privileges.

- We need to support the concept of a power user, who is either an administrator or an auditor.

- Power users need to be allowed to use the reporting feature (note that it does not specify which other users should or should not be able to use this feature – we would need a separate “test” for that).

Also, we are already past the phase of designing an API (because the test is already using it) that will fulfill the need. Don’t you think this is already quite some information about the application functionality from just three lines of code?
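For illustration, the production code that these three lines ask for could be as small as the sketch below. The Users.Guest value is hypothetical and added only to show that other kinds of users may exist. Keep in mind that this single “test” does not require refusing anyone, so even a method that always returned true would satisfy it.

```csharp
// The kinds of users the application knows about
// (Guest is a hypothetical addition for illustration).
public enum Users
{
    Admin,
    Auditor,
    Guest
}

public class ReportingFeature
{
    // Power users (administrators and auditors) may use reporting.
    public bool CanBePerformedBy(Users user)
    {
        return user == Users.Admin || user == Users.Auditor;
    }
}
```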

A Specification rather than a test suite

I hope you can see now that what we called “a test” can also be seen as a kind of specification. This is also the answer to the question I raised at the beginning of this chapter.

In reality, the role of a test, if written before production code, can be broken down even further:

- designing a scenario – is when we specify our requirements by giving concrete examples of behaviors we expect

- writing the test code – is when we specify an API through which we want to use the code that we are testing

- compiling – is when we get feedback on whether the production code has the classes and methods required by the specification we wrote. If it doesn’t, the compilation will fail.

- execution – is where we get feedback on whether the production code exhibits the behaviors that the specification describes

- reading – is where we use the already written specification to obtain knowledge about the production code.

Thus, the name “test” seems to narrow down too much what we are doing here. My feeling is that maybe a different name would be better – hence the term specification.

The discovery of the tests’ role as a specification is quite recent and there is no uniform terminology connected to it yet. Some like to call the process of using tests as specifications Specification By Example to say that the tests are examples that help specify and clarify the functionality being developed. Some use the term BDD (Behavior-Driven Development) to emphasize that writing tests is really about analyzing and describing behaviors. Also, you might encounter different names for some particular elements of this approach, for example, a “test” can be referred to as a “spec”, or an “example”, or a “behavior description”, or a “specification statement” or “a fact about the system” (as you already saw in the chapter on tools, the xUnit.NET framework marks each “test” with a [Fact] attribute, suggesting that by writing it, we are stating a single fact about the developed code. By the way, xUnit.NET also allows us to state ‘theories’ about our code, but let’s leave this topic for another time).

Given this variety in terminology, I’d like to make a deal: to be consistent throughout this book, I will establish a naming convention, but leave you with the freedom to follow your own if you so desire. The reason for this naming convention is pedagogical – I am not trying to create a movement to change established terms or to invent a new methodology or anything – I hope that by using this terminology throughout the book, you’ll look at some things differently3. So, let’s agree that for the sake of this book:

- Specification Statement (or simply Statement, with a capital ‘S’) will be used instead of the words “test” and “test method”

- Specification (or simply Spec, also with a capital ‘S’) will be used instead of the words “test suite” and “test list”

- False Statement will be used instead of “failing test”

- True Statement will be used instead of “passing test”

From time to time I’ll refer back to the “traditional” terminology, because it is better established and because you may have already heard some other established terms and wonder how they should be understood in the context of thinking of tests as a specification.

The differences between executable and “traditional” specifications

You may be familiar with requirements specifications or design specifications that are written in plain English or another spoken language. However, our Specifications differ from them in several ways. In particular, the kind of Specification that we create by writing tests:

- Is not completely written up-front the way many such “traditional” specs have been (which doesn’t mean it’s written after the code is done – more on this in the next chapters).

- Is executable – you can run it to see whether the code adheres to the specification or not. This lowers the risk of inaccuracies in the Specification and falling out of sync with the production code.

- Is written in source code rather than in spoken language – which is both good, as the structure and formality of code leave less room for misunderstanding, and challenging, as great care must be taken to keep such specification readable.

Statement-first programming

What’s the point of writing a specification after the fact?

One of the best-known things about TDD is that a failing test for a behavior of a piece of code is written before this behavior is implemented. This concept is often called “test-first development” and seems controversial to many.

In the previous chapter, I said that in TDD a “test” takes an additional role – one of a statement that is part of a specification. If we put it this way, then the whole controversial concept of “writing a test before the code” does not pose a problem at all. Quite the contrary – it only seems natural to specify what we expect a piece of code to do before we attempt to write it. Does the other way round even make sense? A specification written after completing the implementation is nothing more than an attempt at documenting the existing solution. Sure, such attempts can provide some value when done as a kind of reverse-engineering (i.e. writing the specification for something that was implemented long ago and for which we uncover the previously implicit business rules or policies as we document the existing solution) – it has an excitement of discovery in it, but doing so just after we made all the decisions ourselves doesn’t seem to me like a productive way to spend my time, not to mention that I find it dead boring (you can check whether you’re like me on this one. Try implementing a simple calculator app and then write a specification for it just after it is implemented and manually verified to work). Anyway, I hardly find specifying how something should work after it works creative. Maybe that’s the reason why, throughout the years, I have observed the specifications written after a feature is implemented to be much less complete than the ones written before the implementation.

Oh, and did I tell you that without a specification of any kind we don’t know whether we are done implementing our changes or not? This is because, to determine if the change is complete, we need to compare the implemented functionality to “something”, even if this “something” is only in the customer’s head. In TDD, we “compare” it to expectations set by a suite of automated tests.

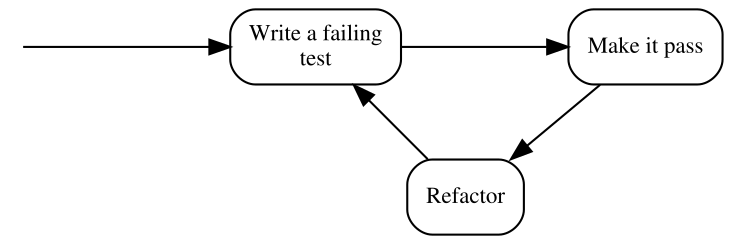

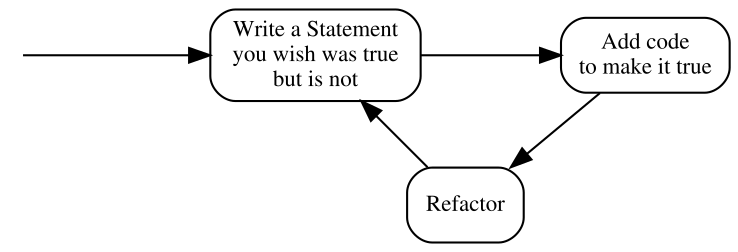

Another thing I mentioned in the previous chapter is that we approach writing a Specification of executable Statements differently from writing a textual design or requirements specification: even though a behavior is implemented after its Specification is ready, we do not write the Specification entirely up-front. The usual sequence is to specify a bit first and then code a bit, repeating it one Statement at a time. When doing TDD, we are traversing repeatedly through a few phases that make up a cycle. We like these cycles to be short, so that we get feedback early and often. This is essential because it allows us to move forward, confident that what we already have works as we intended. It also enables us to make the next cycle more efficient thanks to the knowledge we gained in the previous cycle (if you don’t believe me that fast feedback matters, ask yourself a question: “how many times a day do I compile the code I’m working on?”).

Reading so much about cycles, it is probably no surprise that the traditional illustration of the TDD process is modeled visually as a circular flow:

Note that the above form uses the traditional terminology of TDD, so before I explain the steps, here’s a similar illustration that uses our terms of Specification and Statements:

The second version seems more like common sense than the first one – specifying how something should behave before putting that behavior in place is way more intuitive than testing something that does not yet exist.

Anyway, these three steps deserve some explanation. In the coming chapters, I’ll give you some examples of how this process works in practice and introduce an expanded version, but in the meantime, it suffices to say that:

- “Write a Statement you wish were true but is not” means that the Statement evaluates to false. In the test list, it appears as failing, which most xUnit frameworks mark with red color.

- “Add code to make it true” means that we write just enough code to make the Statement true. In the test list, it appears as passing, which most xUnit frameworks mark with green color. Later in the course of the book, you’ll see how little can be “just enough”.

- “Refactor” is a step that I have silently ignored so far and will do so for several more chapters. Don’t worry, we’ll get back to it eventually. For now, it’s important to be aware that the executable Specification can act as a safety net while we are improving the quality of the code without changing its externally visible behavior: by running the Specification often, we quickly discover any mistake we make in the process.

By the way, this process is sometimes referred to as “Red-Green-Refactor”, because of the colors that xUnit tools display for failing and passing tests. I am just mentioning it here for the record – I will not be using this term further in the book.
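To give the first two steps of the cycle some shape, here is a tiny sketch. The Calculator class and its Add() method are hypothetical and serve only as an illustration; the comments mark which step each piece belongs to.

```csharp
using Xunit;

// Step 1: a Statement we wish were true. As long as Add() does not
// yet compute the sum (e.g. while it still returns 0), this Statement
// evaluates to false and shows up as failing.
public class CalculatorSpecification
{
    [Fact] public void
    ShouldReturnSumOfTwoNumbersWhenAdding()
    {
        //GIVEN
        var calculator = new Calculator();

        //WHEN
        var result = calculator.Add(2, 3);

        //THEN
        Assert.Equal(5, result);
    }
}

// Step 2: code added to make the Statement true.
public class Calculator
{
    public int Add(int a, int b)
    {
        return a + b;
    }
}
```

In a case this small there is nothing left to refactor, so the third step is a no-op and the next cycle can begin with another Statement.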

“Test-First” means seeing a failure

Explaining the illustration with the TDD process above, I pointed out that we are supposed to write a Statement that we wish were true but is not. It means that not only do we have to write a Statement before we provide an implementation that makes it true, we also have to evaluate it (i.e. run it) and watch it fail its assertions before we provide the implementation.

Why is it so important? Isn’t it enough to write the Statement first? Why run it and watch it fail? There are several reasons and I will try to outline some of them briefly.

The main reason for writing a Statement and watching it fail is that otherwise, I don’t have any proof that the Statement can ever fail.

Every accurate Statement fails when it isn’t fulfilled and passes when it is. That’s one of the main reasons why we write it – to see this transition from red to green, which means that what previously was not implemented (and we had a proof for that) is now working (and we have a proof). Observing the transition proves that we made progress.

Another thing to note is that, after being fulfilled, the Statement becomes a part of the executable specification and starts failing as soon as the code stops fulfilling it, for example as a result of a mistake made during code refactoring.

Seeing a Statement proven as false gives us valuable feedback. If we run a Statement only after the behavior it describes has been implemented and it is evaluated as true, how do we know whether it accurately describes a need? We never saw it failing, so what proof do we have that it ever will?

The first time I encountered this argument was before I started thinking of tests as an executable specification. “Seriously?” – I thought – “I know what I’m writing. If I make my tests small enough, it is self-evident that I am describing the correct behavior. This is paranoid”. However, life quickly verified my claims and I was forced to withdraw my arguments. Let me describe three of the ways I experienced how one can write a Statement that is always true, whether the code is correct or not. There are more ways; however, I think giving you three should be illustration enough.

Test-first allowed me to avoid the following situations where Statements cheated me into thinking they were fulfilled even when they shouldn’t be:

1. Accidental omission of including a Statement in a Specification

It’s usually insufficient to just write the code of a Statement – we also have to let the test runner know that a method we wrote is a Statement (not e.g. just a helper method) and it needs to be evaluated, i.e. run by the runner.

Most xUnit frameworks have some kind of mechanism to mark methods as Statements, whether by using attributes (C#, e.g. [Fact]) or annotations (Java, e.g. @Test), or by using macros (C and C++), or by using a naming convention. We have to use such a mechanism to let the runner know that it should execute such methods.

Let’s take xUnit.Net as an example. To turn a method into a Statement in xUnit.Net, we have to mark it with the [Fact] attribute like this:

    public class CalculatorSpecification
    {
        [Fact]
        public void ShouldDisplayAdditionResultAsSumOfArguments()
        {
            //...
        }
    }

There is a chance that we forget to decorate a method with the [Fact] attribute – in such a case, this method is never executed by the test runner. However funny it may sound, this is exactly what happened to me several times. Let’s take the above Statement as an example and imagine that we are writing this Statement post-factum as a unit test in an environment that has, let’s say, more than thirty Statements already written and passing. We have written the code and now we are just creating test after test to ensure the code works. Test – pass, test – pass, test – pass. When I execute tests, I almost always run more than one at a time, since it’s easier for me than selecting what to evaluate each time. Besides, I get more confidence this way that I don’t make a mistake and break something that is already working. Let’s imagine we are doing the same here. Then the workflow is really: Test – all pass, test – all pass, test – all pass…

Over time, I have learned to use the code snippets mechanism of my IDE to generate a template body for my Statements. Still, in the early days, I occasionally wrote something like this:

    public class CalculatorSpecification
    {
        //... some Statements here

        //oops... forgot to insert the attribute!
        public void ShouldDisplayZeroWhenResetIsPerformed()
        {
            //...
        }
    }

As you can see, the [Fact] attribute is missing, which means this Statement will not be executed. This happened not only because I was not using code generators. Sometimes, to create a new Statement, it made sense to copy-paste an existing Statement and change the name and a few lines of code4. I didn’t always remember to include the [Fact] attribute in the copied source code. The compiler did not complain either.

The reason I didn’t see my mistake was that I was running more than one test at a time – when I got a green bar (i.e. all Statements proven true), I assumed that the Statement I just wrote works as well. It was unattractive for me to search for each new Statement in the list and make sure it’s there. The more important reason, however, was that the absence of the [Fact] attribute did not disturb my workflow: test – all pass, test – all pass, test – all pass… In other words, my process did not give me any feedback that I made a mistake. So, in such a case, what I end up with is a Statement that not only will never be proven false – it won’t be evaluated at all.

How does treating tests as Statements and evaluating them before making them true help here? The fundamental difference is that the workflow of TDD is: test – fail – pass, test – fail – pass, test – fail – pass… In other words, we expect each Statement to be proven false at least once. So every time we miss the “fail” stage, we get feedback from our process that something suspicious is happening. This allows us to investigate and fix the problem if necessary.
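If you wonder why neither the compiler nor the runner complains about the missing attribute, the sketch below may help. It is a deliberately simplified imitation of Statement discovery, written by me for illustration (real runners do more than this): it uses reflection to list only the methods marked with [Fact]. The StatementDiscovery class is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;
using Xunit;

public class CalculatorSpecification
{
    [Fact]
    public void ShouldDisplayAdditionResultAsSumOfArguments()
    {
        //...
    }

    //oops... forgot to insert the attribute!
    public void ShouldDisplayZeroWhenResetIsPerformed()
    {
        //...
    }
}

public static class StatementDiscovery
{
    // Simplified imitation of what a runner does: only methods marked
    // with [Fact] (or an attribute derived from it) count as Statements.
    public static List<string> StatementNamesIn(Type specification)
    {
        return specification
            .GetMethods(BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly)
            .Where(method => method.GetCustomAttribute<FactAttribute>() != null)
            .Select(method => method.Name)
            .ToList();
    }
}
```

Calling StatementDiscovery.StatementNamesIn(typeof(CalculatorSpecification)) yields only the first method. The second one is a perfectly valid public method, so there is nothing to report: it is simply never treated as a Statement.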

2. Misplacing Statement setup

Ok, this may sound even funnier, but it happened to me a couple of times as well, so I assume it may happen to you one day, especially if you are in a hurry.

Consider the following toy example: we want to validate a simple data structure that models a frame of data that can arrive via a network. The structure looks like this:

```csharp
public class Frame
{
    public int timeSlot;
}
```

and we need to write a Specification for a Validation class that accepts a Frame object as an argument and checks whether the time slot (whatever it is) inside it is correct. The correctness is determined by comparing the time slot to a maximum allowed value specified in a constant called TimeSlot.MaxAllowed (so it’s a constant defined in a TimeSlot class). If the frame time slot is higher than the maximum allowed, it is assumed incorrect and the validation should return false. Otherwise, true should be returned.

Let’s take a look at the following Statement which specifies that setting a value higher than allowed to a field of a frame should make the validation fail:

```csharp
[Fact]
public void ShouldRecognizeTimeSlotAboveMaximumAllowedAsInvalid()
{
    var frame = new Frame();
    var validation = new Validation();
    var timeSlotAboveMaximumAllowed = TimeSlot.MaxAllowed + 1;
    var result = validation.PerformForTimeSlotIn(frame);
    frame.timeSlot = timeSlotAboveMaximumAllowed;
    Assert.False(result);
}
```

Note how the method PerformForTimeSlotIn(), which triggers the specified behavior, is accidentally called before the value of timeSlotAboveMaximumAllowed is assigned to the frame. As a result, this value is not taken into account when the validation is executed. If, for example, we make a mistake in the implementation of the Validation class so that it returns false for values below the maximum and not above, such a mistake may go unnoticed, because the Statement will always be true.

Again, this is a toy example – I just used it as an illustration of something that can happen when dealing with more complex cases.
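The effect can be reproduced with a small sketch. Everything except `Frame` is an assumption made for illustration: the value of `TimeSlot.MaxAllowed` is invented, `BrokenValidation` is a deliberately wrong implementation of the kind described above, and `SetupOrderDemo` is a hypothetical helper that returns the validation result instead of asserting on it.

```csharp
using System;

public class Frame
{
    public int timeSlot;
}

// Assumed value; the text only says that such a constant exists.
public static class TimeSlot
{
    public const int MaxAllowed = 15;
}

// Deliberately broken: returns false for values BELOW the maximum instead of above it.
public class BrokenValidation
{
    public bool PerformForTimeSlotIn(Frame frame)
    {
        return frame.timeSlot >= TimeSlot.MaxAllowed;
    }
}

public static class SetupOrderDemo
{
    // Setup misplaced after the call: the validation sees the default timeSlot (0).
    public static bool ResultWithMisplacedSetup()
    {
        var frame = new Frame();
        var validation = new BrokenValidation();
        var result = validation.PerformForTimeSlotIn(frame);
        frame.timeSlot = TimeSlot.MaxAllowed + 1; // too late to matter
        return result;
    }

    // Setup done before the call: the validation sees the value above the maximum.
    public static bool ResultWithCorrectSetup()
    {
        var frame = new Frame();
        frame.timeSlot = TimeSlot.MaxAllowed + 1;
        var validation = new BrokenValidation();
        return validation.PerformForTimeSlotIn(frame);
    }
}
```

With the misplaced setup, the result is false, so `Assert.False(result)` would pass against the broken implementation. With the setup in the right place, the result is true, the assertion would fail, and the mistake would be exposed.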

3. Using static data inside the production code

Once in a while, we have to jump in and add some new Statements to an existing Specification and some logic to the class it describes. Let’s assume that the class and its Specification were written by someone other than us. Imagine the code we are talking about is a wrapper around our product’s XML configuration file. We decide to write our Statements after applying the changes (“well”, we may say, “we’re all protected by the Specification that is already in place, so we can make our change without the risk of accidentally breaking existing functionality, and then just test our changes and it’s all good…”).

We start coding… done. Now we start writing this new Statement that describes the functionality we just added. After examining the Specification class, we can see that it has a member field like this:

```csharp
public class XmlConfigurationSpecification
{
    XmlConfiguration config = new XmlConfiguration(xmlFixtureString);

    //...
```

This field sets up an object used by every Statement. So, each Statement uses a config object initialized with the same xmlFixtureString value. Another quick examination leads us to discover the following content of the xmlFixtureString:

```xml
<config>
  <section name="General Settings">
    <subsection name="Network Related">
      <parameter name="IP">192.168.3.2</parameter>
      <parameter name="Port">9000</parameter>
      <parameter name="Protocol">AHJ-112</parameter>
    </subsection>
    <subsection name="User Related">
      <parameter name="login">Johnny</parameter>
      <parameter name="Role">Admin</parameter>
      <parameter name="Password Expiry (days)">30</parameter>
    </subsection>
    <!-- and so on and on and on...-->
  </section>
</config>
```

The string is already pretty large and messy since it contains all information that is required by the existing Statements. Let’s assume we need to write tests for a little corner case that does not need all this crap inside this string. So, we decide to start afresh and create a separate object of the XmlConfiguration class with our own, minimal string. Our Statement begins like this:

```csharp
string customFixture = CreateMyOwnFixtureForThisTestOnly();
var configuration = new XmlConfiguration(customFixture);
//...
```

And goes on with the scenario. When we execute it, it passes – cool… not. Ok, what’s wrong with this? At first sight, everything’s OK, until we read the source code of the XmlConfiguration class carefully. Inside, we can see how the XML string is stored:

```csharp
private static string xmlText; //note the static keyword!
```

It’s a static field, which means that its value is shared by all instances of the class. What the…? Well, well, here’s what happened: the author of this class applied a small optimization. He thought: “In this app, the configuration is only modified by members of the support staff and to do it, they have to shut down the system, so, there is no need to read the XML file every time an XmlConfiguration object is created. I can save some CPU cycles and I/O operations by reading it only once when the first object is created. Later objects will just use the same XML!”. Good for him, not so good for us. Why? Because, depending on the order in which the Statements are evaluated, either the original XML string will be used for all Statements or our custom one! Thus the Statements in this Specification may pass or fail for the wrong reason – because they accidentally use the wrong XML.
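A minimal sketch of this behavior follows. It is a simplified stand-in and not the real class: I assume the “read only once” optimization boils down to a null check in the constructor, and both the `RawXml` property and the `StaticDataDemo` helper are hypothetical names added only to make the effect observable.

```csharp
using System;

// Simplified stand-in for the XmlConfiguration class described in the text.
public class XmlConfiguration
{
    private static string xmlText; //note the static keyword!

    public XmlConfiguration(string xml)
    {
        // The assumed "optimization": only the first created object stores the XML.
        if (xmlText == null)
        {
            xmlText = xml;
        }
    }

    // Hypothetical accessor, added only so that the sketch can show what is stored.
    public string RawXml => xmlText;
}

public static class StaticDataDemo
{
    // Creates the shared fixture object first, then our "custom" one,
    // and returns the XML that the custom object actually ends up using.
    public static string XmlSeenBySecondObject()
    {
        var shared = new XmlConfiguration("<config><!-- large shared fixture --></config>");
        var custom = new XmlConfiguration("<config><!-- minimal custom fixture --></config>");
        return custom.RawXml;
    }
}
```

In this sketch, the second object silently ends up with the first object’s XML. If the objects were created in the opposite order, the shared fixture would be the one that gets ignored, which is why the outcome depends on the order in which the Statements are evaluated.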

Starting development from a Statement that we expect to fail may help when such a Statement passes even though the behavior it describes is not implemented yet.

“Test-After” often ends up as “Test-Never”

Consider again the question I already asked in this chapter: did you ever have to write requirements or a design document for something that you already implemented? Was it fun? Was it valuable? Was it creative? As for me, my answer to these questions is no. I observed that the same answer applied to formulating my executable Specification. By observing myself and other developers, I concluded that after we’ve written the code, we have little motivation to specify what we wrote – some of the pieces of code “we can just see are correct”, other pieces “we already saw working” when we compiled and deployed our changes and ran a few manual checks… The design is ready… Specification? Maybe next time… Thus, the Specification may never get to be written at all and if it is written, I often find that it covers most of the main flow of the program, but lacks some Statements saying what should happen in case of errors, etc.

Another reason for ending up not writing the Specification might be time pressure, especially in teams that are not yet mature or do not have very strong professional ethics. Many times, I have seen people react to pressure by dropping everything besides writing the code that directly implements a feature. Among the things that get dropped are design, requirements and tests. And learning as well. I have often seen teams that, when under pressure, stopped experimenting and learning and reverted to old “safe” behaviors in a mindset of “saving a sinking ship” and “hoping for the best”. In such situations, I’ve seen the pressure rise as the project approached its deadline or milestone, so leaving the Specification until the end means that it’s very likely to get dropped, especially when the changes are (to a degree) tested manually later anyway.

On the other hand, when doing TDD (as we will see in the coming chapters) our Specification grows together with the production code, so there is much less temptation to drop it entirely. Moreover, in TDD, a written Specification Statement is not an addition to the code, but rather a reason to write the code. Creating an executable Specification becomes an indispensable part of implementing a feature.

“Test-After” often leads to design rework

I like reading and watching Uncle Bob (Robert C. Martin). One day I was listening to his keynote at Ruby Midwest 2011, called Architecture The Lost Years. At the end, Robert made some digressions, one of them about TDD. He said that writing tests after the code is not TDD and instead called it “a waste of time”.

My initial thought was that the comment was maybe a bit exaggerated and only about missing all the benefits that starting with a false Statement brings me: the ability to see the Statement fail, the ability to do a clean-sheet analysis, etc. However, now I feel that there’s much more to it, thanks to something I learned from Amir Kolsky and Scott Bain – to be able to write a maintainable Specification for a piece of code, the code must have a high level of testability. We will talk about this quality in part 2 of this book, but for now let’s assume the following simplified definition: the higher the testability of a piece of code (e.g. a class), the easier it is to write a Statement for its behavior.

Now, where’s the waste in writing the Specification after the code is written? To find out, let’s compare the Statement-first and code-first approaches. For new (non-legacy) code, my Statement-first workflow and approach to testability usually look like this:

- Write a Statement that is false to start with (during this step, detect and correct testability issues even before the production code is written).

- Write code to make the Statement true.

And here’s what I often see programmers do when they write the code first (extra steps marked in bold):

1. Write some production code without considering how it will be tested (after this step, the testability is often suboptimal as it’s usually not being considered at this point).

2. **Start writing a unit test** (this might not seem like an extra step, since it’s also present in the previous approach, but once you reach step 5, you’ll know what I mean).

3. **Notice that unit testing the code we wrote is cumbersome and unsustainable and the tests start to look messy as they try to work around the testability issues.**

4. **Decide to improve testability by restructuring the code, e.g. to be able to isolate objects and use techniques such as mock objects.**

5. Write unit tests (this time it should be easier as the testability of the tested code is better).

What is the equivalent of the marked steps in the Statement-first approach? There is none! Doing these things is a waste of time! Sadly, this is a waste I encounter a lot.

Summary

In this chapter, I tried to show you that the choice of when we write our Specification often makes a huge difference and that there are numerous benefits of starting with a Statement. When we consider the Specification as what it really is – not only as a suite of tests that check runtime correctness – then the Statement-first approach becomes less awkward and less counter-intuitive.

Practicing what we have already learned

And now, a taste of things to come!

– Shang Tsung, Mortal Kombat The Movie

The above line is spoken just before a fight scene in which a nameless warrior jumped at Sub-Zero only to be frozen and broken into multiple pieces upon hitting the wall. The scene was not spectacular in terms of fighting technique or length. Also, the nameless guy didn’t even try hard – the only thing he did was to jump only to be hit by a freezing ball, which, by the way, he actually could see coming. It looked a lot like the fight was set up only to showcase Sub-Zero’s freezing ability. Guess what? In this chapter, we’re going to do roughly the same thing – set up a fake, easy scenario just to showcase some of the basic TDD elements!