
The author has used good faith effort in preparation of this book, but makes no expressed or implied warranty of any kind and disclaims without limitation all responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. Use of the information and instructions in this book is at your own risk.

The cover image is in the public domain and available from the New York Public Library. The cover font is Open Sans Condensed, released by Steve Matteson under the Apache License version 2.0.

1. Introduction

With the enthusiasm of youth, the QuantLib web site used to state that QuantLib aimed at becoming “the standard free/open-source financial library.” By interpreting such a statement a bit loosely, one might say that it has somewhat succeeded, albeit by employing the rather devious trick of being the first, and thus for some time the only open-source financial library1.

Standard or not, the project is thriving; at the time of this writing, each new release is downloaded a few thousand times, there is a steady stream of contributions from users, and the library seems to be used in the real world—as far as I can guess through the usual shroud of secrecy used in the financial world. All in all, as a project administrator, I can declare myself a happy camper.

But all the more for that, the lack of proper documentation shows. Although a detailed class reference is available (that was an easy task, since it can be generated automatically) it doesn’t let one see the forest for the trees; so that a new user might get the impression that the QuantLib developers share the views of the Bellman from Lewis Carroll’s Hunting of the Snark:

“What use are Mercator’s North Poles and Equators,

Tropics, Zones and Meridian Lines?”

So the Bellman would cry: and the crew would reply,

“They are merely conventional signs!”

The purpose of this book is to fill a part of the existing void. It is a report on the design and implementation of QuantLib, alike in spirit—but, hopefully, with less frightening results—to the How I did it book2 prominently featured in Mel Brooks’ Young Frankenstein. If you are—or want to be—a QuantLib user, you will find here useful information on the design of the library that might not be readily apparent when reading the code. If you’re working in quantitative finance, even if not using QuantLib, you can still read it as a field report on the design of a financial library. You will find that it covers issues that you might also face, as well as some possible solutions and their rationale. Based on your constraints, it is possible—even likely—that you will choose other solutions; but you might profit from this discussion just the same.

In my descriptions, I’ll also point out shortcomings in the current implementation; not to disparage the library (I’m pretty much involved in it, after all) but for more useful purposes. On the one hand, describing the existing pitfalls will help developers avoid them; on the other hand, it might show how to improve the library. Indeed, it already happened that reviewing the code for this book caused me to go back and modify it for the better.

For reasons of both space and time, I won’t be able to cover every aspect of the library. In the first half of the book, I’ll describe a few of the most important classes, such as those modeling financial instruments and term structures; this will give you a view of the larger architecture of the library. In the second half, I’ll describe a few specialized frameworks, such as those used for creating Monte Carlo or finite-differences models. Some of those are more polished than others; I hope that their current shortcomings will be as interesting as their strong points.

The book is primarily aimed at users wanting to extend the library with their own instruments or models; if you desire to do so, the description of the available class hierarchies and frameworks will provide you with information about the hooks you need to integrate your code with QuantLib and take advantage of its facilities. If you’re not this kind of user, don’t close the book yet; you can find useful information too. However, you might want to look at the QuantLib Python Cookbook instead. It can be useful to C++ users, too.

And now, as is tradition, a few notes on the style and requirements of this book.

Knowledge of both C++ and quantitative finance is assumed. I’ve no pretense of being able to teach you either one, and this book is thick enough already. Here, I just describe the implementation and design of QuantLib; I’ll leave it to other and better authors to describe the problem domain on one hand, and the language syntax and tricks on the other.

As you already noticed, I’ll write in the first person singular. True, it might look rather self-centered—as a matter of fact, I hope you still haven’t put down the book in annoyance—but we would feel rather pompous if we were to use the first person plural. The author of this book feels the same about using the third person. After a bit of thinking, I opted for a less formal but more comfortable style (which, as you noted, also includes a liberal use of contractions). For the same reason, I’ll be addressing you instead of the proverbial acute reader. The use of the singular will also help to avoid confusion; when I use the plural, I describe work done by the QuantLib developers as a group.

I will describe the evolution of a design when it is interesting on its own, or relevant for the final result. For the sake of clarity, in most cases I’ll skip over the blind alleys and wrong turns taken; put together design decisions which were made at different times; and only show the final design, sometimes simplified. This will still leave me with plenty to say: in the words of the Alabama Shakes, “why” is an awful lot of question.

I will point out the use of design patterns in the code I describe. Mind you, I’m not advocating cramming your code with them; they should be applied when they’re useful, not for their own sake.3 However, QuantLib is now the result of several years of coding and refactoring, based both on user feedback and on new requirements being added over time. It is only natural that the design evolved toward patterns.

I will apply to the code listings in the book the same conventions used in the library and outlined in appendix B. I will depart from them in one respect: due to the limitations on line length, I might drop the std and boost namespaces from type names. When the listings need to be complemented by diagrams, I will be using UML; for those not familiar with this language, a concise guide can be found in Fowler, 2003.

And now, let’s dive.

2. Financial instruments and pricing engines

The statement that a financial library must provide the means to price financial instruments would certainly have appealed to Monsieur de La Palisse. However, that is only a part of the whole problem; a financial library must also provide developers with the means to extend it by adding new pricing functionality.

Foreseeable extensions are of two kinds, and the library must allow either one. On the one hand, it must be possible to add new financial instruments; on the other hand, it must be feasible to add new means of pricing an existing instrument. Both kinds have a number of requirements, or in pattern jargon, forces that the solution must reconcile. This chapter details such requirements and describes the design that allows QuantLib to satisfy them.

2.1 The Instrument class

In our domain, a financial instrument is a concept in its own right. For this reason alone, any self-respecting object-oriented programmer will code it as a base class from which specific instruments will be derived.

The idea, of course, is to be able to write code such as

    for (i = portfolio.begin(); i != portfolio.end(); ++i)
        totalNPV += i->NPV();

where we don’t have to care about the specific type of each instrument. However, this also prevents us from knowing what arguments to pass to the NPV method, or even what methods to call. Therefore, even the two seemingly harmless lines above tell us that we have to step back and think a bit about the interface.

2.1.1 Interface and requirements

The broad variety of traded assets, which range from the simplest to the most exotic, implies that any method specific to a given class of instruments (say, equity options) is bound not to make sense for some other kind (say, interest-rate swaps). Thus, very few methods were singled out as generic enough to belong to the Instrument interface. We limited ourselves to those returning the instrument’s present value (possibly with an associated error estimate) and indicating whether or not the instrument has expired. Since we can’t specify what arguments are needed,4 the methods take none; any needed input will have to be stored by the instrument. The resulting interface is shown in the listing below.

Listing: Instrument class.

    class Instrument {
      public:
        virtual ~Instrument();
        virtual Real NPV() const = 0;
        virtual Real errorEstimate() const = 0;
        virtual bool isExpired() const = 0;
    };

As is good practice, the methods were first declared as pure virtual ones; but—as Sportin’ Life points out in Gershwin’s Porgy and Bess—it ain’t necessarily so. There might be some behavior that can be coded in the base class. In order to find out whether this was the case, we had to analyze what to expect from a generic financial instrument and check whether it could be implemented in a generic way. Two such requirements were found at different times, and their implementation changed during the development of the library; I present them here in their current form.

One is that a given financial instrument might be priced in different ways (e.g., with one or more analytic formulas or numerical methods) without having to resort to inheritance. At this point, you might be thinking “Strategy pattern”. It is indeed so; I devote section 2.2 to its implementation.

The second requirement came from the observation that the value of a financial instrument depends on market data. Such data are by their nature variable in time, so that the value of the instrument varies in turn; another cause of variability is that any single market datum can be provided by different sources. We wanted financial instruments to maintain links to these sources so that, upon different calls, their methods would access the latest values and recalculate the results accordingly; also, we wanted to be able to transparently switch between sources and have the instrument treat this as just another change of the data values.

We were also concerned with a potential loss of efficiency. For instance, we could monitor the value of a portfolio in time by storing its instruments in a container, periodically poll their values, and add the results. In a simple implementation, this would trigger recalculation even for those instruments whose inputs did not change. Therefore, we decided to add to the instrument methods a caching mechanism: one that would cause previous results to be stored and only recalculated when any of the inputs change.

2.1.2 Implementation

The code managing the caching and recalculation of the instrument value was written for a generic financial instrument by means of two design patterns.

When any of the inputs change, the instrument is notified by means of the Observer pattern (Gamma et al, 1995). The pattern itself is briefly described5 in appendix A; I describe here the participants.

Obviously enough, the instrument plays the role of the observer while the input data play that of the observables. In order to have access to the new values after a change is notified, the observer needs to maintain a reference to the object representing the input. This might suggest some kind of smart pointer; however, the behavior of a pointer is not sufficient to fully describe our problem. As I already mentioned, a change might come not only from the fact that values from a data feed vary in time; we might also want to switch to a different data feed. Storing a (smart) pointer would give us access to the current value of the object pointed to; but our copy of the pointer, being private to the observer, could not be made to point to a different object. Therefore, what we need is the smart equivalent of a pointer to pointer. This feature was implemented in QuantLib as a class template and given the name of Handle. Again, details are given in appendix A; relevant to this discussion is the fact that copies of a given Handle share a link to an object. When the link is made to point to another object, all copies are notified and allow their holders to access the new pointee. Furthermore, Handles forward any notifications from the pointed object to their observers.
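The pointer-to-pointer idea can be tried out in isolation. What follows is only a minimal sketch under my own assumptions, not the library's Handle: ToyHandle, ToyQuote and valueSeenAfterRelink are hypothetical names, relinking is exposed on the handle itself, and the notification of observers is left out entirely.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Hypothetical stand-in for an observable market datum.
struct ToyQuote {
    double value;
};

// A minimal "pointer to pointer": every copy of a ToyHandle shares one Link,
// and the Link holds the pointer to the current pointee.
template <class T>
class ToyHandle {
    struct Link {
        std::shared_ptr<T> current;
    };
    std::shared_ptr<Link> link_;
  public:
    explicit ToyHandle(std::shared_ptr<T> p = std::shared_ptr<T>())
    : link_(std::make_shared<Link>()) {
        link_->current = std::move(p);
    }
    // Relinking modifies the shared Link, so all copies see the new pointee.
    void linkTo(std::shared_ptr<T> p) { link_->current = std::move(p); }
    bool empty() const { return !link_->current; }
    const T& operator*() const {
        assert(!empty());
        return *link_->current;
    }
    const T* operator->() const {
        assert(!empty());
        return link_->current.get();
    }
};

// Usage: an "instrument" keeps a copy; the owner of the original relinks it.
inline double valueSeenAfterRelink() {
    ToyHandle<ToyQuote> original(std::make_shared<ToyQuote>(ToyQuote{1.0}));
    ToyHandle<ToyQuote> copy = original;   // shares the same link
    original.linkTo(std::make_shared<ToyQuote>(ToyQuote{2.0}));
    return copy->value;                    // read through the copy
}
```

A plain shared_ptr copy would keep pointing to the first quote after the switch; the extra level of indirection is what lets the copy follow it.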

Finally, classes were implemented which act as observable data and can be stored into Handles. The most basic is the Quote class, representing a single varying market value. Other inputs for financial instrument valuation can include more complex objects such as yield or volatility term structures.6

Another problem was to abstract out the code for storing and recalculating cached results, while still leaving it to derived classes to implement any specific calculations.

In earlier versions of QuantLib, the functionality was included in the Instrument class itself; later, it was extracted and coded into another class (somewhat unsurprisingly called LazyObject) which is now reused in other parts of the library. An outline of the class is shown in the following listing.

Listing: LazyObject class.

    class LazyObject : public virtual Observer,
                       public virtual Observable {
      protected:
        mutable bool calculated_;
        virtual void performCalculations() const = 0;
      public:
        void update() { calculated_ = false; }
        virtual void calculate() const {
            if (!calculated_) {
                calculated_ = true;
                try {
                    performCalculations();
                } catch (...) {
                    calculated_ = false;
                    throw;
                }
            }
        }
    };

The code is not overly complex. A boolean data member calculated_ is defined which keeps track of whether results were calculated and are still valid. The update method, which implements the Observer interface and is called upon notification from observables, sets this boolean to false and thus invalidates previous results.

The calculate method is implemented by means of the Template Method pattern (Gamma et al, 1995), sometimes also called non-virtual interface. As explained in the Gang of Four book, the constant part of the algorithm (in this case, the management of the cached results) is implemented in the base class; the varying parts (here, the actual calculations) are delegated to a virtual method, namely, performCalculations, which is called in the body of the base-class method. Therefore, derived classes will only implement their specific calculations without having to care about caching: the relevant code will be injected by the base class.

The logic of the caching is simple. If the current results are no longer valid, we let the derived class perform the needed calculations and flag the new results as up to date. If the current results are valid, we do nothing.

However, the implementation is not as simple. You might wonder why we had to insert a try block setting calculated_ beforehand and a handler rolling back the change before throwing the exception again. After all, we could have written the body of the algorithm more simply; for instance, as in the following, seemingly equivalent code, that doesn’t catch and rethrow exceptions:

    if (!calculated_) {
        performCalculations();
        calculated_ = true;
    }

The reason is that there are cases (e.g., when the lazy object is a yield term structure which is bootstrapped lazily) in which performCalculations happens to recursively call calculate. If calculated_ were not set to true, the if condition would still hold and performCalculations would be called again, leading to infinite recursion. Setting the flag to true prevents this from happening; however, care must now be taken to restore it to false if an exception is thrown. The exception is then rethrown so that it can be caught by the installed error handlers.
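Both effects, the guard against re-entrant calls and the rollback on failure, can be observed with a small stand-alone sketch. It assumes nothing from the library: ToyLazy mirrors the logic of the listing without the Observer machinery, and ReentrantToy is a hypothetical object whose calculation calls calculate again, as a lazily bootstrapped curve might.

```cpp
#include <cassert>
#include <stdexcept>

// Toy counterpart of the caching logic described above; not QuantLib code.
class ToyLazy {
  public:
    virtual ~ToyLazy() = default;
    void update() { calculated_ = false; }   // invalidate cached results
    void calculate() const {
        if (!calculated_) {
            calculated_ = true;              // set first: guards re-entrant calls
            try {
                performCalculations();
            } catch (...) {
                calculated_ = false;         // roll back so a later call retries
                throw;
            }
        }
    }
    bool calculated() const { return calculated_; }
  protected:
    virtual void performCalculations() const = 0;
    mutable bool calculated_ = false;
};

// Hypothetical object whose calculation calls calculate() again.
class ReentrantToy : public ToyLazy {
  public:
    mutable int runs = 0;
    bool fail = false;
  protected:
    void performCalculations() const override {
        ++runs;
        calculate();                         // no-op: the flag is already true
        if (fail)
            throw std::runtime_error("calculation failed");
    }
};

inline int runsAfterTwoCalls() {
    ReentrantToy t;
    t.calculate();
    assert(t.calculated());
    t.calculate();        // cached: performCalculations is not run again
    return t.runs;
}

inline bool flagRestoredAfterFailure() {
    ReentrantToy t;
    t.fail = true;
    try {
        t.calculate();
    } catch (const std::runtime_error&) {
        // expected: the toy calculation always fails here
    }
    return !t.calculated();
}
```

With the simpler body shown earlier, the nested call inside performCalculations would find the flag still false and recurse without end.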

A few more methods are provided in LazyObject which enable users to prevent or force a recalculation of the results. They are not discussed here. If you’re interested, you can heed the advice often given by master Obi-Wan Kenobi: “Read the source, Luke.”

The Instrument class inherits from LazyObject. In order to implement the interface outlined earlier, it decorates the calculate method with code specific to financial instruments. The resulting method is shown in the listing below, together with other bits of supporting code.

Listing: Instrument class.

    class Instrument : public LazyObject {
      protected:
        mutable Real NPV_;
      public:
        Real NPV() const {
            calculate();
            return NPV_;
        }
        void calculate() const {
            if (isExpired()) {
                setupExpired();
                calculated_ = true;
            } else {
                LazyObject::calculate();
            }
        }
        virtual void setupExpired() const {
            NPV_ = 0.0;
        }
    };

Once again, the added code follows the Template Method pattern to delegate instrument-specific calculations to derived classes. The class defines an NPV_ data member to store the result of the calculation; derived classes can declare other data members to store specific results.7 The body of the calculate method calls the virtual isExpired method to check whether the instrument is an expired one. If this is the case, it calls another virtual method, namely, setupExpired, which has the responsibility of giving meaningful values to the results; its default implementation sets NPV_ to 0 and can be called by derived classes. The calculated_ flag is then set to true. If the instrument is not expired, the calculate method of LazyObject is called instead, which in turn will call performCalculations as needed. This imposes a contract on the latter method, namely, its implementations in derived classes are required to set NPV_ (as well as any other instrument-specific data member) to the result of the calculations. Finally, the NPV method ensures that calculate is called before returning the answer.
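To see the contract at work without the library, here is a simplified sketch. All names in it (ToyInstrument, ToyPayment) are hypothetical, dates are plain day counts, and the exception handling of LazyObject is left out for brevity.

```cpp
#include <cassert>

// Toy counterpart of the Instrument logic; names are hypothetical.
class ToyInstrument {
  public:
    virtual ~ToyInstrument() = default;
    double NPV() const {
        calculate();              // make sure NPV_ is up to date
        return NPV_;
    }
    virtual bool isExpired() const = 0;
  protected:
    void calculate() const {
        if (isExpired()) {
            setupExpired();
            calculated_ = true;
        } else if (!calculated_) {
            // simplified: no rollback of the flag on exceptions
            calculated_ = true;
            performCalculations();
        }
    }
    virtual void setupExpired() const { NPV_ = 0.0; }
    // Contract: implementations must assign NPV_.
    virtual void performCalculations() const = 0;
    mutable double NPV_ = 0.0;
    mutable bool calculated_ = false;
};

// Hypothetical single-payment instrument with a fixed discount factor.
class ToyPayment : public ToyInstrument {
  public:
    ToyPayment(double amount, double discount, int payDay, int today)
    : amount_(amount), discount_(discount), payDay_(payDay), today_(today) {
        assert(discount_ > 0.0);
    }
    bool isExpired() const override { return today_ > payDay_; }
  protected:
    void performCalculations() const override {
        NPV_ = amount_ * discount_;
    }
  private:
    double amount_, discount_;
    int payDay_, today_;
};
```

The derived class only says when it is expired and how to compute its value; the decision on which path to take stays in the base class.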

2.1.3 Example: interest-rate swap

I end this section by showing how a specific financial instrument can be implemented based on the described facilities.

The chosen instrument is the interest-rate swap. As you surely know, it is a contract which consists in exchanging periodic cash flows. The net present value of the instrument is calculated by adding or subtracting the discounted cash-flow amounts depending on whether the cash flows are paid or received.

Not surprisingly, the swap is implemented8 as a new class deriving from Instrument. Its outline is shown in the following listing.

Listing: Swap class.

    class Swap : public Instrument {
      public:
        Swap(const vector<shared_ptr<CashFlow> >& firstLeg,
             const vector<shared_ptr<CashFlow> >& secondLeg,
             const Handle<YieldTermStructure>& termStructure);
        bool isExpired() const;
        Real firstLegBPS() const;
        Real secondLegBPS() const;
      protected:
        // methods
        void setupExpired() const;
        void performCalculations() const;
        // data members
        vector<shared_ptr<CashFlow> > firstLeg_, secondLeg_;
        Handle<YieldTermStructure> termStructure_;
        mutable Real firstLegBPS_, secondLegBPS_;
    };

It contains as data members the objects

needed for the calculations—namely, the cash flows on the first and

second leg and the yield term structure used to discount their

amounts—and two variables used to store additional

results. Furthermore, it declares methods implementing the

Instrument interface and others returning the swap-specific

results. The class diagram of Swap and the related classes is shown

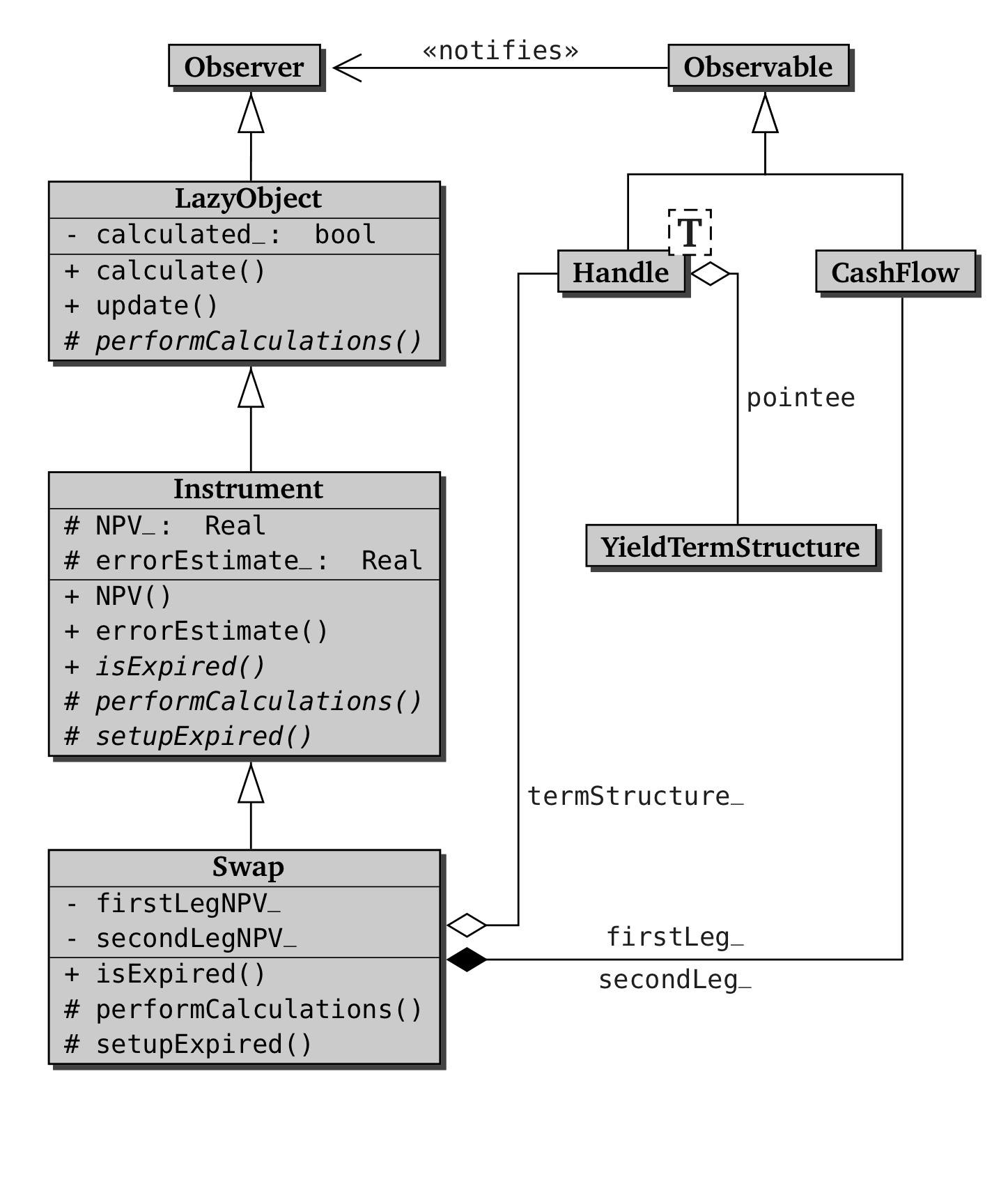

in the figure below.

Swap class.The fitting of the class to the Instrument framework is done in

three steps, the third being optional depending on the derived class;

the relevant methods are shown in the next listing.

Listing: Swap class.

    Swap::Swap(const vector<shared_ptr<CashFlow> >& firstLeg,
               const vector<shared_ptr<CashFlow> >& secondLeg,
               const Handle<YieldTermStructure>& termStructure)
    : firstLeg_(firstLeg), secondLeg_(secondLeg),
      termStructure_(termStructure) {
        registerWith(termStructure_);
        vector<shared_ptr<CashFlow> >::iterator i;
        for (i = firstLeg_.begin(); i != firstLeg_.end(); ++i)
            registerWith(*i);
        for (i = secondLeg_.begin(); i != secondLeg_.end(); ++i)
            registerWith(*i);
    }

    bool Swap::isExpired() const {
        Date settlement = termStructure_->referenceDate();
        vector<shared_ptr<CashFlow> >::const_iterator i;
        for (i = firstLeg_.begin(); i != firstLeg_.end(); ++i)
            if (!(*i)->hasOccurred(settlement))
                return false;
        for (i = secondLeg_.begin(); i != secondLeg_.end(); ++i)
            if (!(*i)->hasOccurred(settlement))
                return false;
        return true;
    }

    void Swap::setupExpired() const {
        Instrument::setupExpired();
        firstLegBPS_ = secondLegBPS_ = 0.0;
    }

    void Swap::performCalculations() const {
        NPV_ = - Cashflows::npv(firstLeg_, **termStructure_)
               + Cashflows::npv(secondLeg_, **termStructure_);
        errorEstimate_ = Null<Real>();
        firstLegBPS_ = - Cashflows::bps(firstLeg_, **termStructure_);
        secondLegBPS_ = Cashflows::bps(secondLeg_, **termStructure_);
    }

    Real Swap::firstLegBPS() const {
        calculate();
        return firstLegBPS_;
    }

    Real Swap::secondLegBPS() const {
        calculate();
        return secondLegBPS_;
    }

The first step is performed in the class constructor, which takes as arguments (and copies into the corresponding data members) the two sequences of cash flows to be exchanged and the yield term structure to be used for discounting their amounts. The step itself consists in registering the swap as an observer of both the cash flows and the term structure. As previously explained, this enables them to notify the swap and trigger its recalculation each time a change occurs.

The second step is the implementation of the required interface. The logic of the isExpired method is simple enough; its body loops over the stored cash flows checking their payment dates. As soon as it finds a payment which still has not occurred, it reports the swap as not expired. If none is found, the instrument has expired. In this case, the setupExpired method will be called. Its implementation calls the base-class one, thus taking care of the data members inherited from Instrument; it then sets to 0 the swap-specific results.

The last required method is performCalculations. The calculation is performed by calling two external functions from the Cashflows class.9 The first one, namely, npv, is a straightforward translation of the algorithm outlined above: it cycles on a sequence of cash flows adding the discounted amount of its future cash flows. We set the NPV_ variable to the difference of the results from the two legs. The second one, bps, calculates the basis-point sensitivity (BPS) of a sequence of cash flows. We call it once per leg and store the results in the corresponding data members. Since the result carries no numerical error, the errorEstimate_ variable is set to Null<Real>(), a specific floating-point value which is used as a sentinel value indicating an invalid number.10

The third and final step only needs to be performed if, as in this case, the class defines additional results. It consists in writing corresponding methods (here, firstLegBPS and secondLegBPS) which ensure that the calculations are (lazily) performed before returning the stored results.

The implementation is now complete. Having been written on top of the Instrument class, the Swap class will benefit from its code. Thus, it will automatically cache and recalculate results according to notifications from its inputs, even though no related code was written in Swap except for the registration calls.

2.1.4 Further developments

You might have noticed a shortcoming in my treatment of the previous example and of the Instrument class in general. Albeit generic, the Swap class we implemented cannot manage interest-rate swaps in which the two legs are paid in different currencies. A similar problem would arise if you wanted to add the values of two instruments whose values are not in the same currency; you would have to manually convert one of the values to the currency of the other before adding them together.

Such problems stem from a single weakness of the implementation: we used the Real type (i.e., a simple floating-point number) to represent the value of an instrument or a cash flow. Therefore, such results miss the currency information which is attached to them in the real world.

The weakness might be removed if we were to express such results by means of the Money class. Instances of this class contain currency information; moreover, depending on user settings, they are able to automatically perform conversion to a common currency upon addition or subtraction.
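The gist of attaching currency information to a value can be illustrated with a toy type. This is a sketch of the general idea only and makes no claim about the interface of the library's Money class: ToyMoney and convert are hypothetical names, a mismatch is rejected rather than converted automatically, and the exchange rate is supplied by the caller.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical currency-aware amount; it is not the library's Money class.
struct ToyMoney {
    double amount;
    std::string currency;
};

// Adding two amounts is only meaningful in a common currency. Here a
// mismatch is rejected; a fuller version might look up an exchange rate.
inline ToyMoney operator+(const ToyMoney& a, const ToyMoney& b) {
    if (a.currency != b.currency)
        throw std::invalid_argument("currency mismatch: " + a.currency +
                                    " vs " + b.currency);
    return ToyMoney{a.amount + b.amount, a.currency};
}

// Explicit conversion; rate is the number of units of the target currency
// per unit of the source currency, as supplied by the caller.
inline ToyMoney convert(const ToyMoney& m, const std::string& to,
                        double rate) {
    assert(rate > 0.0);
    return ToyMoney{m.amount * rate, to};
}
```

With plain floating-point numbers, the faulty sum of a euro and a dollar amount would go unnoticed; here it surfaces as an error unless one of the operands is converted first.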

However, this would be a major change, affecting a large part of the code base in a number of ways. Therefore, it will need some serious thinking before we tackle it (if we do tackle it at all).

Another (and more subtle) shortcoming is that the Swap class fails to distinguish explicitly between two components of the abstraction it represents. Namely, there is no clear separation between the data specifying the contract (the cash-flow specification) and the market data used to price the instrument (the current discount curve).

The solution is to store in the instrument only the first group of data (i.e., those that would be in its term sheet) and keep the market data elsewhere.11 The means to do this are the subject of the next section.

2.2 Pricing engines

We now turn to the second of the requirements I stated in the previous section. For any given instrument, it is not always the case that a unique pricing method exists; moreover, one might want to use multiple methods for different reasons. Let’s take the classic textbook example—the European equity option. One might want to price it by means of the analytic Black-Scholes formula in order to retrieve implied volatilities from market prices; by means of a stochastic volatility model in order to calibrate the latter and use it for more exotic options; by means of a finite-difference scheme in order to compare the results with the analytic ones and validate one’s finite-difference implementation; or by means of a Monte Carlo model in order to use the European option as a control variate for a more exotic one.

Therefore, we want it to be possible for a single instrument to be priced in different ways. Of course, it is not desirable to give different implementations of the performCalculations method, as this would force one to use different classes for a single instrument type. In our example, we would end up with a base EuropeanOption class from which AnalyticEuropeanOption, McEuropeanOption and others would be derived. This is wrong in at least two ways. On a conceptual level, it would introduce different entities when a single one is needed: a European option is a European option is a European option, as Gertrude Stein said. On a usability level, it would make it impossible to switch pricing methods at run-time.

The solution is to use the Strategy pattern, i.e., to let the instrument take an object encapsulating the computation to be performed. We called such an object a pricing engine. A given instrument would be able to take any one of a number of available engines (of course corresponding to the instrument type), pass the chosen engine the needed arguments, have it calculate the value of the instrument and any other desired quantities, and fetch the results. 

Therefore, the performCalculations method would be implemented roughly as follows:

    void SomeInstrument::performCalculations() const {
        NPV_ = engine_->calculate(arg1, arg2, ... , argN);
    }

where we assumed that a virtual calculate method is defined in the engine interface and implemented in the concrete engines.

Unfortunately, the above approach won’t work as such. The problem is, we want to implement the dispatching code just once, namely, in the Instrument class. However, that class doesn’t know the number and type of arguments; different derived classes are likely to have data members differing wildly in both number and type. The same goes for the returned results; for instance, an interest-rate swap might return fair values for its fixed rate and floating spread, while the ubiquitous European option might return any number of Greeks.

An interface passing explicit arguments to the engine through a method, as the one outlined above, would thus lead to undesirable consequences. Pricing engines for different instruments would have different interfaces, which would prevent us from defining a single base class; therefore, the code for calling the engine would have to be replicated in each instrument class. This way madness lies.

The solution we chose was that arguments and results be passed to and received from the engines by means of opaque structures aptly called arguments and results. Two structures derived from those and augmenting them with instrument-specific data will be stored in any pricing engine; an instrument will write and read such data in order to exchange information with the engine.

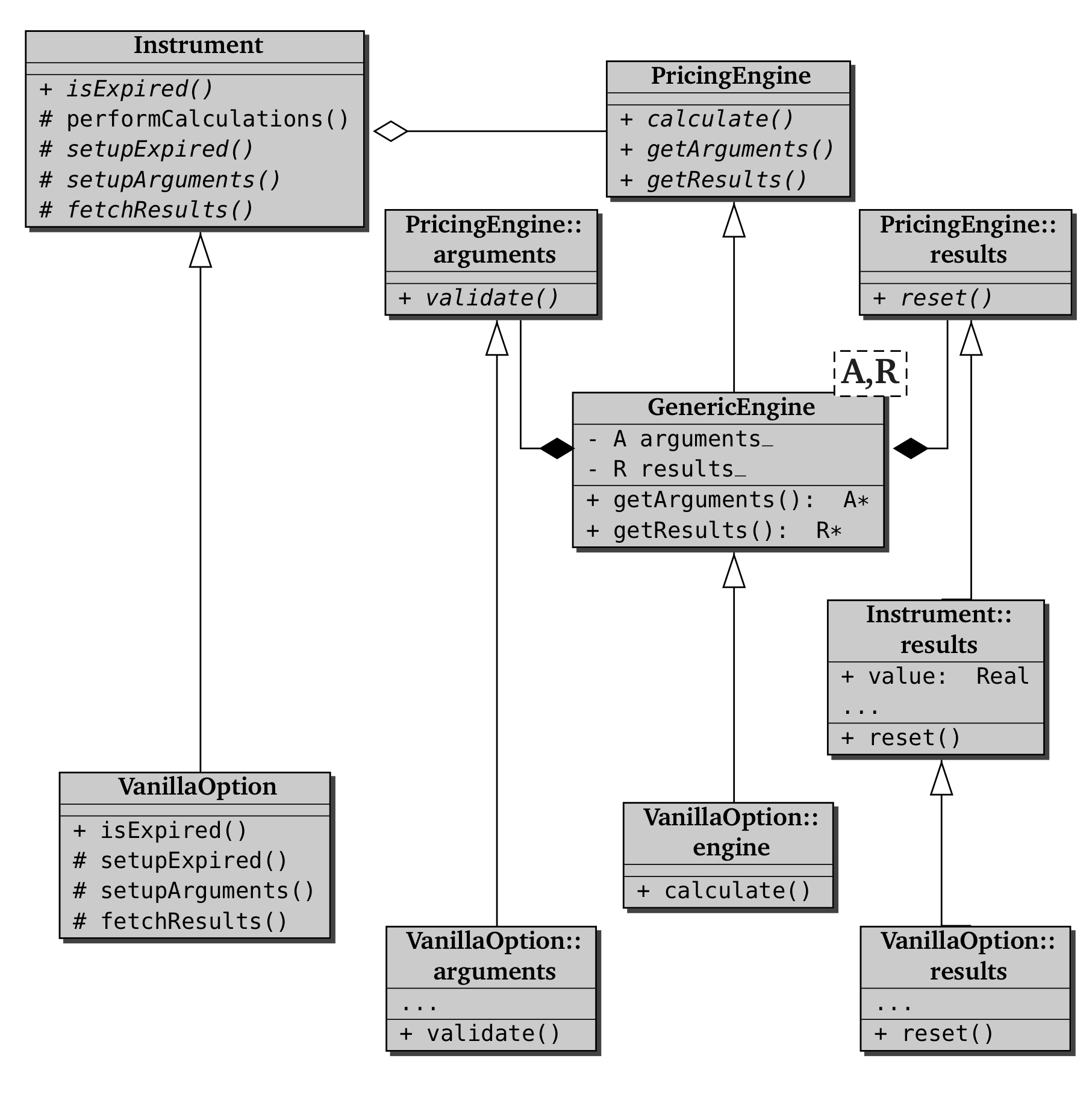

The listing below shows the interface of the resulting PricingEngine class, as well as its inner arguments and results classes and a helper GenericEngine class template. The latter implements most of the PricingEngine interface, leaving only the implementation of the calculate method to developers of specific engines. The arguments and results classes were given methods which ease their use as drop boxes for data: arguments::validate is to be called after input data are written to ensure that their values lie in valid ranges, while results::reset is to be called before the engine starts calculating in order to clean previous results.

Listing: PricingEngine and related classes.

    class PricingEngine : public Observable {
      public:
        class arguments;
        class results;
        virtual ~PricingEngine() {}
        virtual arguments* getArguments() const = 0;
        virtual const results* getResults() const = 0;
        virtual void reset() const = 0;
        virtual void calculate() const = 0;
    };

    class PricingEngine::arguments {
      public:
        virtual ~arguments() {}
        virtual void validate() const = 0;
    };

    class PricingEngine::results {
      public:
        virtual ~results() {}
        virtual void reset() = 0;
    };

    // ArgumentsType must inherit from arguments;
    // ResultsType from results.
    template<class ArgumentsType, class ResultsType>
    class GenericEngine : public PricingEngine {
      public:
        PricingEngine::arguments* getArguments() const {
            return &arguments_;
        }
        const PricingEngine::results* getResults() const {
            return &results_;
        }
        void reset() const { results_.reset(); }
      protected:
        mutable ArgumentsType arguments_;
        mutable ResultsType results_;
    };

Armed with our new classes, we can now write a generic performCalculations method. Besides the already mentioned Strategy pattern, we will use the Template Method pattern to allow any given instrument to fill the missing bits. The resulting implementation is shown in the next listing. Note that an inner class Instrument::results was defined; it inherits from PricingEngine::results and contains the results that have to be provided for any instrument.12

Listing: Instrument class.

    class Instrument : public LazyObject {
      public:
        class results;
        virtual void performCalculations() const {
            QL_REQUIRE(engine_, "null pricing engine");
            engine_->reset();
            setupArguments(engine_->getArguments());
            engine_->getArguments()->validate();
            engine_->calculate();
            fetchResults(engine_->getResults());
        }
        virtual void setupArguments(
                            PricingEngine::arguments*) const {
            QL_FAIL("setupArguments() not implemented");
        }
        virtual void fetchResults(
                          const PricingEngine::results* r) const {
            // the engine must return a structure derived
            // from Instrument::results
            const Instrument::results* results =
                dynamic_cast<const Instrument::results*>(r);
            QL_ENSURE(results != 0, "no results returned");
            NPV_ = results->value;
            errorEstimate_ = results->errorEstimate;
        }
        template <class T> T result(const string& tag) const;
      protected:
        shared_ptr<PricingEngine> engine_;
    };

    class Instrument::results
        : public virtual PricingEngine::results {
      public:
        results() { reset(); }
        void reset() {
            value = errorEstimate = Null<Real>();
        }
        Real value;
        Real errorEstimate;
    };

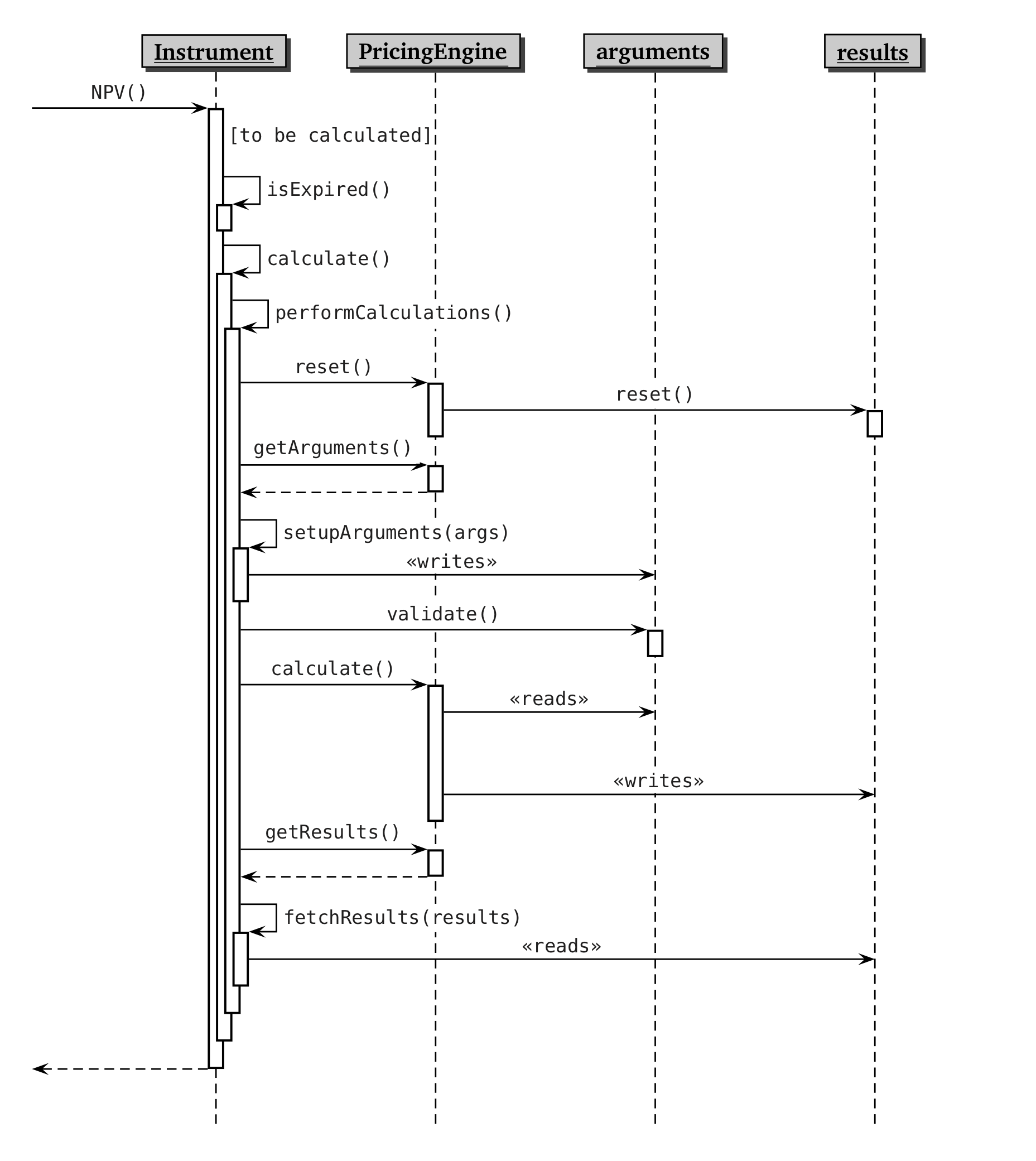

As for performCalculations, the actual work is split between a

number of collaborating classes—the instrument, the pricing engine,

and the arguments and results classes. The dynamics of such a

collaboration (described in the following paragraphs) might be best

understood with the help of the UML sequence diagram shown in

the first of the next two figures; the static relationship between the

classes (and a possible concrete instrument) is shown in

the second.

Figure: Instrument, PricingEngine, and related classes including a derived instrument class.

A call to the NPV method of the instrument eventually triggers

(if the instrument is not expired and the relevant quantities need to

be calculated) a call to its performCalculations method. Here

is where the interplay between instrument and pricing engine

begins. First of all, the instrument verifies that an engine is

available, aborting the calculation if this is not the case. If one is

found, the instrument prompts it to reset itself. The message is

forwarded to the instrument-specific result structure by means of its

reset method; after it executes, the structure is a clean slate

ready for writing the new results.

At this point, the Template Method pattern enters the scene. The

instrument asks the pricing engine for its argument structure, which

is returned as a pointer to arguments. The pointer is then

passed to the instrument’s setupArguments method, which acts as

the variable part in the pattern. Depending on the specific

instrument, such method verifies that the passed argument is of the

correct type and proceeds to fill its data members with the correct

values. Finally, the arguments are asked to perform any needed checks

on the newly-written values by calling the validate method.

The stage is now ready for the Strategy pattern. Its arguments set,

the chosen engine is asked to perform its specific calculations,

implemented in its calculate method. During the processing,

the engine will read the inputs it needs from its argument structure

and write the corresponding outputs into its results structure.

After the engine completes its work, the control returns to the

Instrument instance and the Template Method pattern continues

unfolding. The called method, fetchResults, must now ask the

engine for the results, downcast them to gain access to the contained

data, and copy such values into its own data members. The

Instrument class defines a default implementation which fetches

the results common to all instruments; derived classes might extend it

to read specific results.
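The sequence described in the last few paragraphs can be tried out in a minimal, self-contained sketch. The classes below are simplified stand-ins written for illustration, not QuantLib code: CashInstrument and DiscountingEngine are hypothetical names, exceptions replace the QL_REQUIRE and QL_ENSURE macros, and the caching provided by LazyObject is left out.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>

// Simplified stand-ins for the argument, result and engine interfaces.
struct Arguments {
    virtual ~Arguments() {}
    virtual void validate() const = 0;
};

struct Results {
    virtual ~Results() {}
    virtual void reset() = 0;
};

struct Engine {
    virtual ~Engine() {}
    virtual Arguments* getArguments() const = 0;
    virtual const Results* getResults() const = 0;
    virtual void reset() const = 0;
    virtual void calculate() const = 0;  // Strategy: varies by engine
};

// Hypothetical instrument data: a single cash amount.
struct CashArguments : Arguments {
    double amount;
    CashArguments() : amount(0.0) {}
    void validate() const {
        if (amount < 0.0)
            throw std::invalid_argument("negative amount");
    }
};

struct CashResults : Results {
    double value;
    CashResults() : value(0.0) {}
    void reset() { value = 0.0; }
};

class DiscountingEngine : public Engine {
  public:
    explicit DiscountingEngine(double discount) : discount_(discount) {}
    Arguments* getArguments() const { return &arguments_; }
    const Results* getResults() const { return &results_; }
    void reset() const { results_.reset(); }
    void calculate() const {
        results_.value = arguments_.amount * discount_;
    }
  private:
    double discount_;
    mutable CashArguments arguments_;
    mutable CashResults results_;
};

class CashInstrument {
  public:
    CashInstrument(double amount, const std::shared_ptr<Engine>& engine)
    : amount_(amount), engine_(engine), npv_(0.0) {}
    double NPV() const { performCalculations(); return npv_; }
  private:
    // Template Method: the fixed sequence of steps described in the text.
    void performCalculations() const {
        if (!engine_) throw std::runtime_error("null pricing engine");
        engine_->reset();
        setupArguments(engine_->getArguments());
        engine_->getArguments()->validate();
        engine_->calculate();
        fetchResults(engine_->getResults());
    }
    void setupArguments(Arguments* a) const {
        CashArguments* args = dynamic_cast<CashArguments*>(a);
        if (!args) throw std::runtime_error("wrong argument type");
        args->amount = amount_;
    }
    void fetchResults(const Results* r) const {
        const CashResults* res = dynamic_cast<const CashResults*>(r);
        if (!res) throw std::runtime_error("wrong result type");
        npv_ = res->value;
    }
    double amount_;
    std::shared_ptr<Engine> engine_;
    mutable double npv_;
};

// Usage: the instrument delegates the actual pricing to its engine.
inline void pricingDemo() {
    std::shared_ptr<Engine> engine =
        std::make_shared<DiscountingEngine>(0.5);
    CashInstrument instrument(100.0, engine);
    assert(instrument.NPV() == 50.0);
}
```

Swapping in a different engine changes the calculation without touching the instrument, while the order of the steps stays fixed in performCalculations.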

2.2.1 Example: plain-vanilla option

At this point, an example is necessary. A word of

warning, though: although a class exists in QuantLib which implements

plain-vanilla options—i.e., simple call and put equity options with

either European, American or Bermudan exercise—such class is

actually the lowermost leaf of a deep class hierarchy. Having the

Instrument class at its root, such hierarchy specializes it

first with an Option class, then again with a

OneAssetOption class generalizing options on a single

underlying, passing through another class or two until it finally

defines the VanillaOption class we are interested in.

There are good reasons for this; for instance, the code

in the OneAssetOption class can naturally be reused for, say,

Asian options, while that in the Option class lends itself for

reuse when implementing all kinds of basket options. Unfortunately,

this causes the code for pricing a plain option to be spread among all

the members of the described inheritance chain, which would not make

for an extremely clear example. Therefore, I will describe a simplified

VanillaOption class with the same implementation as the one in

the library, but inheriting directly from the Instrument class;

all code implemented in the intermediate classes will be

shown as if it were implemented in the example

class rather than inherited.

The listing below shows the interface of our

vanilla-option class. It declares the required methods from the

Instrument interface, as well as accessors for additional

results, namely, the greeks of the options; as pointed out in the

previous section, the corresponding data members are declared as

mutable so that their values can be set in the logically constant

calculate method.

Listing: interface of the VanillaOption class.

    class VanillaOption : public Instrument {
      public:
        // accessory classes
        class arguments;
        class results;
        class engine;
        // constructor
        VanillaOption(const shared_ptr<StrikedTypePayoff>&,
                      const shared_ptr<Exercise>&);
        // implementation of instrument methods
        bool isExpired() const;
        void setupArguments(PricingEngine::arguments*) const;
        void fetchResults(const PricingEngine::results*) const;
        // accessors for option-specific results
        Real delta() const;
        Real gamma() const;
        Real theta() const;
        // ...more greeks
      protected:
        void setupExpired() const;
        // option data
        shared_ptr<Payoff> payoff_;
        shared_ptr<Exercise> exercise_;
        // specific results
        mutable Real delta_;
        mutable Real gamma_;
        mutable Real theta_;
        // ...more
    };

Besides its own data and methods, VanillaOption declares a

number of accessory classes: that is, the specific argument and result

structures and a base pricing engine. They are defined as

inner classes to highlight the relationship between them and the

option class; their interface is shown in the next listing.

Listing: interface of the VanillaOption inner classes.

    class VanillaOption::arguments
        : public PricingEngine::arguments {
      public:
        // constructor
        arguments();
        void validate() const;
        shared_ptr<Payoff> payoff;
        shared_ptr<Exercise> exercise;
    };

    class Greeks : public virtual PricingEngine::results {
      public:
        Greeks();
        Real delta, gamma;
        Real theta;
        Real vega;
        Real rho, dividendRho;
    };

    class VanillaOption::results : public Instrument::results,
                                   public Greeks {
      public:
        void reset();
    };

    class VanillaOption::engine
        : public GenericEngine<VanillaOption::arguments,
                               VanillaOption::results> {};

Two comments can be made on such accessory classes. The first is that,

making an exception to what I said in my introduction to the example,

I didn’t declare all data members in the results class. This

was done in order to point out an implementation detail. One might

want to define structures holding a few related and commonly used

results; such structures can then be reused by means of inheritance,

as exemplified by the Greeks structure that is here composed

with Instrument::results to obtain the final structure. In

this case, virtual inheritance from PricingEngine::results must

be used to avoid the infamous inheritance diamond (see, for instance,

Stroustrup, 2013; the name is in the index).
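To see what virtual inheritance buys us here, consider the following stripped-down sketch. The class names are hypothetical stand-ins for PricingEngine::results, Instrument::results, Greeks, and the composed result class; only the inheritance structure mirrors the text.

```cpp
#include <cassert>

// Stand-in for the common base of all result structures.
struct BaseResults {
    virtual ~BaseResults() {}
    virtual void reset() = 0;
};

struct ValueResults : public virtual BaseResults {
    double value;
    ValueResults() : value(0.0) {}
    void reset() { value = 0.0; }
};

struct GreekResults : public virtual BaseResults {
    double delta, gamma;
    GreekResults() : delta(0.0), gamma(0.0) {}
    void reset() { delta = gamma = 0.0; }
};

// Both parents share a single BaseResults subobject thanks to virtual
// inheritance, so the conversion to BaseResults* is unambiguous.
struct OptionResults : public ValueResults, public GreekResults {
    void reset() {
        ValueResults::reset();
        GreekResults::reset();
    }
};

inline void diamondDemo() {
    OptionResults r;
    r.value = 1.0;
    r.delta = 0.5;
    BaseResults* base = &r;  // would be ambiguous without "virtual"
    base->reset();           // dispatches to OptionResults::reset
    assert(r.value == 0.0 && r.delta == 0.0);
    // a pointer to the base can still be cast to either branch
    assert(dynamic_cast<GreekResults*>(base) != 0);
    assert(dynamic_cast<ValueResults*>(base) != 0);
}
```

Without the virtual keyword, OptionResults would contain two separate BaseResults subobjects and the assignment to base would not compile.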

The second comment is that, as shown, it is sufficient to inherit from

the class template GenericEngine (instantiated with the right

argument and result types) to provide a base class for

instrument-specific pricing engines. We will see that derived classes

only need to implement their calculate method.

We now turn to the implementation of the VanillaOption class,

shown in the following listing.

Listing: implementation of the VanillaOption class.

    VanillaOption::VanillaOption(
        const shared_ptr<StrikedTypePayoff>& payoff,
        const shared_ptr<Exercise>& exercise)
    : payoff_(payoff), exercise_(exercise) {}

    bool VanillaOption::isExpired() const {
        Date today = Settings::instance().evaluationDate();
        return exercise_->lastDate() < today;
    }

    void VanillaOption::setupExpired() const {
        Instrument::setupExpired();
        delta_ = gamma_ = theta_ = ... = 0.0;
    }

    void VanillaOption::setupArguments(
                           PricingEngine::arguments* args) const {
        VanillaOption::arguments* arguments =
            dynamic_cast<VanillaOption::arguments*>(args);
        QL_REQUIRE(arguments != 0, "wrong argument type");
        arguments->exercise = exercise_;
        arguments->payoff = payoff_;
    }

    void VanillaOption::fetchResults(
                          const PricingEngine::results* r) const {
        Instrument::fetchResults(r);
        const VanillaOption::results* results =
            dynamic_cast<const VanillaOption::results*>(r);
        QL_ENSURE(results != 0, "wrong result type");
        delta_ = results->delta;
        ... // other Greeks
    }

    Real VanillaOption::delta() const {
        calculate();
        QL_ENSURE(delta_ != Null<Real>(), "delta not given");
        return delta_;
    }

Its constructor takes a few objects defining the instrument. Most of them will be described in later chapters or in appendix A. For the time being, suffice to say that the payoff contains the strike and type (i.e., call or put) of the option, and the exercise contains information on the exercise dates and variety (i.e., European, American, or Bermudan). The passed arguments are stored in the corresponding data members. Also, note that they do not include market data; those will be passed elsewhere.

The methods related to expiration are straightforward; isExpired

checks whether the latest exercise date has passed, while

setupExpired calls the base-class implementation and sets the

instrument-specific data to 0.

The setupArguments and fetchResults methods are a bit

more interesting. The former starts by downcasting the generic

argument pointer to the actual type required, raising an

exception if another type was passed; it then turns to the actual

work. In some cases, the data members are just copied verbatim into

the corresponding argument slots. However, it might be the case that

the same calculations (say, converting dates into times) will be

needed by a number of engines; setupArguments provides a place

to write them just once.

The fetchResults method is the dual of

setupArguments. It also starts by downcasting the passed

results pointer; after verifying its actual type, it

copies the results into its own data members.

Any method that returns additional results, such as delta, will do

as NPV does: it will call calculate, check that the corresponding

result was cached (because any given engine might or might not be able

to calculate it) and return it.

The above implementation is everything we need to have a working instrument (working, that is, once it is given an engine which will perform the required calculations). Such an engine is sketched in the listing below and implements the analytic Black-Scholes-Merton formula for European options.

Listing: sketch of an engine for the VanillaOption class.

    class AnalyticEuropeanEngine
        : public VanillaOption::engine {
      public:
        AnalyticEuropeanEngine(
            const shared_ptr<GeneralizedBlackScholesProcess>&
                                                     process)
        : process_(process) {
            registerWith(process);
        }
        void calculate() const {
            QL_REQUIRE(
                arguments_.exercise->type() == Exercise::European,
                "not an European option");
            shared_ptr<PlainVanillaPayoff> payoff =
                dynamic_pointer_cast<PlainVanillaPayoff>(
                                            arguments_.payoff);
            QL_REQUIRE(process_, "Black-Scholes process needed");
            ... // other requirements
            Real spot = process_->stateVariable()->value();
            ... // other needed quantities
            BlackCalculator black(payoff, forwardPrice,
                                  stdDev, discount);
            results_.value = black.value();
            results_.delta = black.delta(spot);
            ... // other greeks
        }
      private:
        shared_ptr<GeneralizedBlackScholesProcess> process_;
    };

Its constructor takes (and registers itself with) a Black-Scholes

stochastic process that contains market-data information about the

underlying including present value, risk-free rate, dividend yield,

and volatility. Once again, the actual calculations are hidden behind

the interface of another class, namely, the BlackCalculator

class. However, the code has enough detail to show a few relevant

features.

The method starts by verifying a few preconditions. This might come as

a surprise, since the arguments of the calculations were already

validated by the time the calculate method is called. However,

any given engine can have further requirements to be fulfilled before

its calculations can be performed. In the case of our engine, the

requirements are that the option is European and that the payoff is a

plain call/put one, which also means that the payoff will be cast down

to the needed class.13

In the middle section of the method, the engine extracts from the passed arguments any information not already presented in digested form. Shown here is the retrieval of the spot price of the underlying; other quantities needed by the engine, e.g., the forward price of the underlying and the risk-free discount factor at maturity, are also extracted.14

Finally, the calculation is performed and the results are stored in

the corresponding slots of the results structure. This concludes both

the calculate method and the example.

A. Odds and ends

A number of basic issues with the usage of QuantLib have been glossed over in the previous chapters, in order not to undermine their readability (if any) with an accumulation of technical details; as pointed out by Douglas Adams in the fourth book of his Hitchhiker trilogy,15

[An excessive amount of detail] is guff. It doesn’t advance the action. It makes for nice fat books such as the American market thrives on, but it doesn’t actually get you anywhere.

This appendix provides a quick reference to some such issues. It is not meant to be exhaustive nor systematic;16 if you need that kind of documentation, check the QuantLib reference manual, available at the QuantLib web site.

Basic types

The library interfaces don’t use built-in types; instead, a number of

typedefs are provided such as Time, Rate,

Integer, or Size. They are all mapped to basic types

(we talked about using full-featured types, possibly with range

checking, but we dumped the idea). Furthermore, all floating-point

types are defined as Real, which in turn is defined as

double. This makes it possible to change all of them

consistently by just changing Real.

In principle, this would allow one to choose the desired level of

accuracy; but to this, the test-suite answers “Fiddlesticks!” since

it shows a few failures when Real is defined as float or

long double. The value of the typedefs is really in making the

code more clear—and in allowing dimensional analysis for those who,

like me, were used to it in a previous life as a physicist; for

instance, expressions such as exp(r) or r+s*t can be

immediately flagged as fishy if they are preceded by Rate r,

Spread s, and Time t.

Of course, all those fancy types are only aliases to double and

the compiler doesn’t really distinguish between them. It would be nice

if they had stronger typing; so that, for instance, one could overload

a method based on whether it is passed a price or a volatility.

One possibility would be the BOOST_STRONG_TYPEDEF macro, which is one

of the bazillion utilities provided by Boost. It is used as, say,

BOOST_STRONG_TYPEDEF(double, Time)

BOOST_STRONG_TYPEDEF(double, Rate)

and creates a corresponding proper class with appropriate conversions to and from the underlying type. This would allow overloading methods, but has the drawback that not all conversions are implicit: building an instance from the underlying type requires an explicit conversion. This would break backward compatibility and make things generally awkward.17

Also, the classes defined by the macro overload all operators: you can happily add a time to a rate, even though it doesn’t make sense (yes, dimensional analysis again). It would be nice if the type system prevented this from compiling, while still allowing one, for instance, to add a spread to a rate yielding another rate or to multiply a rate by a time yielding a pure number.

How to do this in a generic way, and ideally with no run-time costs, was shown first by Barton and Nackman, 1995; a variation of their idea is implemented in the Boost.Units library, and a simpler one was implemented once by yours truly while still working in Physics.18 However, that might be overkill here; we don’t have to deal with all possible combinations of length, mass, time and so on.

The ideal compromise for a future library might be to implement wrapper classes (à la Boost strong typedef) and to define explicitly which operators are allowed for which types. As usual, we’re not the first ones to have this problem: the idea has been floating around for a while, and at some point a proposal was put forward (Brown, 2013) to add to C++ a new feature, called opaque typedefs, which would have made it easier to define this kind of type.
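As a rough idea of what such wrapper classes might look like, here is a small sketch. It is not part of QuantLib: the Quantity template and its tag types are hypothetical, and only two operators are defined, namely the ones mentioned above as meaningful.

```cpp
#include <cassert>
#include <cmath>
#include <string>

// Hypothetical strong types: one class template, one distinct type per tag.
template <class Tag>
class Quantity {
  public:
    explicit Quantity(double v) : v_(v) {}
    double value() const { return v_; }
  private:
    double v_;
};

struct RateTag {};
struct SpreadTag {};
struct TimeTag {};
typedef Quantity<RateTag> Rate;
typedef Quantity<SpreadTag> Spread;
typedef Quantity<TimeTag> Time;

// Only the combinations that make dimensional sense are defined.
inline Rate operator+(const Rate& r, const Spread& s) {
    return Rate(r.value() + s.value());
}
inline double operator*(const Rate& r, const Time& t) {
    return r.value() * t.value();  // a pure number
}

// Overloading on the type works, since Rate and Time are different classes.
inline std::string describe(const Rate&) { return "rate"; }
inline std::string describe(const Time&) { return "time"; }

inline void strongTypesDemo() {
    Rate r(0.03);
    Spread s(0.01);
    Time t(2.0);
    Rate shifted = r + s;
    assert(std::fabs(shifted.value() - 0.04) < 1e-12);
    assert(std::fabs(r * t - 0.06) < 1e-12);
    assert(describe(r) == "rate" && describe(t) == "time");
    // Rate bad = r + t;  // would not compile: no operator+(Rate, Time)
    // Time u = 2.0;      // would not compile: the constructor is explicit
}
```

The last commented-out line also shows the price to pay: existing code that initializes a Time from a plain number would have to be changed.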

A final note: among these types, there is at least one which is not

determined on its own (like Rate or Time) but depends on

other types. The volatility of a price and the volatility of a rate

have different dimensions, and thus should have different types.

In short, Volatility should be a template type.

Date calculations

Date calculations are among the basic tools of quantitative finance. As can be expected, QuantLib provides a number of facilities for this task; I briefly describe some of them in the following subsections.

Dates and periods

An instance of the Date class represents a specific day such as

November 15th, 2014. This class provides a number of methods for

retrieving basic information such as the weekday, the day of the

month, or the year; static information such as the minimum and maximum

date allowed (at this time, January 1st, 1901 and December 31st, 2199,

respectively) or whether or not a given year is a leap year; or other

information such as a date’s Excel-compatible serial number or whether

or not a given date is the last date of the month. The complete list

of available methods and their interface is documented in the

reference manual. No time information is included (unless you enable

an experimental compilation switch).

Capitalizing on C++ features, the Date class also overloads a

number of operators so that date algebra can be written in a natural

way; for example, one can write expressions such as ++d, which

advances the date d by one day; d + 2, which yields the

date two days after the given date; d2 - d1, which yields the

number of days between the two dates; d - 3*Weeks, which yields

the date three weeks before the given date (and incidentally, features

a member of the available TimeUnit enumeration, the other

members being Days, Months, and Years); or

d1 < d2, which yields true if the first date is earlier

than the second one. The algebra implemented in the Date class

works on calendar days; neither bank holidays nor business-day

conventions are taken into account.

The Period class models lengths of time such as two days, three

weeks, or five years by storing a TimeUnit and an integer. It

provides a limited algebra and a partial ordering. For the

non-mathematically inclined, this means that two Period instances

might or might not be compared to see which is the shorter; while it

is clear that, say, 11 months are less than one year, it is not

possible to determine whether 60 days are more or less than two months

without knowing which two months. When the comparison cannot

be decided, an exception is thrown.

And of course, even when the comparison seems obvious, we managed to sneak in a few surprises. For instance, the comparison

Period(7,Days) == Period(1,Weeks)

returns true. It seems correct, right? Hold that thought.
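The partial ordering described above can be sketched by bounding the number of calendar days that each period can span. The code below is a toy written for illustration, assuming non-negative lengths; the bounds used for months and years, the helper names, and the exception type are my assumptions here, and the rules coded in the library may differ in the details.

```cpp
#include <cassert>
#include <stdexcept>
#include <utility>

enum TimeUnit { Days, Weeks, Months, Years };

struct Period {
    int length;
    TimeUnit units;
    Period(int n, TimeUnit u) : length(n), units(u) {}
};

// Smallest and largest number of calendar days the period can span.
inline std::pair<int,int> daysMinMax(const Period& p) {
    switch (p.units) {
      case Days:   return std::make_pair(p.length, p.length);
      case Weeks:  return std::make_pair(7*p.length, 7*p.length);
      case Months: return std::make_pair(28*p.length, 31*p.length);
      case Years:  return std::make_pair(365*p.length, 366*p.length);
    }
    throw std::logic_error("unknown time unit");
}

inline bool isMonthBased(TimeUnit u) { return u == Months || u == Years; }

inline int inMonths(const Period& p) {
    return p.units == Years ? 12*p.length : p.length;
}

// Returns true if p1 is certainly shorter than p2, false if it
// certainly is not, and throws when the answer depends on the dates.
inline bool shorter(const Period& p1, const Period& p2) {
    // months and years can always be compared exactly
    if (isMonthBased(p1.units) && isMonthBased(p2.units))
        return inMonths(p1) < inMonths(p2);
    std::pair<int,int> a = daysMinMax(p1), b = daysMinMax(p2);
    if (a.second < b.first) return true;
    if (a.first >= b.second) return false;
    throw std::runtime_error("undecidable comparison");
}

inline void periodDemo() {
    assert(shorter(Period(11, Months), Period(1, Years)));
    assert(!shorter(Period(7, Days), Period(1, Weeks)));  // same length
    bool undecided = false;
    try {
        shorter(Period(60, Days), Period(2, Months));
    } catch (const std::runtime_error&) {
        undecided = true;
    }
    assert(undecided);  // two months can last from 56 to 62 days here
}
```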

Calendars

Holidays and business days are the domain of the Calendar

class. Several derived classes exist which define holidays for a

number of markets; the base class defines simple methods for

determining whether or not a date corresponds to a holiday or a

business day, as well as more complex ones for performing tasks such

as adjusting a holiday to the nearest business day (where “nearest”

can be defined according to a number of business-day conventions,

listed in the BusinessDayConvention enumeration) or advancing a

date by a given period or number of business days.

It might be interesting to see how the behavior of a calendar changes

depending on the market it describes. One way would have been to

store in the Calendar instance the list of holidays for the

corresponding market; however, for maintainability we wanted to code

the actual calendar rules (such as “the fourth Thursday in November”

or “December 25th of every year”) rather than enumerating the

resulting dates for a couple of centuries. Another obvious way would

have been to use polymorphism and the Template Method pattern; derived

calendars would override the isBusinessDay method, from which

all others could be implemented. This is fine, but it has the

shortcoming that calendars would need to be passed and stored in

shared_ptrs. The class is conceptually simple, though, and is

used frequently enough that we wanted users to instantiate it and pass

it around more easily—that is, without the added verbosity of

dynamic allocation.

The final solution was the one shown in the listing below. It is a variation of the pimpl idiom, also reminiscent of the Strategy or Bridge patterns; these days, the cool kids might call it type erasure, too (Becker, 2007).

Listing: outline of the Calendar class.

    class Calendar {
      protected:
        class Impl {
          public:
            virtual ~Impl() {}
            virtual bool isBusinessDay(const Date&) const = 0;
        };
        shared_ptr<Impl> impl_;
      public:
        bool isBusinessDay(const Date& d) const {
            return impl_->isBusinessDay(d);
        }
        bool isHoliday(const Date& d) const {
            return !isBusinessDay(d);
        }
        Date adjust(const Date& d,
                    BusinessDayConvention c = Following) const {
            // uses isBusinessDay() plus some logic
        }
        Date advance(const Date& d,
                     const Period& period,
                     BusinessDayConvention c = Following,
                     bool endOfMonth = false) const {
            // uses isBusinessDay() and possibly adjust()
        }
        // more methods
    };

Long story short: Calendar declares a polymorphic inner class Impl

to which the implementation of the business-day rules is delegated and

stores a pointer to one of its instances. The non-virtual

isBusinessDay method of the Calendar class forwards to the

corresponding method in Calendar::Impl; following somewhat the

Template Method pattern, the other Calendar methods are also

non-virtual and implemented (directly or indirectly) in terms of

isBusinessDay.19

Coming back to this after all these years, though, I’m thinking that

we might have implemented all public methods in terms of

isHoliday instead. Why? Because all calendars are defined

by stating which days are holidays (e.g., Christmas on December 25th

in a lot of places, or MLK Day on the third Monday in January in the

United States). Having isBusinessDay in the Impl

interface instead forces all derived classes to negate that logic

instead of implementing it directly. It’s like having them implement

isNotHoliday.

Derived calendar classes can provide specialized behavior by defining

an inner class derived from Calendar::Impl; their constructor

will create a shared pointer to an Impl instance and store it

in the impl_ data member of the base class. The resulting

calendar can be safely copied by any class that needs to store a

Calendar instance; even when sliced, it will maintain the

correct behavior thanks to the contained pointer to the polymorphic

Impl class. Finally, we can note that instances of the same

derived calendar class can share the same Impl instance. This

can be seen as an implementation of the Flyweight pattern—bringing

the grand total to about two and a half patterns for one deceptively

simple class.
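The mechanism is easier to appreciate when you can run it. The sketch below is a toy, not the library code: a date is reduced to a non-negative day number with day 0 taken to be a Monday, only the Following adjustment is implemented, and the WeekendsOnly and WithHoliday classes are hypothetical.

```cpp
#include <cassert>
#include <memory>

// Toy date: a non-negative day number; day 0 is assumed to be a Monday.
typedef int Day;

class Calendar {
  protected:
    class Impl {
      public:
        virtual ~Impl() {}
        virtual bool isBusinessDay(Day d) const = 0;
    };
    std::shared_ptr<Impl> impl_;
  public:
    bool isBusinessDay(Day d) const { return impl_->isBusinessDay(d); }
    bool isHoliday(Day d) const { return !isBusinessDay(d); }
    // "Following" adjustment only: roll forward to a business day.
    Day adjust(Day d) const {
        while (isHoliday(d)) ++d;
        return d;
    }
};

class WeekendsOnly : public Calendar {
    class Impl : public Calendar::Impl {
      public:
        bool isBusinessDay(Day d) const { return d % 7 < 5; }
    };
  public:
    WeekendsOnly() {
        // all instances share one implementation (Flyweight-like)
        static std::shared_ptr<Calendar::Impl> impl(
                                          new WeekendsOnly::Impl);
        impl_ = impl;
    }
};

// Weekends plus a single fixed holiday.
class WithHoliday : public Calendar {
    class Impl : public Calendar::Impl {
      public:
        explicit Impl(Day holiday) : holiday_(holiday) {}
        bool isBusinessDay(Day d) const {
            return d % 7 < 5 && d != holiday_;
        }
      private:
        Day holiday_;
    };
  public:
    explicit WithHoliday(Day holiday) {
        impl_ = std::shared_ptr<Calendar::Impl>(
                                  new WithHoliday::Impl(holiday));
    }
};

inline void calendarDemo() {
    Calendar c = WeekendsOnly();  // sliced to the base class...
    assert(c.isBusinessDay(4));   // ...but Friday is a business day
    assert(c.isHoliday(5));       // and Saturday is a holiday
    assert(c.adjust(5) == 7);     // rolls to the following Monday
    Calendar h = WithHoliday(7);
    assert(h.adjust(5) == 8);     // Monday 7 is a holiday here
}
```

Note that calendarDemo only ever stores plain Calendar objects by value; the derived classes are used just to plug in the right Impl instance.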

Enough with the implementation of Calendar, and back to its

behavior. Here’s the surprise I mentioned in the previous section.

Remember Period(1,Weeks) being equal to Period(7,Days)?

Except that for the advance method of a calendar, 7 days means 7

business days. Thus, we have a situation in which two periods

p1 and p2 are equal (that is, p1 == p2 returns

true) but calendar.advance(p1) differs from

calendar.advance(p2). Yay, us.

I’m not sure I have a good idea for a solution here. Since we want

backwards compatibility, the current uses of Days must keep

working in the same way; so it’s not possible, say, to start

interpreting calendar.advance(7, Days) as 7 calendar days.

One way out might be to keep the current situation, introduce two new

enumeration cases BusinessDays and CalendarDays that

remove the ambiguity, and deprecate Days. Another is to just

remove the inconsistency by dictating that a 7-day period does not, in

fact, equal one week; I’m not overly happy about this one.

As I said, no obvious solution. If you have any other suggestions, I’m all ears.
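For the skeptics, the discrepancy can be reproduced with a few lines of toy code. As before, this is a sketch under simplifying assumptions and not the library implementation: dates are non-negative day numbers, day 0 is a Monday, weekends are the only holidays, and the two free functions are hypothetical stand-ins for what advance does with Days and Weeks.

```cpp
#include <cassert>

// Toy model: day 0 is a Monday; Saturdays and Sundays are holidays.
inline bool isBusinessDay(int d) { return d % 7 < 5; }

// Advance by n business days (n >= 0), skipping weekends.
inline int advanceBusinessDays(int d, int n) {
    while (n > 0) {
        ++d;
        if (isBusinessDay(d)) --n;
    }
    return d;
}

// Advance by whole weeks in calendar time, then roll forward
// to a business day if needed.
inline int advanceWeeks(int d, int n) {
    d += 7 * n;
    while (!isBusinessDay(d)) ++d;
    return d;
}

inline void advanceDemo() {
    int monday = 0;
    // one week later: the following Monday
    assert(advanceWeeks(monday, 1) == 7);
    // seven business days later: Wednesday of the following week
    assert(advanceBusinessDays(monday, 7) == 9);
}
```

Two periods that compare as equal thus lead to dates that are two days apart.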

Day-count conventions

The DayCounter class provides the means to calculate the

distance between two dates, either as a number of days or a fraction

of a year, according to different conventions. Derived classes such

as Actual360 or Thirty360 exist; they implement

polymorphic behavior by means of the same technique used by the

Calendar class and described in the previous section.

Unfortunately, the interface has a bit of a rough edge. Instead of

just taking two dates, the yearFraction method is declared as

    Time yearFraction(const Date&,
                      const Date&,
                      const Date& refPeriodStart = Date(),
                      const Date& refPeriodEnd = Date()) const;

The two optional dates are required by one specific day-count convention (namely, the ISMA actual/actual convention) that requires a reference period to be specified besides the two input dates. To keep a common interface, we had to add the two additional dates to the signature of the method for all day counters (most of which happily ignore them). This is not the only mischief caused by this day counter; you’ll see another in the next section.

Schedules

The Schedule class, shown in the next listing, is

used to generate sequences of coupon dates.

Listing: interface of the Schedule class.

    class Schedule {
      public:
        Schedule(const Date& effectiveDate,
                 const Date& terminationDate,
                 const Period& tenor,
                 const Calendar& calendar,
                 BusinessDayConvention convention,
                 BusinessDayConvention terminationDateConvention,
                 DateGeneration::Rule rule,
                 bool endOfMonth,
                 const Date& firstDate = Date(),
                 const Date& nextToLastDate = Date());
        Schedule(const std::vector<Date>&,
                 const Calendar& calendar = NullCalendar(),
                 BusinessDayConvention convention = Unadjusted,
                 ... /* other optional parameters */);
        Size size() const;
        bool empty() const;
        const Date& operator[](Size i) const;
        const Date& at(Size i) const;
        const_iterator begin() const;
        const_iterator end() const;
        const Calendar& calendar() const;
        const Period& tenor() const;
        bool isRegular(Size i) const;
        Date previousDate(const Date& refDate) const;
        Date nextDate(const Date& refDate) const;
        ... // other inspectors and utilities
    };

Following practice and ISDA conventions, this class has to accept a lot of parameters; you can see them as the argument list of its constructor. (Oh, and you’ll forgive me if I don’t go and explain all of them. I’m sure you can guess what they mean.) They’re probably too many, which is why the library uses the Named Parameter Idiom (already described in chapter 4) to provide a less unwieldy factory class. With its help, a schedule can be instantiated as

    Schedule s = MakeSchedule().from(startDate).to(endDate)
                 .withFrequency(Semiannual)
                 .withCalendar(TARGET())
                 .withNextToLastDate(stubDate)
                 .backwards();

Other methods include, on the one hand, inspectors for the stored data;

and on the other hand, methods to give the class a sequence interface,

e.g., size, operator[], begin, and end.
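In case the Named Parameter Idiom mentioned above is new to you, its mechanics fit in a few lines. The following is a generic sketch and not the implementation of MakeSchedule: ToySchedule and MakeToySchedule are hypothetical, and dates and calendars are replaced by integers and strings to keep it self-contained.

```cpp
#include <cassert>
#include <string>

// Hypothetical, much-reduced schedule description.
struct ToySchedule {
    int start;
    int end;
    int paymentsPerYear;
    std::string calendar;
    bool backwards;
};

// Every setter returns *this, so that calls can be chained; the
// conversion operator yields the finished object at the end.
class MakeToySchedule {
  public:
    MakeToySchedule() {
        s_.start = 0;
        s_.end = 0;
        s_.paymentsPerYear = 1;
        s_.calendar = "none";
        s_.backwards = false;
    }
    MakeToySchedule& from(int d) { s_.start = d; return *this; }
    MakeToySchedule& to(int d) { s_.end = d; return *this; }
    MakeToySchedule& withFrequency(int n) {
        s_.paymentsPerYear = n;
        return *this;
    }
    MakeToySchedule& withCalendar(const std::string& c) {
        s_.calendar = c;
        return *this;
    }
    MakeToySchedule& backwards() { s_.backwards = true; return *this; }
    operator ToySchedule() const { return s_; }
  private:
    ToySchedule s_;
};

inline ToySchedule builderDemo() {
    ToySchedule s = MakeToySchedule().from(0).to(720)
                    .withFrequency(2)
                    .backwards();
    assert(s.end == 720 && s.paymentsPerYear == 2 && s.backwards);
    assert(s.calendar == "none");  // untouched parameters keep defaults
    return s;
}
```

The caller names only the parameters that differ from the defaults, in any order, which is the whole point of the idiom.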

The Schedule class has a second constructor, taking a

precomputed vector of dates and a number of optional parameters, which

might be passed to help the library use the resulting schedule

correctly. Such information includes the date generation rule or

whether the dates are aligned to the end of the month, but mainly,

you’ll probably need to pass the tenor and an isRegular

vector of bools, about which I need to spend a couple of words.

What does “regular” mean? The boolean isRegular(i) doesn’t refer to

the i-th date, but to the i-th interval; that is, the one between

the i-th and (i+1)-th dates. When a schedule is built based on a

tenor, most intervals correspond to the passed tenor (and thus are

regular) but the first and last intervals might be shorter or longer

depending on whether we passed an explicit first or next-to-last date.

We might do this, e.g., when we want to specify a short first coupon.
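The bookkeeping can be illustrated with a toy function. This is only a sketch under strong assumptions: dates are day numbers, the tenor is a fixed number of days, intervals are indexed from 0, and regularIntervals is a hypothetical helper rather than anything provided by the library.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Interval i runs from dates[i] to dates[i+1], so there is one flag
// fewer than there are dates.
inline std::vector<bool> regularIntervals(const std::vector<int>& dates,
                                          int tenorInDays) {
    std::vector<bool> regular;
    for (std::size_t i = 0; i + 1 < dates.size(); ++i)
        regular.push_back(dates[i+1] - dates[i] == tenorInDays);
    return regular;
}

inline void regularDemo() {
    // a short first coupon of 10 days, then three 30-day periods
    int raw[] = { 0, 10, 40, 70, 100 };
    std::vector<int> dates(raw, raw + 5);
    std::vector<bool> regular = regularIntervals(dates, 30);
    assert(regular.size() == 4);
    assert(!regular[0]);
    assert(regular[1] && regular[2] && regular[3]);
}
```

Without the tenor, the function would have nothing to compare the intervals against.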

If we build the schedule with a precomputed set of dates, we don’t have the tenor information and we can’t tell if a given interval is regular unless those pieces of information are passed to the schedule.20 In turn, this means that using that schedule to build a sequence of coupons (by passing it, say, to the constructor of a fixed-rate bond) might give us the wrong result. And why, oh, why does the bond need this missing info in order to build the coupons? Again, because the day-count convention of the bond might be ISMA actual/actual, which needs a reference period; and in order to calculate the reference period, we need to know the coupon tenor. In the absence of this information, all the bond can do is assume that the coupon is regular, that is, that the distance between the passed start and end dates of the coupon also corresponds to its tenor.

Finance-related classes

Given our domain, it is only to be expected that a number of classes directly model financial concepts. A few such classes are described in this section.

Market quotes

There are at least two possibilities to model quoted values. One is to model quotes as a sequence of static values, each with an associated timestamp, with the current value being the latest; the other is to model the current value as a quoted value that changes dynamically.

Both views are useful; and in fact, both were implemented in the

library. The first model corresponds to the TimeSeries class,

which I won’t describe in detail here; it is basically a map between

dates and values, with methods to retrieve values at given dates and

to iterate on the existing values, and it was never really used in

other parts of the library. The second resulted in the Quote

class, shown in the following listing.

Listing: interface of the Quote class.

    class Quote : public virtual Observable {
      public:
        virtual ~Quote() {}
        virtual Real value() const = 0;
        virtual bool isValid() const = 0;
    };

Its interface is slim enough. The class inherits from the

Observable class, so that it can notify its dependent objects

when its value changes. It declares the isValid method, which

tells whether or not the quote contains a valid value (as opposed to,

say, no value, or maybe an expired value) and the value method,

which returns the current value.

These two methods are enough to provide the needed behavior. Any other object whose behavior or value depends on market values (for example, the bootstrap helpers of chapter 2) can store handles to the corresponding quotes and register with them as an observer. From that point onwards, it will be able to access the current values at any time.

The library defines a number of quotes—that is, of implementations

of the Quote interface. Some of them return values which are

derived from others; for instance, ImpliedStdDevQuote turns

option prices into implied-volatility values. Others adapt other

objects; ForwardValueQuote returns forward index fixings as the

underlying term structures change, while LastFixingQuote

returns the latest value in a time series.

At this time, only one implementation is a genuine source of external

values; that would be the SimpleQuote class, shown in

the next listing.

SimpleQuote class.

    class SimpleQuote : public Quote {
      public:
        SimpleQuote(Real value = Null<Real>())
        : value_(value) {}
        Real value() const {
            QL_REQUIRE(isValid(), "invalid SimpleQuote");
            return value_;
        }
        bool isValid() const {
            return value_!=Null<Real>();
        }
        Real setValue(Real value) {
            Real diff = value-value_;
            if (diff != 0.0) {
                value_ = value;
                notifyObservers();
            }
            return diff;
        }
      private:
        Real value_;
    };

It is simple in the sense that it doesn’t implement any particular data-feed interface: new values are set manually by calling the appropriate method. The latest value (possibly equal to Null<Real>() to indicate no value) is stored in a data member. The Quote interface is implemented by having the value method return the stored value, and the isValid method check whether it’s null. The method used to feed new values is setValue; it takes the new value, notifies its observers if it differs from the latest stored one, and returns the increment between the old and new values.21
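To make the notification logic concrete, here is a minimal sketch that compiles without QuantLib. Everything in it is a simplified stand-in and an assumption of mine: observers are plain callbacks rather than Observer objects, nullValue plays the part of Null<Real>(), and ScaledValue is a hypothetical dependent object that caches its result until the quote notifies it.

```cpp
#include <cassert>
#include <functional>
#include <limits>
#include <stdexcept>
#include <utility>
#include <vector>

// Simplified stand-in for the library's Observable: observers are
// plain callbacks here, which is an assumption made for brevity.
class Observable {
  public:
    void registerObserver(std::function<void()> callback) {
        observers_.push_back(std::move(callback));
    }
  protected:
    void notifyObservers() {
        for (auto& notify : observers_) notify();
    }
  private:
    std::vector<std::function<void()>> observers_;
};

// Hypothetical sentinel standing in for Null<Real>().
const double nullValue = std::numeric_limits<double>::max();

// Stand-in mirroring the SimpleQuote listing above.
class SimpleQuote : public Observable {
  public:
    explicit SimpleQuote(double value = nullValue) : value_(value) {}
    bool isValid() const { return value_ != nullValue; }
    double value() const {
        if (!isValid()) throw std::runtime_error("invalid SimpleQuote");
        return value_;
    }
    double setValue(double value) {
        double diff = value - value_;
        if (diff != 0.0) {          // notify only on an actual change
            value_ = value;
            notifyObservers();
        }
        return diff;
    }
  private:
    double value_;
};

// Hypothetical dependent object: it caches twice the quoted value
// and recalculates only after the quote has sent a notification.
class ScaledValue {
  public:
    explicit ScaledValue(SimpleQuote& quote)
    : quote_(quote), cached_(0.0), upToDate_(false), calculations_(0) {
        quote_.registerObserver([this]() { upToDate_ = false; });
    }
    double value() {
        if (!upToDate_) {
            cached_ = 2.0 * quote_.value();
            upToDate_ = true;
            ++calculations_;
        }
        return cached_;
    }
    int calculations() const { return calculations_; }
  private:
    SimpleQuote& quote_;
    double cached_;
    bool upToDate_;
    int calculations_;
};
```

Setting the quote to the value it already holds returns a zero increment and leaves the cached result alone; setting a different value invalidates the cache, so the next call to value() recalculates.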

I’ll conclude this section with a few short notes. The first is that the type of the quoted values is constrained to Real. This has not been a limitation so far, and besides, it’s now too late to define Quote as a class template; so it’s unlikely that this will ever change.

The second is that the original idea was that the Quote interface would act as an adapter to actual data feeds, with different implementations calling the different APIs and allowing QuantLib to use them in a uniform way. So far, however, nobody has provided such implementations; the closest we got was to use data feeds in Excel and set their values to instances of SimpleQuote.

The last (and a bit longer) note is that the interface of SimpleQuote might be modified in the future to allow more advanced uses. When setting new values to a group of related quotes (say, the quoted interest rates used for bootstrapping a curve) it would be better to only trigger a single notification after all values are set, instead of having each quote send a notification when it’s updated. This behavior would be both faster, since chains of notifications turn out to be quite the time sink, and safer, since no observer would risk recalculating after only a subset of the quotes are updated. The change (namely, an additional silent parameter to setValue that would mute notifications when equal to true) has already been implemented in a fork of the library, and could be added to QuantLib too.
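A possible shape for that change is sketched below. I haven't reproduced the fork's code; the silent parameter follows the description above, while the callback-based Observable, the public notifyObservers method, and the setAllValues helper are assumptions made to keep the example self-contained.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Simplified stand-in for Observable, with callbacks as observers.
class Observable {
  public:
    void registerObserver(std::function<void()> callback) {
        observers_.push_back(std::move(callback));
    }
    void notifyObservers() {
        for (auto& notify : observers_) notify();
    }
  private:
    std::vector<std::function<void()>> observers_;
};

// Stand-in for SimpleQuote with the additional silent parameter.
class SimpleQuote : public Observable {
  public:
    explicit SimpleQuote(double value) : value_(value) {}
    double value() const { return value_; }
    double setValue(double value, bool silent = false) {
        double diff = value - value_;
        if (diff != 0.0) {
            value_ = value;
            if (!silent) notifyObservers();   // muted when silent
        }
        return diff;
    }
  private:
    double value_;
};

// Hypothetical helper: updates every quote silently, then sends one
// notification. It assumes that values has one entry per quote and
// that all quotes share the same observers, so that notifying
// through the first quote reaches everybody.
void setAllValues(std::vector<SimpleQuote>& quotes,
                  const std::vector<double>& values) {
    bool changed = false;
    for (std::size_t i = 0; i < quotes.size(); ++i)
        changed = (quotes[i].setValue(values[i], true) != 0.0) || changed;
    if (changed && !quotes.empty())
        quotes.front().notifyObservers();
}

// Counts the notifications received by one observer registered with
// three quotes when all of them are updated.
int notificationsFor(bool silent) {
    std::vector<SimpleQuote> quotes(3, SimpleQuote(0.01));
    int notifications = 0;
    for (auto& q : quotes)
        q.registerObserver([&notifications]() { ++notifications; });
    std::vector<double> newValues = {0.02, 0.03, 0.04};
    if (silent) {
        setAllValues(quotes, newValues);
    } else {
        for (std::size_t i = 0; i < quotes.size(); ++i)
            quotes[i].setValue(newValues[i]);
    }
    return notifications;
}
```

With these stand-ins, notificationsFor(false) counts three notifications and notificationsFor(true) counts one; in the second case the observer is also never called while only some of the quotes hold their new values.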

Interest rates

The InterestRate class (shown in the listing that follows) encapsulates general interest-rate calculations. Instances of this class are built from a rate, a day-count convention, a compounding convention, and a compounding frequency (note, though, that the value of the rate is always annualized, whatever the frequency). This allows one to specify rates such as “5%, actual/365, continuously compounded” or “2.5%, actual/360, semiannually compounded.” As can be seen, the frequency is not always needed. I’ll return to this later.

InterestRate class.

    enum Compounding { Simple,              // 1+rT
                       Compounded,          // (1+r)^T
                       Continuous,          // e^{rT}
                       SimpleThenCompounded
    };

    class InterestRate {
      public:
        InterestRate(Rate r,
                     const DayCounter&,
                     Compounding,
                     Frequency);
        // inspectors
        Rate rate() const;
        const DayCounter& dayCounter() const;
        Compounding compounding() const;
        Frequency frequency() const;
        // automatic conversion
        operator Rate() const;
        // implied discount factor and compounding after a given time
        // (or between two given dates)
        DiscountFactor discountFactor(Time t) const;
        DiscountFactor discountFactor(const Date& d1,
                                      const Date& d2) const;
        Real compoundFactor(Time t) const;
        Real compoundFactor(const Date& d1,
                            const Date& d2) const;
        // other calculations
        static InterestRate impliedRate(Real compound,
                                        const DayCounter&,
                                        Compounding,
                                        Frequency,
                                        Time t);
        ... // same with dates
        InterestRate equivalentRate(Compounding,
                                    Frequency,
                                    Time t) const;
        ... // same with dates
    };

Besides the obvious inspectors, the class provides a number of methods. One is the conversion operator to Rate, i.e., to double. In hindsight, this is kind of risky, as the converted value loses any day-count and compounding information; this might allow, say, a simply-compounded rate to slip undetected where a continuously-compounded one was expected. The conversion was added for backward compatibility when the InterestRate class was first introduced; it might be removed in a future revision of the library, depending on the level of safety we want to force on users.22

Other methods complete a basic set of calculations. The compoundFactor method returns the unit amount compounded for a time \(t\) (or equivalently, between two dates \(d_1\) and \(d_2\)) according to the given interest rate; the discountFactor method returns the discount factor between two dates or for a time, i.e., the reciprocal of the compound factor; the impliedRate method returns a rate that, given a set of conventions, yields a given compound factor over a given time; and the equivalentRate method converts a rate to an equivalent one with different conventions (that is, one that results in the same compounded amount).
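The relationships between these four calculations can be sketched with free functions on plain doubles. This is not the library's code: day counters are left out, the frequency is passed as an integer number of periods per year (an assumption standing in for the Frequency enumeration), and I'm assuming the usual formula \((1+r/f)^{fT}\) for a rate compounded \(f\) times a year, with SimpleThenCompounded being simple up to the first period and compounded afterwards.

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>

enum Compounding { Simple, Compounded, Continuous, SimpleThenCompounded };

// Unit amount compounded at the annualized rate r for a time t.
// freq is the number of compounding periods per year; it is ignored
// for Simple and Continuous.
double compoundFactor(double r, Compounding c, int freq, double t) {
    switch (c) {
      case Simple:
        return 1.0 + r*t;
      case Compounded:
        return std::pow(1.0 + r/freq, freq*t);
      case Continuous:
        return std::exp(r*t);
      case SimpleThenCompounded:
        return (t <= 1.0/freq) ? 1.0 + r*t
                               : std::pow(1.0 + r/freq, freq*t);
    }
    throw std::invalid_argument("unknown compounding");
}

// The discount factor is the reciprocal of the compound factor.
double discountFactor(double r, Compounding c, int freq, double t) {
    return 1.0/compoundFactor(r, c, freq, t);
}

// Inverts compoundFactor: the rate that, with the given conventions,
// yields the given compound factor over t.
double impliedRate(double compound, Compounding c, int freq, double t) {
    switch (c) {
      case Simple:
        return (compound - 1.0)/t;
      case Compounded:
        return (std::pow(compound, 1.0/(freq*t)) - 1.0)*freq;
      case Continuous:
        return std::log(compound)/t;
      case SimpleThenCompounded:
        return (t <= 1.0/freq)
            ? (compound - 1.0)/t
            : (std::pow(compound, 1.0/(freq*t)) - 1.0)*freq;
    }
    throw std::invalid_argument("unknown compounding");
}

// An equivalent rate is the one implied, under the new conventions,
// by the compound factor of the original rate.
double equivalentRate(double r,
                      Compounding from, int fromFreq,
                      Compounding to, int toFreq,
                      double t) {
    return impliedRate(compoundFactor(r, from, fromFreq, t),
                       to, toFreq, t);
}
```

For instance, a 2.5% rate compounded semiannually gives a one-year compound factor of \(1.0125^2 = 1.02515625\), and converting a continuously-compounded rate to a semiannual one and back through compoundFactor returns the same compounded amount up to rounding.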

Like the InterestRate constructor, some of these methods take a compounding frequency. As I mentioned, this doesn’t always make sense; and in fact, the Frequency enumeration has a NoFrequency item just to cover this case.

Obviously, this is a bit of a smell. Ideally, the frequency should be associated only with those compounding conventions that need it, and left out entirely for those (such as Simple and Continuous) that don’t. If C++ supported it, we would write something like

    enum Compounding { Simple,
                       Compounded(Frequency),
                       Continuous,
                       SimpleThenCompounded(Frequency)
    };

which would be similar to algebraic data types in functional languages, or case classes in Scala;23 but unfortunately that’s not an option. To have something of this kind, we’d have to go for a full-featured Strategy pattern and turn Compounding into a class hierarchy. That would probably be overkill for the needs of this class, so we’re keeping both the enumeration and the smell.
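For the curious, the Strategy alternative might look something like the sketch below. It is not code from the library: the class names mirror the enumeration items, the frequency is a plain integer, and the InterestRate stand-in only shows how the calculation would be delegated.

```cpp
#include <cassert>
#include <cmath>
#include <memory>

// Hypothetical hierarchy replacing the Compounding enumeration.
class Compounding {
  public:
    virtual ~Compounding() {}
    virtual double compoundFactor(double r, double t) const = 0;
};

// No frequency here: the convention doesn't need one.
class Simple : public Compounding {
  public:
    double compoundFactor(double r, double t) const {
        return 1.0 + r*t;
    }
};

class Continuous : public Compounding {
  public:
    double compoundFactor(double r, double t) const {
        return std::exp(r*t);
    }
};

// The frequency lives only in the conventions that use it.
class Compounded : public Compounding {
  public:
    explicit Compounded(int frequency) : frequency_(frequency) {}
    double compoundFactor(double r, double t) const {
        return std::pow(1.0 + r/frequency_, frequency_*t);
    }
  private:
    int frequency_;
};

// Stand-in for InterestRate; day counters are left out.
class InterestRate {
  public:
    InterestRate(double rate,
                 std::shared_ptr<const Compounding> compounding)
    : rate_(rate), compounding_(compounding) {}
    double compoundFactor(double t) const {
        return compounding_->compoundFactor(rate_, t);
    }
  private:
    double rate_;
    std::shared_ptr<const Compounding> compounding_;
};
```

A rate would then be built as InterestRate(0.05, std::make_shared<Compounded>(2)), with no way to pass a meaningless frequency to Simple or Continuous. The price is an allocation and a virtual call for what is currently a switch on an enumeration, which is part of why it looks like overkill.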

Indexes

Like other classes such as Instrument and TermStructure, the Index class is a pretty wide umbrella: it covers concepts such as interest-rate indexes, inflation indexes, stock indexes; you get the drift.

Needless to say, the modeled entities are diverse enough that the Index class has very little interface to call its own. As shown in the following listing, all its methods have to do with index fixings.

Index class.

    class Index : public Observable {
      public:
        virtual ~Index() {}
        virtual std::string name() const = 0;
        virtual Calendar fixingCalendar() const = 0;
        virtual bool isValidFixingDate(const Date& fixingDate)
                                                        const = 0;
        virtual Real fixing(const Date& fixingDate,
                            bool forecastTodaysFixing = false)
                                                        const = 0;
        virtual void addFixing(const Date& fixingDate,
                               Real fixing,
                               bool forceOverwrite = false);
        void clearFixings();
    };

The isValidFixingDate method tells us whether a fixing was (or will be) made on a given date; the fixingCalendar method returns the calendar used to determine the valid dates; and the fixing method retrieves a fixing for a past date or forecasts one for a future date. The remaining methods deal specifically with past fixings: the name method, which returns an identifier that must be unique for each index, is used to index (pun not intended) into a map of stored fixings; the addFixing method stores a fixing (or many, in other overloads not shown here); and the clearFixings method clears all stored fixings for the given index.

Why the map, and where is it in the Index class? Well, we started from the requirement that past fixings should be shared rather than per-instance; if one stored, say, the 6-months Euribor fixing for a date, we wanted the fixing to be visible to all instances of the same index,24 and not just the particular one whose addFixing method we called. This was done by defining and using an IndexManager singleton behind the curtains. Smelly? Sure, as all singletons. An alternative might have been to define static class variables in each derived class to store the fixings; but that would have forced us to duplicate them in each derived class with no real advantage (it would be as much against concurrency as the singleton).
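The sharing mechanism can be sketched as follows. The IndexManager name comes from the text above, but its interface here is my assumption, as are the use of integer serial numbers for dates, the hasFixing method, and the policy of refusing to overwrite a different stored fixing unless forced.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>

typedef long Date;   // stand-in: dates as integer serial numbers

// Hypothetical interface for the singleton holding all past fixings,
// keyed by index name.
class IndexManager {
  public:
    static IndexManager& instance() {
        static IndexManager manager;
        return manager;
    }
    std::map<Date, double>& history(const std::string& name) {
        return data_[name];
    }
    void clearHistory(const std::string& name) { data_.erase(name); }
  private:
    IndexManager() {}
    IndexManager(const IndexManager&);              // not copyable
    IndexManager& operator=(const IndexManager&);   // not assignable
    std::map<std::string, std::map<Date, double> > data_;
};

// Stand-in for an index: it stores no fixings of its own, only the
// name used to look them up in the manager.
class Index {
  public:
    explicit Index(const std::string& name) : name_(name) {}
    std::string name() const { return name_; }
    void addFixing(Date d, double fixing, bool forceOverwrite = false) {
        std::map<Date, double>& h =
            IndexManager::instance().history(name_);
        std::map<Date, double>::iterator i = h.find(d);
        if (i != h.end() && i->second != fixing && !forceOverwrite)
            throw std::runtime_error("a different fixing is stored");
        h[d] = fixing;
    }
    bool hasFixing(Date d) const {
        return IndexManager::instance().history(name_).count(d) != 0;
    }
    double fixing(Date d) const {   // past fixings only, no forecast
        const std::map<Date, double>& h =
            IndexManager::instance().history(name_);
        std::map<Date, double>::const_iterator i = h.find(d);
        if (i == h.end()) throw std::runtime_error("missing fixing");
        return i->second;
    }
    void clearFixings() {
        IndexManager::instance().clearHistory(name_);
    }
  private:
    std::string name_;
};
```

Two instances built with the same name see each other's fixings, since both look them up in the manager under that name; an instance with a different name sees none of them. This is also why the name must be unique for each index.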

Since the returned index fixings might change (either because their forecast values depend on other varying objects, or because a newly available fixing is added and replaces a forecast) the Index class inherits from Observable so that instruments can register with its instances and be notified of such changes.

At this time, Index doesn’t inherit from Observer, although its derived classes do (not surprisingly, since forecast fixings will almost always depend on some observable market quote). This was not an explicit design choice, but rather an artifact of the evolution of the code and might change in future releases. However, even if we were to inherit Index from Observer, we would still be forced to have some code duplication in derived classes, for a reason which is probably worth describing in more detail.

I already mentioned that fixings can change for two reasons. One is that the index depends on other observables to forecast its fixings; in this case, it simply registers with them (this is done in each derived class, as each class has different observables). The other reason is that a new fixing might be made available, and that’s trickier to handle. The fixing is stored by a call to addFixing on a particular index instance, so it seems like no external notification would be necessary, and that the index can just call the