Table of Contents

- 1 Introduction

- 2 Making Components

- 3 Shadow DOM

- 4 Events

- 5 Templates

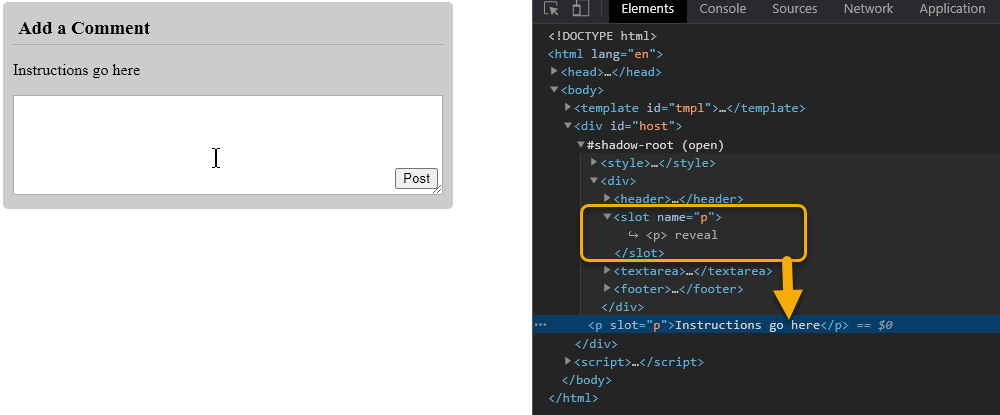

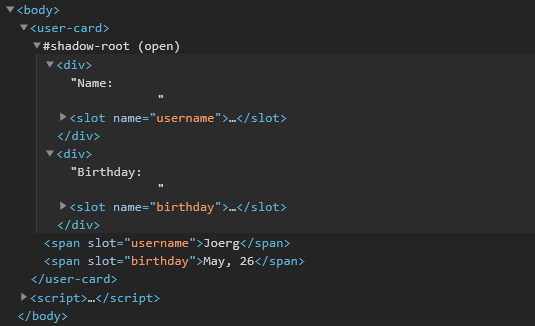

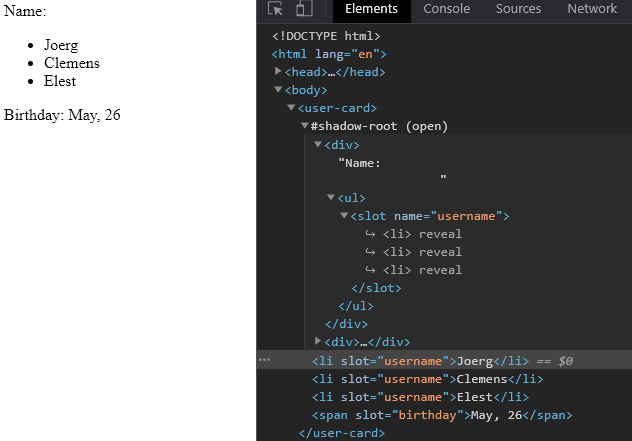

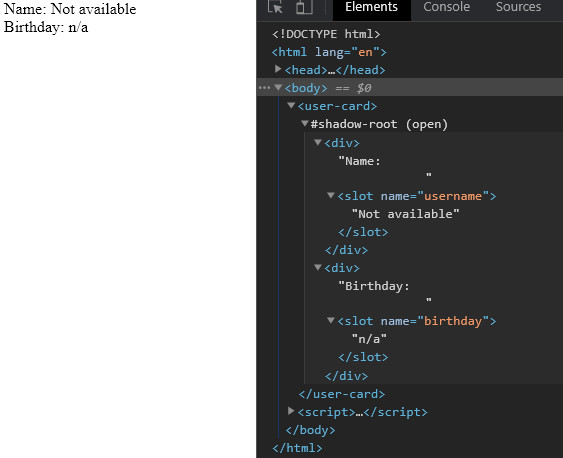

- 6 Slots

- 7 Components and Styles

- 8 Making Single Page Apps

- 9 Professional Components

- Introducing @nyaf

- Elevator Pitch

- Parts

- Project Configuration with TypeScript

- Project Configuration with Babel

- Components

- The First Component

- Template Features

- n-repeat

- JSX / TSX

- Examples

- Select Elements

- Smart Components

- The Life Cycle

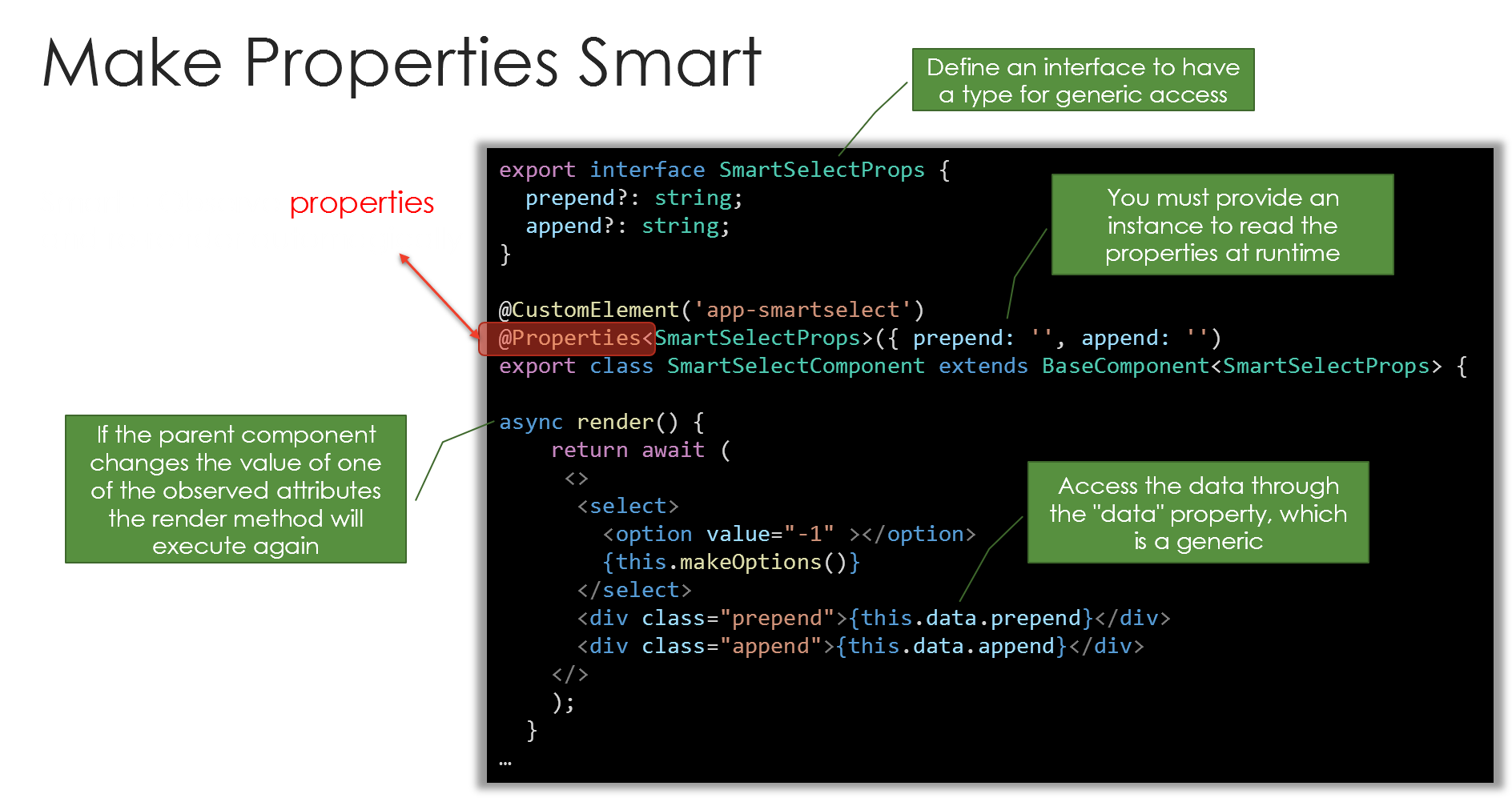

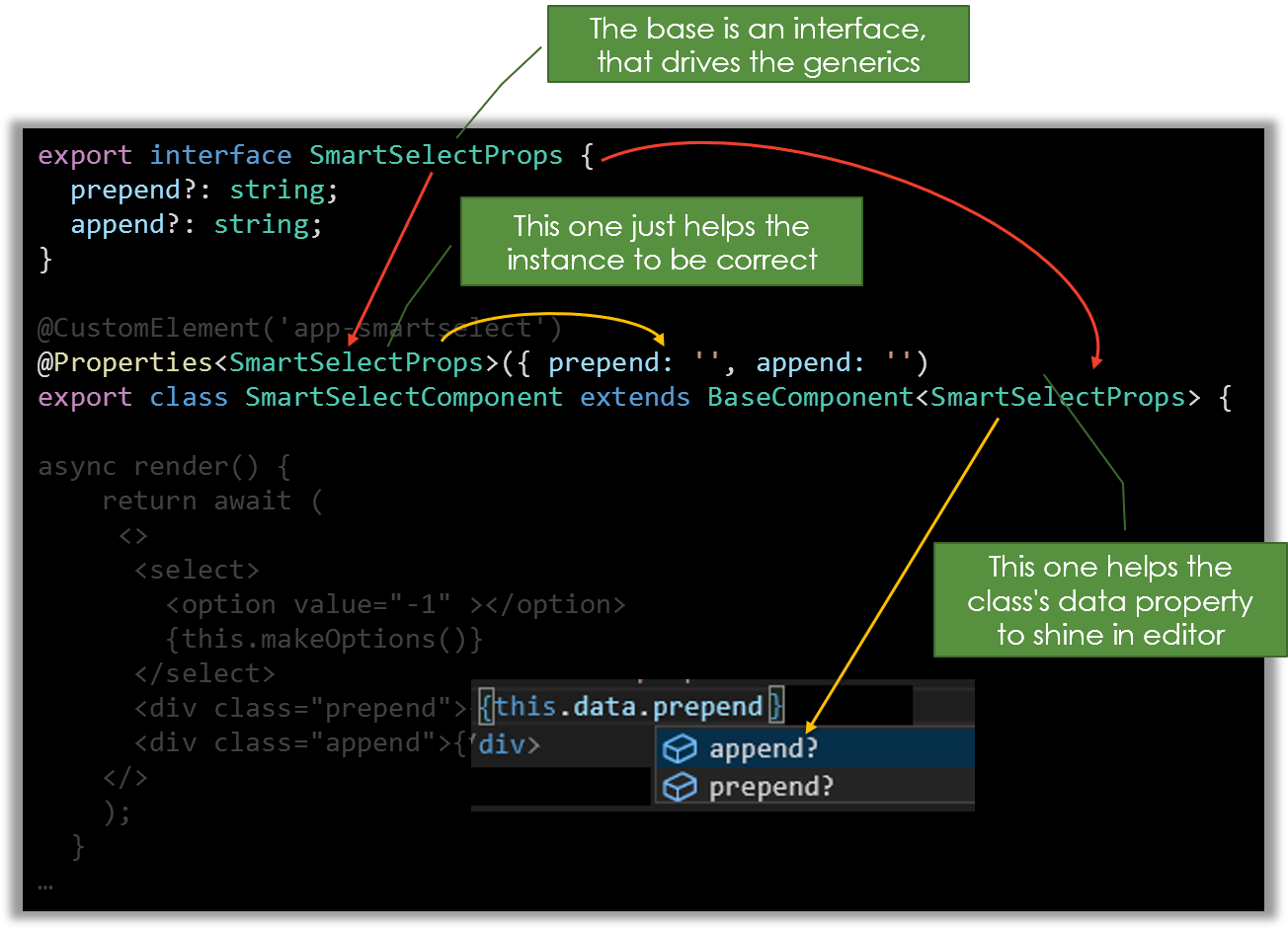

- State and Properties

- Directives

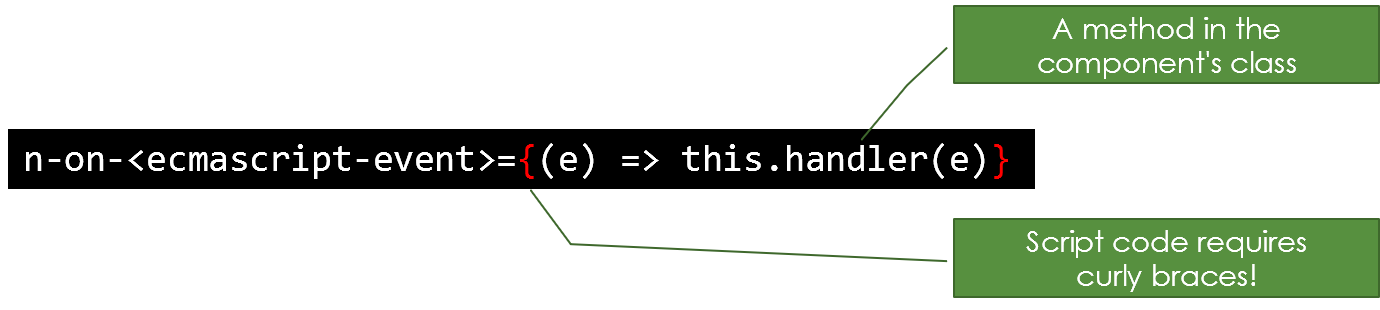

- Events

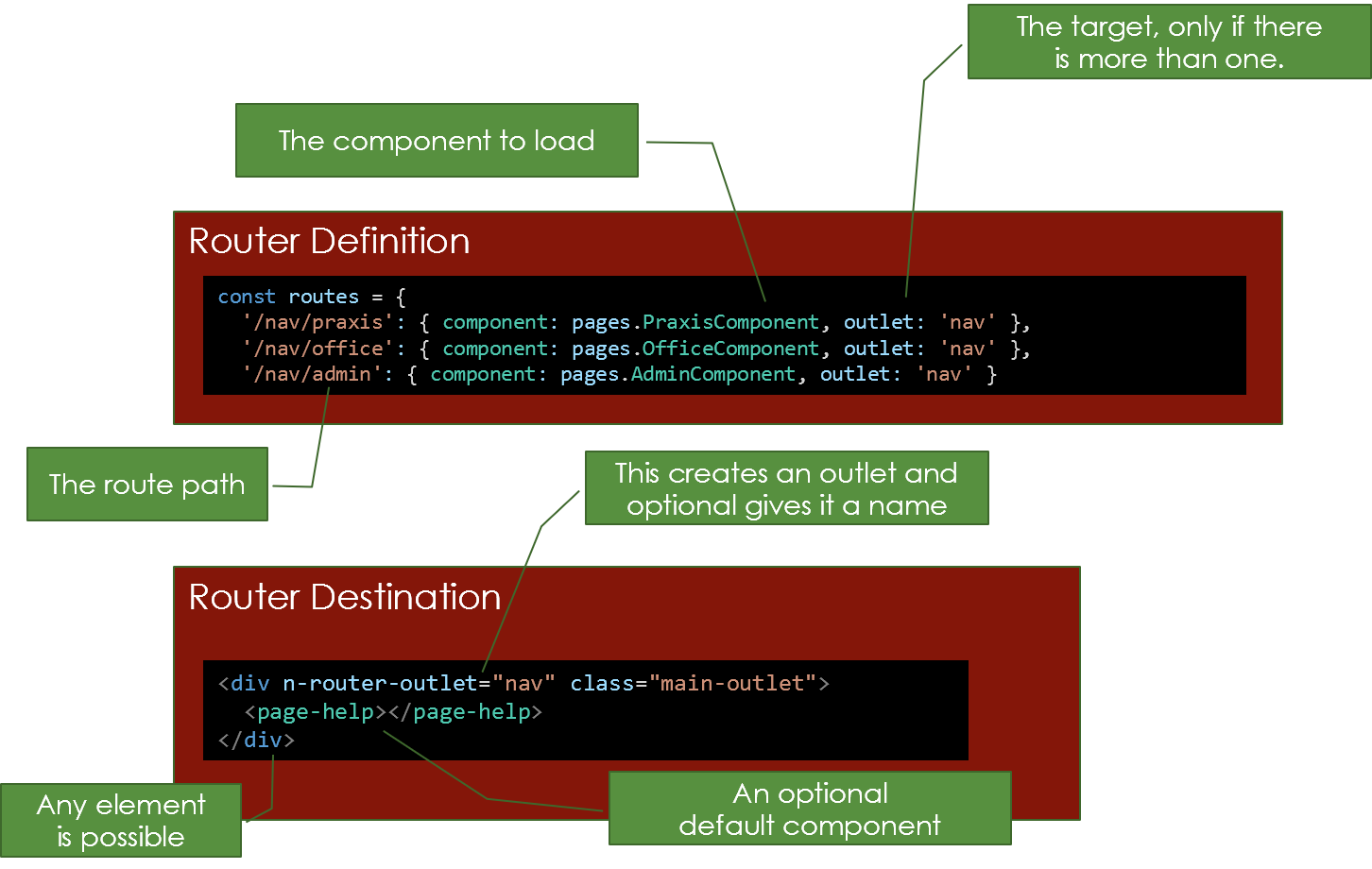

- Router

- Shadow DOM

- Services

- Forms Module

- View Models

- Data Binding

- Creating Forms

- Smart Binders

- Validation

- Additional Information

- Custom Binders

- Installation of Forms Module

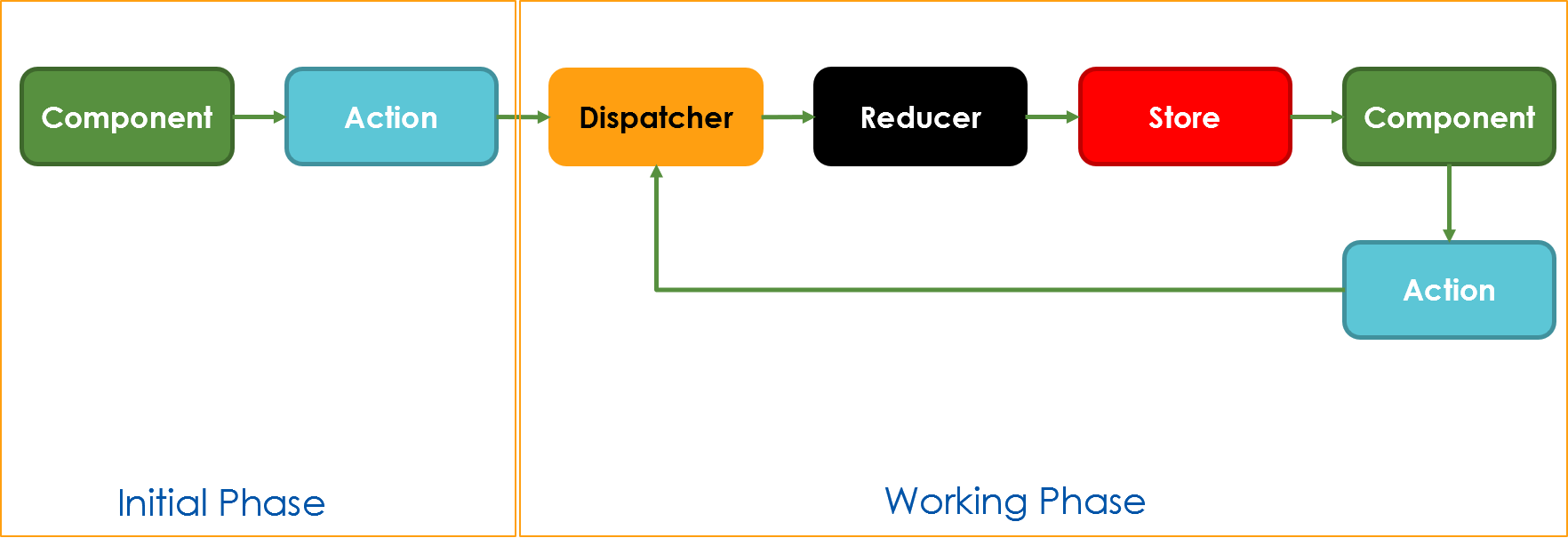

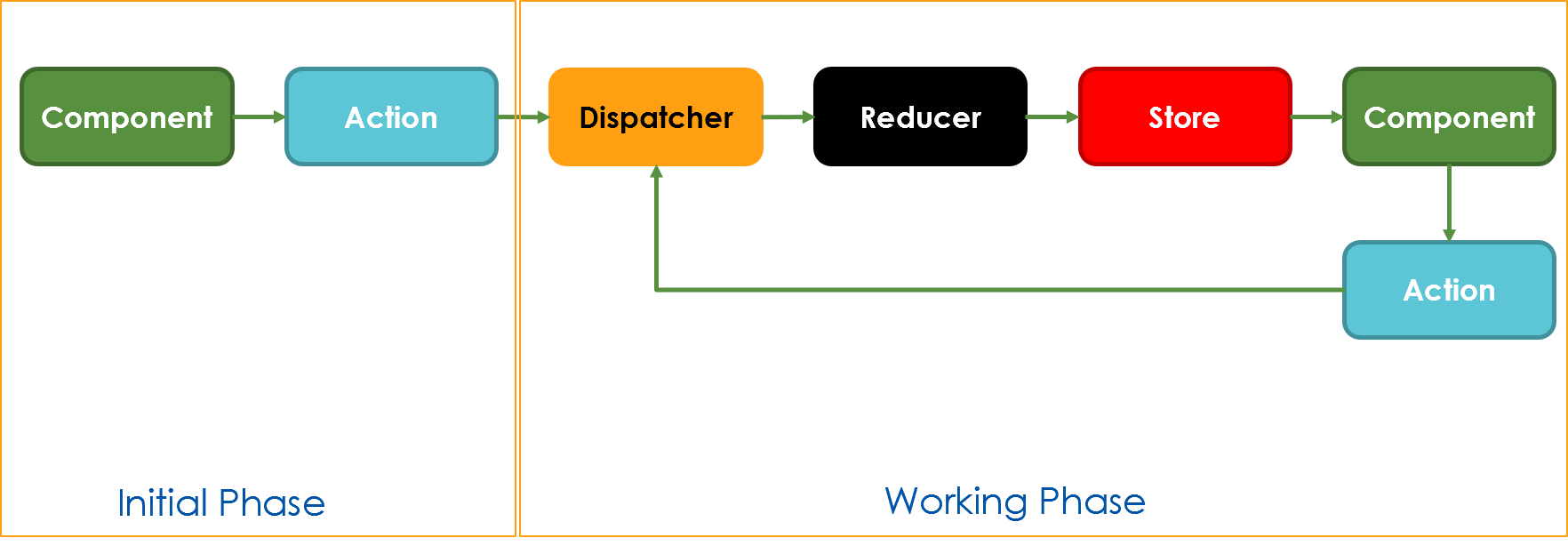

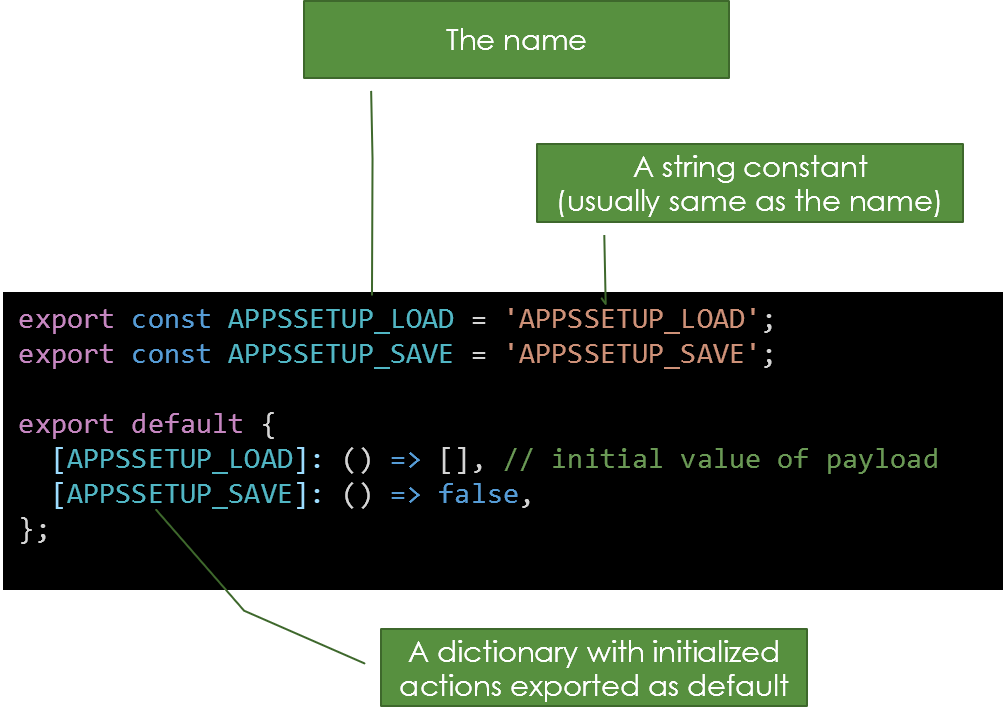

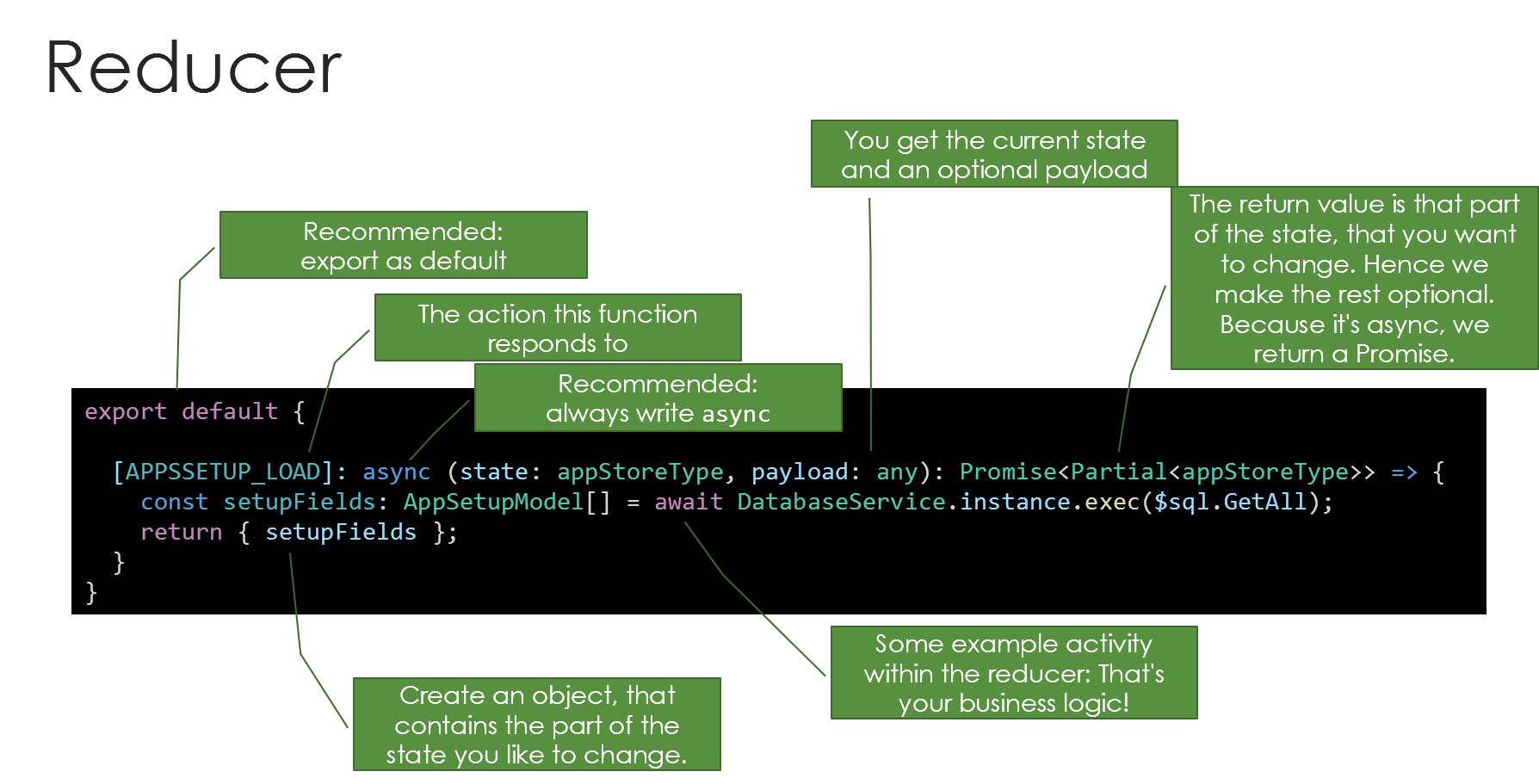

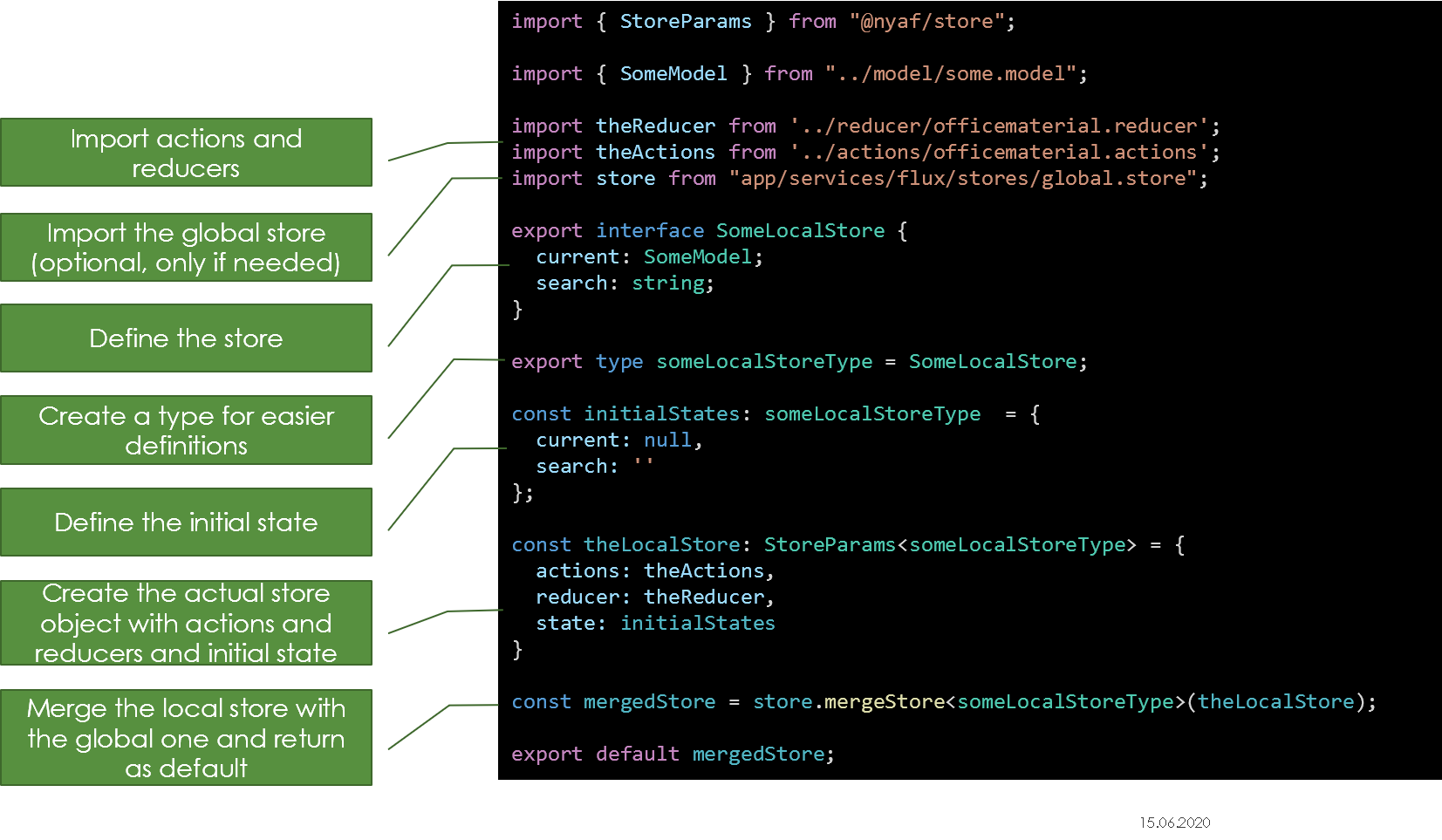

- The Flux Store

- Type Handling in Typescript

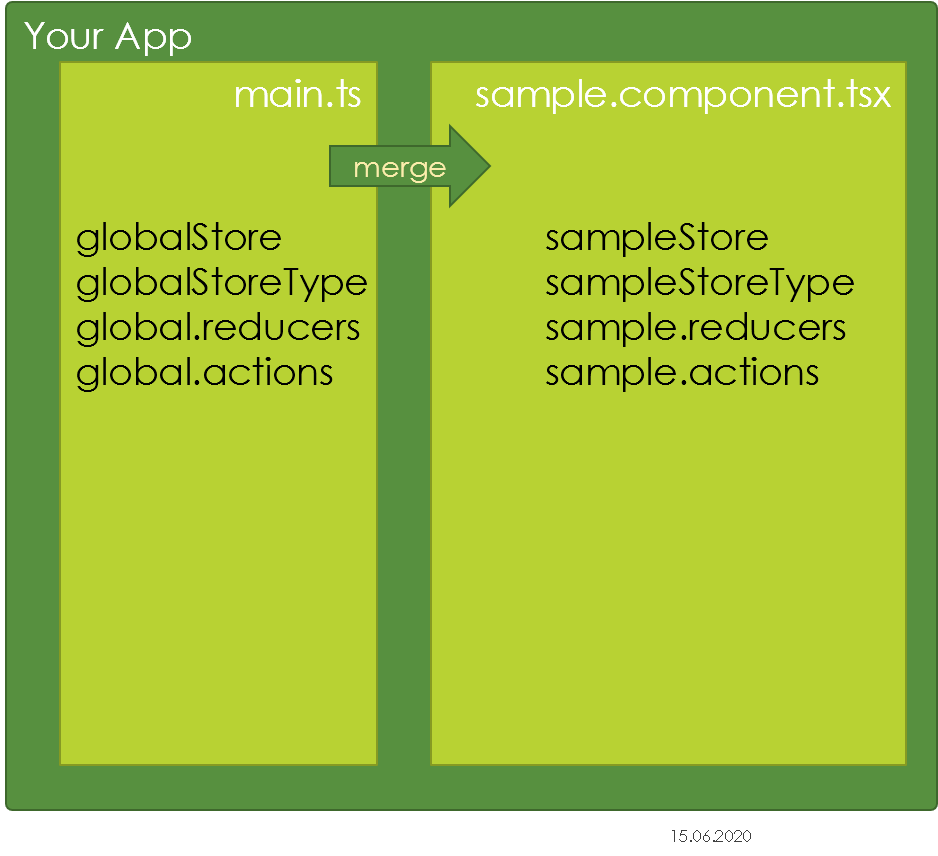

- Global and Local Store

- Disposing

- Effects Mapping

- Automatic Updates

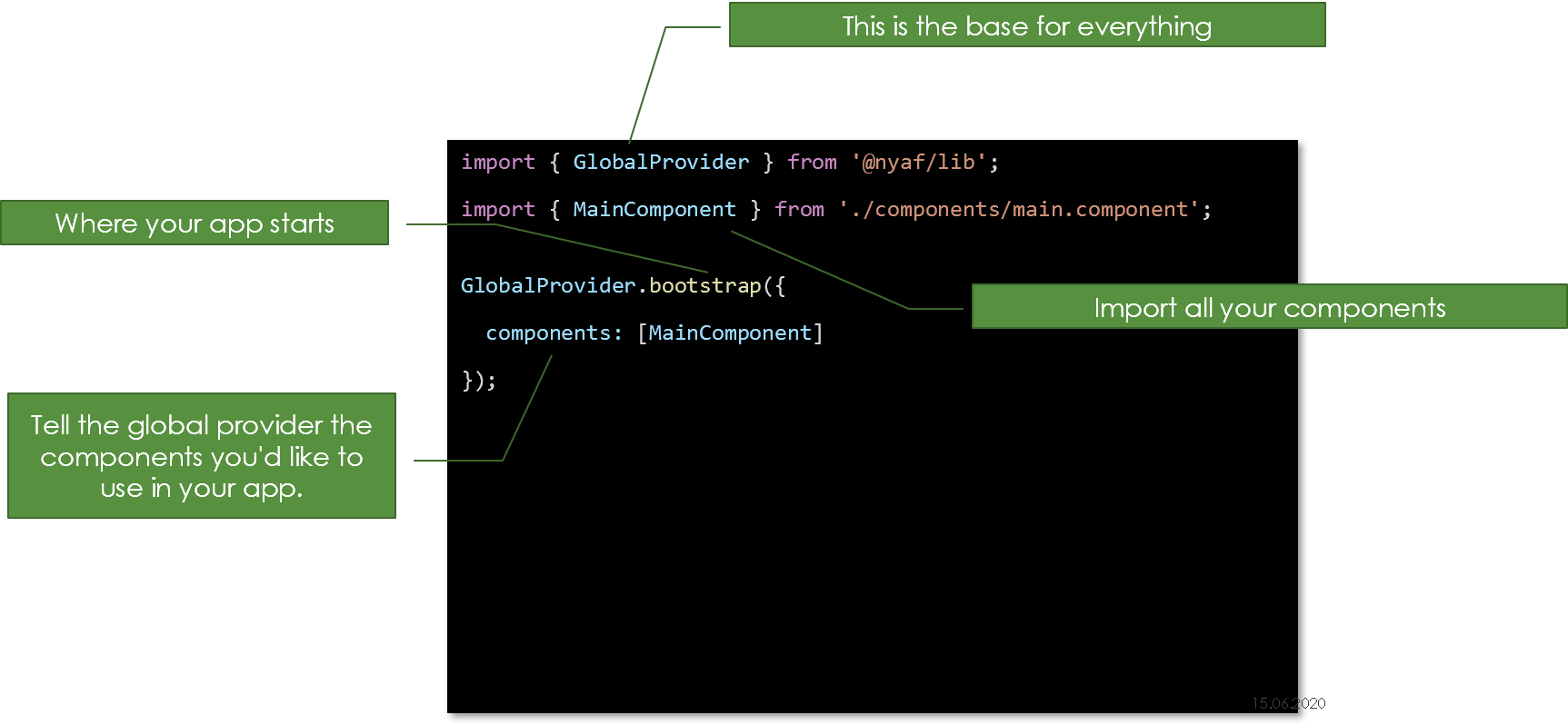

- Installation

- Notes

This book explains Web Components. It also shows how to create a small, simple layer (a so-called thin library) around the native HTML 5 API that makes your life as a developer a lot easier. Such a library is available as an open source project called @nyaf – Not Yet Another Framework. It’s not a requirement, but it significantly lowers the hurdles to using Web Components and avoids the jump to full-blown frameworks and libraries such as Angular or React.

Who Should Read this Book?

This book is aimed at both beginners and experienced web developers. The code is mainly TypeScript; a few examples are pure ECMAScript.

In any case, I have tried not to impose any prerequisites or conditions on the reader. You do not need to be a computer scientist, you need not be in perfect command of the language, and you don’t need to know rocket science. No matter in what context you have encountered Web Components, you should be able to read this text. However, those who already work on frontend code will benefit most from this book. Especially those overwhelmed by frameworks, techniques, and monstrous project structures will learn what modern web development has to offer. Nowadays all modern browsers execute ES2015 and above natively, and transpilers such as Babel or TypeScript make it easy to adapt.

What You Should Know

Readers of my books need hardly any prerequisites. Some HTML cannot harm, and anyone who has already seen a static HTML page (the source code, of course) is certainly well prepared. I assume that you have at least a current operating system with an editor in which you can create web pages.

Web Components are not that demanding. But they are built with an API on top of the HTML 5 API, and this API is offered through JavaScript. Hence, as an additional requirement, you should be able to read JavaScript and have a basic understanding of TypeScript.

How to Read this Text

I will not dictate how you should read this text. In the first draft of the structure, I tried several variations and found that no ideal form exists. However, readers today tend to consume smaller chunks, independent chapters, and focused content. This book supports this trend by concentrating on one small topic, focused and with no “blah-blah” to inflate the volume.

Beginners should read the text as a narrative from the first to the last page. Those who are already somewhat familiar can safely skip certain sections.

Conventions used in the Book

The topic is not easy to typeset, because scripts are often too wide for the page, and I want to offer the most readable layout possible. I have therefore added extra line breaks to aid readability; they are not required in the editor of your development environment.

In general, each program code is set to a non-proportional font. In addition, most scripts have line numbers:

    1 customElements.define('show-hello', class extends HTMLElement {
    2   connectedCallback() {
    3     const shadow = this.attachShadow({mode: 'open'});
    4     shadow.innerHTML = `<p>
    5       Hello, ${this.getAttribute('name')}
    6     </p>`;
    7   }
    8 });

If you think you need to enter something in the prompt or in a dialog box, this part of the statement is in bold:

$ npm install typescript

The first character is the prompt and is not entered. In the book I use the Linux prompt from the bash shell. The commands work, without exception, unchanged on Windows. The only difference is the command prompt C:> or something similar at the beginning of the line. The instructions usually use relative paths or no paths at all, so the actual prompt doesn’t matter as long as you are in your working folder.

Expressions and command lines are sometimes peppered with all kinds of characters, and in almost all cases every single character matters. Often, I’ll discuss the use of certain characters in precisely such an expression. The “important” characters are then placed on lines of their own, and line numbers are used to reference the affected symbol exactly (note the : (colon) character in line 2):

    1 a.test {
    2   :hover {
    3     color: red
    4   }
    5 }

The font is non-proportional, so that the characters are countable and opening and closing parentheses are always in the same column.

Symbols

To facilitate the orientation in the search for a solution, there is a whole range of symbols that are used in the text.

Preparations

To use the code in the book you need this:

- A machine with Node.js v10+ installed. Any desktop OS will do, whether Windows, macOS, or Linux. Windows users can use any shell: WSL, CMD, or PowerShell. Of course, any shell on Linux is good enough, too.

- An editor to enter code. I recommend Visual Studio Code. It runs on all the operating systems mentioned. WebStorm is also an amazingly powerful editor.

- A folder where the project is being created. Easy enough, but keep your environment clean and organized like a pro.

This book comes with a lot of examples and demo code. It’s available on GitHub at:

The folders are structured following the book, chapter by chapter.

If you’re relatively new to the web development field, test your knowledge by cloning the repo, bringing the examples to life, and watching the outcome. Read the text and add your own stuff once you know that the environment is up and running.

Using the @nyaf Library

The author of this book has years of experience with Web Components. After several projects, smaller and bigger ones, the frustration grew about the lack of support for simple tasks and about the burden of huge frameworks that are meant to solve these problems but come with an overwhelming amount of additional features. None of the frameworks felt right. I suspect that most developer support code has a similar trigger, so I decided to start my own library project. It’s called @nyaf – Not Yet Another Framework. It’s just a thin library, only a few kilobytes, and it solves just the basic needs:

- A thin wrapper to handle Web components in TypeScript code very well

- A router to have full single page application support

- Support for data binding and form validation

- A Flux based store to get a professional architecture

It’s split into three parts, so you just use what you want and skip the features not (yet) needed.

About the Author

Jörg works as a trainer, consultant, and software developer for major companies worldwide. He builds on 25 years of experience with the web and many, many large and small projects.

Jörg believes it is especially important to have solid foundations. Instead of always running to create the latest framework, many developers would be better advised to create and provide a robust foundation.

Jörg has written over 60 titles in German and English for renowned specialist publishers, including some bestsellers for Carl Hanser, Apress, and O’Reilly.

Anyone who wants to learn this subject quickly and compactly is in the right place. Much more information can be found on his website www.joergkrause.de.

Contact the Author

In addition to the website, you can also contact him directly at www.IT-Visions.de. If your business needs professional advice about web topics or a continuing education or training session for software developers, please contact Jörg through his website or book directly via http://www.IT-Visions.de.

1 Introduction

Web components are a set of standards for making self-contained components: custom HTML elements with their own properties and methods, encapsulated DOM, and styles. The technology is natively supported by all modern browsers and does not require a framework. The API has some quirks, though. I will explain those obstacles in great detail, but it’s helpful to know that you can make your life easier. A thin wrapper library to handle common tasks is the answer. This is what the @nyaf – Not Yet Another Framework – code is for. A full description can be found in the appendix. However, all examples and explanations within the book chapters are completely independent of it. Of course, you can use any other component library.

1.1 The Global Picture

This section describes a set of modern standards for Web Components.

Components

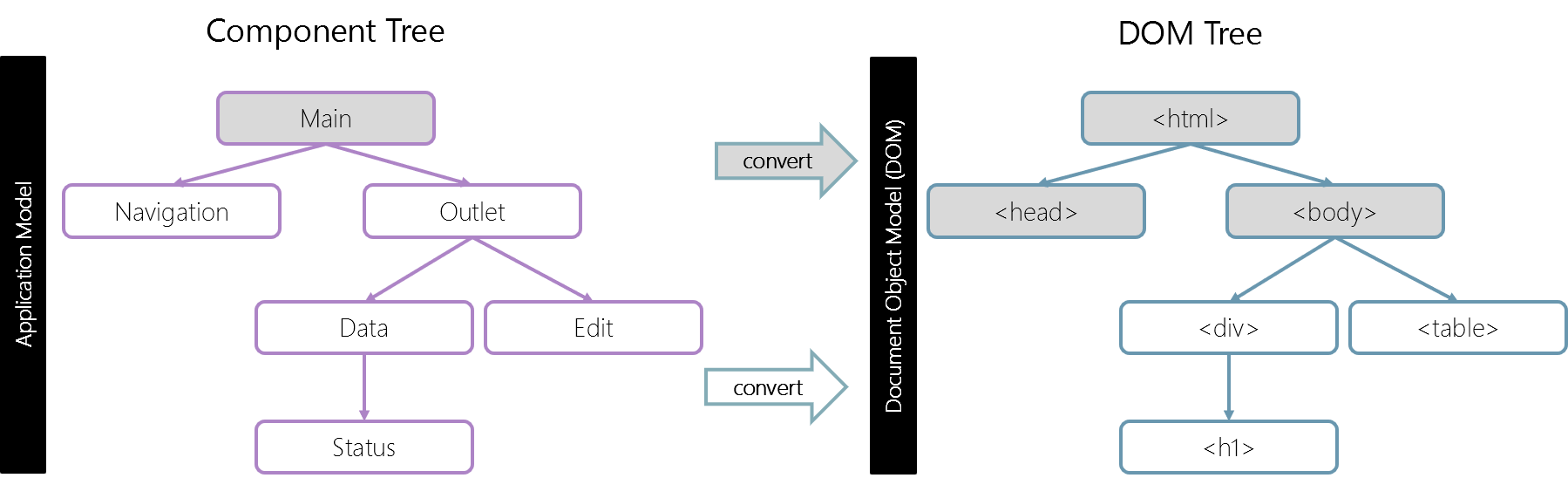

The whole component idea is nothing new. It’s used in many frameworks and elsewhere. Before we move to implementation details, imagine how the internals of a page in a browser are described. You have a tree of simple elements, defined by the HyperText Markup Language (HTML). You can describe the appearance of each element using Cascading Style Sheets (CSS). And you can manipulate both parts dynamically at runtime using ECMAScript (also known as JavaScript). The most important point in this description is the word “tree”. Elements form a tree, where one or more elements are the children of another one.

If the basic structure of a page is already a tree of smaller parts (see Figure 1-1), it makes sense, and simplifies development, if the elements form a tree on a higher level too. Such a unit, a hierarchical collection of functionality that can form a tree, is called a component.

A page hence consists of many components. Each component, in its turn, has many smaller details inside. At the end, it’s still pure HTML.

Components can be very complex, sometimes more complicated than the website itself. How are such complex units created? Which principles could we borrow to make our development similarly reliable and scalable? Or, at least, close to it.

Component Architecture

The well-known rule for developing complex software is: don’t make complex software. If something becomes complex, split it into simpler parts and connect them in the most obvious way. A good architect is one who can make the complex simple to handle for the developer. (That’s not the same as a UX designer, who makes the complex application simple to use for the end user; but that’s an entirely different story.)

You can split user interfaces into visual components: each of them has its own place on the page, can “do” a well-described task, and is separate from the others.

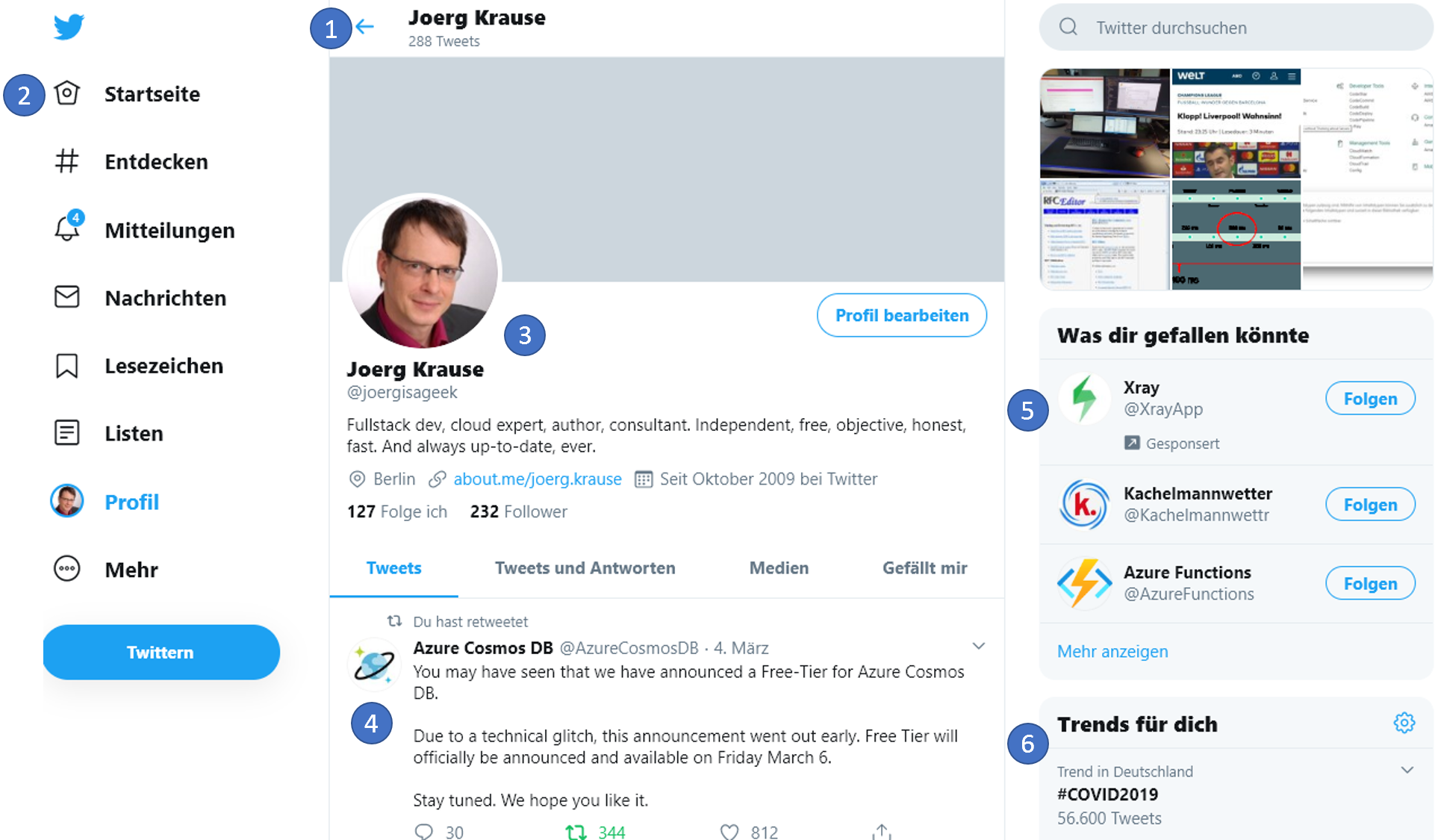

Let’s take a look at a website (see Figure 1-2), for example Twitter. It naturally splits into components:

- Top navigation

- Main menu

- User profile

- Tweet feed

- Suggestions

- Trending subjects

Components may have sub-components, e.g. messages may be part of a higher-level “message list” component. A clickable user picture itself may be a component, and so on. It boils down to HTML eventually. If no further simplification is possible, a native element forms a leaf in the tree. The profile branch may then end with an <img> tag.

How do we decide what a component is? That comes from intuition, experience, and common sense. Usually it’s a separate visual entity that we can describe in terms of what it does and how it interacts with the page. In the case above, the page has blocks, each of which plays its own role, so it’s logical to make these blocks components. If you’re new to this kind of software architecture, it’s good advice to keep a component smaller than the typical size of your screen. In practice, that means the lines of code that form the component should fit on your standard monitor using your favorite font size. For me, that’s a maximum of 100 lines of code. If my components grow, I try to split them into smaller chunks. However, always keep the logical structure in mind. If two parts of a component differ significantly and each needs only 25 lines of code, it’s still a good idea to split them up and have clean code instead of clinging to the 100-lines rule.

Parts of a Component

A component has several parts. These can be split into several files or appear in just one file. It mainly depends on the environment you use and the strategy to create, compile, and deploy the final code. From a logical point of view, these are the parts:

- A JavaScript or TypeScript class.

- A DOM structure, managed solely by its class, so outside code doesn’t access it (the “encapsulation” principle).

- CSS styles, applied to the component. These can be isolated or global.

- An API: events, class methods etc. that interact with other components or application parts.

Once again, the whole “component” thing is nothing special. It’s just a clever approach to handle the complexity of web pages in a way an average human being can understand.

There are many frameworks and development methodologies for building components, each with its own bells and whistles. Usually, special CSS classes and conventions are used to provide the “component feel”: CSS scoping and DOM encapsulation. Web Components provide built-in browser capabilities for that, so we don’t have to emulate them any more. That’s one of the most powerful developments we have seen in web development in recent years. Unfortunately, developers seem to regard the “component frameworks”, especially Angular, Vue, and React, as the final solution, the sole way to create components. We understand that’s because it brings users and makes the framework more useful. But it’s not entirely true. The native stuff is almost as good as these frameworks, as you will see soon. However, don’t ask an Angular fellow. What should she say?

Web Components bring some basic features that make them so extraordinarily useful.

- Custom elements – to define custom HTML elements.

- Shadow DOM – to create an internal DOM for the component, hidden from the other parts of the app.

- CSS Scoping – to declare styles that only apply inside the Shadow DOM of the component.

- Event re-targeting and other minor stuff to make custom components better fit the development requirements.

In the next chapters I’ll go into the details of such components: the fundamental, well-supported features of Web Components that are really good on their own. Some smart code written around them will also be explained, to show how you can come very close to the comfort level of Angular or React without actually using them.

1.2 The Rise of Thin Libraries

After digging deeper into the Web Component world, it seems that there is no need for a full framework like Angular or React anymore. However, some repetitive tasks are boring and error-prone. Hence a small layer around the basic API is helpful. That was the beginning of the famous @nyaf thin library. It’s not called a framework because, even with the two additional modules that became part of the package, it’s very small indeed. The first of these modules, @nyaf/forms, is responsible for bi-directional binding and validation. The second, @nyaf/store, is a simple yet powerful Flux-based store. It simplifies the architecture of huge applications dramatically.

One of the clear approaches from the beginning was the avoidance of dependencies. You need this and nothing more. Another is interoperability with any existing library. Even pure jQuery code does not interfere with @nyaf. And, finally, it’s pure ES2015+, with no polyfills or additions for older browsers. Modern browsers have a market share of 96%, and that’s what you target. The full documentation is added as an appendix to this book for your reference.

Single Page Apps

A single-page application (SPA) is a web application or website that interacts with the web browser by dynamically rewriting the current web page with new data from the web server, instead of the default method of the browser loading entire new pages. The goal is faster transitions that make the website feel more like a native app.

In a SPA, all necessary HTML, JavaScript, and CSS code is either retrieved by the browser with a single page load, or the appropriate resources are dynamically loaded and added to the page as necessary, usually in response to user actions. The page does not reload at any point in the process, nor does control transfer to another page. The location hash or the HTML5 History API can still be used to provide the perception and navigability of separate logical pages in the application.

Web Components make it easy to create SPAs. The main part is a feature called “router”. The router routes a call (usually a click on a hyperlink or button) by using an assigned URL to some kind of management code. That code creates a new tree of components and moves it to a particular target element. The browser reacts to this operation by rendering the elements. The developer has to make these decisions to get it working:

- Define a target – an element where the replaceable tree appears. We usually call this an “outlet”.

- Create a definition that maps routes to components. This is a “router configuration”.

Again, some convenient stuff can be created to make your daily life easier. See chapter “Single Page Apps” for more details of possible implementations.
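The two decisions above can be sketched in a few lines of plain JavaScript. This is a minimal sketch under assumptions, not the router of any particular library: the route paths, the tag names such as app-home and app-not-found, the outlet id, and the helper names resolveRoute and navigate are all made up for illustration. The document is passed in as a parameter only so that the logic can be tried outside a browser.

```javascript
// Hypothetical router configuration: maps a route to a component tag name.
const routes = {
  '/': 'app-home',
  '/about': 'app-about',
  '/contact': 'app-contact',
};

// Turns a location hash such as "#/about" into a tag name.
// Unknown routes fall back to the hypothetical 'app-not-found' component.
function resolveRoute(hash, config = routes) {
  const path = hash.replace(/^#/, '') || '/';
  return Object.prototype.hasOwnProperty.call(config, path)
    ? config[path]
    : 'app-not-found';
}

// Replaces the content of the outlet with a fresh instance of the component.
function navigate(hash, outlet, doc = globalThis.document) {
  const element = doc.createElement(resolveRoute(hash));
  outlet.replaceChildren(element);
  return element;
}

// In a browser, wire the router to hash changes.
if (typeof window !== 'undefined') {
  const outlet = document.querySelector('#outlet'); // assumed <div id="outlet">
  const render = () => outlet && navigate(window.location.hash, outlet);
  window.addEventListener('hashchange', render);
  render();
}
```

With this in place, a plain hyperlink such as href="#/about" is enough to swap the content of the outlet; no page reload takes place.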

The HTML 5 API

The HTML 5 API is amazingly powerful and covers a wide range of features. All existing frameworks and libraries, without exception, are built on top of this API. The advantage of using a certain framework is primarily that you get a simplified or reduced view, a more elegant API style, or even more robust code thanks to additional error handling. These are all good reasons to use a framework or library, aren’t they?

Imagine you know all these APIs. You could then avoid some of the libraries and probably a whole framework. The resulting code is smaller, faster, and easier to maintain. Learning the HTML 5 APIs is essential for web developers nowadays.

The Template Language

A template language simplifies the creation of forms. It’s not enforced; you can of course use the basic API and pure HTML. In complex applications you’ll see that there is a lot of repeated code. There are many template languages available, and I’ll present a few of them so you can compare and choose freely.

Smart Decorators

Instead of splitting definition and registration, the component itself carries all the necessary information as metadata. Decorators are a feature of the TypeScript compiler, and eventually they will become part of the ECMAScript standard.

This coding style supports the “separation of concerns” principle and is easy to implement. A final solution could look like this:

    @CustomElement('app-main')
    export class MainComponent extends BaseComponent<{}> {

      protected render() {
        return (<h1>Demo</h1>);
      }

    }

The decorator CustomElement is called when the class is defined. It receives the underlying constructor function and, by wrapping it, can reach the instances as well. Here you can manipulate the code further (at runtime), for example by adding hidden properties. Other code fragments may access these properties and act according to these hidden instructions. In the above example some external code may see this and take it as a “please register me” instruction. The advantage here is that the component developer doesn’t need to think about such infrastructure stuff, and the code is much smaller and easier to read.
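How a decorator and the “please register me” code might cooperate can be sketched as follows. This is not the @nyaf implementation, only an illustration: the symbol TAG_NAME and the helper registerAll are hypothetical, and the decorator is applied as a plain function call so that the sketch runs as ordinary JavaScript without a compiler.

```javascript
// Hypothetical key under which the decorator stores the tag name.
const TAG_NAME = Symbol('tagName');

// Decorator factory: returns a function that receives the class itself.
// That function runs once, when the class is defined.
function CustomElement(tagName) {
  return function (target) {
    // The "hidden property": not enumerable and not writable by default.
    Object.defineProperty(target, TAG_NAME, { value: tagName });
    return target;
  };
}

// Infrastructure code: reads the hidden property and registers the class.
// `registry` defaults to the browser's customElements object.
function registerAll(classes, registry = globalThis.customElements) {
  for (const cls of classes) {
    const tagName = cls[TAG_NAME];
    if (tagName && !registry.get(tagName)) {
      registry.define(tagName, cls);
    }
  }
}

// Without decorator syntax, the decorator is applied as a function call.
// In TypeScript, writing @CustomElement('app-main') above the class has
// roughly the same effect. In a browser the class would extend HTMLElement.
const MainComponent = CustomElement('app-main')(class MainComponent {});
```

The component author only writes the decorator line; a single call such as registerAll([MainComponent]) somewhere in the start-up code takes care of the registration.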

TypeScript

TypeScript is not covered in this book. It is, however, the language used to write components and related libraries. It’s not strictly necessary for writing Web Components, but it’s a strong tool in the developer’s toolset. The ability to transform JSX has already been explained, and if you don’t use TypeScript you have to replace one tool with another. So avoiding it gains nothing, while embracing it brings a bunch of advantages.

One of the main reasons for its success is that valid JavaScript is valid TypeScript. Any ES2015 example shown here will be accepted by the TypeScript transpiler. What’s added is the ability to use features from newer JavaScript versions such as ECMAScript 2020 today, even if browsers do not fully support them yet. And it adds types that make code less error-prone. In short:

TypeScript = JavaScript + Type System

TypeScript is compatible with ECMAScript 2018 and emits the helper code needed to run newer syntax on older language targets.

WebPack

WebPack is an open-source module bundler, primarily for JavaScript, but it can transform front-end assets like HTML, CSS, and images if the corresponding loaders are included. WebPack takes modules with dependencies and generates static assets representing those modules. The dependencies and the generated dependency graph allow web developers to take a modular approach to web application development. It can be used from the command line or configured using a configuration file named webpack.config.js. This file is used to define rules, plugins, etc., for a project.

WebPack is highly extensible via rules, which allow developers to write custom tasks that they want to perform when bundling files together. Node.js is required for using WebPack; it’s a command line tool that runs at development time.

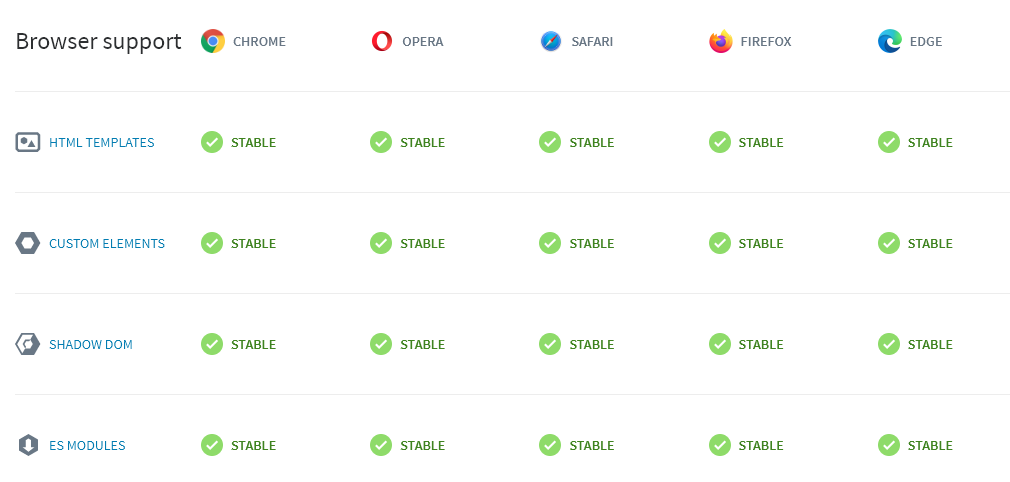

1.3 Compatibility

For every new technology it takes some time until all browsers and tools have a fully working implementation. The great news about the year 2020 is that all browsers (see Figure 1-3) now have full support and you rarely need a polyfill.

1.4 Other Libraries

Apart from the one I feature in this book, @nyaf, there are a few others that I find worth mentioning here. They differ in focus and quality. Which one suits you better depends on your project and feature requirements. It’s also a good idea to analyse them, learn how things work internally, and consider going with code that you own yourself. The following list is pulled from webcomponents.org:

- Hybrids is a UI library for creating web components with simple and functional API. The library uses plain objects and pure functions for defining custom elements, which allow very flexible composition. It provides built-in cache mechanism, template engine based on tagged template literals, and integration with developer tools.

- LitElement uses lit-html to render into the element’s Shadow DOM and adds API to help manage element properties and attributes. LitElement reacts to changes in properties and renders declaratively using lit-html.

- Polymer is a web component library built by Google, with a simple element creation API. Polymer offers one- and two-way data binding into element templates, and provides shims for better cross-browser performance.

- Skate.js is a library built on top of the W3C web component specs that enables you to write functional and performant web components with a very small footprint. Skate is inherently cross-framework compatible. For example, it works seamlessly with – and complements – React and other frameworks.

- Slim.js is a lightweight web component library that provides extended capabilities for components, such as data binding, using ES2015 native class inheritance. This library is focused for providing the developer the ability to write robust and native web components without the hassle of dependencies and an overhead of a framework.

- Stencil is an open source compiler that generates standards-compliant web components.

2 Making Components

We can create custom HTML elements, described by a class, with its own methods and properties, events and so on. Once a custom element is defined, we can use it on par with built-in HTML elements. These elements are called Web Components.

2.1 Basics

That’s great, as the HTML vocabulary is rich, but not infinite. There are no <easy-tabs>, <sliding-carousel>, or <beautiful-upload> elements. Just think of any other tag we might need.

We can define them with a special class, and then use them as if they had always been a part of HTML.

There are two kinds of custom elements:

- Autonomous custom elements – custom elements, extending the abstract HTMLElement class.

- Customized built-in elements – extending built-in elements, like a customized button, based on HTMLButtonElement etc.

First I’ll cover autonomous elements, and then move to customized built-in ones.

To create a custom element, we need to tell the browser several details about it: how to show it, what to do when the element is added to or removed from the page, etc. That’s done by making a class with special methods. That’s easy, as there are only a few methods, and all of them are optional.

A sketch with the full list is shown in Listing 2-1:

    class MyElement extends HTMLElement {
      constructor() {
        super();
        // element created
      }

      connectedCallback() {
        this.innerHTML = '<h1>Hello Web Component</h1>';
        // called when the element is added to the document
      }

      disconnectedCallback() {
        // called when the element is removed from the document
      }

      static get observedAttributes() {
        return [
          /* array of attribute names to monitor for changes */
        ];
      }

      attributeChangedCallback(name, oldValue, newValue) {
        // called when one of attributes listed above is modified
      }

      adoptedCallback() {
        // called when moved to a new document
      }

      // there can be other element methods and properties
    }

All methods shown are optional; implement only those you really need. Be aware that under certain circumstances the methods might be called multiple times.

After defining the component, we need to register it as an element. We need to let the browser know that <my-element> is served by our new class.

customElements.define('my-element', MyElement);

Now for any HTML elements with the tag <my-element> an instance of MyElement is created, and the aforementioned methods are called. We also can use document.createElement('my-element') to create the element through an HTML 5 API call and attach the element later to the DOM.

A custom element name must have a hyphen -, e.g. my-element and super-button are valid names, but myelement is not.
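The naming rule can be approximated with a small check. The function name isValidElementName is hypothetical and the check is deliberately simplified: the HTML specification also permits many non-ASCII characters, which this sketch rejects. It does cover the hyphen requirement, the ban on uppercase ASCII letters, and the handful of hyphenated names that the specification reserves.

```javascript
// Names that contain a hyphen but are reserved by the HTML specification.
const RESERVED_NAMES = new Set([
  'annotation-xml', 'color-profile', 'font-face', 'font-face-src',
  'font-face-uri', 'font-face-format', 'font-face-name', 'missing-glyph',
]);

// Simplified, ASCII-only check: the name starts with a lowercase letter,
// contains at least one hyphen, and has no uppercase letters.
function isValidElementName(name) {
  const pattern = /^[a-z][a-z0-9._]*-[a-z0-9._-]*$/;
  return pattern.test(name) && !RESERVED_NAMES.has(name);
}

console.log(isValidElementName('my-element'));   // true
console.log(isValidElementName('super-button')); // true
console.log(isValidElementName('myelement'));    // false, no hyphen
console.log(isValidElementName('My-Element'));   // false, uppercase letters
console.log(isValidElementName('font-face'));    // false, reserved name
```

In a browser, customElements.define performs this validation itself and throws an error for an invalid name, so a check like this is mainly useful in build tools or tests.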

To load and use the component you need an HTML page. This could look as simple as shown in Listing 2-2.

    <!DOCTYPE html>
    <html lang="en">
      <head>
        <meta charset="UTF-8" />
        <meta name="viewport"
              content="width=device-width, initial-scale=1.0" />
        <title>Document</title>
        <script src="component.js"></script>
      </head>
      <body>
        <my-element></my-element>
      </body>
    </html>

It’s recommended to wait for the document ready state before applying the registration. This might or might not change the behavior – it depends on the inner construction of the component’s content. But waiting for the ready event seems to fix some common issues and has rarely any disadvantages.
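One possible way to defer the registration is sketched below. The helper name defineWhenReady is made up for this example. The document and the registry default to the browser globals; they are parameters only so that the sketch can be exercised outside a browser.

```javascript
// Registers a custom element once the document has been parsed.
function defineWhenReady(name, ctor,
                         doc = globalThis.document,
                         registry = globalThis.customElements) {
  const define = () => {
    // Guard against a second registration of the same name.
    if (!registry.get(name)) {
      registry.define(name, ctor);
    }
  };
  if (doc.readyState === 'loading') {
    // Parsing is still in progress: wait for the DOMContentLoaded event.
    doc.addEventListener('DOMContentLoaded', define, { once: true });
  } else {
    // The document is already parsed ('interactive' or 'complete').
    define();
  }
}

// Browser usage, with MyElement being the class from Listing 2-1:
// defineWhenReady('my-element', MyElement);
```

The check of readyState matters because a script that is loaded late would otherwise wait for an event that has already fired.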

A First Example

For example, there already exists a <time> element in HTML, for date and time information. But it doesn’t do any formatting by itself.

Let’s create a <time-format> element that displays the time in a nice, language-aware format, as shown in Listing 2-3.

    class TimeFormat extends HTMLElement {

      connectedCallback() {
        let date = new Date(this.getAttribute('datetime')
                            || Date.now());

        this.innerHTML = new Intl.DateTimeFormat('default', {
          year: this.getAttribute('year') || undefined,
          month: this.getAttribute('month') || undefined,
          day: this.getAttribute('day') || undefined,
          hour: this.getAttribute('hour') || undefined,
          minute: this.getAttribute('minute') || undefined,
          second: this.getAttribute('second') || undefined,
          timeZoneName: this.getAttribute('time-zone-name')
                        || undefined,
        }).format(date);
      }
    }

    customElements.define('time-format', TimeFormat);

To use it, the following piece of HTML is necessary (Listing 2-4).

    <time-format
      datetime="2020-04-13"
      year="numeric"
      month="long"
      day="numeric"
      hour="numeric"
      minute="numeric"
      second="numeric"
      time-zone-name="short"
    ></time-format>

The class has only one method, connectedCallback(). The browser calls it when a <time-format> element is added to the document, and it uses the built-in Intl.DateTimeFormat date formatter, well supported across browsers, to show a nicely formatted time. We need to register our new element with customElements.define(tag, class). Then we can use it everywhere. The output is shown in Figure 2-1.
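The formatting part can be tried on its own, without any element. The following sketch uses a hypothetical helper formatDate that takes a plain object in place of the element’s attributes. Unlike the listing, it fixes the locale to en-US and the time zone to UTC; both are assumptions made here so that the result does not depend on the machine.

```javascript
// Formats a date using only the options that are actually set.
// Options with the value undefined are ignored by Intl.DateTimeFormat,
// which is why the pattern `value || undefined` works for missing
// attributes (getAttribute returns null for those).
function formatDate(date, attributes, locale) {
  return new Intl.DateTimeFormat(locale, {
    year: attributes.year || undefined,
    month: attributes.month || undefined,
    day: attributes.day || undefined,
    timeZone: 'UTC', // fixed so the output is independent of the machine
  }).format(date);
}

// Date-only ISO strings are interpreted as UTC midnight.
const date = new Date('2020-04-13');

console.log(formatDate(date,
  { year: 'numeric', month: 'long', day: 'numeric' }, 'en-US'));
// → April 13, 2020

console.log(formatDate(date,
  { year: 'numeric', month: 'long', day: null }, 'en-US'));
// → April 2020
```

Passing 'default' as the locale, as the listing does, lets the browser fall back to the user’s own locale instead.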

Observe Unset Elements

If the browser encounters any <time-format> elements before customElements.define has been called, it will not produce an error. The element is still unknown, just like any non-standard tag. It will render into nothing. That’s hard to spot. To make it visible we can add a style that uses the CSS pseudo-class selector :not(:defined).

When customElements.define is called, the element is “upgraded”. A new instance of the TimeFormat class is created for each element, and the connectedCallback method is called. The element then becomes :defined.

A very helpful stylesheet to achieve the visibility of not yet upgraded components is shown in Listing 2-5:

    <style>
      :not(:defined) {
        display: block;
        width: 150px;
        border-bottom: 2px dotted red;
        text-align: center;
      }
      :not(:defined):before {
        content: "unknown element !";
        color: red;
      }
    </style>

In the example the JavaScript part is missing (to simulate an error) and hence the style makes the element visible. The result is shown in Figure 2-2:

Custom Elements API

To get information about custom elements, there are two helpful methods:

- customElements.get(name) – returns the class for a custom element with the given name

- customElements.whenDefined(name) – returns a promise that resolves (without value) when a custom element with the given name becomes upgraded.
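A short sketch of how these two methods might be used follows. The helper names describeElement and onceDefined are invented for this example. The registry defaults to the browser’s customElements object and is a parameter only so that the code can be tried outside a browser.

```javascript
// Reports whether a tag name is already served by a class.
function describeElement(name, registry = globalThis.customElements) {
  const ctor = registry.get(name); // undefined if the name is not registered
  return ctor
    ? `<${name}> is served by class ${ctor.name}`
    : `<${name}> is not defined yet`;
}

// Runs the callback once the element has been defined. The class is
// looked up with get(), so the sketch does not rely on the value the
// promise resolves with.
function onceDefined(name, callback, registry = globalThis.customElements) {
  return registry.whenDefined(name).then(() => callback(registry.get(name)));
}

// Browser usage:
// onceDefined('time-format', () => {
//   console.log(describeElement('time-format'));
// });
```

Waiting with whenDefined is useful when one script depends on a component that another script registers later.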

It’s important to start the rendering in connectedCallback, not in the constructor. In the example above, the element content is rendered (created) that way. The constructor is not suitable: when it is called, it’s still too early. The element is created, but the browser may not yet have processed and assigned the attributes at this stage. For instance, calls to getAttribute would return null.

An additional reason is performance. In the later stages of the render process some code might decide not to render the element or to replace the rendered content with some message. Imagine a grid, which might become huge, but due to some attribute setting it’s replaced by a “too much data” message. Processing all attributes first and rendering afterwards makes sense.

The connectedCallback method triggers when the element is added to the document. Not just appended to another element as a child, but actually becomes a part of the page. So we can build detached DOM, create elements and prepare them for later use. They will only be actually rendered when they make it into the page. In the first examples that’s always the case, because the element is written directly into the page. However, in a more dynamic environment, such as a Single Page App (SPA), this would not be the same.

2.2 Observing Attributes

In the current implementation of <time-format>, further attribute changes don’t have any effect after the element is rendered. That’s strange for an HTML element. Usually, when we change an attribute, like href of an anchor element, we expect the change to be immediately visible. All kinds of effects and animations need this behavior.

We can observe attributes by providing their list in the static getter observedAttributes. It’s static (defined on the class, not on the prototype), because it’s a global definition that applies once to all instances of the element. For such attributes, the method attributeChangedCallback is called when they are modified. For performance reasons, it doesn’t trigger for any other attribute.

Listing 2-6 shows a new <time-format> version that auto-updates when attributes change.

class TimeFormat extends HTMLElement {

  render() {
    let date = new Date(this.getAttribute('datetime')
      || Date.now());

    this.innerHTML = new Intl.DateTimeFormat("default", {
      year: this.getAttribute('year') || undefined,
      month: this.getAttribute('month') || undefined,
      day: this.getAttribute('day') || undefined,
      hour: this.getAttribute('hour') || undefined,
      minute: this.getAttribute('minute') || undefined,
      second: this.getAttribute('second') || undefined,
      timeZoneName: this.getAttribute('time-zone-name')
        || undefined,
    }).format(date);
  }

  connectedCallback() {
    if (!this.rendered) {
      this.render();
      this.rendered = true;
    }
  }

  static get observedAttributes() {
    return ['datetime', 'year', 'month', 'day', 'hour',
      'minute', 'second', 'time-zone-name'];
  }

  attributeChangedCallback(name, oldValue, newValue) {
    this.render();
  }

}

customElements.define("time-format", TimeFormat);

The usage doesn’t look much different from the first example.

<time-format id="elem"

hour="numeric"

minute="numeric"

second="numeric">

</time-format>

However, when you change one of the observed attributes in your code, the element re-renders automatically:

const elem = document.querySelector('time-format');

setInterval(

() => elem.setAttribute('datetime', new Date()),

1000

);

The rendering logic is moved to the render() helper method. We call it once when the element is inserted into the document. After a change to an attribute listed in observedAttributes, the method attributeChangedCallback triggers and re-renders the element. The call to the render method must be implemented in the component. At first glance there is not much magic here, and in comparison with frameworks such as Angular or React it might feel primitive. But the ability to control the rendering and to have a clear cycle makes the implementation handy and straightforward.

Attribute Data

Through attributes, the component can handle only scalar values. That means you’re limited to string, boolean, and number. Anything else runs through toString() and may end up as something like [object Object] or, in the case of null, as the string “null”. That’s a lot weaker than the binding we can see in Angular, for example. Of course, a private implementation can detect such types and use JSON.stringify and JSON.parse. That is, indeed, the slowest but most robust way to serialize complex data.
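The JSON round trip mentioned above can be sketched as follows. This is only an illustration under stated assumptions: the helper names toAttribute and fromAttribute are hypothetical, and the fallback to the raw string for non-JSON text is one possible design choice that keeps plain HTML values such as content="Default String Content" usable.

```javascript
// Hypothetical helpers, not part of any library.

// Serialize any JSON-compatible value into an attribute string.
function toAttribute(value) {
  return JSON.stringify(value);
}

// Read a value back; getAttribute() returns null for a missing attribute.
function fromAttribute(text) {
  if (text === null) {
    return undefined;
  }
  try {
    return JSON.parse(text);
  } catch {
    // Not valid JSON, for example a plain string written directly in HTML
    return text;
  }
}

// The default conversion loses the object's content
console.log(String({ type: 'object' }));      // [object Object]
console.log(toAttribute({ type: 'object' })); // {"type":"object"}
```

A component could call toAttribute before setAttribute and fromAttribute inside attributeChangedCallback. Note that text which happens to be valid JSON, such as "42", comes back as a number with this approach.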

Another way is to ditch observed attributes completely and use a programmatic way. This would, however, make the component accessible by code only. That’s a serious limitation indeed, but let’s explore an example (Listing 2-7) anyway to give you the idea.

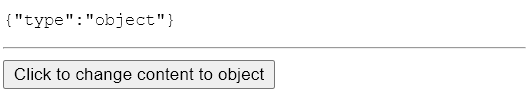

1 class ObjectComponent extends HTMLElement {

2

3 render() {

4 this.innerHTML = null;

5 const pre = document.createElement('pre');

6 pre.innerHTML = JSON.stringify(this.content);

7 this.insertAdjacentElement('afterbegin', pre);

8 }

9

10 connectedCallback() {

11 this.render();

12 }

13

14 static get observedAttributes() {

15 return ['content'];

16 }

17

18 attributeChangedCallback(name, oldValue, newValue) {

19 this.content = newValue;

20 this.render();

21 }

22

23 set content(value) {

24 this._content = value;

25 this.render();

26 }

27

28 get content() {

29 return this._content;

30 }

31

32 }

33

34 document.addEventListener('readystatechange', (docEvent) => {

35 if (document.readyState === 'complete') {

36 customElements.define("obj-element", ObjectComponent);

37

38 document.querySelector('button')

39 .addEventListener('click', (e) => {

40 document.querySelector('obj-element').content = {

41 type: 'object'

42 };

43 });

44 }

45 });

The key is the custom property content, which line 19 uses for attribute values as well. In the click handler (lines 40 to 42) we assign an object to it, and the setter calls the render method immediately. That bypasses the conversion to a string and lets JSON.stringify work as expected. Figure 2-3 shows the expected result.

The observation is still present to allow a static value for initialization (see Listing 2-8).

1 <obj-element content="Default String Content"></obj-element>

2 <hr>

3 <button type="button" >Click to change content to object</button>

Discussing the Options

To monitor external data without relying on attribute observation, you may also consider using a Proxy object and, optionally, the MutationObserver class. Both are native APIs: Proxy is part of ES2015, and MutationObserver is part of the DOM API. A Proxy gives you the ability to intercept access to an object’s properties. Whenever some code outside accesses a property, a callback is called. This could be used to trigger the render method.
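To illustrate the interception idea in isolation, here is a minimal sketch that wraps a plain object instead of a component. The function name observe and the onChange callback are hypothetical; in a component, the callback would be the place to trigger the render method.

```javascript
// Hypothetical helper: wraps a plain object and reports every changed property.
function observe(target, onChange) {
  return new Proxy(target, {
    set(obj, prop, value) {
      const changed = obj[prop] !== value;
      const ok = Reflect.set(obj, prop, value);
      if (ok && changed) {
        onChange(prop, value); // a component could call this.render() here
      }
      return ok;
    },
  });
}

const rendered = [];
const state = observe({ content: 'text' }, (prop) => rendered.push(prop));
state.content = { type: 'object' }; // triggers the callback
state.content = state.content;      // same reference, no callback
console.log(rendered);              // [ 'content' ]
```

The value arrives in the set trap with its original type, which is the property that makes a proxy attractive compared with attributes.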

The MutationObserver Type

A MutationObserver, on the other hand, monitors the DOM itself and calls a callback if something changes. However, this callback runs in a microtask and is not synchronous. That means the observed attribute may already hold a different value when the callback runs. To avoid the risk of non-deterministic behavior, the MutationRecord instances that the callback receives do not give access to the new value. Of course, there are several ways to patch the prototype or add an interceptor, but it all feels hackish and not very reliable. The biggest risk is that the API changes internally without any notification and the code eventually fails out of nowhere after a simple browser update. I made some experiments with this and never found a satisfying solution. In case you want to dig deeper into this, the following code snippet shows the general usage of such an observer:

document.addEventListener('readystatechange', (docEvent) => {
  if (document.readyState === 'complete') {

    const observer = new MutationObserver(mutations => {
      console.log('mutations', mutations);
    });

    customElements.define("obj-element", ObjectComponent);

    document.querySelector('button')
      .addEventListener('click', (e) => {
        // observed by MutationObserver
        document.querySelector('obj-element').setAttribute('content',
          { type: 'object' });
      });
    // just observe the attributes
    observer.observe(
      document.querySelector('obj-element'),
      { attributes: true }
    );
  }
});
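Since a record carries only the attribute name and, on request, the old value, a callback typically reads the current value from the target itself. The following sketch shows that step as a pure function so it can be tried without a browser; the name summarizeAttributeChanges is hypothetical, while type, attributeName, oldValue and target are the regular MutationRecord properties.

```javascript
// Hypothetical helper: turns MutationRecord objects into plain summaries.
function summarizeAttributeChanges(records) {
  return records
    .filter((record) => record.type === 'attributes')
    .map((record) => ({
      name: record.attributeName,
      // only filled when observing with { attributeOldValue: true }
      oldValue: record.oldValue,
      // the record carries no new value, so read the current one
      newValue: record.target.getAttribute(record.attributeName),
    }));
}

// Browser usage (assumes an <obj-element> exists in the page):
// const observer = new MutationObserver((records) =>
//   console.log(summarizeAttributeChanges(records)));
// observer.observe(document.querySelector('obj-element'),
//   { attributes: true, attributeOldValue: true });
```

Keep in mind that the value read this way is the value at callback time, which is not necessarily the value that caused the record.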

Proxy

A Proxy is pure JavaScript and handles just an object. But a Web component is an object, so this sounds like a feasible solution. However, the amount of boilerplate code is significant. In a real-life scenario you would move this to a base class, but the example nonetheless shows the weakness of the HTML 5 API (and its power, too).

1 class ObjectComponent extends HTMLElement {

2

3 constructor() {

4 super();

5 this.proxy = new Proxy(

6 this, {

7 get: () => { return this.content; },

8 set: (target, prop, value) => {

9 this._content = value;

10 if (ObjectComponent.observedAttributes.includes(prop)) {

11 this.setAttribute(prop, JSON.stringify(value));

12 }

13 return true;

14 }

15 });

16 }

17

18 render() {

19 this.innerHTML = null;

20 const pre = document.createElement('pre');

21 pre.innerHTML = JSON.stringify(this.content);

22 this.insertAdjacentElement('afterbegin', pre);

23 }

24

25 connectedCallback() {

26 this.render();

27 }

28

29 static get observedAttributes() {

30 return ['content'];

31 }

32

33 attributeChangedCallback(name, oldValue, newValue) {

34 if (!oldValue) {

35 this.setAttribute('content', JSON.stringify(newValue));

36 }

37 if (oldValue !== newValue) {

38 this.render();

39 }

40 }

41

42 set content(value) {

43 this.proxy.content = value;

44 }

45

46 get content() {

47 return JSON.parse(this.getAttribute('content'));

48 }

49

50 }

51

52 document.addEventListener('readystatechange', (docEvent) => {

53 if (document.readyState === 'complete') {

54

55 customElements.define("obj-element", ObjectComponent);

56

57 document.querySelector('button')

58 .addEventListener('click', (e) => {

59 document.querySelector('obj-element').content = {

60 type: 'object'

61 };

62 });

63

64 }

65 });

Again, the object is assigned through programmatic access:

document.querySelector('obj-element').content = ...

Because the attribute observation is still operational, the external access from HTML works too. In that respect it does exactly what’s expected. The external access and the programmatic access both write into the property content. That’s observed by the proxy handler’s setter path. Here we check whether it is really an observed attribute (line 10) and trigger the regular attribute observer (line 11). The difference is that the value received by the proxy is still an object (while the internal API would have called toString first and delivered [object Object]). Now we can transform it into the stringified version and store this in the attribute. The actual render code expects an object (line 21). To make this work transparently, the getter method at the end (line 47) uses JSON.parse to transform the string back into an actual object.

2.3 Rendering Order

When the browser’s HTML parser builds the DOM, elements are processed one after another, parents before children. Imagine we have something like that:

<outer-element><inner-element></inner-element></outer-element>

Then <outer-element> is created and connected to the DOM first, and then <inner-element>.

That has important consequences for custom elements. For example, if a custom element tries to access innerHTML in connectedCallback, it gets nothing:

customElements.define(
  'user-info',
  class extends HTMLElement {
    connectedCallback() {
      alert(this.innerHTML);
    }
  }
);

<user-info>Joerg</user-info>

If you run it, the alert is empty. That’s because there are no children at that stage; the DOM is unfinished. The HTML parser has connected the custom element <user-info> and is going to proceed to its children, but they’re not there yet.

If we want to pass information to custom elements, we can use attributes. They are available immediately.

Delay Access

If we really need the child content, we can defer access to it with a zero-delay setTimeout.

<script>
  customElements.define('user-info', class extends HTMLElement {

    connectedCallback() {
      setTimeout(() => alert(this.innerHTML));
    }

  });
</script>

<user-info>Joerg</user-info>

Now the alert shows “Joerg”, because we run it asynchronously and the HTML parsing is complete. Of course, this solution is not perfect. If nested custom elements also use setTimeout to initialize themselves, then they queue up: the outer setTimeout triggers first, and then the inner one. And that’s simply the wrong order.

Let’s demonstrate that with an example:

<script>
  customElements.define('user-info', class extends HTMLElement {
    connectedCallback() {
      console.log(`${this.id} connected.`);
      setTimeout(() => console.log(`${this.id} initialized.`));
    }
  });
</script>

<user-info id="outer">
  <user-info id="inner"></user-info>
</user-info>

Output order:

- outer connected

- inner connected

- outer initialized

- inner initialized

We can clearly see that the outer element finishes initialization before the inner one.

There’s no built-in callback that triggers after nested elements are ready. If needed, we can implement such a thing on our own. For instance, inner elements can dispatch events like initialized, and outer ones can listen and react to them.
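The event idea can be sketched without a browser by using plain EventTarget objects as stand-ins for the elements. Everything here is an assumption for illustration: the class names Inner and Outer, the init method, and the ready flag are hypothetical, and the outer object subscribes to each child directly, whereas real elements could rely on a bubbling event instead.

```javascript
// Stand-ins for custom elements; real components would extend HTMLElement.
class Inner extends EventTarget {
  init() {
    // report that this part has finished its own setup
    this.dispatchEvent(new Event('initialized'));
  }
}

class Outer extends EventTarget {
  constructor(children) {
    super();
    let pending = children.length;
    this.ready = pending === 0;
    for (const child of children) {
      child.addEventListener('initialized', () => {
        pending -= 1;
        if (pending === 0) {
          // all children are done, now the outer part may finish
          this.ready = true;
          this.dispatchEvent(new Event('initialized'));
        }
      }, { once: true });
    }
  }
}

const first = new Inner();
const second = new Inner();
const outer = new Outer([first, second]);
first.init();
const readyAfterFirst = outer.ready; // still false, one child is missing
second.init();
const readyAfterSecond = outer.ready; // true, both children reported
```

The outer object dispatches its own initialized event, so the same pattern nests to any depth.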

Introducing a Life Cycle

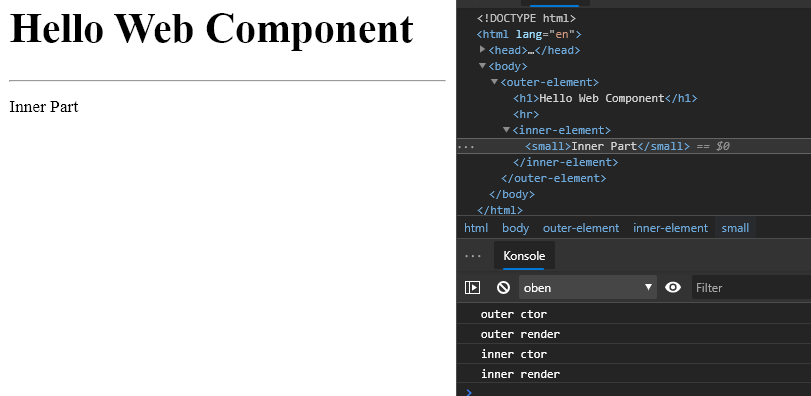

The current implementation of a Web Component is very simple. That makes the first steps easy, but some additional implementation effort is required. One idea to solve the issues with the last example is to introduce a loading callback. Once the render stage has passed, the component fires an event or, even better, resolves a Promise. The outer component can wait for this to happen and proceed once the children confirm they are done. First, here is an example that does not yet work as intended:

class OuterElement extends HTMLElement {
  constructor() {
    super();
    console.log('outer ctor');
  }

  connectedCallback() {
    console.log('outer render');
    this.innerHTML = '<h1>Hello Web Component</h1>' +
      this.innerHTML +
      '<hr>';
  }

}

class InnerElement extends HTMLElement {
  constructor() {
    super();
    console.log('inner ctor');
  }

  connectedCallback() {
    console.log('inner render');
    this.innerHTML = '<small>Inner Part</small>';
  }

}

customElements.define('outer-element', OuterElement);
customElements.define('inner-element', InnerElement);

Let’s assume a piece of HTML like this:

<outer-element>
  <inner-element></inner-element>
</outer-element>

The expected render output would be this one:

<h1>Hello Web Component</h1><small>Inner Part</small><hr>

If you execute this “as is”, the result is wrong, as shown in Figure 2-4:

Look at the DOM: the <inner-element> comes after the <hr>, which is not what we expected.

So, how could we make the outer component wait for all the children? Attaching events to the component itself wouldn’t be an option, because the content might be simple static text and a text node can’t trigger events.

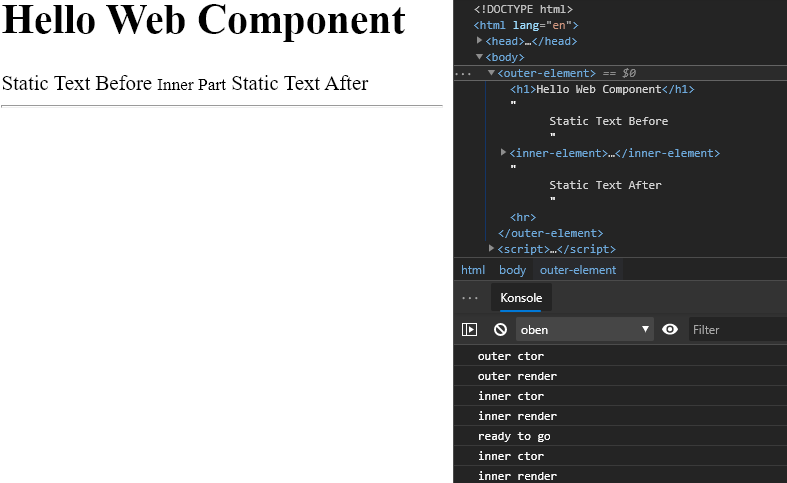

Waiting for other custom elements would be easy. We could just loop through all child elements, check whether they have a specific state, and wait. Also, to make it easier to handle, the render method could be async. But in the case of regular HTML and static text this will not work. One proposal is to set an explicit trigger for the render part:

<outer-element>
  Static Text Before
  <inner-element></inner-element>
  Static Text After
  <content-done />
</outer-element>

The element <content-done /> is such a trigger. Once it is connected, it calls the render method of its parent element. The whole code is in the next example (Listing 2-11).

class OuterElement extends HTMLElement {
  constructor() {
    super();
    console.log('outer ctor');
  }

  async connectedCallback() {
    console.log('outer render');
  }

  render() {
    console.log('ready to go');
    this.innerHTML = '<h1>Hello Web Component</h1>' +
      this.innerHTML +
      '<hr>';
  }

}

class InnerElement extends HTMLElement {
  constructor() {
    super();
    console.log('inner ctor');
  }

  connectedCallback() {
    console.log('inner render');
    this.innerHTML = '<small>Inner Part</small>';
  }

}

customElements.define('outer-element', OuterElement);
customElements.define('inner-element', InnerElement);

// the currently needed utility
customElements.define('content-done', class extends HTMLElement {
  connectedCallback() {
    const {parentElement} = this;
    parentElement.removeChild(this);
    if (parentElement.render) {
      parentElement.render();
    }
  }
});

There are two critical parts here. First, the outer-most element must be prepared to receive a call. In the example shown above it’s the custom render method. Second, the trigger element looks a bit awkward and requires context knowledge from the template developer; a thing we usually try to avoid.

If you watch the console output carefully, you see that the inner part is called twice. First, there is the immediate call from the render engine once the component is registered. Second, there is the call caused by the custom trigger element: the outer render method reassigns innerHTML, which creates and connects a fresh <inner-element>. While this seems irrelevant in the demo, it could bring a serious performance penalty in a huge and more complex application.

If you have custom components only, this render approach is much more feasible.

<outer-element>

<inner-element></inner-element>

</outer-element>

Here the <inner-element> could call render, and if that happens for all elements it will work smoothly. You just have to test whether the content exists to let the inner-most element render itself immediately. The @nyaf thin library shown in the appendix does exactly this, along with other libraries such as Polymer or Lightning.

An even better way is to wait until the elements are available. That can be done by just delaying the component registration until the document is ready.

if (document.readyState === 'interactive'
  || document.readyState === 'complete') {
  customElements.define('outer-element', OuterElement);
  customElements.define('inner-element', InnerElement);
} else {
  document.addEventListener('DOMContentLoaded', _ => {
    customElements.define('outer-element', OuterElement);
    customElements.define('inner-element', InnerElement);
  });
}

Here the code looks for an already available ready state or the finishing event.

This works very well for an application with a static appearance. However, if you have components loaded dynamically, as it happens in a Single Page App with a router logic, this will not work. The final (and best) solution depends on the kind of app you write and may change over time.

2.4 Customized Built-in Elements

New elements that we create, such as <time-format>, don’t have any associated semantics. They are unknown to search engines, and accessibility devices can’t handle them. Such things can be important, though. A search engine would be interested to know that we actually show a time. And if we’re making a special kind of button, why not reuse the existing <button> functionality?

We can extend and customize built-in HTML elements by inheriting from their classes. For example, buttons are instances of HTMLButtonElement. Extend HTMLButtonElement with a new class:

class HelloButton extends HTMLButtonElement {

/* custom element methods */

}

Provide a third argument to customElements.define, that specifies the type to extend by using the tag’s name:

customElements.define('hello-button', HelloButton, {extends: 'button'});

There may be different tags that share the same DOM-class, that’s why specifying extends is needed. To get the custom element, insert a regular <button> tag, but add the is attribute to it like this:

<button is="hello-button">...</button>

Here’s a full example:

<script>
  // The button that says "hello" on click
  class HelloButton extends HTMLButtonElement {
    constructor() {
      super();
      this.addEventListener('click', () => alert("Hello!"));
    }
  }

  customElements.define('hello-button',
    HelloButton,
    {extends: 'button'});
</script>

<button is="hello-button">Click me</button>

<button is="hello-button" disabled>Disabled</button>

The new button extends the built-in one. That means it keeps the same styles and standard features like the disabled attribute.

If you prefer using the API, especially the document.createElement method, then you’ll find a second parameter that is an object with just one property, is.

let listComponent = document.createElement('ul', { is: 'app-list' });

The component itself must be registered as before, but now with the extension instruction:

customElements.define('app-list', ListComponent, { extends: "ul" });

That way you can modify any existing element and there is no need to create custom tags from scratch.

<ul is="app-list"></ul>

2.5 Advantage of TypeScript

In the previous examples I used only pure ES2015 code. The TypeScript syntax would be similar. However, using TypeScript’s features could make it even easier to handle certain tasks. One of the important parts is the handling of attributes. As already shown in the previous examples, the observedAttributes method is responsible for triggering the observation. Accessing those attributes means calling methods like this.getAttribute('name'). And here lies the problem: the usage of strings is error-prone. It would be much better if we could use named properties, like this.name.

Using Generics

The key is a generic. In TypeScript you can bind a concrete type to a type placeholder to achieve this. However, a full implementation is quite tricky, and it would be nice if we could handle this internally and not bother the developer with all the details. Let’s work this out step by step.

The component shall finally look like this:

class TimeFormat extends BaseComponent<TimeProperties> {

  constructor() {
    super();
  }

  render() {
    let date = new Date(this.data.datetime
      || Date.now());

    this.innerHTML = new Intl.DateTimeFormat("default", {
      year: this.data.year || undefined,
      month: this.data.month || undefined,
      day: this.data.day || undefined,
      hour: this.data.hour || undefined,
      minute: this.data.minute || undefined,
      second: this.data.second || undefined,
      timeZoneName: this.data['time-zone-name']
        || undefined,
    }).format(date);
  }

  connectedCallback() {
    this.render();
  }

}

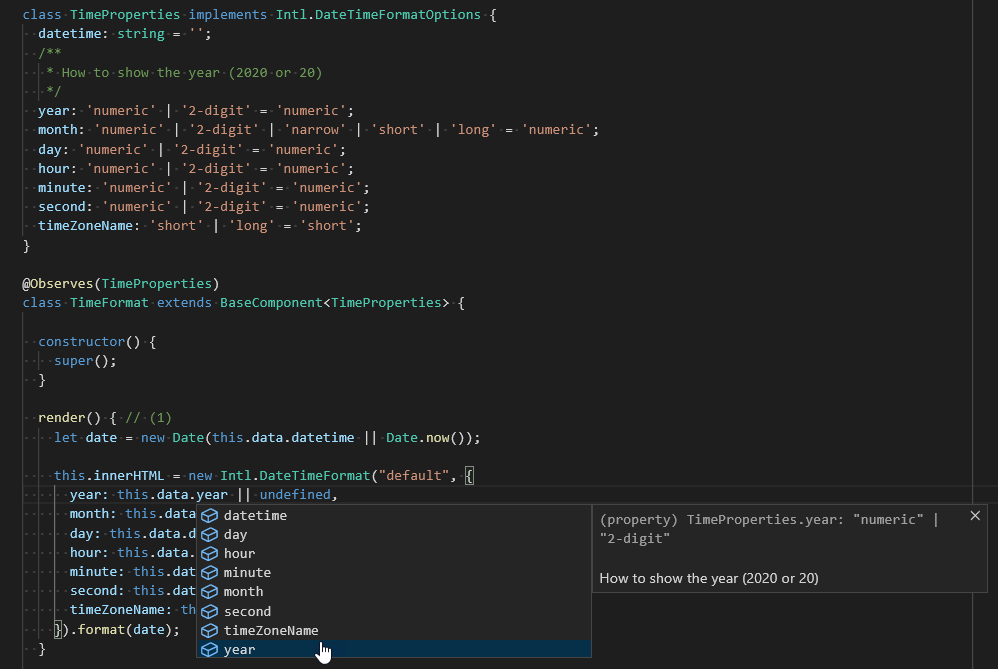

As you can see, the getAttribute calls are replaced by this.data accesses that IntelliSense understands through the generic of the base class BaseComponent. However, this alone will not work, because the generic is stripped out by the TypeScript transpiler and JavaScript doesn’t know about it. To get the type at runtime we need an instance of TimeProperties. The first decision to make is the type itself. It must be a class, because we need a runtime instance. An interface would not work here. So let’s get the class:

class TimeProperties {

datetime: string = '';

year: string = '';

month: string = '';

day: string = '';

hour: string = '';

minute: string = '';

second: string = '';

'time-zone-name': string = '';

}

Then, we need to configure the base class. This involves two steps. First, the assignment of the generic type to the property data. This is primarily for convenient access. Second, a method that retrieves the properties of the TimeProperties class and returns them as an array that observedAttributes can handle. The difficult part here, and that’s the point where the coding gets a bit weird, is that this method is static while on the component we work with an instance. Static members are initialized before instance members, and especially before the constructor gets called. Unfortunately TypeScript does not really have an elegant way to provide this, so we mix in some JavaScript here. The result is a base class as shown next:

abstract class BaseComponent<T extends object>
  extends HTMLElement {

  private static _keys: any;
  private _data: T;

  constructor() {
    super();
    this._data = {} as T;
  }

  public get data(): T {
    return this._data;
  }

  // in a static getter, "this" is the component class itself
  static get observedAttributes() {
    return (this as any)._keys || [];
  }

  attributeChangedCallback(name: string,
                           oldValue: any,
                           newValue: any) {
    if (oldValue !== newValue) {
      (this.data as any)[name] = newValue;
    }
    this.render();
  }

  public abstract render(): void;

}

The class is abstract to enforce the implementation of render. It’s generic as we planned it. It extends the usual HTMLElement class to make a real Web component. The crucial part is the call that gets the array of properties in the static getter observedAttributes: return (this as any)._keys. In a static member, this refers to the class itself, so the property is looked up on the component class, which is available before any instance exists. Reading this.constructor here would not work as intended, because the constructor of a class is the global Function object that all classes share. But how do we add the data to this property at runtime?

The trick is using a decorator. Decorators are a TypeScript feature that attaches additional metadata to an object. A class decorator runs once, when the class is defined and before any instance is created. Technically decorators are just function calls, so we can do anything within the decorator. Decorators will become part of ECMAScript sooner or later (currently they are experimental), but because the TypeScript compiler emits the necessary helper code, there is no risk in using them. The head of the class would now look like this:

@Observes(TimeProperties)
class TimeFormat extends BaseComponent<TimeProperties> {
  // content omitted for brevity
}

The decorator Observes is defined like this:

type Type<T> = new (...args: any[]) => T;

function Observes<T extends {}>(type: Type<T>) {
  // the original decorator; target is the decorated class
  function internal(target: Function): void {
    Object.defineProperty(target, '_keys', {
      get: function () {
        const defaults = new type();
        return Object.keys(defaults);
      },
      enumerable: false,
      configurable: false
    });
  }

  // return the decorator
  return internal;
}

The inner function is the actual decorator, and its signature defines where the decorator is allowed to appear. The given signature, with a single target parameter, is for a class. The class definition itself is delivered by the infrastructure through the target parameter. On that object (internally it’s a Function object) we create a dynamic property. Because target is the class and not an instance, a property defined on it is a static property of exactly this component class, so every decorated component gets its own list. Pure JavaScript magic, by the way; the strategy has nothing to do with Web components or TypeScript. To keep TypeScript from complaining, a helper type called Type<T> is created. This helper defines a constructor signature to allow the code to create an actual instance (new type()). And on this instance we can call Object.keys to get all the property names.

You have seen that the property class has initializers for the members (datetime: string = '';). That’s necessary, because otherwise the TypeScript transpiler would strip the declarations out to make a smaller bundle, assuming that JavaScript can handle this (it can). But here we really need the properties at runtime, and hence the initializers enforce their existence. The actual values don’t matter, as long as you don’t need any defaults.
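The runtime effect of the initializers can be checked with a few lines of plain JavaScript. The class below is a shortened stand-in for the TypeScript class from above, written without type annotations; it is a sketch to show the mechanism, not the code the transpiler emits.

```javascript
// Stand-in for the TypeScript class; every field has an initializer.
class TimeProperties {
  datetime = '';
  year = '';
  month = '';
  'time-zone-name' = '';
}

// Creating one instance is enough to discover the attribute names
const observed = Object.keys(new TimeProperties());
console.log(observed); // [ 'datetime', 'year', 'month', 'time-zone-name' ]
```

Only properties that really exist on the instance show up in Object.keys, which is why a declaration without a value may not be enough.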

Summary

That might sound complicated and seems to contradict the simplicity of easy-to-use Web components. But the effort to create a base class is a one-time task, and its usage is a lot easier afterwards.

Figure 2-6 shows the example with a typed base interface from the type library and additional comments on the property year. The editor is now able to help a lot while selecting the right property. That’s the main reason for the effort, because in the long term it will increase the code quality.

3 Shadow DOM

The Shadow DOM brings encapsulation. It allows a component to have its very own DOM tree, that can’t be accidentally accessed from the main document, may have local style rules, and more. When creating a new component, the component’s developer doesn’t need to know anything about the application this particular component is running in. That further simplifies the development.

3.1 Preparation

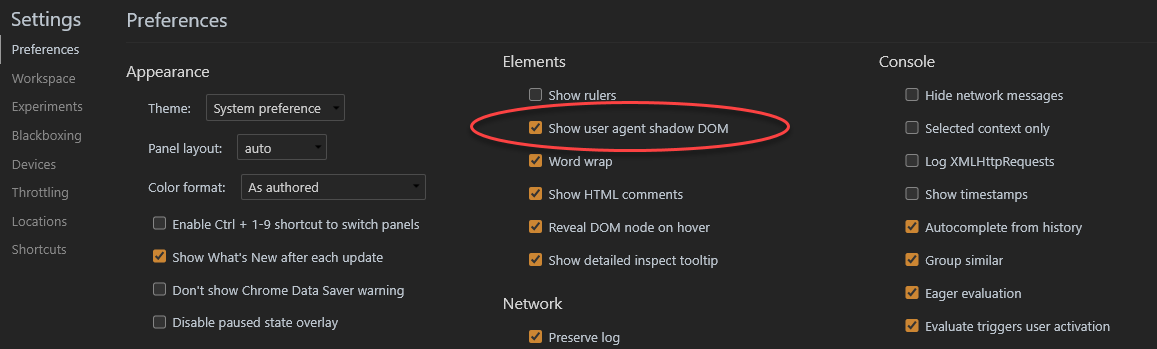

To reproduce the examples shown here, it’s recommended to have the Chrome browser available. To inspect the Shadow DOM, just activate the appropriate feature (see Figure 3-1) in the Dev Tools settings (F12) and you’re good to go.

3.2 Built-in Shadow DOM

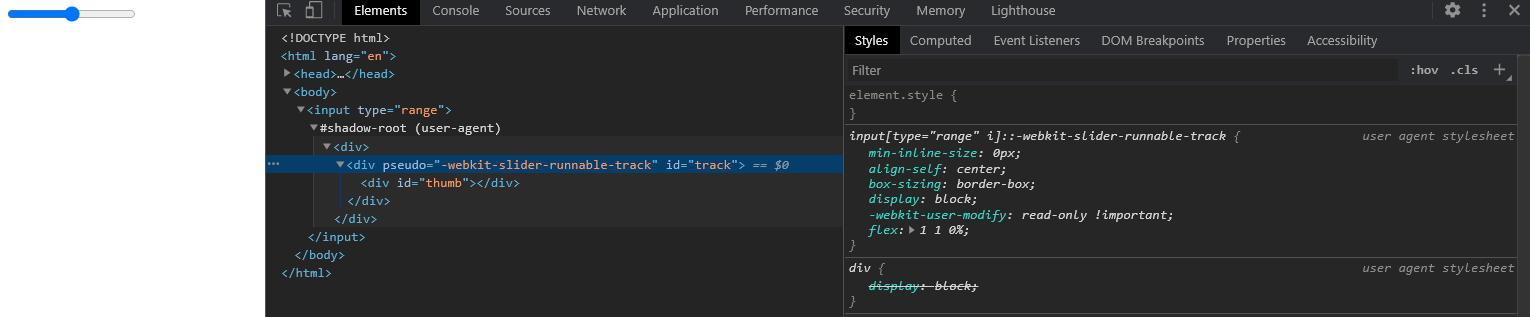

Complex browser controls are created and styled internally in different ways. Let’s have a look into <input type="range"> as an example. The browser uses DOM/CSS internally to draw them. That DOM structure is normally hidden from the developer, but we can see it in the developer tools with the Shadow DOM option enabled as mentioned before.

Then <input type="range"> looks as shown in Figure 3-2:

What you see under #shadow-root is called “shadow DOM”. It’s a piece of completely isolated code, made with the standard techniques like HTML and CSS. We can’t get built-in shadow DOM elements by regular JavaScript calls or selectors. These are not regular children, but a powerful encapsulation technique.

In the example above, we can see a useful name, -webkit-slider-runnable-track. It’s non-standard; in fact, it exists for historical reasons. We can use it as a pseudo-element to style subelements with CSS like this:

<style>
  /* make the slider track red */
  input::-webkit-slider-runnable-track {
    background: red;
  }
</style>

<input type="range">

Once again, this is a non-standard pseudo-element. It’s specific to browsers using the Chromium engine. But a similar structure can be expected in all other engines, and sometimes it helps to fulfill unusual requirements.

Here, it’s just a primer to show that there is more under the hood. The Shadow DOM of a Web component is a way to work with encapsulation in a well defined way.

3.3 Shadow Tree

A DOM element can have two types of DOM sub-trees:

- Light tree: a regular DOM sub-tree, made of HTML children. All sub-trees that we’ve seen so far were “light”.

- Shadow tree: a hidden DOM sub-tree, not reflected in HTML, hidden from users eyes.

If an element has both, then the browser renders only the shadow tree. But we can set up a kind of composition between shadow and light trees as well. Some more details are explained in the chapter Slots.

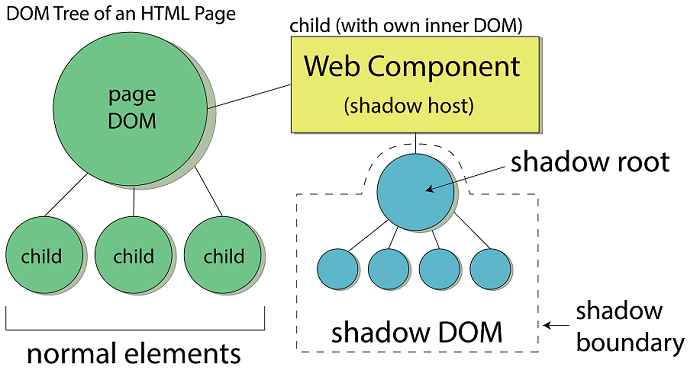

Terms

There are a few terms here you should know:

- Shadow Host: The host component

- Shadow Root: The root of the partial tree that forms the shadow tree

- Shadow DOM: An isolated DOM that contains the content of the tree

- Shadow Boundary: The border around the whole thing, includes root and tree

The relations between these parts are shown in Figure 3-3.

The access to the inner DOM is the same as for the regular DOM, that means you can use the same methods to manipulate the content. The methods might return different values and access different parts of the page (or nothing at all), though.

Using Shadow Trees

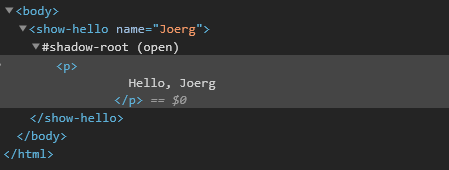

The shadow tree can be used in custom elements to hide component internals and apply component-local styles. For example, this <show-hello> element hides its internal DOM in a shadow tree:

<script>
  customElements.define('show-hello', class extends HTMLElement {
    connectedCallback() {
      const shadow = this.attachShadow({mode: 'open'});
      shadow.innerHTML = `<p>
        Hello, ${this.getAttribute('name')}
      </p>`;
    }
  });
</script>

<show-hello name="Joerg"></show-hello>

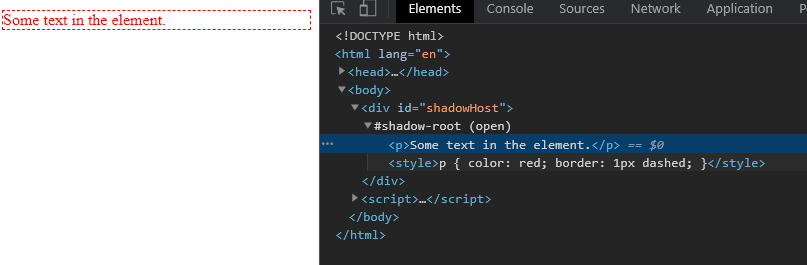

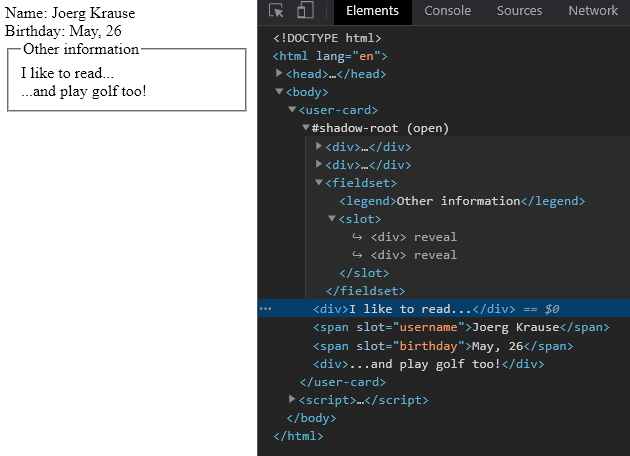

Figure 3-4 shows how the resulting DOM looks in the Chrome dev tools. All the content is placed under “#shadow-root (open)” tag:

The call to this.attachShadow({mode: …}) creates a shadow tree. The options are “open” and “closed”, I’ll explain this shortly.

Limitations

There are two limitations you have to consider:

- We can create only one shadow root per component.

- The component must be either a custom element or one of these elements:

  - <article>, represented through the generic API class HTMLElement
  - <aside>, represented through the generic API class HTMLElement
  - <blockquote>, represented through the API class HTMLQuoteElement
  - <body>, represented through the API class HTMLBodyElement
  - <div>, represented through the API class HTMLDivElement
  - <footer>, represented through the generic API class HTMLElement
  - <h1…h6>, represented through the API class HTMLHeadingElement
  - <header>, represented through the generic API class HTMLElement
  - <main>, represented through the generic API class HTMLElement
  - <nav>, represented through the generic API class HTMLElement
  - <p>, represented through the API class HTMLParagraphElement
  - <section>, represented through the generic API class HTMLElement
  - <span>, represented through the API class HTMLSpanElement

Other elements, like <img>, can’t host a shadow tree. The basic rule is, that the element must be able to host some content at all.

Modes

The mode option sets the encapsulation level. It must have one of two values:

- “open” – the shadow root is available as this.shadowRoot. Any code (JavaScript) is able to access the shadow tree of the element.

- “closed” – this.shadowRoot is always null, and there is no access through code (sort of total isolation).

In closed mode, we can only access the shadow DOM through the reference returned by attachShadow (which is usually kept hidden inside a class). Browser-native shadow trees, such as the one of <input type="range">, are closed. There’s no way to access them.

The shadow root, returned by attachShadow, is like an element. We can use innerHTML or DOM methods, such as append, to populate it. In fact, the @nyaf thin library code uses innerHTML to assign the rendered content to the web component. It is as simple as it sounds.

The element with a shadow root is called a “shadow tree host”, and is available through the host property of the shadow root. Going through elem.shadowRoot, as shown here, works only in “open” mode:

var hostElement = elem.shadowRoot.host;

If you use this in a base class, you get an easy and powerful way to copy data from the host to the shadow tree and back.
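
Copying data from the host into the shadow tree can be sketched as a small helper. The function name copyHostAttribute and its parameters are assumptions made for this illustration and are not part of @nyaf or any other library. The helper relies only on the host property, getAttribute, querySelector, and textContent.

```javascript
// Hypothetical helper (not a library function): copies one attribute
// of the host element into the text of an element inside the shadow tree.
function copyHostAttribute(shadowRoot, attributeName, selector) {
  // shadowRoot.host points back to the element that owns the shadow tree
  const value = shadowRoot.host.getAttribute(attributeName);
  const target = shadowRoot.querySelector(selector);
  if (target !== null && value !== null) {
    target.textContent = value;
  }
  return value;
}
```

Inside a component that uses the open mode, a call could look like copyHostAttribute(this.shadowRoot, 'name', 'p').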

3.4 Encapsulation

The Shadow DOM is strongly delimited from the main document. Shadow DOM elements are not visible to querySelector from the light DOM. In particular, Shadow DOM elements may have identifiers that conflict with those in the light DOM. They must be unique only within the shadow tree. Also, the shadow DOM has its own stylesheets. Style rules from the outer DOM don’t get applied, at least not directly. There are pseudo-classes that help to apply externally provided styles. You can find more about this in the chapter Styling.

An example shows directly how it works. First, a global style is created:

<style>

p { color: red; }

</style>

Imagine a document that contains that style and the following element with a shadow tree:

<div id="elem"></div>

<script>
  // at the top level of a script, query through document ("this" is the window here)
  const elem = document.querySelector('#elem');
  elem.attachShadow({mode: 'open'});
  elem.shadowRoot.innerHTML = `
    <style> p { font-weight: bold; } </style>
    <p>Hello, Joerg!</p>
  `;
  // a query on the document does not see the <p> inside the shadow tree
  console.log(document.querySelectorAll('p').length);
  // a query on the shadow root finds it
  console.log(elem.shadowRoot.querySelectorAll('p').length);
</script>

Three effects can be recognized here:

- The style from the document does not affect the shadow tree. The color is not red.

- The style from the inside works. The element is bold.

- To get elements in the shadow tree, we must query from inside the tree (elem.shadowRoot).

In the example, I use length to check for elements. If nothing is found, the value is 0, which JavaScript treats as false in a condition.

Shadow DOM without Components

As a side note, it’s worth mentioning that using Web components is not a condition for using the shadow DOM. You can create a shadow DOM on the fly without using Web components. Assume this regular HTML element:

<div id="shadowHost"></div>

Add some code to see how it creates the shadow DOM:

// attachShadow replaces the obsolete createShadowRoot call
const shadow = document.querySelector('#shadowHost').attachShadow({mode: 'open'});
shadow.innerHTML = `
  <p>Some text in the element.</p>
  <style>p { color: red; border: 1px dashed; }</style>
`;

You now have an existing element upgraded with a piece of isolated DOM and some content hidden from the rest of the page (result in Figure 3-5).

Closing the Shadow Root

In all previous examples and in most examples in this book, we use the open mode to attach a shadow root. If you really don’t need access to the root from outside and have nothing to apply programmatically, consider closing the root by using closed.

const shadowroot = element.attachShadow({ mode: 'closed' });

In that case, element.shadowRoot returns null, and code outside the component can do nothing with it. Only the reference returned by attachShadow still gives access to the tree.
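
One possible way to keep the reference of a closed root available to your own code, and to nobody else, is a module-private WeakMap. This is only a sketch: the names closedRoots, attachClosedRoot, and getClosedRoot are made up for this illustration. A private class field would serve the same purpose.

```javascript
// Module-private registry: maps a host element to its closed shadow root.
// A WeakMap does not keep the host alive once it is removed and unreferenced.
const closedRoots = new WeakMap();

// Hypothetical helper: attaches a closed root and remembers the reference.
function attachClosedRoot(host) {
  const root = host.attachShadow({ mode: 'closed' });
  closedRoots.set(host, root);
  return root;
}

// Only code that can see closedRoots is able to reach the tree again.
// Returns undefined for hosts that were never registered.
function getClosedRoot(host) {
  return closedRoots.get(host);
}
```

As long as closedRoots is not exported from the module, other scripts on the page still see null in element.shadowRoot.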

3.5 The Shadow Root API

The ShadowRoot interface of the Shadow DOM API is the root node of a DOM subtree that is rendered separately from a document’s main DOM tree. This is what you get with element.shadowRoot.

Properties

Some properties give access to the internal parts of a component.

- delegatesFocus: a read-only property that returns a boolean indicating whether delegatesFocus was set when the shadow root was attached.

- host: a read-only property that returns a reference to the DOM element the shadow root is attached to.

- innerHTML: sets or returns the HTML markup of the DOM tree inside the shadow root.

- mode: a read-only property that returns the mode of the shadow root, either open or closed. This defines whether or not the shadow root’s internal features are accessible from JavaScript.

The ShadowRoot interface includes the following properties defined on the DocumentOrShadowRoot mixin. Note that this is currently only implemented by Chrome. Other browsers make this available in the Document.

- activeElement: a read-only property that returns the Element within the shadow tree that has focus.

- styleSheets: a read-only property that returns a StyleSheetList of CSSStyleSheet objects for stylesheets explicitly linked into, or embedded in, a document.

Methods

Some methods extend this API.

- getSelection(): a method that returns a Selection object representing the range of text selected by the user, or the current position of the caret.

- elementFromPoint(): a method that returns the topmost element at the specified coordinates.

- elementsFromPoint(): a method that returns an array of all elements at the specified coordinates.

- caretPositionFromPoint(): a method that returns a CaretPosition object containing the DOM node that holds the caret, and the caret’s character offset within that node. The caret is the blinking point where the user starts typing.

Incompatibilities similar to those of the properties appear when accessing these methods. They depend on the browser version and vendor. Because the situation changes with each new version, it’s hard to give clear advice here. It is best to first define which browsers and versions you need to support. Then look up any support issues on MDN (Mozilla Developer Network) and seek a polyfill to solve compatibility issues.
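
Besides looking up the support tables, you can check at runtime which of the methods listed above a shadow root actually offers. The following is a minimal sketch of such a feature detection. The names SHADOW_ROOT_METHODS and missingShadowRootMethods are assumptions for this illustration.

```javascript
// Method names taken from the list above.
const SHADOW_ROOT_METHODS = [
  'getSelection',
  'elementFromPoint',
  'elementsFromPoint',
  'caretPositionFromPoint',
];

// Hypothetical helper: returns the names of all listed methods
// that are not callable on the given shadow root.
function missingShadowRootMethods(root) {
  return SHADOW_ROOT_METHODS.filter((name) => typeof root[name] !== 'function');
}
```

A component could call missingShadowRootMethods(this.shadowRoot) once and fall back to the corresponding document methods, or load a polyfill, for every name in the result.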

3.6 Summary

In this chapter I covered the shadow DOM, the isolated inner part of a Web component. Several API calls are available to deal with the shadow DOM and its root element. You also saw examples of what the shadow DOM looks like in a browser’s developer tools.

4 Events

The idea behind the shadow tree is to encapsulate internal implementation details of a component. That requires you to expose events explicitly if you still want to interact with inner parts of a component.

Let’s say a click event happens inside the shadow DOM of the <user-card> component. Scripts in the main document have no idea about the shadow DOM internals. So, to keep the details encapsulated, the browser has to retarget the event. Events that happen in the shadow DOM have the host element as the target when caught outside of the component.
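
A listener outside the component can still ask for the full route of an event through the standard composedPath() method. With an open shadow root the path lists the inner nodes as well; with a closed root it begins at the host. The following sketch wraps this in a helper. The name innermostTarget is an assumption made for this illustration.

```javascript
// Hypothetical helper: returns the node where the event started,
// as far as the browser is willing to reveal it to the current listener.
// It has to be called inside a handler, while the event is being dispatched.
function innermostTarget(event) {
  const path = event.composedPath();
  // Outside of a dispatch the path is empty, so fall back to the target.
  return path.length > 0 ? path[0] : event.target;
}
```

In a click handler registered on the document, event.target would be the <user-card> host, while innermostTarget(event) would return the clicked inner element, provided the component uses the open mode.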

4.1 Events in ECMAScript

Before you deal with custom events you should have a basic understanding of the event schema in JavaScript.

Event Handlers

On the occurrence of an event, the application executes a set of related tasks. The block of code that achieves this purpose is called the event handler. Every HTML element has a set of events associated with it. We can define how the events will be processed in JavaScript by using event handlers. Sometimes the handler appears as a callback, a function that’s provided as a parameter. You can read the callback as the technical solution to create an event handler.

Assign a Handler

To assign a handler you have two options. First, you can use an attribute on the HTML element. In that case the name starts with on. For instance, the attribute for the ‘click’ event is called onclick. Second, you can attach a handler to an element object, using the HTML 5 API. In that case the pure name is used; ‘click’ remains ‘click’. Because we code Web components and deal with them as objects, you will usually use the second method exclusively. A combination of both methods is also possible: you assign the handler function to an event property. These event properties have the same names as the attributes (with the on prefix). The following three snippets show the attribute, the API call, and the event property, in that order.

<button onclick="sendData()">Send Data</button>

const button = this.querySelector('button');

button.addEventListener('click', e => sendData(e));

const button = this.querySelector('button');

button.onclick = sendData;
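
The life cycle of a handler attached through the API can be sketched as follows. To keep the example independent of a page, a plain EventTarget stands in for the button; that substitution and the log array are assumptions made for this illustration. In a component you would use the element returned by querySelector instead.

```javascript
// A plain EventTarget stands in for a DOM element here (assumption for
// illustration); DOM elements offer the same three methods.
const button = new EventTarget();
const log = [];

// The event handler: a callback that receives the event object.
function sendData(event) {
  log.push(`handled ${event.type}`);
}

button.addEventListener('click', sendData);
button.dispatchEvent(new Event('click')); // the handler runs

// Removing requires the same function reference that was added.
button.removeEventListener('click', sendData);
button.dispatchEvent(new Event('click')); // no handler is left

console.log(log); // [ 'handled click' ]
```

Passing a named function instead of an inline arrow function is what makes the later removeEventListener call possible.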

Choose the Right Events

While the handling is not that difficult, choosing the right event is much harder. Of course, just using click sounds easy. But the sheer number of events is frightening.

HTML 5 Standard Events

The standard HTML 5 events are listed in the following table for your reference. The script indicates a JavaScript function to be executed against that event.

| Attribute[^evt] | Realm | Description |

|---|---|---|

| offline | document | Triggers when the document goes offline |

| abort | document | Triggers on an abort event |

| afterprint | document | Triggers after the document is printed |

| beforeonload | document | Triggers before the document load |

| beforeprint | document | Triggers before the document is printed |

| blur | input | Triggers when the element or window loses focus |

| canplay | media | Triggers when the media can start play, but might have to stop for buffering |

| canplaythrough | media | Triggers when the media can be played to the end, without stopping for buffering |

| change | input | Triggers when an element changes |

| click | common | Triggers on a mouse click |

| contextmenu | common | Triggers when a context menu is triggered |

| dblclick | common | Triggers on a mouse double-click |

| drag | dragdrop | Triggers when an element is dragged |

| dragend | dragdrop | Triggers at the end of a drag operation |

| dragenter | dragdrop | Triggers when an element has been dragged to a valid drop target |

| dragleave | dragdrop | Triggers when an element leaves a valid drop target |

| dragover | dragdrop | Triggers when an element is being dragged over a valid drop target |

| dragstart | dragdrop | Triggers at the start of a drag operation |

| drop | dragdrop | Triggers when the dragged element is being dropped |

| durationchange | media | Triggers when the length of the media is changed |

| emptied | media | Triggers when a media resource element suddenly becomes empty |

| ended | media | Triggers when the media has reached the end |

| error | document | Triggers when an error occurs |

| focus | input | Triggers when the element or window gets focus |

| formchange | input | Triggers when a form changes |

| forminput | input | Triggers when a form gets user input |

| hashchange | document | Triggers when the fragment identifier (hash) of the document URL has changed |

| input | input | Triggers when an element gets user input |

| invalid | input | Triggers when an element is invalid |

| keydown | input | Triggers when a key is pressed |

| keypress | input | Triggers when a key is pressed and released |

| keyup | input | Triggers when a key is released |

| load | document | Triggers when the document loads |

| loadeddata | media | Triggers when media data is loaded |

| loadedmetadata | media | Triggers when the duration and other media data of a media element is loaded |

| loadstart | media | Triggers when the browser starts to load the media data |

| message | document | Triggers when a message is received |

| mousedown | common | Triggers when a mouse button is pressed |