Table of Contents

- A Pull of the Lever: Prefaces

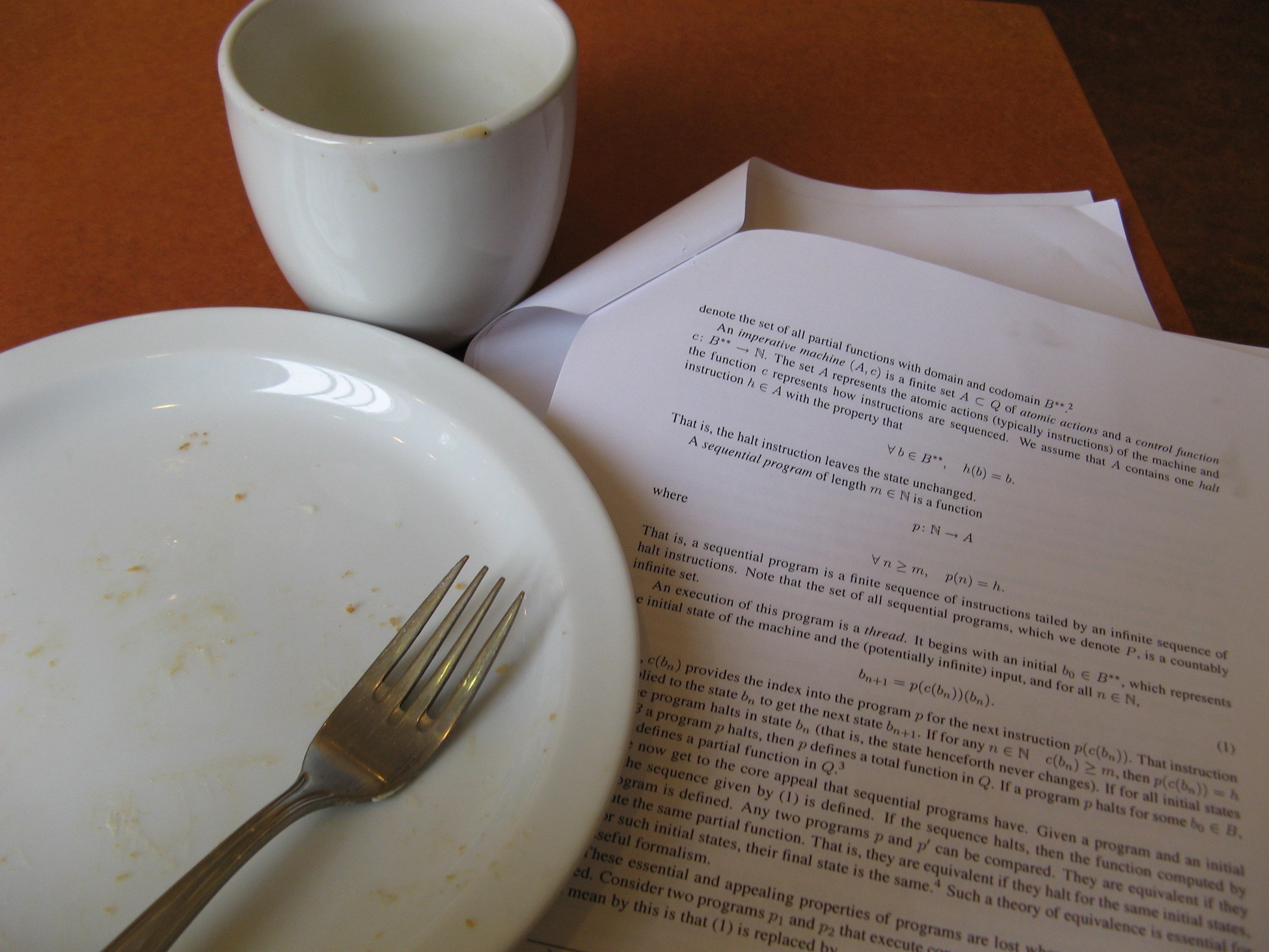

- Prelude: Values and Expressions over Coffee

- A Rich Aroma: Basic Numbers

- The first sip: Basic Functions

- Recipes with Basic Functions

- Picking the Bean: Choice and Truthiness

- Composing and Decomposing Data

- Recipes with Data

- A Warm Cup: Basic Strings and Quasi-Literals

- Stir the Allongé: Objects and State

- Recipes with Objects, Mutations, and State

- The Coffee Factory: “Object-Oriented Programming”

- Served by the Pot: Collections

- A Coffeehouse: Symbols

- Life on the Plantation: Metaobjects

- Decaffeinated: Impostors

- Finish the Cup: Constructors and Classes

- Recipes with Constructors and Classes

- Colourful Mugs: Symmetry, Colour, and Charm

- Con Panna: Composing Class Behaviour

- More Decorators

- More Decorator Recipes

- Closing Time at the Coffeeshop: Final Remarks

- The Golden Crema: Appendices and Afterwords

- Notes

A Pull of the Lever: Prefaces

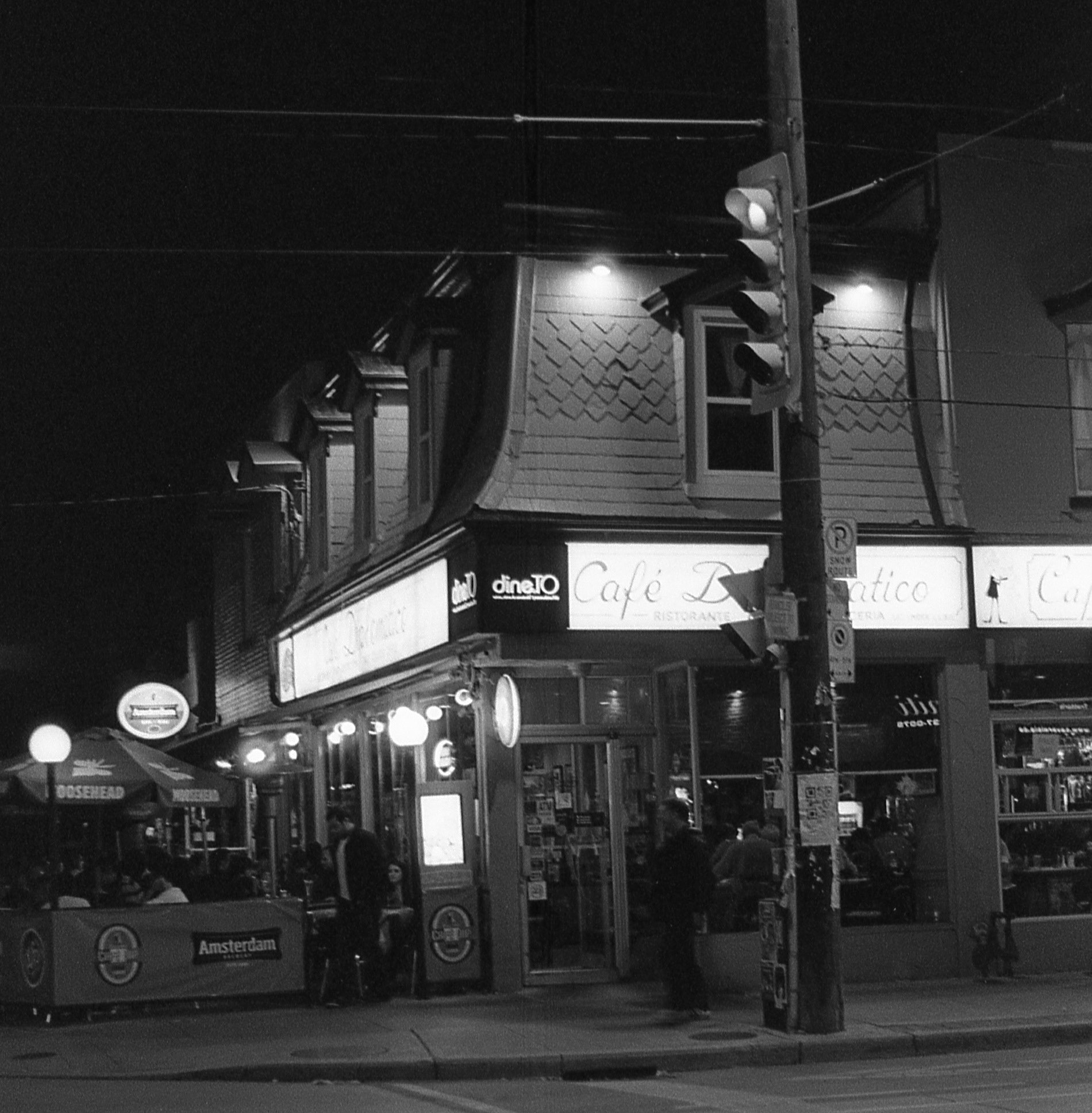

“Café Allongé, also called Espresso Lungo, is a drink midway between an Espresso and Americano in strength. There are two different ways to make it. The first, and the one I prefer, is to add a small amount of hot water to a double or quadruple Espresso Ristretto. Like adding a splash of water to whiskey, the small dilution releases more of the complex flavours in the mouth.

“The second way is to pull an extra long double shot of Espresso. This achieves approximately the same ratio of oils to water as the dilution method, but also releases a different mix of flavours due to the longer extraction. Some complain that the long pull is more bitter and detracts from the best character of the coffee; others feel it releases even more complexity.

“The important thing is that neither method of preparation should use so much water as to result in a sickly, pale ghost of Espresso. Moderation in all things.”

About JavaScript Allongé

JavaScript Allongé is, first and foremost, a book about programming with functions. It’s written in JavaScript because JavaScript hits the perfect sweet spot of being both widely used and having proper first-class functions with lexical scope. If those terms seem unfamiliar, don’t worry: JavaScript Allongé takes great delight in explaining what they mean and why they matter.

JavaScript Allongé begins at the beginning, with values and expressions, and builds from there to discuss types, identity, functions, closures, scopes, collections, iterators, and many more subjects up to working with classes and instances.

It also provides recipes for using functions to write software that is simpler, cleaner, and less complicated than alternative approaches that are object-centric or code-centric. JavaScript idioms like function combinators and decorators leverage JavaScript’s power to make code easier to read, modify, debug and refactor.

JavaScript Allongé teaches you how to handle complex code, and it also teaches you how to simplify code without dumbing it down. As a result, JavaScript Allongé is a rich read releasing many of JavaScript’s subtleties, much like the Café Allongé beloved by coffee enthusiasts everywhere.

why the “six” edition?

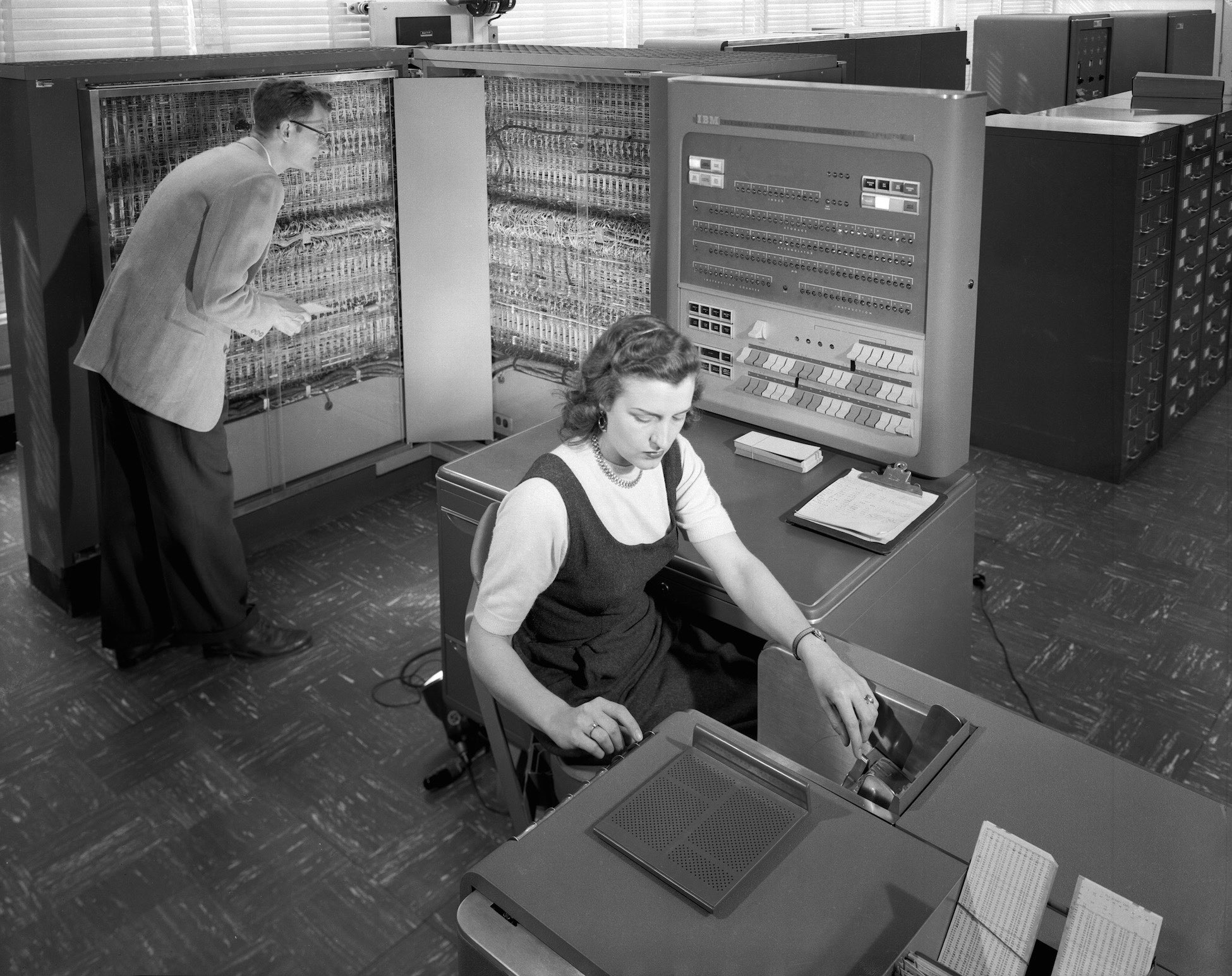

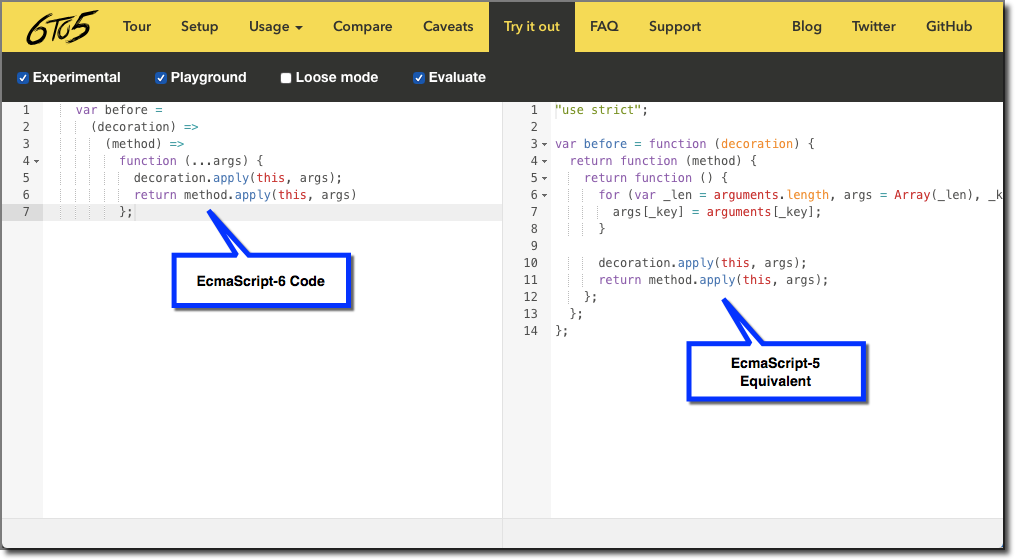

ECMAScript 2015 (formerly called ECMAScript 6 or “ES6”) is ushering in a very large number of improvements to the way programmers can write small, powerful components and combine them into larger, fully featured programs. Features like destructuring, block-structured variables, iterables, generators, and the class keyword are poised to make JavaScript programming more expressive.

Prior to ECMAScript 2015, JavaScript did not include many features that programmers have discovered are vital to writing great software. For example, JavaScript did not include block-structured variables. Over time, programmers discovered ways to roll their own versions of important features.

For example, block-structured languages allow us to write:

```
for (int i = 0; i < array.length; ++i) {
  // ...
}
```

And the variable i is scoped locally to the code within the braces. Prior to ECMAScript 2015, JavaScript did not support block-structuring, so programmers borrowed a trick from the Scheme programming language, and would write:

```javascript
var i;

for (i = 0; i < array.length; ++i) {
  (function (i) {
    // ...
  })(i)
}
```

This creates the same scoping with an Immediately Invoked Function Expression, or “IIFE.”
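To see why the extra function matters, here is a small sketch; the names `withVar`, `withIIFE`, and `withLet` are hypothetical, purely for illustration. Each loop collects three functions that report the loop variable. With a bare `var`, all three share one binding; the IIFE, and ECMAScript 2015’s `let`, each give every iteration its own `i`.

```javascript
// Hypothetical helper names, for illustration only.
const withVar = () => {
  var fns = [];
  for (var i = 0; i < 3; ++i) {
    fns.push(() => i); // every function closes over the same `i`
  }
  return fns.map((f) => f());
};

const withIIFE = () => {
  var fns = [];
  for (var i = 0; i < 3; ++i) {
    (function (i) {
      fns.push(() => i); // closes over the IIFE's own parameter `i`
    })(i);
  }
  return fns.map((f) => f());
};

const withLet = () => {
  const fns = [];
  for (let i = 0; i < 3; ++i) {
    fns.push(() => i); // `let` creates a fresh binding per iteration
  }
  return fns.map((f) => f());
};

console.log(withVar());  // [ 3, 3, 3 ]
console.log(withIIFE()); // [ 0, 1, 2 ]
console.log(withLet());  // [ 0, 1, 2 ]
```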

Likewise, many programming languages permit functions to have a variable number of arguments, and to collect the arguments into a single variable as an array. In Ruby, we can write:

```ruby
def foo (first, *rest)
  # ...
end
```

Prior to ECMAScript 2015, JavaScript did not support collecting a variable number of arguments into a parameter, so programmers would take advantage of an awkward work-around and write things like:

```javascript
function foo () {
  var first = arguments[0],
      rest = [].slice.call(arguments, 1);
  // ...
}
```

The first edition of JavaScript Allongé explained these and many other patterns for writing flexible and composable programs in JavaScript, but the intention wasn’t to explain how to work around JavaScript’s missing features: The intention was to explain why the style of programming exemplified by the missing features is important.

Working around the missing features was a necessary evil.

But now, JavaScript is gaining many important features, in part because the governing body behind JavaScript has observed that programmers are constantly working around the same set of limitations. With ECMAScript 2015, we can write:

```javascript
for (let i = 0; i < array.length; ++i) {
  // ...
}
```

And i is scoped to the for loop. We can also write:

```javascript
function foo (first, ...rest) {
  // ...
}
```

And presto, rest collects the rest of the arguments without a lot of malarkey involving slicing arguments. Not having to work around these kinds of missing features makes JavaScript Allongé a better book, because it can focus on why to do something and when to do it, instead of on how to make it work.
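For a side-by-side comparison, here is a minimal sketch; the names `fooOld` and `fooNew` are hypothetical, and each body simply returns what it collected so the two can be compared.

```javascript
// The pre-2015 work-around: slice the `arguments` object.
function fooOld () {
  var first = arguments[0],
      rest = [].slice.call(arguments, 1);
  return [first, rest];
}

// The ECMAScript 2015 version: a rest parameter collects the remainder.
function fooNew (first, ...rest) {
  return [first, rest];
}

console.log(fooOld(1, 2, 3)); // [ 1, [ 2, 3 ] ]
console.log(fooNew(1, 2, 3)); // [ 1, [ 2, 3 ] ]
console.log(fooNew(1));       // [ 1, [] ]
```

In both versions `rest` is a genuine array, even when it is empty, so array methods can be called on it directly.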

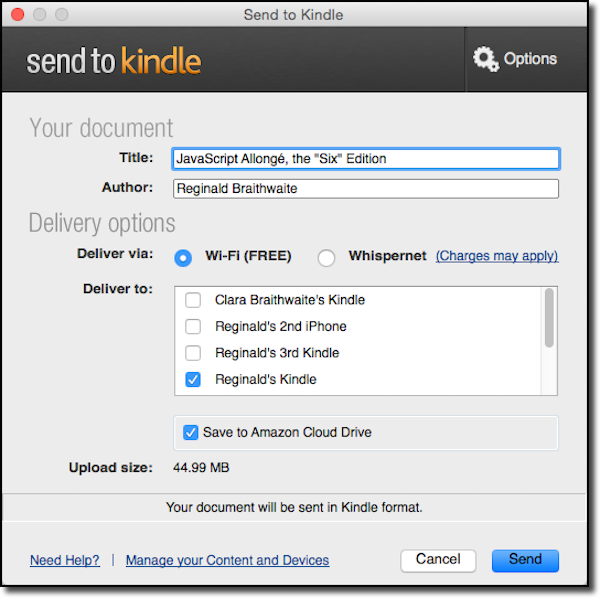

JavaScript Allongé, The “Six” Edition packs all the goodness of JavaScript Allongé into a new, updated package that is relevant for programmers working with (or planning to work with) the latest version of JavaScript.

that’s nice. is that the only reason?

Actually, no.

If it were just a matter of updating the syntax, the original version of JavaScript Allongé could have simply iterated, slowly replacing old syntax with new. It would have continued to say much the same things, only with new syntax.

But there’s more to it than that. The original JavaScript Allongé was not just written to teach JavaScript: It was written to describe certain ideas in programming, such as working with small, independent entities that compose together to make bigger programs. Hence the focus on things like writing decorators.

As noted above, JavaScript was chosen as the language for Allongé because it hit a sweet spot of having a large audience of programmers and having certain language features that happen to work well with this style of programming.

ECMAScript 2015 does more than simply update the language with some simpler syntax for a few things and help us avoid warts. It makes a number of interesting programming techniques easy to explain and easy to use. And these techniques dovetail nicely with Allongé’s focus on composing entities and working with functions.

Thus, the “six” edition introduces classes and mixins. It introduces the notion of implementing private properties with symbols. It introduces iterators and generators. But the common thread that runs through all these things is that since they are all simple objects and simple functions, we can use the same set of “programming with functions” techniques to build programs by composing small, flexible, and decoupled entities.

We just call some of those functions constructors, others decorators, others functional mixins, and yet others, policies.

Introducing so many new ideas did require a major rethink of the way the book was organized. And introducing these new ideas did add substantially to its bulk. But even so, in a way it is still explaining the exact same original idea that programs are built out of small, flexible functions composed together.

What JavaScript Allongé is. And isn’t.

JavaScript Allongé is a book about programming with functions. From functions flow many ideas, from decorators to methods to delegation to mixins, and onwards in so many fruitful directions.

The focus in this book is on the underlying ideas, what we might call the fundamentals, and on how they combine to form new ideas. The intention is to improve the way we think about programs. That’s a good thing.

But while JavaScript Allongé attempts to be provocative, it is not prescriptive. There is absolutely no suggestion that any of the techniques shown here are the only way to do something, the best way, or even an acceptable way to write programs that are intended to be used, read, and maintained by others.

Software development is a complex field. Choices in development are often driven by social considerations. People often say that software should be written for people to read. Doesn’t that depend upon the people in question? Should code written by a small team of specialists use the same techniques and patterns as code maintained by a continuously changing cast of inexperienced interns?

Choices in software development are also often driven by requirements specific to the type of software being developed. For example, business software written in-house has a very different set of requirements than a library written to be publicly distributed as open-source.

Choices in software development must also consider the question of consistency. If a particular codebase is written with lots of helper functions that place the subject first, like this:

```javascript
const mapWith = (iterable, fn) =>
  ({
    [Symbol.iterator]: function* () {
      for (let element of iterable) {
        yield fn(element);
      }
    }
  });
```

Then it can be jarring to add new helpers that place the verb first, like this:

```javascript
const filterWith = (fn, iterable) =>
  ({
    [Symbol.iterator]: function* () {
      for (let element of iterable) {
        if (!!fn(element)) yield element;
      }
    }
  });
```

There are reasons why the second form is more flexible, especially when used in combination with partial application, but does that outweigh the benefit of having an entire codebase do everything consistently the first way or the second way?
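To make the partial-application point concrete, here is a sketch that repeats the two helpers and fixes the first argument of `filterWith` with the standard `Function.prototype.bind`; `onlyEvens` is a hypothetical name. Fixing the verb this way is direct with `filterWith`, whereas `mapWith` would need a small wrapper because its function comes second.

```javascript
// Subject first, as in mapWith above.
const mapWith = (iterable, fn) =>
  ({
    [Symbol.iterator]: function* () {
      for (let element of iterable) {
        yield fn(element);
      }
    }
  });

// Verb first, as in filterWith above.
const filterWith = (fn, iterable) =>
  ({
    [Symbol.iterator]: function* () {
      for (let element of iterable) {
        if (!!fn(element)) yield element;
      }
    }
  });

// With the verb first, bind can fix the function and leave a helper
// that waits for its iterable.
const onlyEvens = filterWith.bind(null, (n) => n % 2 === 0);

console.log([...onlyEvens([1, 2, 3, 4, 5, 6])]);                 // [ 2, 4, 6 ]
console.log([...mapWith(onlyEvens([1, 2, 3, 4]), (n) => n * n)]); // [ 4, 16 ]
```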

Finally, choices in software development cannot ignore the tooling that is used to create and maintain software. The use of source-code control systems with integrated diffing rewards making certain types of focused changes. The use of linters makes checking for certain types of undesirable code very cheap. Debuggers encourage the use of functions with explicit or implicit names. Continuous integration encourages writing software in tandem with automated test suites, and factoring it so that those suites are easy to create.

JavaScript Allongé does not attempt to address the question of JavaScript best practices in the wider context of software development, because JavaScript Allongé isn’t a book about practicing, it’s a book about thinking.

how this book is organized

JavaScript Allongé introduces new aspects of programming with functions in each chapter, explaining exactly how JavaScript works. Code examples within each chapter are small and emphasize exposition rather than serving as patterns for everyday use.

Following some of the chapters are a series of recipes designed to show the application of the chapter’s ideas in practical form. While the content of each chapter builds naturally on what was discussed in the previous chapter, the recipes may draw upon any aspect of the JavaScript programming language.

Foreword to the “Six” edition

ECMAScript 6 (short name: ES6; official name: ECMAScript 2015) was ratified as a standard on June 17, 2015. Getting there took a while – in a way, the origins of ES6 date back to the year 2000: After ECMAScript 3 was finished, TC39 (the committee evolving JavaScript) started to work on ECMAScript 4. That version was planned to have numerous new features (interfaces, namespaces, packages, multimethods, etc.), which would have turned JavaScript into a completely new language. After internal conflict, a settlement was reached in July 2008 and a new plan was made – to abandon ECMAScript 4 and to replace it with two upgrades:

- A smaller upgrade would bring a few minor enhancements to ECMAScript 3. This upgrade became ECMAScript 5.

- A larger upgrade would substantially improve JavaScript, but without being as radical as ECMAScript 4. This upgrade became ECMAScript 6 (some features that were initially discussed will show up later, in upcoming ECMAScript versions).

ECMAScript 6 has three major groups of features:

- Better syntax for features that already exist (e.g. via libraries). For example: classes and modules.

- New functionality in the standard library. For example:
  - New methods for strings and arrays
  - Promises (for asynchronous programming)
  - Maps and sets

- Completely new features. For example: Generators, proxies and WeakMaps.

With ECMAScript 6, JavaScript has become much larger as a language. JavaScript Allongé, the “Six” Edition is both a comprehensive tour of its features and a rich collection of techniques for making better use of them. You will learn much about functional programming and object-oriented programming. And you’ll do so via ES6 code, handed to you in small, easily digestible pieces.

– Axel Rauschmayer, blogger, trainer and author of “Exploring ES6”

Forewords to the First Edition

michael fogus

As a life-long bibliophile and long-time follower of Reg’s online work, I was excited when he started writing books. However, I’m very conservative about books – let’s just say that if there was an aftershave scented to the essence of “Used Book Store” then I would be first in line to buy. So as you might imagine I was “skeptical” about the decision to release JavaScript Allongé as an ongoing ebook, with a pay-what-you-want model. However, Reg sent me a copy of his book and I was humbled. Not only was this a great book, but it was also a great way to write and distribute books. Having written books myself, I know the pain of soliciting and receiving feedback.

The act of writing is an iterative process with (very often) tight revision loops. However, the process of soliciting feedback, gathering responses, sending out copies, waiting for people to actually read it (if they ever do), receiving feedback and then ultimately making sense out of how to use it takes weeks and sometimes months. On more than one occasion I’ve found myself attempting to reify feedback with content that either no longer existed or was changed beyond recognition. However, with the Leanpub model the read-feedback-change process is extremely efficient, leaving in its wake a quality book that continues to get better as others likewise read and comment into infinitude.

In the case of JavaScript Allongé, you’ll find the Leanpub model a shining example of effectiveness. Reg has crafted (and continues to craft) not only an interesting book from the perspective of a connoisseur, but also an entertaining exploration into some of the most interesting aspects of his art. No matter how much of an expert you think you are, JavaScript Allongé has something to teach you… about coffee. I kid.

As a staunch advocate of functional programming, much of what Reg has written rings true to me. While not exclusively a book about functional programming, JavaScript Allongé will provide a solid foundation for functional techniques. However, you’ll not be beaten about the head and neck with dogma. Instead, every section is motivated by relevant dialog and fortified with compelling source examples. As an author of programming books I admire what Reg has managed to accomplish and I envy the fine reader who finds JavaScript Allongé via some darkened channel in the Internet sprawl and reads it for the first time.

Enjoy.

– Fogus, fogus.me

matthew knox

A different kind of language requires a different kind of book.

JavaScript holds surprising depths–its scoping rules are neither strictly lexical nor strictly dynamic, and it supports procedural, object-oriented (in several flavors!), and functional programming. Many books try to hide most of those capabilities away, giving you recipes for writing JavaScript in a way that approximates class-centric programming in other languages. Not JavaScript Allongé. It starts with the fundamentals of values, functions, and objects, and then guides you through JavaScript from the inside with exploratory bits of code that illustrate scoping, combinators, context, state, prototypes, and constructors.

Like JavaScript itself, this book gives you a gentle start before showing you its full depth, and like a Cafe Allongé, it’s over too soon. Enjoy!

–Matthew Knox, mattknox.com

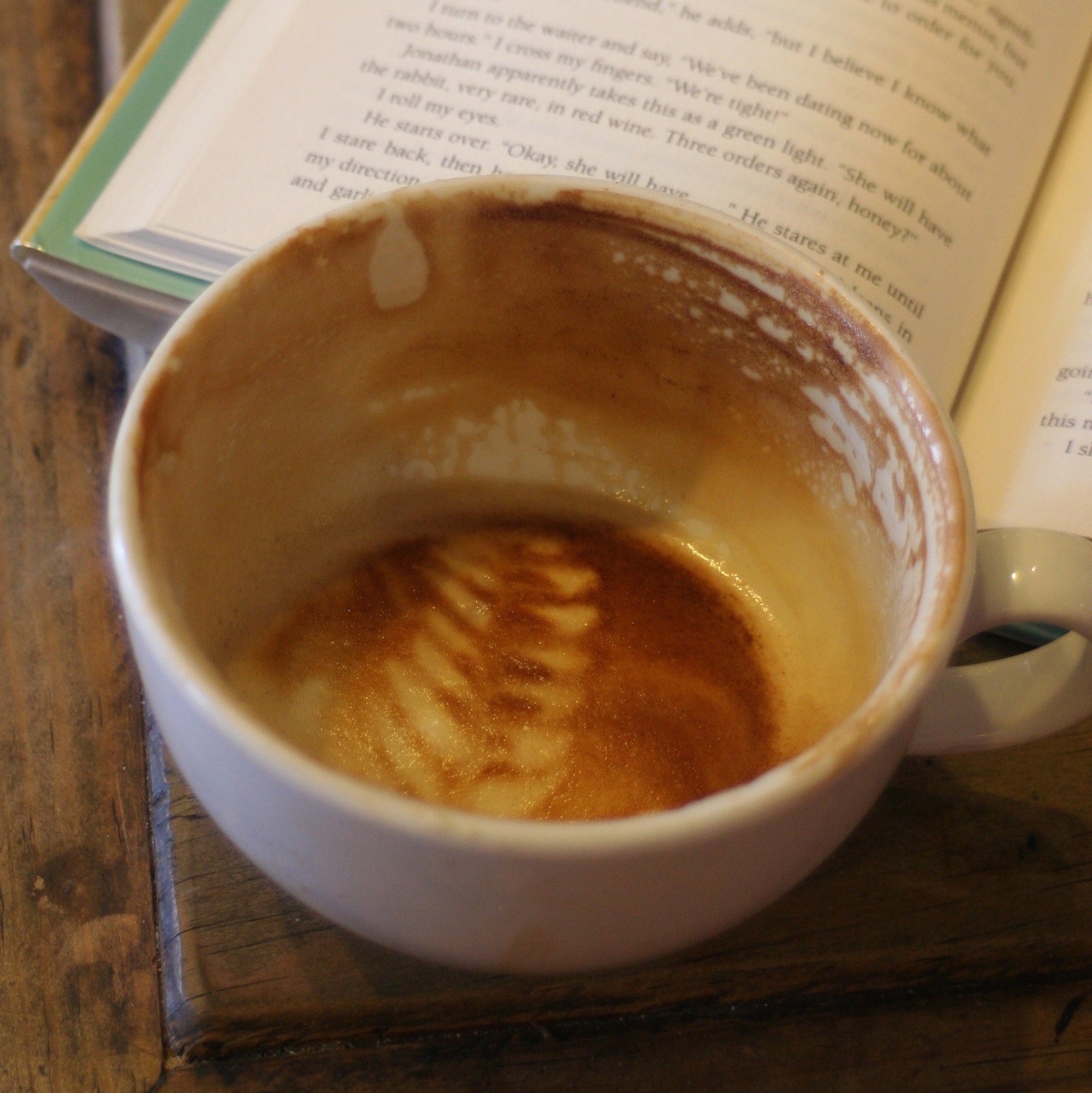

Prelude: Values and Expressions over Coffee

The following material is extremely basic; however, as with most stories, the best way to begin is at the very beginning.

Imagine you are visiting your favourite coffee shop. They will make for you just about any drink you desire, from a short, intense espresso ristretto through a dry cappuccino, up to those coffee-flavoured dessert concoctions featuring various concentrated syrups and milks. (You tolerate the existence of sugary drinks because they provide a sufficient profit margin to the establishment to finance your hanging out there all day using their WiFi and ordering a $3 drink every few hours.)

You express your order at one end of their counter, the folks behind the counter perform their magic, and deliver the coffee you value at the other end. This is exactly how the JavaScript environment works for the purpose of this book. We are going to dispense with web servers, browsers and other complexities and deal with this simple model: You give the computer an expression, and it returns a value, just as you express your wishes to a barista and receive a coffee in return.

values are expressions

All values are expressions. Say you hand the barista a café Cubano. Yup, you hand over a cup with some coffee infused through partially caramelized sugar. You say, “I want one of these.” The barista is no fool: she gives it straight back to you, and you get exactly what you want. Thus, a café Cubano is an expression (you can use it to place an order) and a value (you get it back from the barista).

Let’s try this with something the computer understands easily:

```javascript
42
```

Is this an expression? A value? Neither? Or both?

The answer is that this is both an expression and a value.1 The way you can tell that it’s both is very easy: When you type it into JavaScript, you get the same thing back, just like our café Cubano:

```javascript
42
//=> 42
```

All values are expressions. That’s easy! Are there any other kinds of expressions? Sure! Let’s go back to the coffee shop. Instead of handing over the finished coffee, we can hand over the ingredients. Let’s hand over some ground coffee plus some boiling water.

Now the barista gives us back an espresso. And if we hand over the espresso, we get the espresso right back. So, boiling water plus ground coffee is an expression, but it isn’t a value.2 Boiling water is a value. Ground coffee is a value. Espresso is a value. Boiling water plus ground coffee is an expression.

Let’s try this as well with something else the computer understands easily:

```javascript
"JavaScript" + " " + "Allonge"
//=> "JavaScript Allonge"
```

Now we see that “strings” are values, and you can make an expression out of strings and an operator +. Since strings are values, they are also expressions by themselves. But strings combined with operators are not values; they are expressions. Now we know what was missing with our “coffee grounds plus hot water” example. The coffee grounds were a value, the boiling hot water was a value, and the “plus” operator between them made the whole thing an expression that was not a value.

values and identity

In JavaScript, we test whether two values are identical with the === operator, and whether they are not identical with the !== operator:

```javascript
2 === 2
//=> true

'hello' !== 'goodbye'
//=> true
```

How does === work, exactly? Imagine that you’re shown a cup of coffee. And then you’re shown another cup of coffee. Are the two cups “identical?” In JavaScript, there are four possibilities:

First, sometimes, the cups are of different kinds. One is a demitasse, the other a mug. This corresponds to comparing two things in JavaScript that have different types. For example, the string "2" is not the same thing as the number 2. Strings and numbers are different types, so strings and numbers are never identical:

```javascript
2 === '2'
//=> false

true !== 'true'
//=> true
```

Second, sometimes, the cups are of the same type–perhaps two espresso cups–but they have different contents. One holds a single, the other a double. This corresponds to comparing two JavaScript values that have the same type but different “content.” For example, the number 5 is not the same thing as the number 2.

```javascript
true === false
//=> false

2 !== 5
//=> true

'two' === 'five'
//=> false
```

What if the cups are of the same type and the contents are the same? Well, JavaScript’s third and fourth possibilities cover that.

value types

Third, some types of cups have no distinguishing marks on them. If they are the same kind of cup, and they hold the same contents, we have no way to tell the difference between them. This is the case with the strings, numbers, and booleans we have seen so far.

```javascript
2 + 2 === 4
//=> true

(2 + 2 === 4) === (2 !== 5)
//=> true
```

Note well what is happening with these examples: Even when we obtain a string, number, or boolean as the result of evaluating an expression, it is identical to another value of the same type with the same “content.” Strings, numbers, and booleans are examples of what JavaScript calls “value” or “primitive” types. We’ll use both terms interchangeably.
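A quick sketch that builds value-type results in different ways and compares them with `===`; the name `joined` is just for illustration.

```javascript
// Value types: results computed in different ways are identical
// whenever their type and content match.
const joined = "Java" + "Script";

console.log(joined === "JavaScript"); // true
console.log(6 / 2 === 3);             // true
console.log((1 < 2) === !false);      // true
```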

We haven’t encountered the fourth possibility yet. Stretching the metaphor somewhat, some types of cups have a serial number on the bottom. So even if you have two cups of the same type, and their contents are the same, you can still distinguish between them.

reference types

So what kinds of values might be the same type and have the same contents, but not be considered identical to JavaScript? Let’s meet a data structure that is very common in contemporary programming languages, the Array (other languages sometimes call it a List or a Vector).

An array looks like this: [1, 2, 3]. This is an expression, and you can combine [] with other expressions. Go wild with things like:

```javascript
[2-1, 2, 2+1]

[1, 1+1, 1+1+1]
```

Notice that you are always generating arrays with the same contents. But are they identical the same way that every value of 42 is identical to every other value of 42? Try these for yourself:

```javascript
[2-1, 2, 2+1] === [1,2,3]

[1,2,3] === [1, 2, 3]

[1, 2, 3] === [1, 2, 3]
```

How about that! When you type [1, 2, 3] or any of its variations, you are typing an expression that generates its own unique array that is not identical to any other array, even if that other array also looks like [1, 2, 3]. It’s as if JavaScript is generating new cups of coffee with serial numbers on the bottom.

They look the same, but if you examine them with ===, you see that they are different. Every time you evaluate an expression (including typing something in) to create an array, you’re creating a new, distinct value even if it appears to be the same as some other array value. As we’ll see, this is true of many other kinds of values, including functions, the main subject of this book.
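The other side of the coin is that a single array is always identical to itself. A small sketch, using `const` bindings (covered later in the book) with the hypothetical names `a` and `b`: evaluating the array expression once and referring to the result under two names gives one array, not two.

```javascript
const a = [1, 2, 3];
const b = a; // b refers to the very same array as a

console.log(a === b);         // true: one array, two names
console.log(a === [1, 2, 3]); // false: a new array with the same contents
```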

A Rich Aroma: Basic Numbers

In computer science, a literal is a notation for representing a fixed value in source code. Almost all programming languages have notations for atomic values such as integers, floating-point numbers, and strings, and usually for booleans and characters; some also have notations for elements of enumerated types and compound values such as arrays, records, and objects. An anonymous function is a literal for the function type.—Wikipedia

JavaScript, like most languages, has a collection of literals. We saw that an expression consisting solely of digits, like 42, is a literal. It represents the number forty-two, which is 42 base 10. Not all numbers are base ten. If we start a literal with a zero, it is an octal literal. So the literal 042 is 42 base 8, which is actually 34 base 10. (This legacy octal form is a syntax error in strict mode code.)
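ECMAScript 2015 also provides explicit prefixes for octal, hexadecimal, and binary integer literals. A brief sketch showing several spellings of the same number:

```javascript
// Prefixed literals for non-decimal integers.
console.log(0o42);     // 34 (octal)
console.log(0x22);     // 34 (hexadecimal)
console.log(0b100010); // 34 (binary)

// Parsing the string "42" as base 8 gives the same number.
console.log(parseInt("42", 8)); // 34
```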

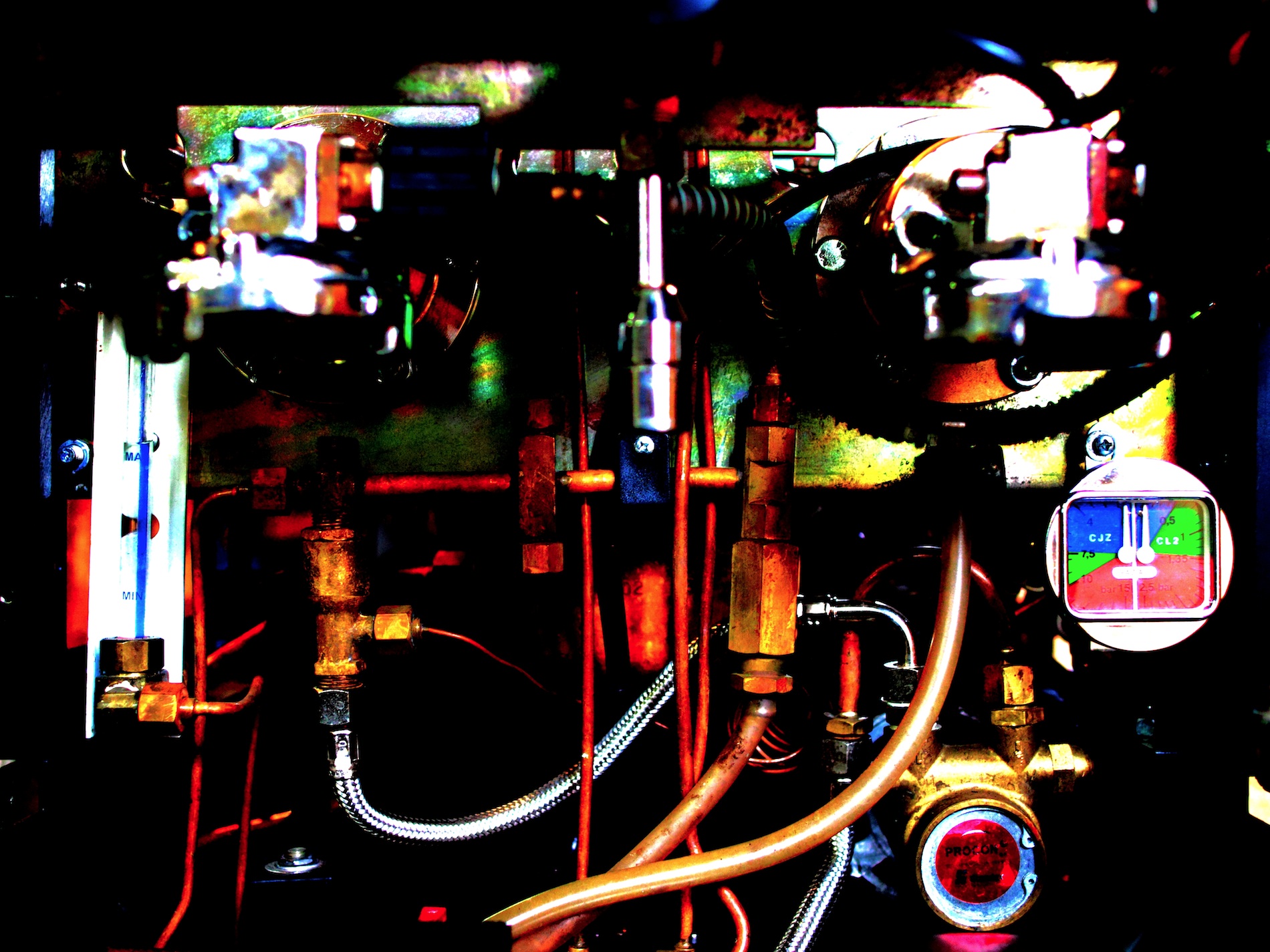

Internally, both 042 and 34 have the same representation, as double-precision floating point numbers. A computer’s internal representation for numbers is important to understand. The machine’s representation of a number almost never lines up perfectly with our understanding of how a number behaves, and thus there will be places where the computer’s behaviour surprises us if we don’t know a little about what it’s doing “under the hood.”

For example, the largest integer JavaScript can safely3 handle is 9007199254740991, or $2^{53} - 1$. Like most programming languages, JavaScript does not allow us to use commas to separate groups of digits.
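The standard library exposes this limit directly. A short sketch showing the constant and what goes wrong just past it:

```javascript
console.log(Number.MAX_SAFE_INTEGER);                 // 9007199254740991
console.log(Number.MAX_SAFE_INTEGER === 2 ** 53 - 1); // true

// Past the safe range, distinct integers can collapse into one double.
console.log(2 ** 53 === 2 ** 53 + 1);                 // true
console.log(Number.isSafeInteger(2 ** 53));           // false
```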

floating

Most programmers never encounter the limit on the magnitude of an integer. But we mentioned that numbers are represented internally as floating point, meaning that they need not be just integers. We can, for example, write 1.5 or 33.33, and JavaScript represents these literals as floating point numbers.

It’s tempting to think we now have everything we need to do things like handle amounts of money, but as the late John Belushi would say, “Nooooooooooooooooooooo.” A computer’s internal representation for a floating point number is binary, while our literal number was in base ten. This makes no meaningful difference for integers, but it does for fractions, because some fractions base 10 do not have exact representations base 2.

One of the most oft-repeated examples is this:

```javascript
1.0
//=> 1

1.0 + 1.0
//=> 2

1.0 + 1.0 + 1.0
//=> 3
```

However:

```javascript
0.1
//=> 0.1

0.1 + 0.1
//=> 0.2

0.1 + 0.1 + 0.1
//=> 0.30000000000000004
```

This kind of “inexactitude” can be ignored when performing calculations that have an acceptable deviation. For example, when centering some text on a page, as long as the difference between what you might calculate longhand and JavaScript’s calculation is less than a pixel, there is no observable error.
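One common way to express “an acceptable deviation” is to compare with a tolerance instead of `===`. A minimal sketch, assuming a hypothetical helper `nearlyEqual`; the default tolerance `Number.EPSILON` only suits values near 1, and a real tolerance depends on the problem (one pixel, in the centering example).

```javascript
// Hypothetical helper: treat two numbers as equal when they differ by
// less than the given tolerance (Number.EPSILON by default).
const nearlyEqual = (x, y, tolerance = Number.EPSILON) =>
  Math.abs(x - y) < tolerance;

console.log(0.1 + 0.1 + 0.1 === 0.3);           // false
console.log(nearlyEqual(0.1 + 0.1 + 0.1, 0.3)); // true
console.log(nearlyEqual(100.4, 100, 1));        // true: within one pixel
```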

But as a rule, if you need to work with real numbers, you should have more than a nodding acquaintance with the IEEE Standard for Floating-Point Arithmetic. Professional programmers almost never use floating point numbers to represent monetary amounts. For example, “$43.21” will nearly always be represented as two numbers: 43 for dollars and 21 for cents, not as the single floating point number 43.21. In this book, we need not think about such details, but outside of this book, we must.
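A related approach, sketched here under the assumption that amounts are stored as a whole number of cents, keeps every intermediate result an exact integer. The helpers `toCents` and `formatCents` are hypothetical and handle only non-negative amounts.

```javascript
// Sketch: keep money as an integer number of cents.
const toCents = (dollars, cents) => dollars * 100 + cents;

// Formats a non-negative number of cents as dollars and cents.
const formatCents = (total) =>
  `$${Math.floor(total / 100)}.${String(total % 100).padStart(2, "0")}`;

const price = toCents(43, 21);       // 4321
const total = price + price + price; // 12963, exact integer arithmetic

console.log(formatCents(total)); // $129.63
console.log(formatCents(5));     // $0.05
```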

operations on numbers

As we’ve seen, JavaScript has many common arithmetic operators. We can create expressions that look very much like mathematical expressions, for example we can write 1 + 1 or 2 * 3 or 42 - 34 or even 6 / 2. These can be combined to make more complex expressions, like 2 * 5 + 1.

In JavaScript, operators have an order of precedence designed to mimic the way humans typically parse written arithmetic. So:

```javascript
2 * 5 + 1
//=> 11

1 + 5 * 2
//=> 11
```

JavaScript treats the expressions as if we had written (2 * 5) + 1 and 1 + (5 * 2), because the * operator has a higher precedence than the + operator. JavaScript has many more operators. In a sense, they behave like little functions. If we write 1 + 2, this is conceptually similar to writing plus(1, 2) (assuming we have a function that adds two numbers bound to the name plus, of course).
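A small sketch of that analogy, with hypothetical functions `plus` and `times` standing in for the operators. Nesting the calls makes the precedence explicit.

```javascript
// Hypothetical functions standing in for the + and * operators.
const plus = (a, b) => a + b;
const times = (a, b) => a * b;

// (2 * 5) + 1 and 1 + (5 * 2), with the grouping spelled out:
console.log(plus(times(2, 5), 1)); // 11
console.log(plus(1, times(5, 2))); // 11
```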

In addition to the common +, -, *, and /, JavaScript also supports the remainder operator, % (often called modulus), and unary negation, -:

```javascript
-(457 % 3)
//=> -1
```
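For negative numbers the distinction between remainder and modulus matters: the result of `%` takes the sign of its left-hand operand. A sketch, with `mod` as a hypothetical helper for a modulus that follows the sign of the divisor:

```javascript
console.log(7 % 3);  // 1
console.log(-7 % 3); // -1: the sign follows the left-hand operand

// Hypothetical helper: a modulus whose result has the sign of m.
const mod = (n, m) => ((n % m) + m) % m;

console.log(mod(-7, 3)); // 2
console.log(mod(7, 3));  // 1
```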

There are lots and lots more operators that can be used with numbers, including bitwise operators like | and & that allow you to operate directly on a number’s binary representation, and a number of other operators that perform assignment or logical comparison that we will look at later.
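A brief sketch of two bitwise operators, with the binary digits written out in comments. Note that bitwise operators first convert their operands to 32-bit integers, which discards any fractional part.

```javascript
console.log((5).toString(2)); // 101
console.log(5 | 2);           // 7  (101 | 010 = 111)
console.log(6 & 3);           // 2  (110 & 011 = 010)

// Operands are converted to 32-bit integers first.
console.log(5.7 | 0);         // 5
```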

The first sip: Basic Functions

As Little As Possible About Functions, But No Less

In JavaScript, functions are values, but they are also much more than simple numbers, strings, or even complex data structures like trees or maps. Functions represent computations to be performed. Like numbers, strings, and arrays, they have a representation. Let’s start with the second simplest possible function.4 In JavaScript, it looks like this:

```javascript
() => 0
```

This is a function that is applied to no values and returns 0. Let’s verify that our function is a value like all others:

```javascript
(() => 0)
//=> [Function]
```

What!? Why didn’t it type back () => 0 for us? This seems to break our rule that if an expression is also a value, JavaScript will give the same value back to us. What’s going on? The simplest and easiest answer is that although the JavaScript interpreter does indeed return that value, displaying it on the screen is a slightly different matter. [Function] is a choice made by the people who wrote Node.js, the JavaScript environment that hosts the JavaScript REPL (more recent versions of Node.js print [Function (anonymous)]). If you try the same thing in a browser, you may see something else.
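The function value itself is intact; only its display differs. A small sketch, binding the function to a hypothetical name `fn` with `const` (covered later) and asking for its type and its source text:

```javascript
const fn = () => 0;

console.log(typeof fn);  // function
console.log(String(fn)); // () => 0
```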

functions and identities

You recall that we have two types of values with respect to identity: Value types and reference types. Value types share the same identity if they have the same contents. Reference types do not.

Which kind are functions? Let’s try them out and see. For reasons of appeasing the JavaScript parser, we’ll enclose our functions in parentheses:

```javascript
(() => 0) === (() => 0)
//=> false
```

Like arrays, every time you evaluate an expression to produce a function, you get a new function that is not identical to any other function, even if you use the same expression to generate it. “Function” is a reference type.
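As with arrays, a single function is identical to itself no matter how many names refer to it. A sketch using the hypothetical names `zero` and `alsoZero`:

```javascript
const zero = () => 0;
const alsoZero = zero; // a second name for the same function

console.log(zero === alsoZero);  // true: one function, two names
console.log(zero === (() => 0)); // false: a new function from the same expression
```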

applying functions

Let’s put functions to work. The way we use functions is to apply them to zero or more values called arguments. Just as 2 + 2 produces a value (in this case 4), applying a function to zero or more arguments produces a value as well.

Here’s how we apply a function to some values in JavaScript: Let’s say that fn_expr is an expression that when evaluated, produces a function. Let’s call the arguments args. Here’s how to apply a function to some arguments:

```javascript
fn_expr(args)
```

Right now, we only know about one such expression: () => 0, so let’s use it. We’ll put it in parentheses5 to keep the parser happy, like we did above: (() => 0). Since we aren’t giving it any arguments, we’ll simply write () after the expression. So we write:

```javascript
(() => 0)()
//=> 0
```

functions that return values and evaluate expressions

We’ve seen () => 0. We know that (() => 0)() returns 0, and this is unsurprising. Likewise, the following all ought to be obvious:

```javascript
(() => 1)()
//=> 1

(() => "Hello, JavaScript")()
//=> "Hello, JavaScript"

(() => Infinity)()
//=> Infinity
```

Well, the last one’s a doozy, but still, the general idea is this: We can make a function that returns a value by putting the value to the right of the arrow.

In the prelude, we looked at expressions. Values like 0 are expressions, as are things like 40 + 2. Can we put an expression to the right of the arrow?

```javascript
(() => 1 + 1)()
//=> 2

(() => "Hello, " + "JavaScript")()
//=> "Hello, JavaScript"

(() => Infinity * Infinity)()
//=> Infinity
```

Yes we can. We can put any expression to the right of the arrow. For example, (() => 0)() is an expression. Can we put it to the right of an arrow, like this: () => (() => 0)()?

Let’s try it:

```javascript
(() => (() => 0)())()
//=> 0
```

Yes we can! Functions can return the value of evaluating another function.
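The trailing `()` inside makes all the difference. A sketch contrasting a function that returns the result of applying another function with one that returns the inner function itself:

```javascript
// Returns the result of calling the inner function: a number.
console.log((() => (() => 0)())());      // 0

// Returns the inner function itself, without calling it.
console.log(typeof (() => (() => 0))()); // function
console.log((() => (() => 0))()());      // 0
```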

When dealing with expressions that have a lot of the same characters (like parentheses), you may find it helpful to format the code to make things stand out. So we can also write:

(() =>
  (() => 0
  )()
)()

//=> 0

It evaluates to the same thing, 0.

commas

The comma operator in JavaScript is interesting. It takes two operands, evaluates them both from left to right, and itself evaluates to the value of the right-hand operand. In other words:

(1, 2)

//=> 2

(1 + 1, 2 + 2)

//=> 4

We can use commas with functions to create functions that evaluate multiple expressions:

(() => (1 + 1, 2 + 2))()

//=> 4

This is useful when trying to do things that might involve side-effects, but we’ll get to that later. In most cases, JavaScript does not care whether things are separated by spaces, tabs, or line breaks. So we can also write:
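To see that both operands really are evaluated, left to right, here is a small sketch that records side effects as it goes. The names order, log, and result are hypothetical and exist only for this illustration. The sketch also uses const, which this chapter introduces later.

```javascript
// A hypothetical log of which operands were evaluated, in order.
const order = [];

// Hypothetical helper: records a label, then returns the value unchanged.
// Its own body uses the comma operator: push first, then produce value.
const log = (label, value) => (order.push(label), value);

// Both operands run, left to right; the whole expression is the right-hand value.
const result = (log("left", 1 + 1), log("right", 2 + 2));

console.log(result); // 4
console.log(order);  // [ 'left', 'right' ]
```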

() =>
  (1 + 1, 2 + 2)

Or even:

() => (
    1 + 1,
    2 + 2
  )

the simplest possible block

There’s another thing we can put to the right of an arrow, a block. A block has zero or more statements, separated by semicolons.6

So, this is a valid function:

() => {}

It returns the result of evaluating a block that has no statements. What would that be? Let’s try it:

(() => {})()

//=> undefined

What is this undefined?

undefined

In JavaScript, the absence of a value is written undefined, and it means there is no value. It will crop up again. undefined is its own type of value, and it acts like a value type:

undefined

//=> undefined

Just as it does with numbers, booleans, and strings, JavaScript can print out the value undefined.

undefined === undefined

//=> true

(() => {})() === (() => {})()

//=> true

(() => {})() === undefined

//=> true

No matter how you evaluate undefined, you get an identical value back. undefined is a value that means “I don’t have a value.” But it’s still a value :-)

void

We’ve seen that JavaScript represents an undefined value by typing undefined, and we’ve generated undefined values in two ways:

- By evaluating a function that doesn’t return a value: (() => {})(), and;

- By writing undefined ourselves.

There’s a third way, with JavaScript’s void operator. Behold:

void 0

//=> undefined

void 1

//=> undefined

void (2 + 2)

//=> undefined

void is an operator that takes any value and evaluates to undefined, always. So, when we deliberately want an undefined value, should we use the first, second, or third form?7 The answer is, use void. By convention, use void 0.

The first form works, but it’s cumbersome. The second form works most of the time, but it is possible to break it by shadowing or reassigning the name undefined, something we’ll discuss in Reassignment and Mutation. The third form is guaranteed to always work, so that’s what we will use.8
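Current engines make the global undefined read-only, but the name can still be broken by shadowing. Any function can bind its own parameter named undefined. The following is a minimal sketch of that case; the names shadowed and viaVoid are illustrative.

```javascript
// A parameter named `undefined` shadows the global undefined inside this function.
const shadowed = ((undefined) => undefined)(42);

// `void` is an operator, not a name, so no binding can change what it produces.
const viaVoid = ((undefined) => void 0)(42);

console.log(shadowed);                 // 42
console.log(viaVoid);                  // undefined
console.log(viaVoid === (() => {})()); // true
```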

back on the block

Back to our function. We evaluated this:

(() => {})()

//=> undefined

We said that the function returns the result of evaluating a block, and we said that a block is a (possibly empty) list of JavaScript statements separated by semicolons.9

Something like: { statement1; statement2; statement3; ... ; statementn }

We haven’t discussed these statements. What’s a statement?

There are many kinds of JavaScript statements, but the first kind is one we’ve already met. An expression is a JavaScript statement. Although they aren’t very practical, these are valid JavaScript functions, and they return undefined when applied:

() => { 2 + 2 }

() => { 1 + 1; 2 + 2 }

As we saw with commas above, we can rearrange these functions onto multiple lines when we feel it’s more readable that way:

() => {
  1 + 1;
  2 + 2
}

But no matter how we arrange them, a block with one or more expressions still evaluates to undefined:

(() => { 2 + 2 })()

//=> undefined

(() => { 1 + 1; 2 + 2 })()

//=> undefined

(() => {
  1 + 1;
  2 + 2
})()

//=> undefined

As you can see, a block with one expression does not behave like an expression, and a block with more than one expression does not behave like an expression constructed with the comma operator:

(() => 2 + 2)()

//=> 4

(() => { 2 + 2 })()

//=> undefined

(() => (1 + 1, 2 + 2))()

//=> 4

(() => { 1 + 1; 2 + 2 })()

//=> undefined

So how do we get a function that evaluates a block to return a value when applied? With the return keyword and any expression:

(() => { return 0 })()

//=> 0

(() => { return 1 })()

//=> 1

(() => { return 'Hello ' + 'World' })()

//=> 'Hello World'

The return keyword creates a return statement that immediately terminates the function application and returns the result of evaluating its expression. For example:

(() => {
  1 + 1;
  return 2 + 2
})()

//=> 4

And also:

(() => {
  return 1 + 1;
  2 + 2
})()

//=> 2
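We can check that return immediately ends the function application by recording which statements actually run. This sketch uses a hypothetical array, ran, purely to observe execution. The statement after return is unreachable, and some linters will flag it for exactly that reason.

```javascript
// A hypothetical record of which statements were executed.
const ran = [];

const value = (() => {
  ran.push("before return");
  return 1 + 1;
  ran.push("after return"); // unreachable: return already ended the application
})();

console.log(value); // 2
console.log(ran);   // [ 'before return' ]
```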

The return statement is the first statement we’ve seen, and it behaves differently than an expression. For example, you can’t use one as the expression in a simple function, because it isn’t an expression:

(() => return 0)()

//=> ERROR

Statements belong inside blocks and only inside blocks. Some languages simplify this by making everything an expression, but JavaScript maintains this distinction, so when learning JavaScript we also learn about statements like function declarations, for loops, if statements, and so forth. We’ll see a few more of these later.

functions that evaluate to functions

If an expression that evaluates to a function is, well, an expression, and if a return statement can have any expression on its right side… Can we put an expression that evaluates to a function on the right side of a function expression?

Yes:

() => () => 0

That’s a function! It’s a function that when applied, evaluates to a function that when applied, evaluates to 0. So we have a function, that returns a function, that returns zero. Likewise:

() => () => true

That’s a function, that returns a function, that returns true:

(() => () => true)()()

//=> true

We could, of course, do the same thing with a block if we wanted:

() => () => { return true; }

But we generally don’t.
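The outer application produces an ordinary function value. That means we can stop halfway, keep that value, and apply it later. Here is a sketch that uses const (introduced later in this chapter) and the hypothetical names makeTrue and alwaysTrue:

```javascript
// A function that returns a function that returns true.
const makeTrue = () => () => true;

// Applying it once gives us a function value, not true.
const alwaysTrue = makeTrue();
console.log(typeof alwaysTrue); // function

// Applying that function gives us true.
console.log(alwaysTrue()); // true

// The block-bodied variant behaves the same way.
console.log((() => () => { return true; })()()); // true
```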

Well. We’ve been very clever, but so far this all seems very abstract. Diffraction of a crystal is beautiful and interesting in its own right, but you can’t blame us for wanting to be shown a practical use for it, like being able to determine the composition of a star millions of light years away. So… In the next chapter, “I’d Like to Have an Argument, Please,” we’ll see how to make functions practical.

Ah. I’d Like to Have an Argument, Please.10

Up to now, we’ve looked at functions without arguments. We haven’t even said what an argument is, only that our functions don’t have any.

Let’s make a function with an argument:

(room) => {}

This function has one argument, room, and an empty body. Here’s a function with two arguments and an empty body:

(room, board) => {}

I’m sure you are perfectly comfortable with the idea that this function has two arguments, room, and board. What does one do with the arguments? Use them in the body, of course. What do you think this is?

(diameter) => diameter * 3.14159265

It’s a function for calculating the circumference of a circle given the diameter. I read that aloud as “When applied to a value representing the diameter, this function returns the diameter times 3.14159265.”

Remember that to apply a function with no arguments, we wrote (() => {})(). To apply a function with an argument (or arguments), we put the argument (or arguments) within the parentheses, like this:

((diameter) => diameter * 3.14159265)(2)

//=> 6.2831853

You won’t be surprised to see how to write and apply a function to two arguments:

((room, board) => room + board)(800, 150)

//=> 950

call by value

Like most contemporary programming languages, JavaScript uses the “call by value” evaluation strategy. That means that when you write some code that appears to apply a function to an expression or expressions, JavaScript evaluates all of those expressions and applies the function to the resulting value(s).

So when you write:

((diameter) => diameter * 3.14159265)(1 + 1)

//=> 6.2831853

What happened internally is that the expression 1 + 1 was evaluated first, resulting in 2. Then our circumference function was applied to 2.11
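We can observe this order of evaluation directly. In this sketch, the hypothetical helper note records a label before returning its value. The resulting trace shows that the argument expression 1 + 1 is evaluated before the function body runs.

```javascript
// A hypothetical record of evaluation order.
const trace = [];

// Hypothetical helper: records a label, then returns the value unchanged.
const note = (label, value) => (trace.push(label), value);

const circumference =
  ((diameter) => (trace.push("body"), diameter * 3.14159265))(note("argument", 1 + 1));

console.log(circumference); // 6.2831853
console.log(trace);         // [ 'argument', 'body' ]
```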

We’ll see below that while JavaScript always calls by value, the notion of a “value” has additional subtlety. But before we do, let’s look at variables.

variables and bindings

Right now everything looks simple and straightforward, and we can move on to talk about arguments in more detail. And we’re going to work our way up from (diameter) => diameter * 3.14159265 to functions like:

(x) => (y) => x

In order to talk about how this works, we should agree on a few terms (you may already know them, but let’s check in together and “synchronize our dictionaries”). The first x, the one in (x) => ..., is an argument. The y in (y) => ... is another argument. The second x, the one in => x, is not an argument; it’s an expression referring to a variable. Arguments and variables work the same way whether we’re talking about (x) => (y) => x or just plain (x) => x.

Every time a function is invoked (“invoked” means “applied to zero or more arguments”), a new environment is created. An environment is a (possibly empty) dictionary that maps variables to values by name. The x in the expression that we call a “variable” is itself an expression that is evaluated by looking up the value in the environment.

How does the value get put in the environment? Well for arguments, that is very simple. When you apply the function to the arguments, an entry is placed in the dictionary for each argument. So when we write:

((x) => x)(2)

//=> 2

What happens is this:

- JavaScript parses this whole thing as an expression made up of several sub-expressions.

- It then starts evaluating the expression, including evaluating sub-expressions.

- One sub-expression, (x) => x, evaluates to a function.

- Another, 2, evaluates to the number 2.

- JavaScript now evaluates applying the function to the argument 2. Here’s where it gets interesting…

- An environment is created.

- The value ‘2’ is bound to the name ‘x’ in the environment.

- The expression ‘x’ (the right side of the function) is evaluated within the environment we just created.

- The value of a variable when evaluated in an environment is the value bound to the variable’s name in that environment, which is ‘2’.

- And that’s our result.

When we talk about environments, we’ll use an unsurprising syntax for showing their bindings: {x: 2, ...}. This means that the environment is a dictionary, that the value 2 is bound to the name x, and that there might be other stuff in that dictionary we aren’t discussing right now.

call by sharing

Earlier, we distinguished JavaScript’s value types from its reference types. At that time, we looked at how JavaScript distinguishes objects that are identical from objects that are not. Now it is time to take another look at the distinction between value and reference types.

There is a property that JavaScript strictly maintains: When a value–any value–is passed as an argument to a function, the value bound in the function’s environment must be identical to the original.

We said that JavaScript binds names to values, but we didn’t say what it means to bind a name to a value. Now we can elaborate: When JavaScript binds a value-type to a name, it makes a copy of the value and places the copy in the environment. As you recall, value types like strings and numbers are identical to each other if they have the same content. So JavaScript can make as many copies of strings, numbers, or booleans as it wishes.

What about reference types? JavaScript does not place copies of reference values in any environment. JavaScript places references to reference types in environments, and when the value needs to be used, JavaScript uses the reference to obtain the original.

Because many references can share the same value, and because JavaScript passes references as arguments, JavaScript can be said to implement “call by sharing” semantics. Call by sharing is generally understood to be a specialization of call by value, and it explains why some values are known as value types and other values are known as reference types.

And with that, we’re ready to look at closures. When we combine our knowledge of value types, reference types, arguments, and closures, we’ll understand why this function always evaluates to true no matter what argument12 you apply it to:

(value) =>
  ((ref1, ref2) => ref1 === ref2)(value, value)
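Here is that function applied to a few values, bound to the hypothetical name alwaysIdentical. When a single object is passed twice, both parameters are bound to the same reference. The last comparison shows why identity matters for reference types: two objects with the same contents are still not identical.

```javascript
// The function from the text, bound to a hypothetical name so we can apply it.
const alwaysIdentical = (value) =>
  ((ref1, ref2) => ref1 === ref2)(value, value);

const cup = { size: "allongé" };   // a reference type
const other = { size: "allongé" }; // a distinct object with the same contents

console.log(alwaysIdentical(cup)); // true: both parameters share one object
console.log(alwaysIdentical(42));  // true: value types are identical by content
console.log(cup === other);        // false: same contents, different objects
```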

Closures and Scope

It’s time to see how a function within a function works:

((x) => (y) => x)(1)(2)

//=> 1

First off, let’s use what we learned above. Given (some function)(some argument), we know that we apply the function to the argument, create an environment, bind the value of the argument to the name, and evaluate the function’s expression. So we do that first with this code:

((x) => (y) => x)(1)

//=> [Function]

The environment belonging to the function with signature (x) => ... becomes {x: 1, ...}, and the result of applying the function is another function value. It makes sense that the result value is a function, because the expression for (x) => ...’s body is:

(y) => x

So now we have a value representing that function. Then we’re going to take the value of that function and apply it to the argument 2, something like this:

((y) => x)(2)

So we seem to get a new environment {y: 2, ...}. How is the expression x going to be evaluated in that function’s environment? There is no x in its environment, it must come from somewhere else.

if functions without free variables are pure, are closures impure?

The function (y) => x is interesting. It contains a free variable, x.13 A free variable is one that is not bound within the function. Up to now, we’ve only seen one way to “bind” a variable, namely by passing in an argument with the same name. Since the function (y) => x doesn’t have an argument named x, the variable x isn’t bound in this function, which makes it “free.”

Now that we know that variables used in a function are either bound or free, we can bifurcate functions into those with free variables and those without:

- Functions containing no free variables are called pure functions.

- Functions containing one or more free variables are called closures.

Pure functions are easiest to understand. They always mean the same thing wherever you use them. Here are some pure functions we’ve already seen:

() => {}

(x) => x

(x) => (y) => x

The first function doesn’t have any variables, therefore doesn’t have any free variables. The second doesn’t have any free variables, because its only variable is bound. The third one is actually two functions, one inside the other. (y) => ... has a free variable, but the entire expression refers to (x) => ..., and it doesn’t have a free variable: The only variable anywhere in its body is x, which is certainly bound within (x) => ....

From this, we learn something: A pure function can contain a closure.

Pure functions always mean the same thing because all of their “inputs” are fully defined by their arguments. Not so with a closure. If I present to you this pure function (x, y) => x + y, we know exactly what it does with (2, 2). But what about this closure: (y) => x + y? We can’t say what it will do with argument (2) without understanding the magic for evaluating the free variable x.
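To make the contrast concrete, this sketch gives the free variable x a binding by wrapping (y) => x + y in another function. The name makeAdder and its uses are hypothetical, not from the text. The same closure code produces different results depending on the environment it was created in.

```javascript
// A pure function: everything it uses arrives through its parameters.
const add = (x, y) => x + y;

// (y) => x + y has a free variable x; the outer function supplies its binding.
const makeAdder = (x) => (y) => x + y;

const addTen = makeAdder(10);
const addOne = makeAdder(1);

console.log(add(2, 2)); // 4
console.log(addTen(2)); // 12: x is bound to 10 in this closure's parent environment
console.log(addOne(2)); // 3: same code, different parent environment
```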

it’s always the environment

To understand how closures are evaluated, we need to revisit environments. As we’ve said before, all functions are associated with an environment. We also hand-waved something when describing our environment. Remember that we said the environment for ((x) => (y) => x)(1) is {x: 1, ...} and that the environment for ((y) => x)(2) is {y: 2, ...}? Let’s fill in the blanks!

The environment for ((y) => x)(2) is actually {y: 2, '..': {x: 1, ...}}. '..' means something like “parent” or “enclosure” or “super-environment.” It’s (x) => ...’s environment, because the function (y) => x is within (x) => ...’s body. So whenever a function is applied to arguments, its environment always has a reference to its parent environment.

And now you can guess how we evaluate ((y) => x)(2) in the environment {y: 2, '..': {x: 1, ...}}. The variable x isn’t in (y) => ...’s immediate environment, but it is in its parent’s environment, so it evaluates to 1 and that’s what ((y) => x)(2) returns even though it ended up ignoring its own argument.
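The lookup rule can be modelled with plain objects. This is a toy sketch. It assumes an environment is a dictionary whose '..' key points at its parent, exactly as in the notation above. Real engines do not expose environments this way. The names lookup, globalEnv, outer, and inner are hypothetical.

```javascript
// Toy model: search an environment, then each parent in turn.
const lookup = (env, name) => {
  if (env === undefined) {
    // Ran out of parents without finding a binding.
    throw new ReferenceError(name + " is not defined");
  }
  if (Object.prototype.hasOwnProperty.call(env, name)) {
    return env[name];
  }
  return lookup(env[".."], name);
};

const globalEnv = {};                    // stand-in for the global environment
const outer = { x: 1, "..": globalEnv }; // environment for ((x) => ...)(1)
const inner = { y: 2, "..": outer };     // environment for ((y) => x)(2)

console.log(lookup(inner, "y")); // 2, found in the function's own environment
console.log(lookup(inner, "x")); // 1, found in the parent environment
```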

Functions can have grandparents too:

(x) =>
  (y) =>
    (z) => x + y + z

This function does much the same thing as:

(x, y, z) => x + y + z

Only you call it with (1)(2)(3) instead of (1, 2, 3). The other big difference is that you can call it with (1) and get a function back that you can later call with (2)(3).
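A quick check of that claim, with hypothetical names for the two forms:

```javascript
// Curried: one argument per application.
const addCurried = (x) => (y) => (z) => x + y + z;

// Uncurried: all three arguments at once.
const addAll = (x, y, z) => x + y + z;

console.log(addCurried(1)(2)(3)); // 6
console.log(addAll(1, 2, 3));     // 6

// Supplying only x returns a function that remembers it for later.
const addOneThen = addCurried(1);
console.log(addOneThen(2)(3));    // 6
```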

shadowy variables from a shadowy planet

An interesting thing happens when a variable has the same name as an ancestor environment’s variable. Consider:

(x) =>
  (x, y) => x + y

The function (x, y) => x + y is a pure function, because its x is defined within its own environment. Although its parent also defines an x, it is ignored when evaluating x + y. JavaScript always searches for a binding starting with the function’s own environment and then each parent in turn until it finds one. The same is true of:

(x) =>
  (x, y) =>
    (w, z) =>
      (w) =>
        x + y + z

When evaluating x + y + z, JavaScript will find z in the parent scope and x and y in the grandparent scope. The x in the great-grandparent scope is ignored, as are both ws. When a variable has the same name as an ancestor environment’s binding, it is said to shadow the ancestor.
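Supplying concrete values makes the shadowing visible. In this sketch, the name nested and the argument values are arbitrary choices. The outermost x is 100 and both ws are large numbers, yet none of them contribute to the result.

```javascript
const nested =
  (x) =>
    (x, y) =>
      (w, z) =>
        (w) =>
          x + y + z;

// x = 1 and y = 2 come from (x, y) => ...; z = 3 comes from (w, z) => ...
// The outer x (100) and both ws (1000, 10000) are shadowed or unused.
console.log(nested(100)(1, 2)(1000, 3)(10000)); // 6
```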

This is often a good thing.

which came first, the chicken or the egg?

This behaviour of pure functions and closures has many, many consequences that can be exploited to write software. We are going to explore them in some detail as well as look at some of the other mechanisms JavaScript provides for working with variables and mutable state.

But before we do so, there’s one final question: Where does the ancestry start? If there’s no other code in a file, what is (x) => x’s parent environment?

JavaScript always has the notion of at least one environment we do not control: A global environment in which many useful things are bound such as libraries full of standard functions. So when you invoke ((x) => x)(1) in the REPL, its full environment is going to look like this: {x: 1, '..': global environment}.

Sometimes, programmers wish to avoid this. If you don’t want your code to operate directly within the global environment, what can you do? Create an environment for it, of course. Many programmers choose to write every JavaScript file like this:

// top of the file
(() => {

  // ... lots of JavaScript ...

})();
// bottom of the file

The effect is to insert a new, empty environment in between the global environment and your own functions: {x: 1, '..': {'..': global environment}}. As we’ll see when we discuss mutable state, this helps to prevent programmers from accidentally changing the global state that is shared by all code in the program.
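Here is a minimal sketch of the effect: names bound inside the wrapper exist only in its environment. The IIFE returns one function to the outside world, and the secret binding stays inside. The names exported and secret are illustrative.

```javascript
// The IIFE creates its own environment; only the returned function escapes.
const exported = (() => {
  const secret = 42; // bound only inside the IIFE's environment

  return (n) => n + secret;
})();

console.log(exported(1));   // 43
console.log(typeof secret); // undefined: secret is not bound out here
```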

That Constant Coffee Craving

Up to now, all we’ve really seen are anonymous functions, functions that don’t have a name. This feels very different from programming in most other languages, where the focus is on naming functions, methods, and procedures. Naming things is a critical part of programming, but all we’ve seen so far is how to name arguments.

There are other ways to name things in JavaScript, but before we learn some of those, let’s see how to use what we already have to name things. Let’s revisit a very simple example:

(diameter) => diameter * 3.14159265

What is this “3.14159265” number? PI, obviously. We’d like to name it so that we can write something like:

(diameter) => diameter * PI

In order to bind 3.14159265 to the name PI, we’ll need a function with a parameter of PI applied to an argument of 3.14159265. If we put our function expression in parentheses, we can apply it to the argument of 3.14159265:

((PI) =>
  // ????
)(3.14159265)

What do we put inside our new function that binds 3.14159265 to the name PI when evaluated? Our circumference function, of course:

((PI) =>
  (diameter) => diameter * PI
)(3.14159265)

This expression, when evaluated, returns a function that calculates circumferences. That sounds bad, but when we think about it, (diameter) => diameter * 3.14159265 is also an expression, that when evaluated, returns a function that calculates circumferences. All of our “functions” are expressions. This one has a few more moving parts, that’s all. But we can use it just like (diameter) => diameter * 3.14159265.

Let’s test it:

((diameter) => diameter * 3.14159265)(2)

//=> 6.2831853

((PI) =>
  (diameter) => diameter * PI
)(3.14159265)(2)

//=> 6.2831853

That works! We can bind anything we want in an expression by wrapping it in a function that is immediately invoked with the value we want to bind.14

inside-out

There’s another way we can make a function that binds 3.14159265 to the name PI and then uses that in its expression. We can turn things inside-out by putting the binding inside our diameter calculating function, like this:

(diameter) =>
  ((PI) =>
    diameter * PI)(3.14159265)

It produces the same result as our previous expressions for a circumference-calculating function:

((diameter) => diameter * 3.14159265)(2)

//=> 6.2831853

((PI) =>
  (diameter) => diameter * PI
)(3.14159265)(2)

//=> 6.2831853

((diameter) =>
  ((PI) =>
    diameter * PI)(3.14159265))(2)

//=> 6.2831853

Which one is better? Well, the first one seems simplest, but a half-century of experience has taught us that names matter. A “magic literal” like 3.14159265 is anathema to sustainable software development.

The third one is easiest for most people to read. It separates concerns nicely: The “outer” function describes its parameters:

(diameter) =>
  // ...

Everything else is encapsulated in its body. That’s how it should be: naming PI is its concern, not ours. The other formulation:

((PI) =>
  // ...
)(3.14159265)

“Exposes” naming PI first, and we have to look inside to find out why we care. So, should we always write this?

(diameter) =>
  ((PI) =>
    diameter * PI)(3.14159265)

Well, the wrinkle with this is that, typically, invoking functions is considerably more expensive than evaluating expressions. Every time we invoke the outer function, we’ll invoke the inner function. We could get around this by writing:

((PI) =>
  (diameter) => diameter * PI
)(3.14159265)

But then we’ve obfuscated our code, and we don’t want to do that unless we absolutely have to.
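We can make the difference visible by counting how often each PI-binding function is invoked. This sketch uses a hypothetical calls object purely as a counter. It counts invocations rather than measuring time, since actual performance depends on the engine.

```javascript
// Hypothetical counters: how many times each PI-binding function runs.
const calls = { insideOut: 0, outsideIn: 0 };

// Inside-out: the inner IIFE runs on every call to insideOut.
const insideOut = (diameter) =>
  ((PI) => (calls.insideOut++, diameter * PI))(3.14159265);

// Outside-in: the IIFE runs once, when outsideIn is created.
const outsideIn = ((PI) =>
  (calls.outsideIn++, (diameter) => diameter * PI)
)(3.14159265);

[1, 2, 3].forEach((d) => {
  insideOut(d);
  outsideIn(d);
});

console.log(calls); // { insideOut: 3, outsideIn: 1 }
```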

What would be very nice is if the language gave us a way to bind names inside of blocks without incurring the cost of a function invocation. And JavaScript does.

const

Another way to write our “circumference” function would be to pass PI along with the diameter argument, something like this:

(diameter, PI) => diameter * PI

And we could use it like this:

((diameter, PI) => diameter * PI)(2, 3.14159265)

//=> 6.2831853

This differs from our example above in that there is only one environment, rather than two. We have one binding in the environment representing our regular argument, and another representing our “constant.” That’s more efficient, and it’s almost what we wanted all along: A way to bind 3.14159265 to a readable name.

JavaScript gives us a way to do that, the const keyword. We’ll learn a lot more about const in future chapters, but here’s the most important thing we can do with const:

(diameter) => {
  const PI = 3.14159265;

  return diameter * PI
}

The const keyword introduces one or more bindings in the block that encloses it. It doesn’t incur the cost of a function invocation. That’s great. Even better, it puts the symbol (like PI) close to the value (3.14159265). That’s much better than what we were writing.

We use the const keyword in a const statement. const statements occur inside blocks; we can’t use them when we write a fat arrow that has an expression as its body.

It works just as we want. Instead of:

((diameter) =>
  ((PI) =>
    diameter * PI)(3.14159265))(2)

Or:

((diameter, PI) => diameter * PI)(2, 3.14159265)

//=> 6.2831853

We write:

((diameter) => {
  const PI = 3.14159265;

  return diameter * PI
})(2)

//=> 6.2831853

We can bind any expression. Functions are expressions, so we can bind helper functions:

(d) => {
  const calc = (diameter) => {
    const PI = 3.14159265;

    return diameter * PI
  };

  return "The circumference is " + calc(d)
}

Notice calc(d)? This underscores what we’ve said: if we have an expression that evaluates to a function, we apply it with (). A name that’s bound to a function is a valid expression evaluating to a function.15

We can bind more than one name-value pair by separating them with commas. For readability, most people put one binding per line:

(d) => {
  const PI = 3.14159265,
        calc = (diameter) => diameter * PI;

  return "The circumference is " + calc(d)
}
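Here is the function with two const bindings applied to a diameter of 2, bound to the hypothetical name describe:

```javascript
const describe = (d) => {
  const PI = 3.14159265,
        calc = (diameter) => diameter * PI; // calc can see PI, bound just above

  return "The circumference is " + calc(d);
};

console.log(describe(2)); // The circumference is 6.2831853
```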

nested blocks

Up to now, we’ve only ever seen blocks we use as the body of functions. But there are other kinds of blocks. One of the places you can find blocks is in an if statement. In JavaScript, an if statement looks like this:

(n) => {
  const even = (x) => {
    if (x === 0)
      return true;
    else
      return !even(x - 1);
  }
  return even(n)
}

And it works for fairly small numbers:

((n) => {
  const even = (x) => {
    if (x === 0)
      return true;
    else
      return !even(x - 1);
  }
  return even(n)
})(13)

//=> false

The if statement is a statement, not an expression (an unfortunate design choice), and its clauses are statements or blocks. So we could also write something like:

(n) => {
  const even = (x) => {
    if (x === 0)
      return true;
    else {
      const odd = (y) => !even(y);

      return odd(x - 1);
    }
  }
  return even(n)
}

And this also works:

((n) => {
  const even = (x) => {
    if (x === 0)
      return true;
    else {
      const odd = (y) => !even(y);

      return odd(x - 1);
    }
  }
  return even(n)
})(42)

//=> true

We’ve used a block as the else clause, and since it’s a block, we’ve placed a const statement inside it.

const and lexical scope

This seems very straightforward, but alas, there are some semantics of binding names that we need to understand if we’re to place const anywhere we like. The first thing to ask ourselves is, what happens if we use const to bind two different values to the “same” name?

Let’s back up and reconsider how closures work. What happens if we use parameters to bind two different values to the same name?

Here’s the second formulation of our diameter function, bound to a name using an IIFE:

((diameter_fn) =>
  // ...
)(
  ((PI) =>
    (diameter) => diameter * PI
  )(3.14159265)
)

It’s more than a bit convoluted, but it binds ((PI) => (diameter) => diameter * PI)(3.14159265) to diameter_fn and evaluates the expression that we’ve elided. We can use any expression in there, and that expression can invoke diameter_fn. For example:

((diameter_fn) =>
  diameter_fn(2)
)(
  ((PI) =>
    (diameter) => diameter * PI
  )(3.14159265)
)

//=> 6.2831853

We know this from the chapter on closures, but even though PI is not bound when we invoke diameter_fn by evaluating diameter_fn(2), PI is bound when we evaluated (diameter) => diameter * PI, and thus the expression diameter * PI is able to access values for PI and diameter when we evaluate diameter_fn.

This is called lexical scoping, because we can discover where a name is bound by looking at the source code for the program. We can see that PI is bound in an environment surrounding (diameter) => diameter * PI; we don’t need to know where diameter_fn is invoked.

We can test this by deliberately creating a “conflict:”

((diameter_fn) =>
  ((PI) =>
    diameter_fn(2)
  )(3)
)(
  ((PI) =>
    (diameter) => diameter * PI
  )(3.14159265)
)

//=> 6.2831853

Although we have bound 3 to PI in the environment surrounding diameter_fn(2), the value that counts is 3.14159265, the value we bound to PI in the environment surrounding (diameter) => diameter * PI.

That much we can carefully work out from the way closures work. Does const work the same way? Let’s find out:

((diameter_fn) => {
  const PI = 3;

  return diameter_fn(2)
})(
  (() => {
    const PI = 3.14159265;

    return (diameter) => diameter * PI
  })()
)

//=> 6.2831853

Yes. Binding values to names with const works just like binding values to names with parameter invocations, it uses lexical scope.

are consts also from a shadowy planet?

We just saw that values bound with const use lexical scope, just like values bound with parameters. They are looked up in the environment where they are declared. And we know that functions create environments. Parameters are declared when we create functions, so it makes sense that parameters are bound to environments created when we invoke functions.

But const statements can appear inside blocks, and we saw that blocks can appear inside of other blocks, including function bodies. So where are const variables bound? In the function environment? Or in an environment corresponding to the block?

We can test this by creating another conflict. This time, instead of binding the same name in two unrelated places, we’ll bind two different values to the same name in two environments, one completely enclosed by the other.

Let’s start, as above, by doing this with parameters. We’ll start with:

((PI) =>
  (diameter) => diameter * PI
)(3.14159265)

And gratuitously wrap it in another IIFE so that we can bind PI to something else:

((PI) =>
  ((PI) =>
    (diameter) => diameter * PI
  )(3.14159265)
)(3)

This still evaluates to a function that calculates circumferences:

((PI) =>
  ((PI) =>
    (diameter) => diameter * PI
  )(3.14159265)
)(3)(2)

//=> 6.2831853

And we can see that our diameter * PI expression uses the binding for PI in the closest parent environment. But one question remains: Did binding 3.14159265 to PI somehow change the binding in the “outer” environment? Let’s rewrite things slightly differently:

((PI) => {
  ((PI) => {})(3);

  return (diameter) => diameter * PI;
})(3.14159265)

Now we bind 3 to PI in an otherwise empty IIFE inside of our IIFE that binds 3.14159265 to PI. Does that binding “overwrite” the outer one? Will our function return 6 or 6.2831853? This is a book, you’ve already scanned ahead, so you know that the answer is no, the inner binding does not overwrite the outer binding:

((PI) => {
  ((PI) => {})(3);

  return (diameter) => diameter * PI;
})(3.14159265)(2)

//=> 6.2831853

We say that when we bind a variable using a parameter inside another binding, the inner binding shadows the outer binding. It has effect inside its own scope, but does not affect the binding in the enclosing scope.

So what about const? Does it work the same way?

((diameter) => {
  const PI = 3.14159265;

  (() => {
    const PI = 3;
  })();

  return diameter * PI;
})(2)

//=> 6.2831853

Yes, names bound with const shadow enclosing bindings just like parameters. But wait! There’s more!!!

Parameters are only bound when we invoke a function. That’s why we made all these IIFEs. But const statements can appear inside blocks. What happens when we use a const inside of a block?

We’ll need a gratuitous block. We’ve seen if statements, what could be more gratuitous than:

if (true) {
  // an immediately invoked block statement (IIBS)
}

Let’s try it:

((diameter) => {
  const PI = 3;

  if (true) {
    const PI = 3.14159265;

    return diameter * PI;
  }
})(2)

//=> 6.2831853

((diameter) => {
  const PI = 3.14159265;

  if (true) {
    const PI = 3;
  }
  return diameter * PI;
})(2)

//=> 6.2831853

Ah! const statements don’t just shadow values bound within the environments created by functions, they shadow values bound within environments created by blocks!

This is enormously important. Consider the alternative: What if const could be declared inside of a block, but always bound the name in the function’s scope? In that case, we’d see things like this:

((diameter) => {
  const PI = 3.14159265;

  if (true) {
    const PI = 3;
  }
  return diameter * PI;
})(2)

//=> would return 6 if const had function scope

If const always bound its value to the name defined in the function’s environment, placing a const statement inside of a block would merely rebind the existing name, overwriting its old contents. That would be super-confusing. And this code would “work:”

((diameter) => {

if (true) {

const PI = 3.14159265;

}

return diameter * PI;

})(2)

//=> would return 6.2831853 if const had function scope

Again, confusing. Typically, we want to bind our names as close to where we need them as possible. This design rule is called the Principle of Least Privilege, and it has both quality and security implications. Being able to bind a name inside of a block means that if the name is only needed in the block, we are not “leaking” its binding to other parts of the code that do not need to interact with it.
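With const's real block scoping, the hypothetical example that would “work” doesn't: PI is not bound outside the block, so reading it there throws a ReferenceError. A minimal sketch that catches the error to show the binding didn't leak (leakCheck is an illustrative name):

```javascript
const leakCheck = ((diameter) => {
  if (true) {
    const PI = 3.14159265; // bound only inside this block
  }

  try {
    return diameter * PI; // no PI is bound in this scope
  } catch (error) {
    return error instanceof ReferenceError ? 'ReferenceError' : 'something else';
  }
})(2);

// leakCheck is 'ReferenceError'
```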

rebinding

By default, JavaScript permits us to rebind new values to names bound with a parameter. For example, we can write:

const evenStevens = (n) => {

if (n === 0) {

return true;

}

else if (n === 1) {

return false;

}

else {

n = n - 2;

return evenStevens(n);

}

}

evenStevens(42)

//=> true

The line n = n - 2; rebinds a new value to the name n. We will discuss this at much greater length in Reassignment, but long before we do, let’s try a similar thing with a name bound using const. We’ve already bound evenStevens using const; let’s try rebinding it:

evenStevens = (n) => {

if (n === 0) {

return true;

}

else if (n === 1) {

return false;

}

else {

return evenStevens(n - 2);

}

}

//=> ERROR, evenStevens is read-only

JavaScript does not permit us to rebind a name that has been bound with const. We can shadow it by using const to declare a new binding in a new function or block scope, but we cannot rebind a name that was bound with const in an existing scope.

This is valuable because it greatly simplifies the analysis of programs: when we see at a glance that a name is bound with const, we need never worry that it will be rebound to a different value.
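A minimal sketch of the same rule with the error caught, so we can inspect it (answer and outcome are illustrative names; engines throw a TypeError at run time, though the exact message varies):

```javascript
const answer = 42;

const outcome = (() => {
  try {
    answer = 43; // attempt to rebind a name bound with const
    return 'rebound';
  } catch (error) {
    // Assigning to a const binding throws a TypeError at run time.
    return error instanceof TypeError ? 'TypeError' : 'something else';
  }
})();

// outcome is 'TypeError', and answer is still 42
```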

Naming Functions

Let’s get right to it. This code does not name a function:

const repeat = (str) => str + str

It doesn’t name the function “repeat” for the same reason that const answer = 42 doesn’t name the number 42. This syntax binds an anonymous function to a name in an environment, but the function itself remains anonymous.

the function keyword

JavaScript does have a syntax for naming a function: we use the function keyword. Until ECMAScript 2015 introduced fat arrow functions, function was the usual syntax for writing functions.

Here’s our repeat function written using a “fat arrow”:

(str) => str + str

And here’s (almost) the exact same function written using the function keyword:

function (str) { return str + str }

Let’s look at the obvious differences:

- We introduce a function with the function keyword.

- Something else we’re about to discuss is optional.

- We have arguments in parentheses, just like fat arrow functions.

- We do not have a fat arrow; we go directly to the body.

- We always use a block; we cannot write function (str) str + str. This means that if we want our functions to return a value, we always need to use the return keyword.

If we leave out the “something optional” that comes after the function keyword, we can translate all of the fat arrow functions that we’ve seen into function keyword functions, e.g.

(n) => (1.618**n - (-1.618)**-n) / 2.236

Can be written as:

function (n) {

return (1.618**n - (-1.618)**-n) / 2.236;

}

This still does not name a function, but as we noted above, functions written with the function keyword have an optional “something else.” Could that “something else” name a function? Yes, of course.16

Here are our example functions written with names:

const repeat = function repeat (str) {

return str + str;

};

const fib = function fib (n) {

return (1.618**n - (-1.618)**-n) / 2.236;

};
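As a quick check that the two notations describe the same function, here is a sketch that evaluates both forms of the Fibonacci approximation. Two things to flag: fibArrow, fibFunction, sameResults, and firstThirteen are names we've made up, and we write (-1.618) in parentheses because JavaScript rejects an unparenthesized unary minus in front of **:

```javascript
// Binet-style approximation of the nth Fibonacci number, written both ways.
// (-1.618) must be parenthesized: JavaScript rejects -x ** y as ambiguous.
const fibArrow = (n) => (1.618**n - (-1.618)**-n) / 2.236;

const fibFunction = function fib (n) {
  return (1.618**n - (-1.618)**-n) / 2.236;
};

// Both forms produce identical values for the same input...
const sameResults = [0, 1, 2, 5, 10, 12].every((n) => fibArrow(n) === fibFunction(n));

// ...and, for small n, rounding recovers the Fibonacci sequence.
const firstThirteen = Array.from({ length: 13 }, (_, n) => Math.round(fibArrow(n)));

// sameResults is true
// firstThirteen is [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144]
```

Because 1.618 and 2.236 are rounded constants, the approximation drifts away from the exact Fibonacci numbers as n grows; rounding only recovers the sequence for small inputs like these.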

Placing a name between the function keyword and the argument list names the function. Confusingly, the name of the function is not exactly the same thing as the name we may choose to bind to the value of the function. For example, we can write:

const double = function repeat (str) {

return str + str;

}

In this expression, double is the name in the environment, but repeat is the function’s actual name. This is a named function expression. That may seem confusing, but think of the binding names as properties of the environment, not of the function, while the name of the function is a property of the function, not of the environment.

And indeed the name is a property:

double.name

//=> 'repeat'
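Here is a small sketch comparing the name property in three situations. The names unnamedName and inferred are ours; the third case relies on the name inference engines have performed since ECMAScript 2015, which copies a binding's name onto an otherwise anonymous function. That inferred name is a convenience of the engine and does not bind anything inside the function:

```javascript
// A named function expression carries its own name,
// whatever name we bind it to in the environment.
const double = function repeat (str) {
  return str + str;
};

// An anonymous function expression that is never bound has an empty name.
const unnamedName = (function (str) { return str + str; }).name;

// Since ECMAScript 2015, engines infer a name from a simple const binding.
const inferred = (str) => str + str;

// double.name   is 'repeat'
// unnamedName   is ''
// inferred.name is 'inferred'
```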

In this book we are not examining JavaScript’s tooling, such as the debuggers built into browsers, but we will note that when you navigate call stacks in modern tools, a function’s actual name is what gets displayed, so naming functions is very useful even if they don’t get a formal binding, e.g.

someBackboneView.on('click', function clickHandler () {

//...

});

Now, the function’s actual name has no effect on the environment in which it is used. To wit:

const bindingName = function actualName () {

//...

};

bindingName

//=> [Function: actualName]

actualName

//=> ReferenceError: actualName is not defined

So “actualName” isn’t bound in the environment where we use the named function expression. Is it bound anywhere else? Yes, it is. Here’s a function that determines whether a non-negative integer is even or not. We’ll use it in an IIFE so that we don’t have to bind it to a name with const:

(function even (n) {

if (n === 0) {

return true

}

else return !even(n - 1)

})(5)

//=> false

(function even (n) {

if (n === 0) {

return true

}

else return !even(n - 1)

})(2)

//=> true

Clearly, the name even is bound to the function within the function’s body. Is it bound to the function outside of the function’s body?

even

//=> Can't find variable: even

even is bound within the function itself, but not outside it. This is useful for making recursive functions as we see above, and it speaks to the principle of least privilege: If you don’t need to name it anywhere else, you needn’t.
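A sketch of the same idea with the function bound to a name of our choosing (isEven is illustrative, and like even above it assumes a non-negative integer):

```javascript
// "even" is bound inside the function's own body, which is what lets it recurse.
// Assumes n is a non-negative integer.
const isEven = function even (n) {
  return n === 0 ? true : !even(n - 1);
};

// isEven(5) is false and isEven(2) is true.
// Out here, only isEven is bound: typeof even is 'undefined'.
```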

function declarations

There is another syntax for naming and/or defining a function. It’s called a function declaration statement, and it looks a lot like a named function expression, only we use it as a statement:

function someName () {

// ...

}

This behaves a little like:

const someName = function someName () {

// ...

}

In that it binds a name in the environment to a named function. However, there are two important differences. First, function declarations are hoisted to the top of the function in which they occur.

Consider this example where we try to use the variable fizzbuzz as a function before we bind a function to it with const:

(function () {

return fizzbuzz();

const fizzbuzz = function fizzbuzz () {

return "Fizz" + "Buzz";

}

})()

//=> ReferenceError: Cannot access 'fizzbuzz' before initialization

We haven’t actually bound a function to the name fizzbuzz before we try to use it, so we get an error. But a function declaration works differently:

(function () {

return fizzbuzz();

function fizzbuzz () {

return "Fizz" + "Buzz";

}

})()

//=> 'FizzBuzz'

Although fizzbuzz is declared later in the function, JavaScript behaves as if we’d written:

(function () {

const fizzbuzz = function fizzbuzz () {

return "Fizz" + "Buzz";

}

return fizzbuzz();

})()

The definition of fizzbuzz is “hoisted” to the top of its enclosing scope (an IIFE in this case). This behaviour is a deliberate part of JavaScript’s design, intended to facilitate a certain style of programming where you put the main logic up front and the “helper functions” at the bottom. It is not necessary to declare functions in this way in JavaScript, but understanding the syntax and its behaviour (especially the way it differs from const) is essential for working with production code.
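Both behaviours can be observed side by side. In this sketch (fromDeclaration and fromConst are illustrative names) the declaration is called successfully before its line, while the const version throws a ReferenceError: the binding exists from the top of the function but cannot be read until its declaration has run, a period often called the temporal dead zone:

```javascript
// A function declaration is hoisted along with its body.
const fromDeclaration = (function () {
  return fizzbuzz();
  function fizzbuzz () {
    return "Fizz" + "Buzz";
  }
})();

// A const binding cannot be read before its declaration executes.
const fromConst = (function () {
  try {
    return fizzbuzz();
  } catch (error) {
    return error instanceof ReferenceError ? 'ReferenceError' : 'something else';
  }
  const fizzbuzz = function fizzbuzz () {
    return "Fizz" + "Buzz";
  };
})();

// fromDeclaration is 'FizzBuzz'
// fromConst is 'ReferenceError'
```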

function declaration caveats17

Function declarations were originally only supposed to be made at what we might call the “top level” of a function. Although JavaScript environments have long permitted code like the following, it was technically illegal before ECMAScript 2015, its behaviour still differs between strict and non-strict code, and it is definitely a bad idea:

(function (camelCase) {

return fizzbuzz();

if (camelCase) {

function fizzbuzz () {

return "Fizz" + "Buzz";

}

}

else {

function fizzbuzz () {

return "Fizz" + "Buzz";

}

}

})(true)

//=> 'FizzBuzz'? Or ERROR: Can't find variable: fizzbuzz?

Function declarations are not supposed to occur inside of blocks. The big trouble with declarations like this is that they may work just fine in your test environment but work a different way in production. Or they may work one way today and a different way when the JavaScript engine is updated, say with a new optimization.

Another caveat is that a function declaration cannot appear inside an expression: if it does, it’s a function expression. So this is a function declaration:

function trueDat () { return true }

But this is not:

(function trueDat () { return true })

The parentheses make this an expression, not a function declaration.
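A sketch making the difference visible (falseDat and wrapped are illustrative names): the declaration binds its name in the surrounding scope, while the parenthesized named function expression keeps its name to itself:

```javascript
// Statement position: a function declaration binds trueDat in this scope.
function trueDat () { return true }

// Inside parentheses it is an expression; its name is not bound out here.
const wrapped = (function falseDat () { return false });

// typeof trueDat  is 'function'
// typeof falseDat is 'undefined'
// wrapped.name    is 'falseDat'
```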

Combinators and Function Decorators

higher-order functions

As we’ve seen, JavaScript functions take values as arguments and return values. JavaScript functions are values, so JavaScript functions can take functions as arguments, return functions, or both. Generally speaking, a function that either takes functions as arguments, or returns a function, or both, is referred to as a “higher-order” function.

Here’s a very simple higher-order function that takes a function as an argument:

const repeat = (num, fn) =>

(num > 0)

? (repeat(num - 1, fn), fn(num))

: undefined

repeat(3, function (n) {

console.log(`Hello ${n}`)

})

//=>

'Hello 1'

'Hello 2'

'Hello 3'

undefined

Higher-order functions dominate JavaScript Allongé. But before we go on, we’ll talk about some specific types of higher-order functions.
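repeat takes a function as an argument. For contrast, here is a minimal sketch of a higher-order function that both takes a function and returns one; twice, increment, and addTwo are names we've made up for illustration:

```javascript
// Takes a function as an argument...
const twice = (fn) =>
  // ...and returns a new function that applies it two times.
  (value) => fn(fn(value));

const increment = (n) => n + 1;
const addTwo = twice(increment);

// addTwo(5) is 7
// twice((s) => s + '!')('hi') is 'hi!!'
```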

combinators

The word “combinator” has a precise technical meaning in mathematics:

“A combinator is a higher-order function that uses only function application and earlier defined combinators to define a result from its arguments.”–Wikipedia

If we were learning Combinatory Logic, we’d start with the most basic combinators like S, K, and I, and work up from there to practical combinators. We’d learn that the fundamental combinators are named after birds, following the example of Raymond Smullyan’s famous book To Mock a Mockingbird.

In this book, we will be using a looser definition of “combinator:” Higher-order pure functions that take only functions as arguments and return a function. We won’t be strict about using only previously defined combinators in their construction.

Let’s start with a useful combinator: Most programmers call it Compose, although the logicians call it the B combinator or “Bluebird.” Here is the typical18 programming implementation:

const compose = (a, b) =>

(c) => a(b(c))

Let’s say we have:

const addOne = (number) => number + 1;

const doubleOf = (number) => number * 2;

With compose, anywhere you would write

const doubleOfAddOne = (number) => doubleOf(addOne(number));

You could also write:

const doubleOfAddOne = compose(doubleOf, addOne);
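A short sketch checking the equivalence, repeating the definitions above so the snippet runs on its own. Note the order: compose applies its second argument first, so swapping the arguments gives a different function (addOneToDouble is an illustrative name):

```javascript
const compose = (a, b) =>
  (c) => a(b(c));

const addOne = (number) => number + 1;
const doubleOf = (number) => number * 2;

// compose applies its right-hand argument first.
const doubleOfAddOne = compose(doubleOf, addOne); // doubleOf(addOne(n))
const addOneToDouble = compose(addOne, doubleOf); // addOne(doubleOf(n))

// doubleOfAddOne(3) is 8, the same as doubleOf(addOne(3))
// addOneToDouble(3) is 7
```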