2. Risk Workflow

2.1 Concepts

2.1.1 Abusing the concept of RISK

As you read this book you will notice liberal references to the concept of RISK, especially when I discuss anything that has security or quality implications.

The reason is I find that RISK is a sufficiently broad concept that can encompass issues of security or quality in a way that makes sense.

I know that there are many, more formal definitions of RISK and all its sub-categories that could be used, but it is most important that in the real world we keep things simple, and avoid a proliferation of unnecessary terms.

Fundamentally, my definition of RISK is based on the concept of ‘behaviors’ and ‘side-effects of code’ (whether intentional or not). The key is to map reality and what is possible.

RISKs can also be used in multi-dimensional analysis, where multiple paths can be analyzed, each with a specific set of RISKs (depending on the chosen implementation).

For example, every threat (or RISK) in a threat model needs to have a corresponding RISK ticket, so that it can be tracked, managed and reported.

Making it expensive to do dangerous actions

A key concept is that we must make it harder for development teams to add features that have security, privacy, and quality implications.

On the other hand, we can’t really say ‘No’ to business owners, since they are responsible for the success of any current project. Business owners have very legitimate reasons to ask for those features. Business wishes are driven by (end)user wishes, (possibly defined by the market research department and documented in a MRD (Market requirement Document). Saying no to the business means saying no to the customer. The goal, therefore, is to enable users to do what they want, with an acceptable level of risk.

By providing multiple paths (with and without additional or new RISKs) we make the implications of specific decisions very clear.

What usually happens is that initially, Path A might look easier and faster than either of Paths B or C, but, after creating a threat model, and mapping out Path A’s RISK, in many cases Path B or C might be better options due to their reduced number of RISKs.

It is important to have these discussions before any code is written and while there is still the opportunity to propose changes. I’ve been involved in many projects where the security/risk review only occurs before the project goes live, which means there is zero opportunity to make any significant architectural changes.

Release Management (RM) should be the gating factor. RM should establish quality gates based on business and security team minimum bar.

2.1.2 Accepting risk

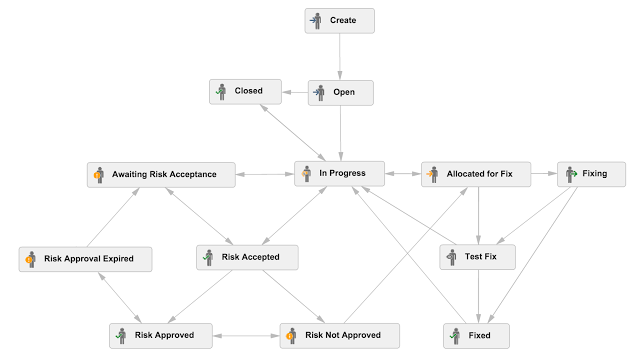

Accepting risk is always a topic of discussion, mostly caused by lack of information. The JIRA Workflow aims to make this process more objective and pragmatic.

Risks can be accepted for a variety of reasons:

- the system containing the risk will be decommissioned in the short term, and does not handle or process sensitive information

- the risk can only occur under very specific circumstances

- the cost of fixing (both money and other resources) is much higher than the cost of fixing operational issues

- the business is okay with the probability of occurrence and its financial/commercial impact

- the system is still under development and it is not live

- the assets managed/exposed by the affected risks are not that important

- the risk represents the current state of affairs and a significant effort would be required to change it

Risks should never be accepted for issues that

- threaten the operational existence of the company (e.g. losing a banking license)

- have significant financial impact (e.g. costs, fines due to data leaks)

- have significant business impact (e.g. losing customers)

- greatly impact the company’s reputation (e.g. front page news)

Not being attacked is both a blessing and a curse. It’s a blessing for a company that has not gone through the pain of an attack, but a curse because it is easy to gain a false sense of security.

The real challenge for companies that have NOT been attacked properly, or publicly, and don’t have an institutional memory of those incidents, is to be able to quantify these risks in real business, financial, and reputational terms.

This is why exploits are so important for companies that don’t have good internal references (or case studies) on why secure applications matter.

As one staff member or manager accepts risk on behalf of his boss, and this action is replicated throughout a company, all the way to the CTO, risks are accumulated. If risks are accepted throughout the management levels of a company, then responsibility should be borne in a similar way if things go wrong. Developers should be able to ensure that it is their manager who will get fired. That manager should make sure that his boss will get fired, and so on, all the way to the CTO and the CISO.

- business owners (or who controls the development pipeline) make large number of decisions with very little side effects

- add explanation of why pollution is a much better analogy than ‘Technical Debt’ (in measuring the side effects of coding and management decisions).

- Risk is a nice way to measure this polution

- ”…you are already being played in this game, so you might as well expose the game and tilt the rules in your favour…“ *

There is a video by Host Unknown, that lays out the consequences of thoughtlessly accepting risk. It is a comic piece, but with a very serious message. (Host Unknown presents: Accepted the Risk)

2.1.3 Can’t do Security Analysis when doing Code Review

One lesson I have learned is that the mindset and the focus that you have when you do security reviews are very different than when you work on normal feature and code analysis.

This is very important because as you accelerate in the DevOps world, it means that you start to ship code much faster, which in turn means that code hits production much faster. Logically, this means that vulnerabilities also hit production much faster.

In the past, almost through inertia, you prevented major vulnerabilities from propagating into production and being exposed to production data. Now, as you accelerate, vulnerabilities, and even maliciously introduced vulnerabilities, will be exposed into production more quickly.

This means that you must have security checks in place. The problem is, implementing those security checks requires a completely different mindset than implementing code reviews.

When you do a code review, you tend to visualize a slice of a model of the application. Your focus is fixed entirely on the problem at hand, and it is hard to think outside of that.

When you implement a security review, this approach becomes counter-intuitive, because many of the security reviews that you do are about following rabbit holes, and finding black spots. Your brain is not geared for this kind of work if you are more used to working on code reviews.

It doesn’t help that we still don’t have very good tests, which don’t focus on the behavior or the side effects of the components. Instead, tests tend to focus more on specific code changes which might be a subset of the behavior changes of the code change.

You need systems that can flag when something is a problem, or needs to be reviewed. Then, with a different mindset or even different people, or different times, go through the code and ask, “What are the unintended side effects? Does this match the threat model that was created?”

In a way, the point of the threat models is to determine and confirm the expected behavior. Ultimately, in security reviews you double-check these environments.

This requires a different mindset, because now you must follow the rabbit holes, and you must ask the following questions:

a] how does data get in here? b] what happens from here? c] who consumes this? d] how much do I trust this?

You ask the STRIDE questions, where you go through proofing, tampering, information disclosure, repudiation, denial of service, and you ask those questions across the multiple layers, and across multiple components. The better the test environment, and the better the technology you have to support you, the easier this task becomes. Of course, it becomes harder, if not impossible, when you don’t have a good test environment, or good technology, because you don’t have enough visibility.

Ideally, the static analysis should significantly help the execution of a security analysis task. The problem is, they still don’t expose a lot of the internal models and they don’t view themselves as tools to help with this analysis. This is crazy when you think about their assets.

2.1.4 Creating Small Tests

When opening issues focused on quality or security best practices (for example, a security assessment or a code review), it’s better to keep them as small as possible. Ideally, these issues are placed on a separate bug-tracking project (like the Security RISK one), since they can cause problems for project managers who like to keep their bug count small.

Since this type of information only exists while AppSec developers are looking at code, if the information isn’t captured, it will be lost, either forever, or until that bug or issue becomes active. It is very important that you have a place to put all those small issues, examples, and changes.

Capturing the small issues also helps to capture the high-level and critical security issues that are made of multiple low or medium issues. Their capture also helps to visualize the patterns that occur across the organization, for example, in an issue that effects dozens of teams or products.

This approach also provides a way to measure exactly what will occur during a quality pass or sprint, for example, when focusing on cleaning and improving the quality of the application.

The smaller the issue, the smaller the commit, the smaller the tests will be. As a result, the whole process will be smoother and easier to review.

It also creates a great two-way communication system between Development and AppSec, since it provides a great place for the team to collaborate.

When a new developer joins the team, they should start with fixing bugs and creating tests for them. This can be a great way for the new team member to understand what is going on, and how the application works.

Fixing these easy tests is also a good preparation for tackling more complex development tasks.

2.1.5 Creating Abuse Cases

Developer teams tend to focus on the ‘Happy Paths’, where everything works out exactly as expected. However, in agile environments, creating evil user stories linked to a user story can be a powerful technique to convey higher level threats.

Evil user stories (Abuse cases)

Evil user stories have a dependency with threat modeling and can be an effective way of translating higher level threats and mitigations. They can be imagined as a kind of malicious Murphy’s law 3.

Take for example a login flow. After doing a threat model on this flow one should have identified the following information:

Attackers: * registered users * unregistered users

Assets: * credentials * customer information

With this information, the following threats can be constructed:

- unregistered users bypassing the login functionality

- unregistered users enumerating accounts

- unregistered users brute-forcing passwords

- registered users accessing other users’ information

At the same time the team has constructed the following user stories that should be implemented: * “as a registered user I want to be able to login with my credentials so I can access my information”

Combined with the outcome of the threat model, the following evil user stories can be constructed: * “as an unregistered user I should not be able to login without credentials, and access customer information” * “as an unregistered user I should not be able to identify existing accounts and use them to attempt to login” * “as a user I should not be able to have unlimited attempts to log in and hijack someone’s account” * “as an authenticated user I should not be able to access other users’ information”

2.1.6 Deliver PenTest reports using JIRA

Using JIRA to deliver PenTest reports is much more efficient than creating a PDF to do so. Using JIRA allows for both a quick validation of findings, and direct communication between the developers and the AppSec team.

If customized properly, there are a number of tools that can act as triage tools between PenTest results and JIRA:

- ‘defect dojo’

- ‘bag of holding’

- ThreadFix

- Other…

Threat Modeling tools could also work well between PenTest results and JIRA.

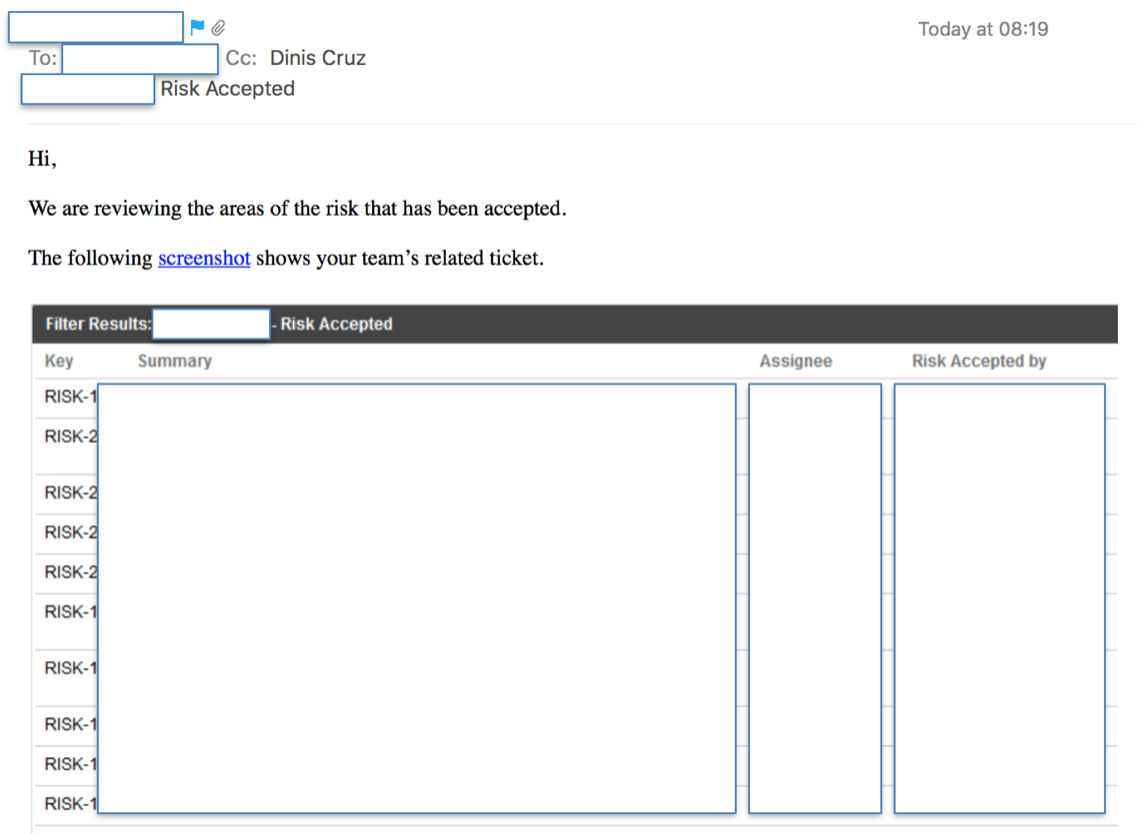

2.1.7 Email is not an Official Communication Medium

Emails are conversations, they are not official communication mediums. In companies, there is a huge amount of information and decisions that is only communicated using emails, namely:

- risks

- to-dos

- non-functional requirements

- re-factoring needs

- post-mortem analysis

This knowledge tends to only exist on an email thread or in the middle of a document. That is not good enough. Email is mostly noise, and once something goes into an email, it is often very difficult to find it again.

If information is not at the end of a link, like on a wiki page, bug-tracking system or source control solution like Git, it basically doesn’t exist.

It is especially important not to communicate security risks or quality issues in email, where it is not good enough to say to a manager, ”… by the way, here is something I am worried about…“.

You should create and send a JIRA RISK ticket to the manager.

This will allow you to track the situation, the information collected and the responses; in short, you can track exactly what is going on. This approach also gets around the problem of someone moving to other teams or companies. Their knowledge of a particular issue remains behind for someone else to use if they need to.

Emails are not a way to communicate official information; they are just a nice chat, and they should be understood as such. Important official information, in my view, should be hyperlinked.

The hyperlinkability of a piece of information is key, because once it has a hyperlinked location, you can point others to it, and a track record is created.

The way I look at it, if information is not available on the hyperlink, it doesn’t exist.

The Slack revolution

There is a Real-time messaging revolution happening, driven by tools like Slack or Matter-most, which are quickly becoming central to development and operational teams (some still use old-school tools like Basecamp, Jabber, IRC, IM or Skype).

One of the real powers of this new generation of collaboration tools is the integration with CI/CD and their ability to become the glue between teams and tools.

The problem is that all data is short-lived, and will soon disappear (there are some limited search capabilities, which are as bad as email).

Using Wikis as knowledge capture

JIRA issues can also be hard to find, especially since they tend to be focused on specific topics.

Labels, queries and reports help, but the best model is capture their knowledge by linking to them on Wikis (e.g. MediaWiki or Confluence) or document management tools (e.g. Umbraco or Sharepoint). The idea is to document lessons learned, how-to’s, and best practice.

2.1.8 Good Managers are not the Solution

When we talk about risk, workflows, business owners making decisions about development, and QA teams that don’t write tests, we often hear the comment, “If we had good managers, we wouldn’t have this problem”.

That statement implies that if you had good managers, you wouldn’t have the problem, because good managers would solve the problem. That is the wrong approach to the statement. Rather, if you had good managers, you wouldn’t have the problem, because good managers would ask the right questions before the problem even developed.

These workflows aren’t designed for when you have a good manager, a manager who pushes testing, who demands good releases, who demands releases every day, or who demands changes to be made immediately.

These workflows are designed for bad managers (I use the term reluctantly). Bad managers are not knowledgeable, or they are exclusively focused on the short-term benefits of business decisions, without taking to account the medium-term consequences of the same decisions. This goes back to the idea of pollution, where the manager says “Just ship it now, and we will deal with the pollution later”. With start-ups, sometimes managers will even say, “Push it out or we won’t have a company”.

The risk workflow, and the whole idea of making people accountable is exactly because of these kinds of situations, where poor decisions by management can cause huge problems.

We want to empower developers, the technical guys who are writing code and have a very good grasp of reality and potential side effects. They are the ones who should make technical decisions, because they are the ones who spend their time coding, and they understand what is going on.

2.1.9 Hyperlink Everything you do

Whether you are a developer or a security person, it is crucial that you link everything you do to a location where somebody can just click on a link and hit it. Make sure whatever you do is hyperlinkable.

This means that what you create is scalable, and it can be shared and found easily. It forms part of a workflow that is just about impossible if you don’t hyperlink your material.

An email is a black box, a dump of data which is a wasted opportunity because once an email is sent, it is difficult to find the information again. Yes, it is still possible to create a mess when you start to link things, connect things, and generate all sorts of data, but you are playing a better game. You are on a path that makes it much easier in the medium term for somebody to come in, click on the link, and make sure it is improved. It is a much better model.

Let others to help you.

If you share something with a link, in the future somebody can proactively find it, connect to it and do something about it. That is how you scale. Once you send enough links out to people, they learn where to look for information.

Every time I write something that is more than a couple of paragraphs long I try to include a link so that my future self, or somebody else in the future, will be able to find it and propagate that information without my active involvement.

Putting information in a public hyperlinked position encourages a culture of sharing. Making information available to a wider audience, either to the internet or internally in a company, sends the message that it is okay to share.

Sharing through hyperlinking is a powerful concept because when you send information to somebody directly it is very unlikely that you will note on each file whether it is okay to share.

But if you put data on a public, or on an internal easy-to-access, system, then you send a message to other players that it is okay to share this information more widely. Sending that link to other people has a huge impact on how that idea, or that concept, will propagate across the company and across your environment.

The thing to remember is that you are playing a long game. Your priority is not to get an immediate response, it is to change the pattern, stage the flows.

2.1.10 Linking source code to Risks

If you add links to risk as source code comments, you deploy a powerful and very useful technique with many benefits.

When you add links to the root cause location, and all the places where the risk exists, you make the risk visible. This reinforces the concept of cost (i.e. pollution) when insecure, or poor quality, code is written. Linking the source code to risk becomes a positive model when fixes delete the comments. When the comments are removed, the AppSec team is alerted to the need for a security review. Finally, tools can be built that will scan for these comments and provide a ‘risk pollution’ indicator.

- add coffee-script example from ‘Start with tests’ presentation

2.1.11 Mitigating Risk

One strategy to handle risk is to mitigate it.

Sometimes, external solutions, such as a WAF, provide a more effective solution.

If a WAF fixed the issue, and there are tests that prove it, then it is a valid fix. In this case, it is important to create a new RISK which says “Number of security vulnerabilities are currently being mitigated using a WAF. If that WAF is disabled, or if there is a WAF bypass exploit, then the vulnerabilities will become exploitable”

2.1.12 Passive aggressive strategy

Keep zooming in on the answers to refine the scope and focus of the issue.

Not finding something is a risk, and not having time to research something is a risk.

Sometimes, it is necessary to open tickets that state the obvious: * when we are not using SSL (or have an HTTP to HTTPS transition), then we should open a risk to be accepted with: “Our current SSL protection only works when the attacker we are trying to protect against (via man-in-the-middle) is not there“

2.1.13 Reducing complexity

The name of the game is to reduce the moving parts of a particular solution, its interactions, inputs, and behaviours.

Essentially, the aim is to reduce the complexity of what is being done.

2.1.14 Start with passing tests

- add content from presentation

2.1.15 Ten minute hack vs one day work

- 10m hacks vs 1 day proper coding will create more RISK tickets and source comments

- I get asked this a lot from developers

- There has to be a quantitive difference, which needs to be measured

That code sucks

- Ok can you prove it?

- Code analysers in IDE can help identify known bad patterns and provide awareness

- Why is it bad?

In new code legacy - if it also hard to refactor and change, then yes

2.1.16 The Pollution Analogy

When talking about risks, I prefer to use an pollution analogy rather than technical debt. The idea is that we measure the unintended negative consequences of creating something, which in essence is pollution.

In the past, pollution was seen an acceptable side effect of the industrial revolution. For a long time, pollution wasn’t seen as a problem in the same way that we don’t see security vulnerability as a problem today. We still don’t understand that gaping holes in our infrastructure, or in our code, are a massive problem for current and future generations.

We are still in the infancy of software security, where we are in the 1950s in terms of pollution. David Rice gave a great presentation4 where he talks about the history of pollution and how it maps perfectly with InfoSec and AppSec.

Finding a security vulnerability is questioning the entire development pipeline and quality control. How it is possible that these massive gaps, these massive security vulnerabilities and code patterns weren’t picked up before. Weren’t they understood by the development teams, by the testers, by QA, by the clients?

Even worse, can’t the current NOC and log monitoring detect those when those vulnerabilities are initially probed and ultimately exploited?

We need to make this pollution visible.

I view the proposed risk model as a way to measure that pollution, and to measure the difference between app A and app B.

One positive side of using the pollution analogy, is that security doesn’t need to say no all time. Instead of saying “You can’t do XYZ”, AppSec says “If you want to go for feature A in a specific timeline, in a specific scope with a specific brief, then you will have this residual pollution, and these unintended consequences”.

You could also have a situation where security says, “Well, if you go in ‘that’ direction, currently we don’t know what are its consequences or security site-effects, so the business owner will have to accept the risk that there is an unknown set of risks which are also not clearly understood”.

The path of using pollution as an analogy is an evolution from the current status quo. At least with it we can measure and label different apps using common metrics. But ideally we want to create a clean code that doesn’t pollute

This is analogous to the evolution of pollution.

The first phase of industrial pollution saw pollution as a necessary side effect of progress, with some fines for the worse offenders (the ‘polluter pay’ model).

The second phase established the green movement where business had to behave decently, pollute less and be rewarded by the market.

The third phase is most interesting to this analogy, because in the third phase companies started to produce things in different ways, which not only created better products, but also dramatically reduced or eliminated pollution.

This is where we want to get to in application security. We want to get to the point where we create software that is better, faster, cheaper and secure (i.e. without pollution)

2.1.17 Triage issues before developers see them

Issues identified in vulnerability scans, code analysers, and penetration tests, must be triaged by the AppSec team to prevent overloading developers with duplicate issues or false positives. Once triaged, these issues can be pushed to issue trackers, and exposed to the developers.

Unfortunately, this is still an underdeveloped part of the AppSec pipeline. Even commercial vendors’ UI don’t really support the customisation and routing of the issues they ‘discover’.

The following tools are attempting to do just that:

- Bag of Holding https://github.com/aparsons/bag-of-holding

- Defect Dojo https://github.com/OWASP/django-DefectDojo

- ThreadFix

With these triage tools, AppSec specialists can identify false positives, accepted risks, or theoretical vulnerabilities that are not relevant in the context of the system. This ensures that developers should only have to deal with the things that need fixing.

Create JIRA workflow to triage raw issues

The creation of a security JIRA workflow for the raw issues to act as a triage tool, and push them to team boards after reviewing, would be another good example of the power of JIRA for workflows.

2.1.18 Using AppSec to measure quality

- add content from presentation AppSec and Software Quality

- academic research

2.1.19 Employ Graduates to Manage JIRA

One of the challenges of the JIRA RISK workflow is managing the open issues. This can be a considerable amount of work, especially when there are 200 or more issues to deal with.

In large organizations, the number of risks opened and managed should be above 500, which is not a large quantity. In fact, visibility into existing risks starts to increase, and improve, when there are more than 500 open issues.

The solution to the challenge of managing issues isn’t to have fewer issues.

The solution is to allocate resources, for example to graduates, or recently hired staff.

These are inexpensive professionals who want to develop their careers in AppSec, or they want to get a foot in the company’s door. Employing them to manage the open issues is a win-win situation, as they will learn a great deal on the job, and they will meet a lot of key people.

By directing graduate employees or new hires to manage the open issues, developers’ time is then free to fix the issues instead of maintaining JIRA.

The maintenance of issues is critical for the JIRA RISK workflow to work, because one of its key properties is that it is always up-to-date and it behaves as a ‘source of truth’.

It is vital that risks are accepted and followed up on and that issues are never moved into the developer’s backlog where they will be lost forever.

We can’t have security RISKs in backlog; issues must either be fixed or accepted.

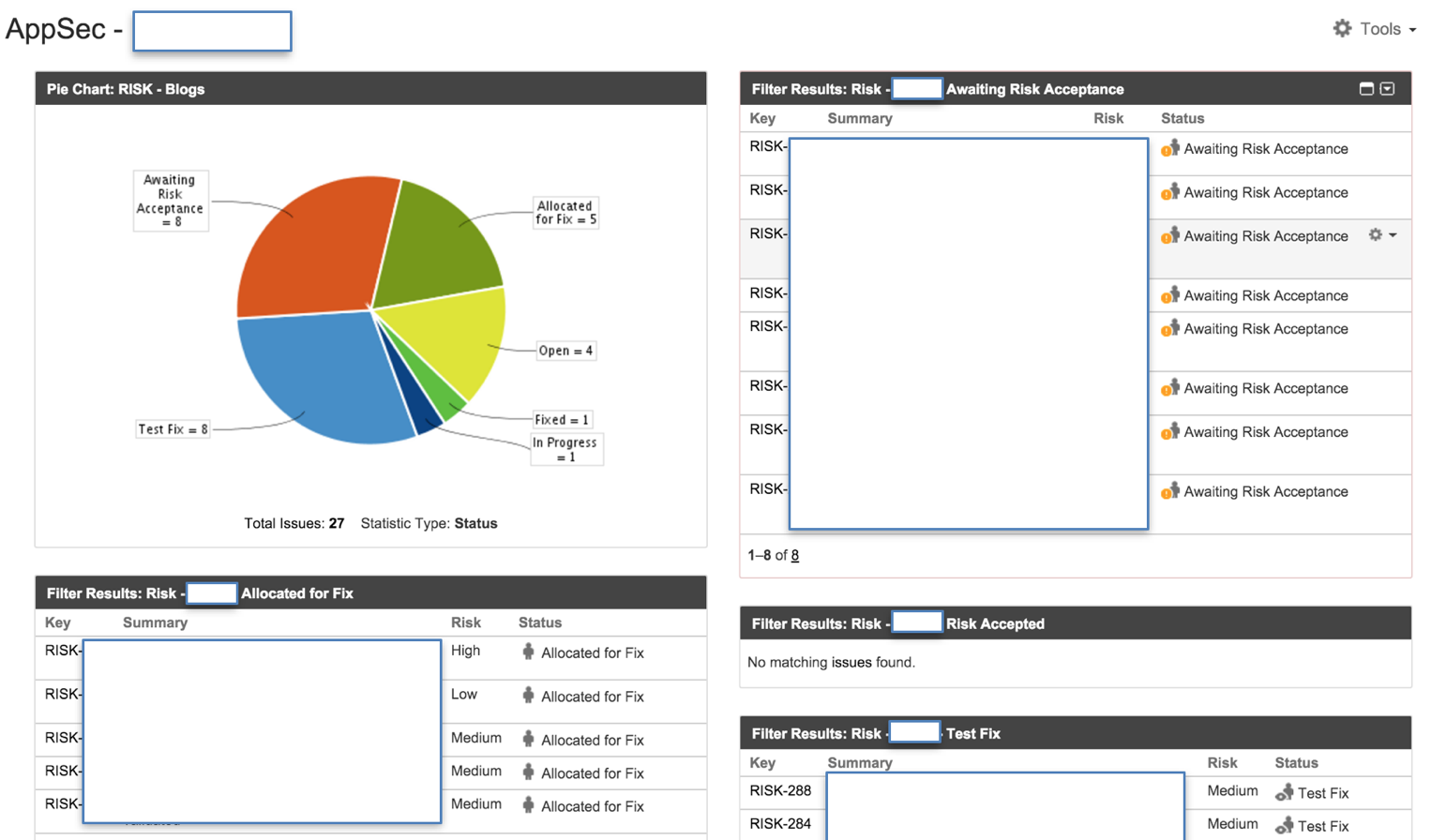

2.1.20 Risk Dashboards and emails

It is critical that you create a suite of management dashboards that map the existing security metrics and the status of RISK tickets:

- Open, In Progress

- Awaiting Risk Acceptance, Risk Accepted

- Risk Approved, Risk not Approved, Risk Expired

- Allocated for Fix, Fixing, Test Fix

- Fixed

Visualizing this data makes it real and creates powerful dashboards. The dashboards need to be provided to all players in the software development lifecycle.

You should create a sequence of dashboards that starts with the developer (who maps the issues that he is responsible for), then his technical architect, then the business owner, the product owner … all the way to the CTO and CISO.

Each dashboard clarifies which risks they are responsible for, and the status of application security for those applications/projects.

To reinforce ownership and make sure the issues/risks don’t ‘disappear’, use either the full dashboard, or specific tables/graphs, to create emails that are sent regularly to their respective owners.

The power of risk acceptance is that each layer accepts risk on behalf of the layer above. This means that the higher one is in the company structure, the more risks are allocated and accepted (all the way to the CISO and CTO).

The CISO plays a big part in this workflow, since it is his job to approve all ‘Risk Accepted’ issues (or raise exceptions that need to be approved by higher management).

This workflow drives many security activities because it motivates each player to act in his own best interests (which is to reduce the number of allocated Risk Accepted items).

The idea is to social-engineer the management layer into asking the developers to do the non-function-requirements tasks that the developers know are important, but seldom get the time to do.

2.2 For Developers

2.2.1 5000% code coverage

A big blind spot in development is the idea that 100% code coverage is ‘too much’.

100% or 99% code coverage isn’t your summit, your destination. 100% is base camp, the beginning of a journey that will allow you to do a variety of other tests and analysis.

The logic is that you use code coverage as an analysis tool, and to understand what a particular application, method, or code path is doing.

Code coverage allows you to answer code-related questions in much greater detail.

Let’s look at MVC Controller’s code coverage as an example. We must be certain that there is 100% code coverage on all exposed controllers. Usually, there are traditional ‘unit tests’ for those controllers which give the impression that we have a good coverage of their behavior. However, that level of coverage might not be good enough. You need the browser automation, or network http-based QA tests, to hit 100% of those controllers multiple times, from many different angles and with all sorts of payloads.

You need to know what happens in scenarios when only a couple of controllers are invoked in a particular sequence. You need to know how deep into the code we get, and what parts of the application are ‘touched’ by those requests or workflows.

This means that you don’t need 100% code coverage. Instead, you need 1000%, or 2000%, or even 5000% coverage. You need a huge amount of code coverage because ultimately, each method should be hit more than once, with each code-path invoked with multiple values/payloads.

In fact, the code coverage should ideally match the use cases, and every workflow that exists.

This brings us to another interesting question. If you take all client-accepted use cases which are invoked and simulated with tests, meaning that you have matched the expected ‘user contract’, (everything the user expects to happen when interacting with an application) if the coverage of the application is not at 100%, what else is there?

Maybe the executed tests only covered the happy paths?

Now let’s add the abuse and security use cases. What is the code coverage now?

If, instead of 100%, you are now at 70% coverage of a web application or a backend API, what is the rest of the code doing?

What code cannot currently be invoked using external requests? In most cases, you will find dead code or even worse, high-risk vulnerabilities that exist there, silently waiting to be invoked.

This is the key question to answer with tests: “Is there any part of the code that will not be triggered by external actions?”. We need to understand where those scenarios are, especially if we want to fix them. How can we fix something if we don’t even understand where it is, or we are unable to replicate the abuse cases?

2.2.2 Backlog Pit of Despair

In lots of development teams, especially in agile teams, the backlog has become a huge black hole of issues and features that get thrown into it and disappear. It is a mixed bag of things we might want to do in the future, so we store them in the backlog.

The job of the product backlog is to represent all the ideas that anyone in the application food chain has had about the product, the customer, and the sales team. The fact that these ideas are in the backlog means they aren’t priority tasks, but are still important enough that they are captured. Moving something into the backlog in this way, and identifying it as a future task, is a business decision.

However, you cannot use the backlog for non-functional requirements, especially the ones that have security implications. You have to have a separate project to hold and track those tickets, such as a Jira or a GitHub project.

Security issues or refactoring needs, still exist, regardless of whether the product owner wants to pursue them, whether they are a priority, or whether customers are asking for them.

Security and quality issues should either be in a fixed state, or in a risk acceptance state.

The difference is that quality and security tickets represents reality, whereas the backlog represents the ideas that could be developed in the future. That is why they have very different properties, and why you shouldn’t have quality and security tickets in the backlog Pit of Despair 5.

2.2.3 Developer Teams Need Budgets

Business needs to trust developer teams.

Business needs to trust that developers want to do their best for their projects, and for their company.

If business doesn’t learn to trust its developer teams, problems will emerge, productivity will be affected and quality/security will suffer.

A great way to show trust is to give the developer team a budget, and with it the power to spend money on things that will benefit the team.

This could include perks for developers such as conference attendance, buying books, or buying things which are normally a struggle to obtain. It is often the case that companies will purchase items for a team when requested, but first the team must struggle to overcome the company’s apathy to investing in the developer team. The balance of power never lies with the team, and that imbalance makes it hard to ask for items to be purchased. Inconsistencies are also a problem: sometimes it can be easier to ask for £5,000, or for £50,000, than it is to ask for £50 .

Companies need to treat developer teams as the adults they are, and they need to trust them. My experience in all aspects of organizations is that it is difficult to spend money.

When you spend money, especially in an open-ended way, your expenditure is recorded and it becomes official. If your investment doesn’t yield good results for the company, you will be held accountable.

Therefore, allocating a budget to the developer teams will keep the teams honest, and will direct their focus to productive investments. Purchases could range from buying some tools for the developers, to buying a trip, or even to outsourcing some work to a freelancer.

Spending on the operational expenses for the team will yield benefits both to developer teams and to business.

2.2.4 Developers should be able to fire their managers

Many problems developer teams deal with arise from the inverted power structure of their working environment. The idea persists that the person managing the developers is the one who is ultimately in charge, responsible, and accountable.

That idea is wrong, because sometimes the person best-equipped to make the key technological decisions, and the difficult decisions, is the developer, who works hands-on, writing and reading the code to make sure that everything is correct.

A benefit of the ‘Accept Risk’ workflow, is that it pushes the responsibility to the ones that really matter. I’ve seen cases when upper-layers of management realise that they are not the ones that should be accepting that particular risk, since they are not the ones deciding on it. In theses cases usualy the decision comes down to the developers, who should use the opportunity to gain a bigger mandate to make the best decisions for the project.

Sometimes, a perverse situation occurs where the managers are no longer coding. They may have been promoted in the past because they used to be great programmers, or for other reasons, but now they are out of touch with programming and they no longer understand how it works.

Their job is to make the developers more productive. They work in customer liaison, they manage the team and its results, they organise, review, handle business requirements and expectations, and make sure everything runs smoothly. That is the job of the manager, and that manager also acts as the voice of the developer team.

This situation promotes inefficiencies and makes the managers more difficult to work with. They don’t want to share information, but they do want to take ownership of developers’ work or ideas that they didn’t have themselves. This environment gets very political very fast, and productivity is effected.

The manager I describe above should ideally be defending the developer team, and should act like an agent for that team. Logically, a developer, or a group of developers, should therefore have the power to nominate, appoint, and sack the manager if necessary.

The developers should hold the balance of power.

Developers should also be able to take decisions on pay, perks, and budgets. Business should treat the developer teams as the grownups they are, because the developer teams are ultimately accountable for what is created within the company.

2.2.5 Every Bug is an Opportunity

The power of having a very high degree of code coverage (97%+) is that you have a system where making changes is easy.

The tests are easy to fix, and you don’t have an asymmetric code fixing problem, where a small change of code gives you a nightmare of test changes, or vice versa.

Instead, you get a very interesting flow where every bug, every security issue, or every code change is an opportunity to check the validity of your tests. Every time you make a code change, you want the tests to break. In fact you should worry if the tests don’t break when you make code changes.

You should also worry if the tests break at a different location than expected. This means that the change you made is not having the desired effect. Either your tests are wrong, or you don’t have the right coverage, because you expected the test to break in one location but it broke somewhere else, maybe even on a completely different test that happened to pass that particular path.

To enforce the quality of your test, especially when you have a high degree of coverage, use every single bug fix, and every single code change as opportunities to confirm that your tests are still effective.

Every code change gives the opportunity to make sure that the understanding of what should change is what did change. Every bug allows you to ask the questions, “Can I replicate this bug? Can I correctly identify all that is needed to replicate the creation, or the expectation of this bug?”

2.2.6 Every project starts with 100% code coverage

Every application starts with 100% code coverage because by definition, when you start coding, you cover all the code.

You must ensure that you have a very high degree of code coverage right from the beginning. Because if you don’t, as you add code, it is easier to let code coverage slip, and then you realise that large chunks of your code base are not tested (any code that is changed without being tested, is just a random change).

Reasons why code coverage slips:

- code changes are not executed using tests

- code coverage is not being used as a debug tool

- code coverage is not shown in the IDE in real-time

- demo or go-live deadline

- mocks and interfaces are used to isolate changes (ironic side effect)

- certain parts of the application are hard to test

- weak testing technology and workflows

- tests are written by separate teams (for example separate dev and QA teams)

- lack of experience by developers, testers and management on the value and power of 100% code coverage

All of these are symptoms of insecure development practices, and will have negative side effects in the medium term.

It is very important to get back to 100% code coverage as soon as possible. This usually will require significant efforts in improving the testing technology, which is usually where the problems start (i.e. lack of management support for this effort)

Some of unmanaged languages, or even managed languages (like Java with the way exceptions are handled), have difficulties in measuring certain code paths. There is nothing wrong in changing coding patterns or conventions to improve an tool’s capabilities, in this case the code coverage technology’s ability to detect code execution paths.

It is very important that the development team has the discipline and habit of always keeping the application on 100% code coverage. Having the right tools and worlflows will make a massive difference (for example Wallaby for Javascript or NCunch for .NET)

For the teams that are really heavy into visualisation and measuring everything, it is very powerful to use dashboards like the ones created by Kibana to track code coverage in real-time (i.e. see test’s execution as they occur in multiple target environments: dev, qa, pre-prod and prod).

2.2.7 Feedback Loops

The key to DevOps is feedback loops. The most effective and powerful DevOps environments are environments where feedback loops, monitoring, and visualizations are not second-class citizens. The faster you release, the more you need to understand what is happening.

DevOps is not a silver bullet, and in fact anyone saying so is not to be trusted. DevOps are a profound transformation of how you build and develop software.

DevOps are all about small interactions, small development cycles, and making sure that you never make big changes at any given moment. The feedback loop is crucial to this because it enhances your understanding and allows you to react to situations very quickly.

The only way you can react quickly is if you have programmatically codified your infrastructure, your deployment, and your tests. What you want is automation of your understanding, and of your tests. The feedback loops you get make this possible.

The success stories of DevOps are all about the companies who moved from waterfall style, top-down development, to a more agile way of doing things. The success of DevOps has also allowed teams that were agile but weren’t working very well, to ship faster.

I like the idea of pushing as fast as possible to production. It is essential to have the two pipelines I mention elsewhere in the book, where, if you codify with specific patterns that you know, you go as fast as possible. If you add changes that introduce new patterns or change the attack surface, you must have a separate pipeline that triggers manual or automated reviews.

The feedback loops allow you to understand this. The feedback loops allow you to understand how to modify your attack surface, how to change how your application behaves, and how to change key parts of how everything works.

2.2.8 Make managers accountable

Managers need to be held accountable for the decisions they make, especially when those decisions have implications for quality and security. There are several benefits to increased accountability. Organisations will be encouraged to accept risk, and, before they click on the ‘Accept Risk’ button, managers will be compelled to ‘read’ the ticket information. However, the introduction and development of a more accountable management structure is a long game, to be played out over many months, or even years. Sometimes, it requires a change in management to create the right dynamic environment.

Usually, a new manager will not be too enthusiastic when he sees the risks that are currently accepted by a business and are now his responsibility. He knows he has a limited window, of a few months perhaps, where he can blame his predecessor for any problems. After that time has passed, he ‘owns’ the risk, and has accepted it himself.

This workflow creates historical records of decisions, which supports and reinforces a culture of accountability.

In agile environments, the Product Owner is responsible for making the right decisions.

2.2.9 Risk lifecycle

Here are the multiple stages of Risks:

1 digraph {

2 size ="3.4"

3 node [shape=underline]

4

5 "Risk" -> "Test (reprod\

6 uces issue)" [label = "write test"]

7 "Test (reproduces issue)" -> "Risk Accept\

8 ed" [label = "accepts risk"]

9 "Test (reproduces issue)" -> "Fixing" \

10 [label = "allocated for fix"] \

11

12 "Test (reproduces issue)" -> "Regression \

13 Tests" [label = "fixed"]

14 }

It is key that a test that replicates the issue reported is created.

The tests result for each of the bottom layers mean different things:

- Risk Accepted : Issues that will NOT be fixed and represent the current accepted Risk profile of the target application or company

- Fixing: Issues that are currently being actively addressed (still real Risks)

- Regression Tests: Past issues that are now being tested to confirm that they no longer exist. Some of these tests should run against production.

2.2.10 Risk profile of Frameworks

Many frameworks work as a wrapper around a ‘raw’ language and have built in mechanisms to prevent vulnerabilities.

Examples are:

- Rails for Ruby

- Django for Python

- AngularJS, Ember, ReactJS for javascript

- Razor for .Net

Using these frameworks can help less experienced developers and act as a ‘secure by default’ mechanism.

This means that when using the ‘secure defaults’ of these frameworks, there will be less Risk tickets created. Ideally Frameworks will make it really easy to write secure code, and really hard (and visible) to write dangerous/insecure code (for example how AngularJS handles raw HTML injection from controllers to views).

This can backfire when Frameworks perform complex and dangerous operations ‘automagically’. In those cases it is common for the developers to not really understand what is going on under the hood, and high risk vulnerabilities will be created (sometimes even using the code based on the Framework’s own code samples)

- Add case-studies on issues created by ‘normal’ framework usage:

- SpringMVC Auto-Binding (also called over-posting)

- Razor controls vulnerable to XSS on un-expected locations (like label)

- Ember SafeHtml

- OpenAM SQL Query Injection on code sample (and see in live app)

- Android query SQL Injection (on some parameters)

2.2.11 Security makes you a Better Developer

When you look at the development world from a security angle, you learn very quickly that you need to look deeper than a developer normally does. You need to understand how things occur, how the black magic works, and how things happen ‘under the hood’. This makes you a better developer.

Studying in detail allows you to learn in an accelerated way. In a way, your brain does not learn well when it observes behavior, but not cause. If you are only dealing with behavior, you don’t learn why something is happening, or the root causes of certain choices that were made in the app or the framework.

Security requires and encourages you to look deeper, to find ways to learn the technology, to understand how the technology works, and how to test it. I have a very strong testing background because I spend so much time trying to replicate things, to make things work, to connect A to B, and to manipulate data between A and B.

What interests me is that when I return to a development environment, I realize that I have a bigger bag of tricks at my disposal. I always find that I have greater breadth, and depth, of understanding of technology than a lot of developers.

I might not be the best developer at some algorithms, but I often have a better toolkit and a more creative way of solving problems than more intelligent and more creative developers. The nature of their work means that their frames of reference are narrower.

Referencing is important in programming because often, once you know something is possible, you can easily carry out the task. But when you don’t know if something is possible, you must consider how you investigate the possibilities, how long your research will take, and how long it might be before you know if something will succeed or not. Whereas, if you know something is possible, you know that it is X hours away and you can see its evolution, or if it is a lost cause.

Having those references makes a big difference because when you look at a problem it is important to know which rabbit holes you can follow and which ways will create good and sound solutions and which ones don’t. This is especially important when we talk about testing the app: there tends to be a lot of interesting lateral thinking and creative thinking required to come up with innovative solutions to test specific things.

My argument is that when you do application security, you become a better developer. Your toolkit expands, your mind opens, and you learn a lot. In the last few months alone, I had to learn and review different languages and frameworks such as Golang, Spark, Camel, Objective C, Swift and OpenIDM.

After a while learning new systems becomes easier, and the ability to make connections makes you a better developer because you are more skilled at observing concepts and technologies.

2.2.12 Test lifecycle

- explain test flow from replicating the bug all the way to regression tests

1 digraph {

2 size ="3.4"

3 node [shape=box]

4 "Bug"-> "Test Reproduces Bug"

5 -> "Root cause analysis"

6 -> "Fix"

7 -> "Test is now Regression test"

8 -> "Release"

9 }

2.2.13 The Authentication micro-service cache incident

A good example of why we need tests across the board, not just normal unit tests, but integration tests, and tests that are spawned as wide as possible, is the story of a authentication module that was developed as an re-factoring into a separate micro-service.

When the module was developed, it contained a high degree of code coverage, in fact it had 100% unit test coverage. The problems arose when it went live, and several issues occurred. One of the original issues occurred because the new system was designed to improve the way the database or the passwords were stored. This meant that once it was fully deployed some of existing dependent services stopped working.

For example, one of the web services used by customer service stopped working. Suddenly, they couldn’t reset passwords, and the APIs were no longer available. Because end-to-end integration tests weren’t carried out when the website started, some of the customer service failed, which had real business impact.

But the worst-case example occurred when a proxy was used in one of the systems, and the proxy cached the answer from the authentication service. In that website, anybody could log in with any password because the cache was caching the ‘yes you are logged in’.

This illustrates the kind of resilience you want these authentication systems to have. You want a situation where, when you connect to a web service, you don’t just get, for example, a 200 which means okay, you should get the equivalent of an cross-site-request-forgery token. Ideally you would get an transaction token in the response received, i.e. “authentication is ok for this XYZ transaction, this user and here is an user object with user details”.

(todo: add example of 2fA exploit using Url Injection)

When you make a call to a web service and ask for a decision, and the only response you get is yes or no, this is quite dangerous. You should get a lot more information.

The fundamental problem here is the lack of proper end-to-end testing. It is a QA and development problem. It is the fact that in this environment you cannot spin off the multiple components. If you want to test the end-to-end user log in a journey, you should be able to spin off every single system that uses that functionality at any given time (which is what DevOps should also be providing).

If that had been done from the moment the authentication module was available, these problems would have been identified months in advance, and several incidents, security problems, and security vulnerabilities would have been prevented from occurring.

2.2.14 Using Git as a Backup Strategy

When you code, you inevitably go on different tangents. Git allows you to keep track of all those tangents, and it allows you to record and save your progress.

In the past, we used to code for long periods of time and commit everything at the end. The problem with this approach is that sometimes you follow a path to which you might want to return, or you might follow a path that turns out to be inefficient. If you commit both early and often, you can keep track of all such changes. This is a more efficient way of programming.

Of course, before you make the final commit, and before you send the push to the main repository, you should clean up and ensure the code has a certain degree of quality and testing.

I find that every time I code, even for a short while, my instinct is to write a commit, and keep track of my work. Even if I add only a part of the code, or use the staging capabilities to do some selective commits, I find that Git gives me a much cleaner workflow. I can be confident in my changes from start to end, and I rarely lose any code or any snippet with which I was happy.

2.2.15 Creating better briefs

Developers should use the JIRA workflow to get better briefs and project plans from management. Threat Models are also a key part of this strategy.

Developers seldom find the time to fulfil the non-functional requirements of their briefs. The JIRA workflow gives developers this time.

The JIRA workflow can help developers to solve many problems they have in their own development workflow (and SDL).

2.3 For management

2.3.1 Annual Reports should contain a section on InfoSec

Annual reports should include sections on InfoSec and AppSec, which should list their respective activities, and provide very detailed information on what is going on.

Most companies have Intel dashboards of vulnerabilities, which measure and map risk within the company. Companies should publish that data, because only when it is visible can you make the market work and reward companies. Obliging companies to publish security data will make them understand the need to invest, and the consequences of the pollution that happens when you have rented projects with crazy deadlines and inadequate resources, but somehow manage to deliver.

The ability to deliver in such chaotic circumstances is often due to the heroic efforts of developers, who work extremely hard to deliver high quality projects, but get rewarded only by being pushed even more by management. This results in extraordinary vulnerabilities and risks being created and bought by the company. Of course, the company doesn’t realize this until the vulnerabilities are exploited.

In agile environments, it’s important to provide relative numbers such as:

- Risk issues vs velocity

- Risk issues vs story points

By analyzing these numbers over time, the tipping point, where quality and security are no longer in focus, can become painfully clear.

2.3.2 Cloud Security

One way in which cloud security differs from previous generations of security efforts, such as software security and website security, is that in the past, both software and website security were almost business disablers. The more features and the more security people added, the less attractive the product became. There are very few applications and websites that make the client need more security to do more business, which results in the best return on investment.

What’s interesting about cloud security is that it might be one of the occasions where security is a business requirement, because a lack of security would slow down the adoption rate and prevent people from moving into the cloud. Accordingly, people care about cloud security, and they invest in it.

While thinking about this I realized that the problem with security vulnerabilities in the cloud is that any compromise doesn’t just affect one company, it affects all the companies hosted in the cloud. It’s a much bigger problem than the traditional scenario, where security incidents resulted in one company being affected, and the people in that company worked to resolve the problem. When an incident happens in the cloud, the companies or assets affected are not under the control of their owners. The people hosting them must now manage all these external parties, who don’t have any control over their data, but who can’t work because their service is down or compromised. The problem is horizontal; a Company A-driven attack will affect Companies B, C, D, E, F, all the way to X, Y, Z. As a result, it’s a much tougher problem to solve.

While catastrophic failures are tolerated in normal websites and applications, cloud-based worlds are much less tolerant. A catastrophic failure, where everything fails or all the data is compromised or removed, means the potential loss of that business. That hasn’t happened yet, but doubtless it will happen. Cloud service companies will then have to show that they care more about security than the people who own the data.

Cloud providers care more than you

One of the concepts that Bruce Schneier talked about at the OWASP IBWAS conference in Spain is that a cloud service cares more about the security of their customers than the customer does. This makes sense since their risk is enormous.

In the future, cloud companies will be required to demonstrate this important concept. They should be able to say, “Look, I have better systems in place than you, so you can trust me with your data. I can manage more data for you than you can”. This will be akin to the regulatory compliance that handles data in the outside world.

One way to do this is with publishing of RISK data and dashboards (see OWASP Security Labelling System Project).

You can imagine a credit card company wanting or needing to demonstrate this. But you can also see the value of it to the medical industry, or any kind of industry that holds personal information. If a company can’t provide this type of service it must outsource the service, but to be able to do that the security industry must become more transparent. We need a lot more maturity in our industry, because companies need to show that they have adequate security controls. It is not sufficient to be declared compliant by somebody who goes for the lowest common denominator, gets paid, and tells the company it’s compliant.

Genuinely enhanced visibility of what’s going on will allow people to measure what’s happening, and then make decisions based on that information. The proof of the pudding will not be how many vulnerabilities the cloud companies have, but how they recover from incidents.

The better a company can sustain an attack, the better that company can protect data. A company who says, “I received x, y, z number of attacks and I was able to sustain them and protect them this way” is more trustworthy than the company that is completely opaque. The key to making this work is to create either technology, or standards of process, that increase the visibility of what is going on.

2.3.3 Code Confidence Index

Most teams don’t have confidence in their own code, in the code that they use, in the third parties, or the soup of dependencies that they have on the application. This is a problem, because the less confidence you have in your code, the less likely you are to want to make changes to that code. The more you hesitate to touch it, the slower your changes, your re-factoring, and your securing of the code will be.

To address this issue, we need to find ways to measure the confidence of code, in a kind of Code Confidence Index (COI).

If we can identify the factors that allow us to measure code confidence, we would be able to see which teams are confident in their own or other code, and which teams aren’t.

Ultimately, the logic should be that the teams with high levels of Code Confidence are the teams who make will be making better software. Their re-factoring is better, and they ship faster.

2.3.4 Feedback loops are key

A common error occurs when the root cause of newly discovered issues or exploits receives insufficient energy and attention from the right people.

Initially, operational monitoring or incident response teams identify new incidents. They send the incidents are to the security department, and after some analysis the development teams receive them as tickets. The development teams receive no information about the original incident, and are therefore unable to frame the request in the right perspective. This can lead to suboptimal fixes with undesired side effects.

It is beneficial to include development teams in the root cause analysis from the start, to ensure the best solutions can be identified.

2.3.5 Getting Assurance and Trust from Application Security Tests

When you write an application security test, you ask a question. Sometimes the tests you do don’t work, but the tests that fail are as important as the tests that succeed. Firstly, they tell you that something isn’t there today so you can check it for the future. Secondly, they tell you the coverage of what you do.

These tests must pass, because they confirm that something is impossible. If you do a SQL injection test, in a particular page or field, or if you do an authorization test, and it doesn’t work, you must capture that.

If you try something, and a particular vulnerability or exploit isn’t working, the fact that it doesn’t work is a feature. The fact that it isn’t exploitable today is something that you want to keep testing. Your test confirms that you are not vulnerable to that and that is a powerful piece of information.

You should have tests for both the things you know are problems, and the things you know are not problems. Such tests give you confidence that you have tested your application for certain things.

Be sure that you have enough coverage of those tests. You also want to relate this to code coverage and to functionality, because you want to make sure that there is direct alignment between what is possible on the application and what is invoked by the test they should match.

The objective is to have much a stronger assurance of what is happening in the application, and to detect future vulnerabilities (created in locations that were not vulnerable at the time of the last security assessment).

2.3.6 I don’t know the security status of a website

Lack of data should affect decision-making about application security.

Recently, I looked at a very interesting company that provides VISA compatible debit-card for kids, which allows kids to get a card whose budget can be controlled online by their parents. There is even a way to invest in the company online via a crowdfunding scheme.

I looked at this company as a knowledgeable person, able to process security information and highly technical information about the application security of any web service. But I was not able to make any informed security decision about whether this service is safe for my kids. I couldn’t understand the company’s level of security because they don’t have to publish it and, therefore, I don’t have access to that data.

As a result, I must take everything on the company’s website at face value. And because there is no requirement to publish any real information about their product, the information given is shaped by the company’s marketing strategy. I have no objective way of measuring whether the company has good security across their SDL, has good SecDevOps capabilities, if are there are any known security risks I should be aware, or more importantly, if my kids’ data is protected and secure.

This means that my friends who recommended that service to me are even worse off than I am. They are not security savvy users and they can only rely on the limited information given on the company’s website.

If there are three or four competing services at any moment in time, they will not be able to compete on the security of their product. It is not good enough if a company only invests in security in case security becomes a problem, or causes embarrassment in the future. It is like saying, “Oh, let’s not pollute our environment because we might get caught”.

In business today, security issues are directly related to quality issues. Application security could be used to gain a good understanding of what is going on in the company, and whether it is a good company to invest in, or a good company to use as a consumer.

This approach could scale. If I found problems, or if data was open, I could publish my analysis, others could consume it, and this would result in a much more peer-reviewed workflow for companies.

This reflects my first point: if I can’t understand a company’s level of security because they don’t have to publish it, this should change. And if it does, it will change the market.

Having a responsible disclosure program or public bug bounty program is also a strong indicator of quality and security.

In fact, a company that doesn’t have a public bug bounty program is telling the world that they don’t have an AppSec team.

2.3.7 Inaction is a risk

Lacking the time to perform ‘root cause analysis’, or not understanding what caused a problem, are valid risks in themselves.

It is key that these risk are accepted

This is what makes them ‘real’, and what will motivate the business owner to allocate resources in the future. Specially when a similar problem occur.

2.3.8 Insurance Driven Security

- insurance companies are starting to operate in the AppSec work

- they will need objective way to measure RISK

- workflows like this one could provide that

2.3.9 Is the Decision Hyperlinked?

I regularly hear the following statements: “The manager knows about it”, “I told you about this in the meeting”, “Everyone is aware of this”, and so on. However, if a decision is not in a hyperlinkable location, then the decision doesn’t exist. It is vital that you capture decisions, because without a very clear track of them, you cannot learn from experience.

Capturing decisions is most important for the longer term. When you deal with your second and third situations, you start building the credibility to say, “We did this in the past, it didn’t work then, and here are the consequences. Here is the real cost of that particular decision, so let’s not repeat this mistake”.

It is essential to do postmortems and understand the real cost of decisions. If a comment is made along the lines of, “Oh, we don’t have time to do this now because we have a deadline”, after a huge amount of manpower and time has been spent fixing the problem, you need to be able to say, “Was that decision the correct one? Let’s now learn from that, and really quantify what we are talking about”.

Completing this exercise will give you the knowledge to say the next time, “We need a week, or two weeks, or a month to do this”. Or you could say, “Last time we didn’t do this and we lost six months”. So, it is key not only to capture the decisions, but also to ensure you do a very good postmortem analysis of what happens after risks are accepted.

When there are negative consequences because of a bad decision, such as security issues or problems of technical debt, it is important that the consequences are hyperlinked back to the original issue for future reference.

In a way, the original issues are the foundations of the business case for why a problem occurred, and why you don’t want to repeat the problem in the future.

2.3.10 Measuring companies’ AppSec

Reviewing a company’s technology allows us to understand its future. As more and more companies depend on their technology, and as more investment is directly connected to software developed by companies, there is a need for companies to provide independent analysis of their technology stacks.

Typically, this is something an analyst does, where they should be measuring both the maturity of the company’s software development, and the maturity of its environment. The analyst should use public information to compile their evidence, and they should then use this evidence to support their data. Having good evidence means having explicit data on the record which clearly supports the analysis.

The power of the analyst is that they can take the time, and have the ability to create peer-reviewed, easy-to-measure, easy-to-understand analysis of companies.

Let’s look at investments, as it is now easier and easier for people to invest in companies. If your idea is to invest in technology at the earliest stages of a company’s growth, you want to be really sure it is going in the right direction. You should be able to get good metrics of what is going on, and you need a good understanding of what is happening inside these companies. Questions to ask include the following:

- Are they using an old technology stack?

- How much are they really investing in it?

- How much are they really busking the whole thing? (because you know that is something that can easily happen, where marketing paints a very different rosy picture of reality)

- Are they having have scalability problems caused by past architectural decisions?

- Are they able to refactor the code and keep it clean/lean and focused on the target domain models?

If you have a company which is growing very fast, it makes a massive difference if the company is on a scalable platform or not.

In the past, companies could afford to let their site ‘blow up’ and then address problems after they occurred. That is no longer possible, due to the side effects, and particularly the security side effects, of any crashes or instability. Today, the more successful a company becomes, the more attackers will focus on it. If there are serious security vulnerabilities, they will be exploited faster than the company can address them, and this will affect real users and real money. We can see this happening with cars and the IoT (Internet of Things).

There is still a huge amount of cost in moving away from products/technologies, which is I call the ‘lock-in index’, and is whatever ‘locks’ the customer to the software.

The idea is that investors and users should have a good measure of how ‘locked-in’ a company is, and the technological quality of the application/service they are about to invest into.

2.3.11 OWASP Top 10 Risk methodology

- add details about Risk methodology that in included with the OWASP Top 10

- mention other Risks methodologies

- applying OWASP ASVS allows for a more risk based approach

- OwaspSAMM and BSIMM can measure the maturity level of an organisation

2.3.12 Relationship with existing standards

It is important have a good understanding of how a company’s Risk profile maps to existing security standards alike PCI DSS, HIPAA, and others.

Most companies will fail these standards when their existing ‘real’ RISKs are correctly identified and mapped. This explains the difference between being ‘compliant’ and being ‘secure’.

Increasingly, external regulatory bodies and laws require some level of proof that companies are implementing security controls.

To prevent unnecessary delays or fines, these requirements should be embedded in the process in the form of regular scans, embedded controls (e.g. in the default infrastructure), or in user stories.

For example, in DevOps environments all requirements related to OS hardening should be present in the default container. Development teams should not have to think about them.

2.3.13 Responsible disclosure

Having a responsible disclosure process and/or participating in programs like HackerOne or Bugcrowd have several advantages:

- it shows a level of confidence to the customers

- it provides additional testing cycles

- it sends a message that they have an AppSec program

2.3.13.1 JIRA workflow for bug bounty’s submissions

- add diagram (from XYZ)

- real world example of using an JIRA workflow to manage submissions received

2.3.13.2 Bug bounties can backfire

http://www.forbes.com/sites/thomasbrewster/2016/07/13/fiat-chrysler-small-bug-bounty-for-hackers/#58cc01f4606f

2.3.14 Third party components and outsourcing

- these dependencies need to be managed

- lots of control lost to 3rd parties

- open source and close source are very similar

- at least with open source there is the chance to look at the code (doesn’t mean that somebody will)

- companies need developers that are core contributors of FOSS

- outsource code tends to have lots of ‘pollution’ and RISKs that are not mapped (until it is too late)

- liability and accountability of issues is a big problem

- see OWASP Legal project for an idea of AppSec clauses to include in outsourcing contracts.

- copyright infringes will become more of a problem in the future; this can be hard to identify in 3rd party components

2.3.14.1 Tools to help manage dependencies

- OWASP Dependency checker can identify vulnerable components in use

- several commercial tools exist that can identify copyright infringements in components

- Veracode has it

- Sourceclear

- Sonar (check which version)

- nsp node security

- … add more (see 13 tools for checking the security risk of open-source dependencies)

2.3.15 Understand Every Project’s Risks

It is essential that every developer and manager know what risk game they are playing. To fully know the risks, you must learn the answers to the following questions:

- what is the worst-case scenario for the application?

- what are you defending, and from whom?

- what is your incident response plan?

Always take advantage of cases when you are not under attack, and you have some time to address these issues.

2.3.16 Using logs to detect risks exploitation

Are your logs and dashboards good enough to tell you what is going on? You should know when new and existing vulnerabilities are discovered or exploited. However, this is a difficult problem that requires serious resources and technology.

It is crucial that you can at least detect known risks without difficulty. Being able to detect known risks is one reason to create a suite of tests that can run against live servers. Not only will those tests confirm the status of those issues across the multiple environment, they will provide the NOC (Network Operations Centre) with case studies of what they should be detecting.

2.3.16.1 Beware of the security myth

Often, no special software or expertise is needed to identify basic, potential, bad behavior. Usually, companies already have all the tools and technology they need in-house. The problem is making those tools work in the company’s reality. For example, if someone accesses 20 non-existing pages per second for several minutes, it is most likely they are brute-forcing the application. You can easily identify this by monitoring for 404 and 403 errors per IP address over time.

2.3.17 Using Tests to Communicate

Teams should talk to each other using tests. Teams usually have real problems communicating efficiently with each other. You might find an email, a half-baked bug-tracking issue opened, a few paragraphs, a screen shot, and if you are lucky, a recorded screencast movie.

This is a horrible way to communicate. The information isn’t reusable, the context isn’t immediately clear, you can’t scale, you can’t expand on the topic, and you can’t run it automatically to know if the problem is still there or not. This is a highly inefficient way to communicate.

The best way for teams to communicate is by using Tests.

Both within and across teams; top-down and bottom-up, from managers and testers to teams.

Tests should become the lingua franca of companies. Their main means of communication.

This has tremendous advantages, since in order for it to work, it requires really very good test APIs, and very good execution environments.

One must have an easy-to-develop, easy-to-install, and easy-to-access development environment in place, something that very rarely occurs.

By making tests the principal way teams communicate, you create an environment that not only rewards good APIs, it rewards good solutions for testing the application. Another advantage is that you can measure how many tests have been written across teams and you can review the quality of the tests.

Not only is this a major advantage to a company, it is also a spectacular way to review changes. If you send a test that says, “Hey! I would like XYZ to occur”, or “Here is a problem”, then the best way to review the quality of the change is to review the test that was modified in order to solve the problem.