Bash Shell

Programming is an applied skill. If you want to learn it, you should choose a programming language and solve tasks. This is the only way to get practical skills.

We use the Bash language in this book. This language is convenient for automating computer administration tasks. Here are a few examples of what you can do with Bash:

- Create data backups.

- Manipulate directories and files.

- Run programs and transfer data between them.

Bash was developed in the Unix environment. Therefore, it bears the imprint of the Unix philosophy. Despite its roots, you can also use Bash on Windows and macOS.

Development Tools

You need a Bash interpreter and a terminal emulator to run the examples in this chapter. You can install them on all modern operating systems. Let’s take a look at how to do this.

Bash Interpreter

Bash is a script programming language. It has the following features:

- It is an interpreted language.

- It operates on existing programs or high-level commands.

- You can use it as a shell to access the OS functions.

If you use Linux or macOS, you already have a preinstalled Bash interpreter. If your OS is Windows, you need both a Bash interpreter and a POSIX-compatible environment. Bash needs this environment to work correctly. There are two ways to install it.

The first option is to install the MinGW toolkit. It contains the GNU compiler collection in addition to Bash. If you do not need all MinGW features, you can install Minimal SYStem (MSYS) instead. MSYS is the MinGW component that includes Bash, a terminal emulator and GNU utilities. These three things make up a minimal Unix environment.

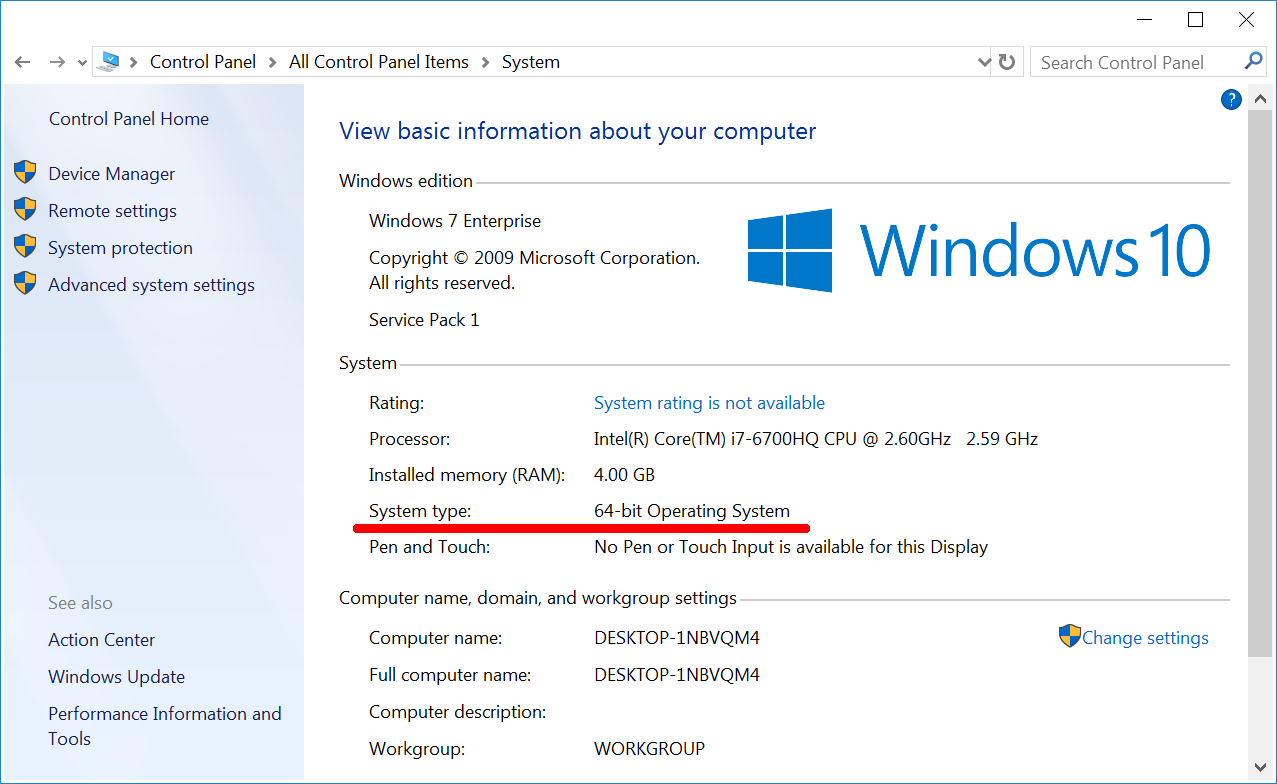

It is always good to check whether your Windows is 32-bit or 64-bit (its bitness) before installing any software. Here are the steps to find it out:

- If you have a “Computer” icon on your desktop, right-click on it and select the “Properties” item.

- If there is no “Computer” icon on your desktop, click the “Start” button. Find the “Computer” item in the menu. Right-click on it and select “Properties”.

- You have opened the “System” window. Locate the “System Type” item there as Figure 2-1 demonstrates. This item shows you the bitness of Windows.

We are going to install the modern MSYS version called MSYS2. Download its installer from the official website. You should choose the installer version that fits the bitness of your Windows.

Now we have everything to install MSYS2. Follow these steps for doing it:

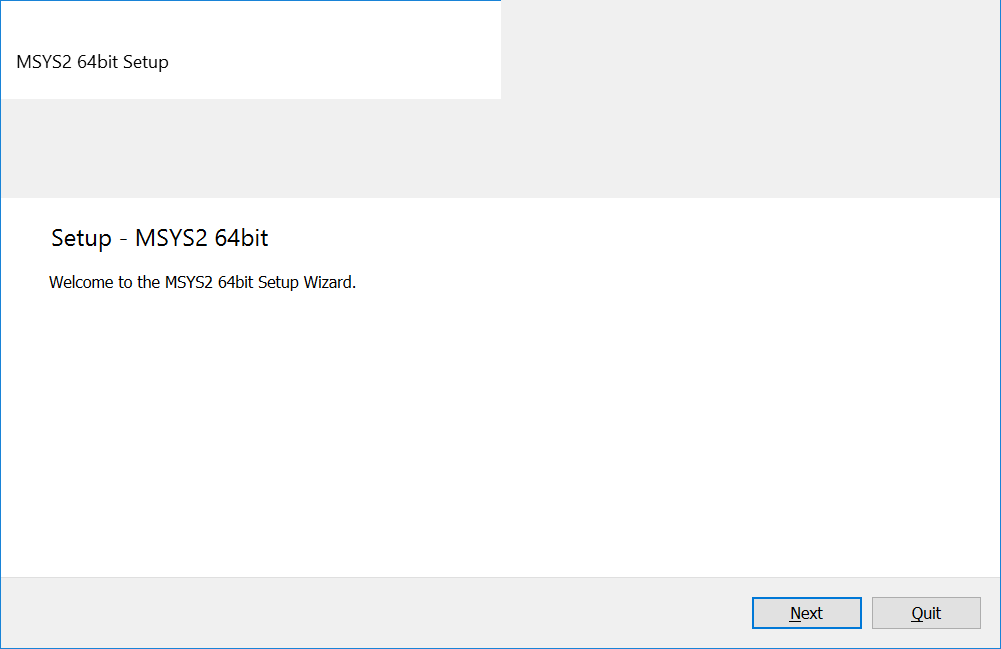

- Run the installer file. You will see the window that Figure 2-2 shows.

- Click the “Next” button.

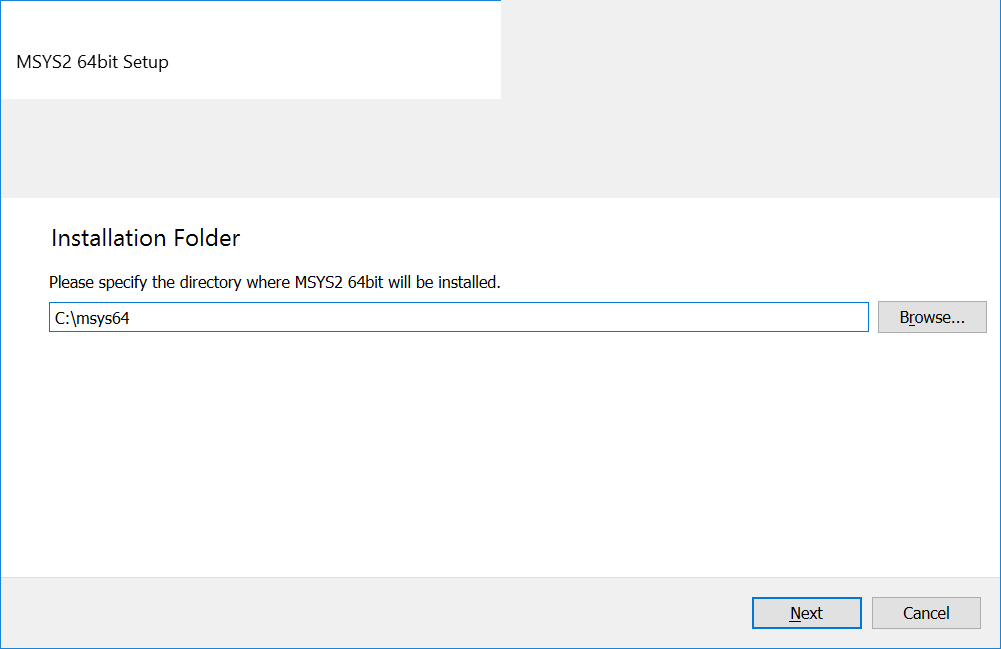

- You see a new window, as Figure 2-3 shows. Specify the installation path there and press the “Next” button.

- The next window asks you to choose the application name for the Windows “Start” menu. Leave it unchanged and click the “Next” button. Then the installation process starts.

- When the installation finishes, click the “Finish” button. It closes the installer window.

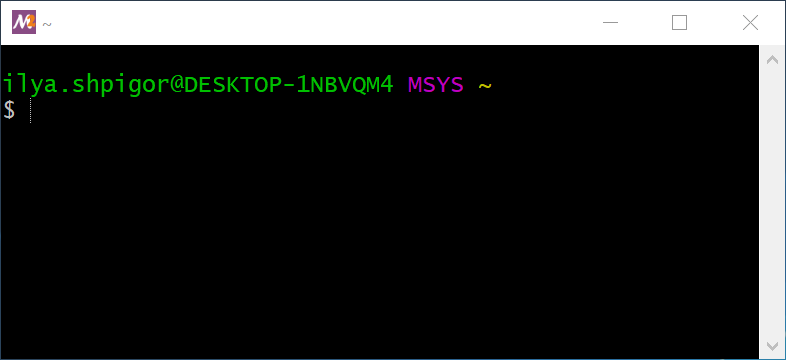

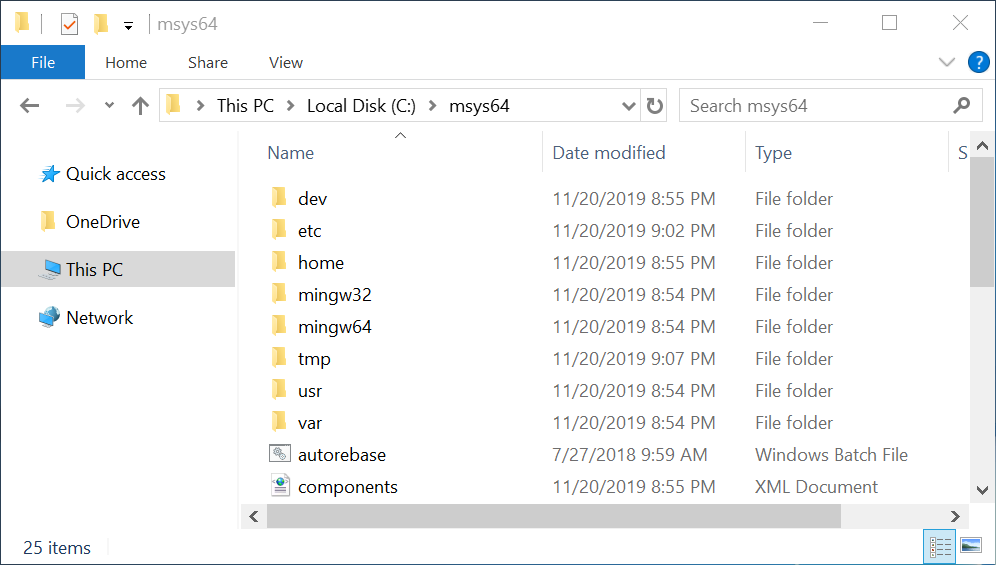

You have installed the MSYS2 Unix environment on your hard drive. You can find its files in the C:\msys64 directory if you did not change the default installation path. Go to this directory and run the msys2.exe file. It opens the window where you can work with the Bash shell. Figure 2-4 shows this window.

The second option is to install a Unix environment from Microsoft. It is called Windows Subsystem for Linux (WSL). This environment is available for Windows 10 and later versions. It does not work on Windows 8 and 7. You can find the manual to install WSL on the Microsoft website.

If you use Linux, you do not need to install Bash. You already have it. In many desktop environments, the Ctrl+Alt+T shortcut opens a window with the Bash shell.

If you use macOS, you have everything to launch Bash too. Here are the steps for doing that:

- Click the magnifying glass icon in the upper right corner of the screen. It opens the Spotlight search program.

- The dialog box appears. Enter the text “Terminal” there.

- Spotlight shows you a list of applications. Click on the first line in the list with the “Terminal” text.
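Once a terminal window is open, you can check that a Bash interpreter is really available. The following is a minimal sketch. It assumes only that the bash executable is reachable through the PATH variable; the bash_path variable name is chosen for this example.

```bash
# Look up the Bash executable; command -v prints its path if it is installed.
bash_path="$(command -v bash)"

if [ -n "$bash_path" ]; then
  echo "Bash found at: $bash_path"
  # The first line of the --version output names the installed release.
  "$bash_path" --version | head -n 1
else
  echo "Bash is not installed or not in PATH"
fi
```

If the check prints a path and a version line, the environment is ready for the examples that follow.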

Terminal Emulator

Bash shell is not a regular GUI application. It does not even have its own window. When you run the msys2.exe file, it opens a window of the terminal emulator program.

An emulator is a program that mimics the behavior of another program, OS or device. The emulator solves the compatibility task. For example, you want to run a Windows program on Linux. There are several ways to do that. One option is using the emulator of the Windows environment for Linux. It is called Wine. Wine provides its own version of the Windows system libraries. When you run your program, it uses these libraries and behaves as if it were running on Windows.

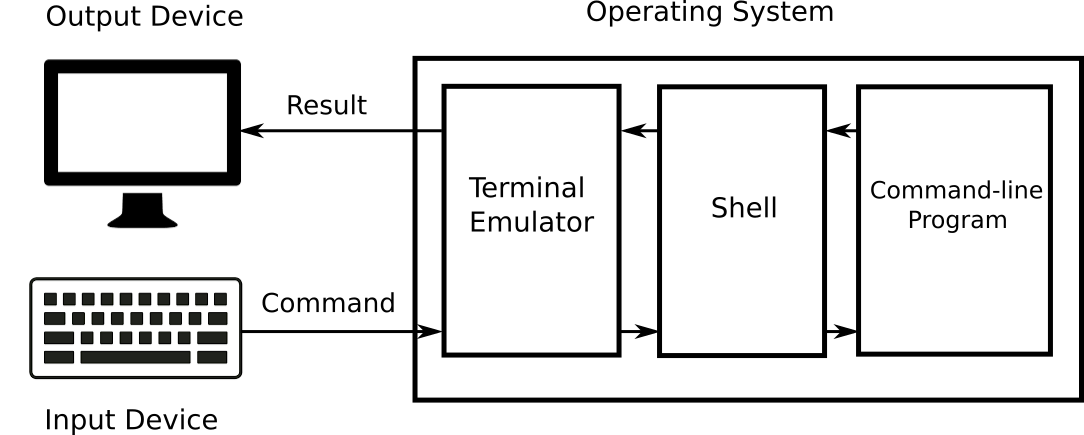

The terminal emulator solves the compatibility task too. Command-line programs are designed to work through a terminal device. Nobody uses such devices today. Cheap personal computers and laptops have replaced them. However, there are still many programs that require a terminal to work. You can run them using the terminal emulator. It uses the shell to pass data to the program. When the program returns some results, the shell passes them to the terminal emulator. Then the emulator displays the results on the screen.

Figure 2-5 explains the interaction between input/output devices, the terminal emulator, the shell and the command-line program.

The terminal emulator window in Figure 2-4 shows the following two lines:

ilya.shpigor@DESKTOP-1NBVQM4 MSYS ~

$

The first line starts with the username. The username is ilya.shpigor in my case. Then there is the @ symbol and the computer name DESKTOP-1NBVQM4. You can change the computer name via Windows settings. The word MSYS comes next. It means the platform where Bash is running. At the end of the line, there is the symbol ~. It is the path to the current directory.

Command Interpreter

All interpreters have two working modes: non-interactive and interactive. When you choose the non-interactive mode, the interpreter loads the source code file from the disk and executes it. You do not need to type any commands or control the interpreter. It does everything on its own.
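Here is a small sketch of the non-interactive mode. It writes a two-line script to a temporary directory and asks Bash to execute the file. The hello.sh name and the work_dir and output variables are made up for this illustration.

```bash
# Create a temporary directory for the demo script.
work_dir="$(mktemp -d)"

# Write a two-command script to disk (hello.sh is a hypothetical name).
cat > "$work_dir/hello.sh" <<'EOF'
echo "Hello from a script"
echo "Done"
EOF

# Non-interactive mode: Bash loads the file and executes it without any typing.
output="$(bash "$work_dir/hello.sh")"
echo "$output"

# Clean up the temporary files.
rm -r "$work_dir"
```

Bash reads both commands from the file and runs them one after another. You do not type anything while the script works.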

When you choose the interactive mode, you type each command to the interpreter manually. If the interpreter is integrated with the OS and works in the interactive mode, it is called a command shell or shell.

A command shell provides access to the settings and functions of the OS. You can perform the following tasks using it:

- Run programs and system services.

- Manage the file system.

- Control peripherals and internal devices.

- Access the kernel features.

Demand of the CLI

Why would somebody learn the command-line interface (CLI) today? It appeared 40 years ago for computers that were thousands of times slower than today’s. Then the graphical interface supplanted the CLI on PCs and laptops. Everybody prefers to use a GUI nowadays.

The CLI seems to be an outdated technology. However, this statement is wrong. There must be a reason why developers include Bash in all modern versions of macOS and Linux distributions. Windows also has a command shell called Cmd.exe. Microsoft replaced it with PowerShell in 2006. Just think about this fact. The developer of the most popular desktop OS created a new command shell in the 2000s. All these points confirm that the CLI is still in demand.

What tasks does the shell perform in modern OSes? First of all, it is a tool for system administration. The OS consists of the kernel and software modules. These modules are libraries, services and system utilities. Most of the modules have settings and special modes of operation. You do not need them in your daily work. Therefore, you cannot access these settings via GUI in most cases.

If the OS fails, you need system utilities to recover it. They have a command-line interface because a GUI often is not available after the failure.

Besides administration tasks, you need the CLI when connecting to computers over a network. There are GUI programs for such connections. Examples are TeamViewer and Remote Desktop. They require a stable and fast network connection to work well. If the connection is not reliable, the GUI programs are slow and often fail. The command interface does not have such a limitation. The remote server receives your command even if the link is poor.

You can say that a regular user does not deal with administration tasks and network connections. Even if you do not have such tasks, using a command shell speeds up your daily work with the computer. Here are a few things that you can do more effectively with the CLI than with a GUI:

- Operations on files and directories.

- Creating data backups.

- Downloading files from the Internet.

- Collecting statistics about your computer’s resource usage.

An example will explain the effectiveness of the CLI. Suppose you rename several files on the disk. You add the same suffix to their names. If you have a dozen files, you can do this task with Windows Explorer in a couple of minutes. Now imagine that you should rename thousands of files this way. You will spend the whole day doing that with Explorer. If you use the shell, you need to launch a single command and wait for several seconds. It will rename all your files automatically.

The example with renaming files shows a strength of the CLI: scalability. Scalability means that the same solution handles both small and large amounts of input data well. In the case of the shell, the solution is a command. The same command renames ten files or a thousand files, and launching it takes you the same effort.
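The renaming task can be sketched in a few lines. This is only one possible way to do it. The report file names, the .bak suffix and the work_dir variable are assumptions made for the demo, which runs in a temporary directory so that it touches no real files.

```bash
# Work in a temporary directory so that no real files are touched.
work_dir="$(mktemp -d)"
touch "$work_dir/report1" "$work_dir/report2" "$work_dir/report3"

# Append the same suffix to every file in the directory.
# The loop body stays the same for three files or for three thousand.
for file in "$work_dir"/*; do
  mv -- "$file" "$file.bak"
done

# Save the resulting file names and print them.
renamed="$(ls "$work_dir")"
echo "$renamed"

# Clean up the temporary files.
rm -r "$work_dir"
```

The loop does not depend on the number of files. This is what makes the solution scalable.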

Experience with the command interface is a great benefit for any programmer. When you develop a complex project, you manage plenty of text files with the source code. You use a GUI editor to change a single file. It works well until you need to introduce the same change in many files. For example, it can be the new version of the license information in the file headers. You waste your time when solving such a task with the editor. Command-line utilities make this change much faster.
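The license header case can be sketched with the sed utility, which is one of several tools that fit this task. The file names, the header text and the variable names below are invented for the example. The sketch writes each result to a temporary file and then replaces the original, which avoids relying on in-place editing options that differ between sed versions.

```bash
# Prepare two sample source files with an old license header.
work_dir="$(mktemp -d)"
for name in main.c util.c; do
  printf '// License: v1\nint x;\n' > "$work_dir/$name"
done

# Apply the same text replacement to every .c file.
for file in "$work_dir"/*.c; do
  sed 's/License: v1/License: v2/' "$file" > "$file.tmp" && mv -- "$file.tmp" "$file"
done

# Read the first line of each file to check the change.
main_header="$(head -n 1 "$work_dir/main.c")"
util_header="$(head -n 1 "$work_dir/util.c")"
echo "$main_header"
echo "$util_header"

# Clean up the temporary files.
rm -r "$work_dir"
```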

You need to understand the CLI to deal with compilers and interpreters. These programs usually do not have a graphical interface. You should run them via the command line and pass the names of the source code files. The reason for such workflow is the poor scalability of the GUI. If you have plenty of source code files, you cannot handle them effectively via the GUI.

There are special programs to edit and compile source code. Such programs are called integrated development environments (IDEs). You can compile a big project using an IDE and its GUI. However, the IDE calls the compiler via the command line internally. Therefore, you should deal with the compiler’s CLI if you want to change its options or working mode.

If you are an experienced programmer, knowing the CLI encourages you to develop helper utilities. It happens because writing a program with a command interface is much faster than with a GUI. The speed of development is essential when solving one-off tasks.

Here is an example situation when you would need to write a helper utility. Suppose that you have to make a massive change in the source code of your project. You can do it with an IDE by repeating the same action many times.

Another option is to spend time writing a utility that will do this job. You should compare the required time for both ways of solving your task. If you are going to write a GUI helper utility, it takes more time than writing a CLI utility. This can lead you to the wrong decision to solve the task manually using the IDE. Automating your job is the best option in most cases. It saves your time and helps to avoid mistakes.

You decide if you need to learn the CLI. I have given only a few examples of when it is beneficial. It is hard to switch from using a GUI to a CLI. You have to re-learn many things that you do with Windows Explorer regularly. But once you get the hang of the command shell, your new productivity will surprise you.

Navigating the File System

Let’s start introducing the Unix environment and Bash with a file system. A file system is software that dictates how to store data on disks and read it back. It covers the following aspects:

- API to access data on the disk that programs can use.

- Universal way for accessing different storage devices.

- Physical operations on the disk storage.

First, we will look at the differences between the directory structure in Unix and Windows. Then we will learn the Bash commands for navigating the file system.

Directory Structure

There is an address bar at the top of the Windows Explorer window. It displays the absolute path to the current directory. An absolute path shows the place of the file system object regardless of the current directory.

Another way to specify the file system object place is using the relative path. It shows you how to reach the object from the current directory.

A directory is a file system cataloging structure. It can contain files and other directories. Windows terminology calls it a folder. Both names mean the same kind of file system object.

Figure 2-6 shows an Explorer window. The address bar shows This PC > Local Disk (C:) > msys64 there. It matches the C:\msys64 absolute path. Thus, we see the contents of the msys64 directory on the C drive in the Explorer window.

The letter C in the path denotes the local system disk drive. The local drive means the device that is connected to your computer physically. You can also have a network drive. You access such a device via the network. The system disk means one that has the Windows installation on it.

If you run the MSYS2 terminal emulator, it shows you the current absolute path at the end of the first line. This line behaves like the address bar of Windows Explorer. When you change the current directory, the current path changes too. However, you have to consider that the terminal and Explorer show you different paths for the same current directory. It happens because directory structures of the Unix environment and Windows do not match.

Windows marks each disk drive with a Latin letter. You can open the drive using Explorer as a regular folder. Then you access its content.

For example, let’s open the C system drive. It has a standard set of directories. Windows has created them during the installation process. If you open the C drive in Explorer, you see the following directories there:

- Windows

- Program Files

- Program Files (x86)

- Users

- PerfLogs

These directories store OS components, user applications and temporary files.

You can connect extra disk drives to your computer. Another option is to split a single disk into several logical partitions. Windows will assign the Latin letters (D, E, F, etc) to these extra disks and partitions. You are allowed to create any directory structure there. Windows does not restrict you in any way.

The File Allocation Table (FAT) file system dictates how Windows manages disks and provides you access to them. Microsoft developed this file system for the MS-DOS OS. The principles of FAT became the basis of the ECMA-107 standard. The next-generation file system from Microsoft is called NTFS. It replaced the obsolete FAT in modern versions of Windows. However, the basic principles of disks and directory structure are the same in NTFS and FAT. The reason for that is the backward compatibility requirement.

The Unix directory structure follows the POSIX standard. This structure gives you less freedom than the Windows one. It has several predefined directories that you cannot move or rename. You are allowed to put your data in the specific paths only.

The POSIX standard says that the file system should have a top-level directory. It is called the root directory. The slash sign / denotes it. All directories and files of all connected disk drives are inside the root directory.

If you want to access the contents of a disk drive, you should mount it. Mounting means embedding the contents of a disk into the root directory. When mounting is done, you can access the disk contents through some path. This path is called a mount point. If you go to the mount point, you enter the file system of the disk.

Let’s compare the Windows and Unix directory structures by example. Suppose that your Windows computer has two local disks C and D. Listing 2-1 shows their directory structure.

Suppose that you have installed the Unix environment on your Windows. Then you run the terminal emulator and get the directory structure from Listing 2-2.

Once you launch the MSYS2 terminal, you enter the Unix environment. Windows paths don’t work there. You should use Unix paths instead. For example, you can access the C:\Windows directory via the /c/Windows path only.

There is another crucial difference between Unix and Windows file systems besides the directory structure. The character case makes strings differ in the Unix environment. It means that two words with the same letters are not the same if their character case differs. For example, the Documents and documents words are not equal. Windows paths are not case-sensitive. If you type the c:\windows path in the Explorer address bar, it opens the C:\Windows directory. This approach does not work in the Unix environment. You should type all characters in the proper case.

Here is the last point to mention regarding Unix and Windows file systems. Use the slash sign / to separate directories and files in Unix paths. When you work with Windows paths, you use backslash \ for that.
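The path rules above can be illustrated with a small conversion function. This is a sketch and not a standard command: the win_to_unix name is hypothetical, and the function assumes the MSYS2 convention from this section, where drive C is available under /c. It handles only simple paths that start with a drive letter.

```bash
# win_to_unix is a hypothetical helper written for this example.
# It turns a path like C:\Windows into the /c/Windows form.
win_to_unix() {
  local win_path="$1"
  local drive="${win_path%%:*}"   # text before the first colon, e.g. "C"
  local rest="${win_path#*:}"     # text after the first colon, e.g. "\Windows"

  # Lowercase the drive letter so that the result has the /c form.
  drive="$(printf '%s' "$drive" | tr '[:upper:]' '[:lower:]')"

  # Replace every backslash with a slash and put the drive in front.
  printf '/%s%s\n' "$drive" "${rest//\\//}"
}

win_to_unix 'C:\Windows\System32'
```

The function keeps the character case of directory names unchanged, because the Unix environment distinguishes Windows and windows.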

File System Navigation Commands

We are ready to learn our first Bash commands. Here are the steps to execute a shell command:

- Open the terminal window.

- Type the command there.

- Press Enter.

The shell will execute your command.

When the shell is busy, it cannot process your input. You can distinguish the shell’s state by the command prompt. It is a sequence of one or more characters. The default prompt is the dollar sign $. You can see it in Figure 2-4. If the shell prints the prompt, it is ready for executing your command.

Windows Explorer allows you the following actions to navigate the file system:

- Display the current directory.

- Go to a specified disk drive or directory.

- Find a directory or file on the disk.

You can do the same actions with the shell. It provides you a corresponding command for each action. Table 2-1 shows these commands.

| Command | Description | Examples |
|---|---|---|
| ls | Display the contents of the directory. If you call the command without parameters, it shows you the contents of the current directory. | ls |
| | | ls /c/Windows |
| pwd | Display the path to the current directory. When you add the -W parameter, the command displays the path in the Windows directory structure. | pwd |
| cd | Go to the directory at the specified relative or absolute path. | cd tmp |
| | | cd /c/Windows |
| | | cd .. |
| mount | Mount the disk to the root file system. If you call the command without parameters, it shows a list of all mounted disks. | mount |
| find | Find a file or directory. The first parameter specifies the directory to start searching. | find . -name vim |
| | | find /c/Windows -name "*vim*" |
| grep | Find a file by its contents. | grep "PATH" * |
| | | grep -Rn "PATH" . |
| | | grep "PATH" * .* |

Of the commands in Table 2-1, Bash itself executes only pwd and cd. They are called built-ins. Separate utilities perform all the other commands of the table. Bash calls an appropriate utility if it cannot execute your command on its own.

The MSYS2 environment provides a set of GNU utilities. These are auxiliary highly specialized programs. They give you access to the OS features in Linux and macOS. However, their capabilities are limited in Windows. Bash calls GNU utilities to execute the following commands of Table 2-1:

- ls

- mount

- find

- grep

When you read an article about Bash on the Internet, its author may confuse the terms “command” and “utility” and call both things “commands”. This is not a big issue. However, I recommend that you distinguish them. Calling a utility takes more time than calling a Bash built-in. It causes performance overhead in some cases.
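Bash can tell you which kind of command a name refers to. The sketch below uses the type built-in with its -t option. The list of names in the loop is just a sample for this demonstration.

```bash
# type -t prints one word that describes how Bash would run a name:
# "builtin" for commands that Bash executes itself,
# "file" for utilities that it finds on the disk.
for name in cd pwd ls grep; do
  printf '%s: %s\n' "$name" "$(type -t "$name")"
done
```

You should see the word builtin for cd and pwd. For utilities such as ls and grep, the result is usually file. It can differ if your Bash configuration defines an alias or a function with the same name.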

pwd

Let’s consider the commands in Table 2-1. You have just started the terminal. The first thing you do is to find out the current directory. You can get it from the command prompt, but it depends on your Bash configuration. You do not have this feature enabled by default in Linux and macOS.

When you start the terminal, it opens the home directory of the current user. Bash abbreviates this path by the tilde symbol ~. You see this symbol before the command prompt. Use tilde instead of the home directory absolute path. It makes your commands shorter.

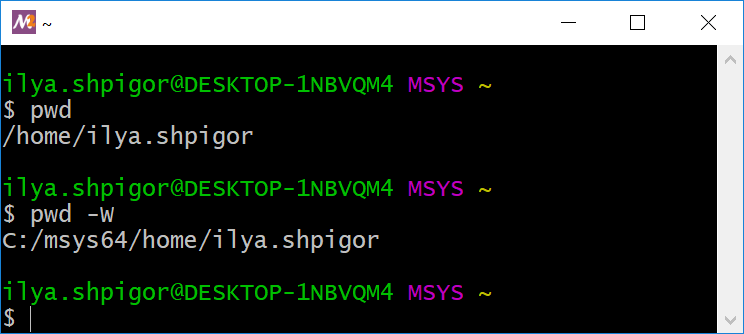

Call the pwd command to get the current directory. Figure 2-7 shows this call and its output. The command prints the absolute path to the user’s home directory. It equals /home/ilya.shpigor in my case.

If you add the -W option to the call, the command prints the path in the Windows directory structure. It is useful when you create a file in the MSYS2 environment and open it in a Windows application afterward. Figure 2-7 shows you the result of applying the -W option.

Figure 2-7. pwd command

What is a command option? When a program has a CLI only, you have very limited ways to interact with it. The program needs some data on input to do its job. The shell provides you a simple way to pass this data. Just type it after the command name. These data are called arguments of the program. Bash terminology distinguishes two kinds of arguments: parameters and options. A parameter is a regular word or character you pass to the program. An option or key is an argument that switches the mode of a program. The standard dictates the option format. It is a word or character that starts with a dash - or a double dash --.

You pass data to the CLI programs and Bash built-ins in the same way. Use parameters and options for doing that.

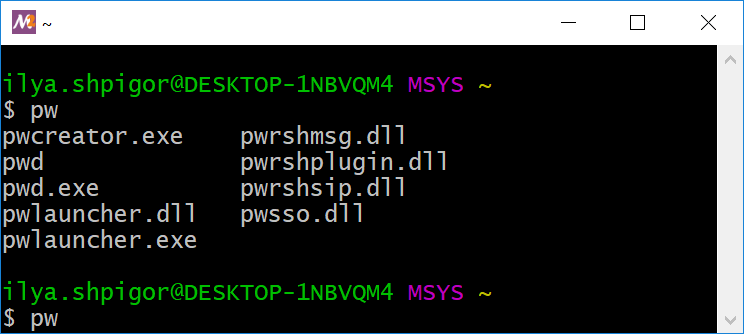

Typing long commands is inconvenient. Bash provides the autocomplete feature to save your time. Here are the steps for using it:

- Type the first few letters of the command.

- Press the Tab key.

- If Bash finds the command you mean, it completes it.

- If several commands start with the typed letters, autocomplete does not happen. Press Tab again to see the list of these commands.

Figure 2-8 demonstrates how the autocomplete feature works. Suppose that you type “pw”. Then you press the Tab key twice. Bash shows you the commands that start with “pw” as Figure 2-8 shows.

ls

We got the current directory using the pwd command. The next step is checking the directory content. The ls utility does this task.

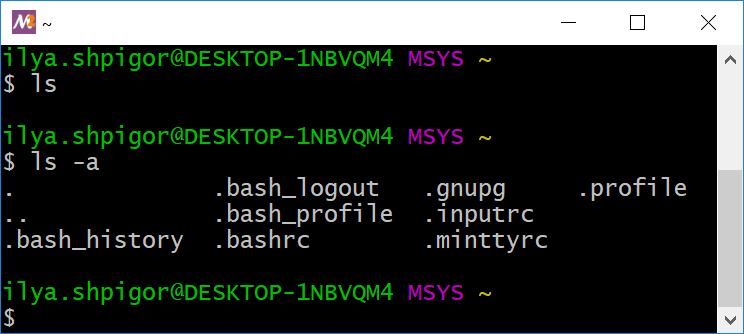

Suppose that you have just installed the MSYS2 environment. Then you launched the terminal for the first time. You are in the user’s home directory. Call the “ls” command there. Figure 2-9 shows its result. The command prints nothing. It means that the directory is empty or has hidden files and directories only.

Figure 2-9. ls utility

Windows has a concept of hidden files and directories. The Unix environment also has it. Applications and the OS create hidden files for their own needs. These files store configuration and temporary data.

You can make a file hidden in Windows by changing its attribute. If you want to do the same in Unix, you should add a dot at the beginning of the filename.

When you launch the ls utility without parameters, it does not show you hidden objects. You can add the -a option to see them. Figure 2-9 shows a result of such a call.
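You can try the hidden files rule in a scratch directory. The following sketch creates one regular file and one hidden file and then compares the two ls calls. The file names and variable names are chosen for this example only.

```bash
# Create a temporary directory with one regular and one hidden file.
work_dir="$(mktemp -d)"
touch "$work_dir/notes.txt" "$work_dir/.settings"

# Without options, ls skips names that start with a dot.
visible="$(ls "$work_dir")"

# The -a option makes ls list hidden objects too.
# The output also includes the special entries . and ..
all="$(ls -a "$work_dir")"

echo "Output of ls:"
printf '%s\n' "$visible"
echo "Output of ls -a:"
printf '%s\n' "$all"

# Clean up the temporary files.
rm -r "$work_dir"
```

Here the -a option switches the mode of the utility, and the directory path is its parameter.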

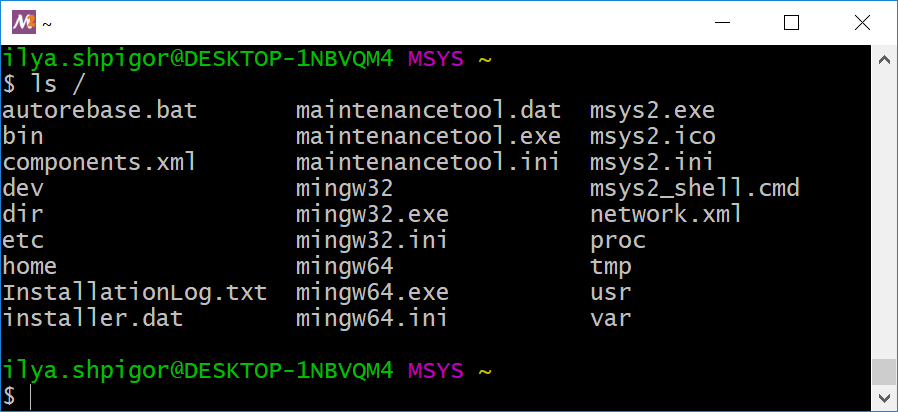

The ls utility can show the contents of the specified directory. Pass a directory’s absolute or relative path to the utility. For example, the following command shows the contents of the root directory:

ls /

Figure 2-10 shows the output of this command.

Figure 2-10. ls utility

There are no /c and /d directories in Figure 2-10. These are the mount points of C and D disk drives according to Listing 2-2. The mount points are in the root directory. Why doesn’t the ls utility print them? It happens because the Windows file system does not have a concept of mount points. Therefore, it does not have directories /c and /d. They are present in the Unix environment only. These are not real directories but paths where you can access the disk file systems. The ls utility reads the directory contents in the Windows file system. Thus, it does not show the mount points. The ls utility behaves differently in Linux and macOS. It shows mount points properly there.

mount

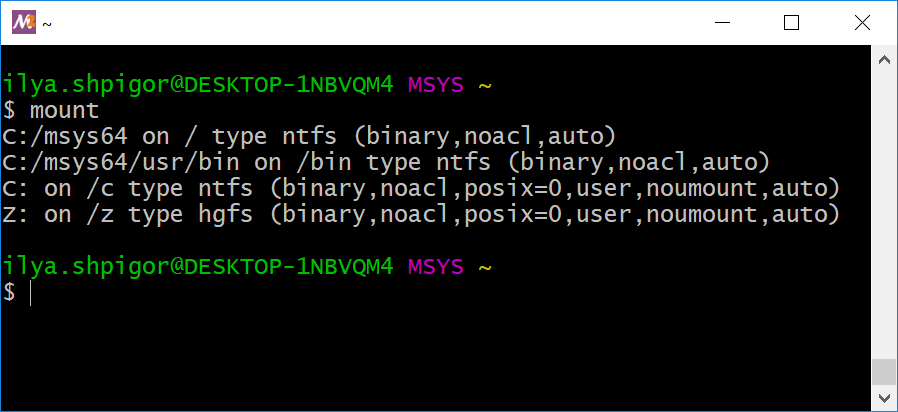

If your computer has several disk drives, you can read their mount points. Call the mount utility without parameters for doing that. Figure 2-11 shows its output.

Figure 2-11. mount utility

Consider this output as a table with four columns. The columns display the following values:

- The disk drive, its partition or directory. It is the object that the OS has mounted to the root directory.

- Mount point. It is the path where you can access the mounted disk drive.

- The file system type of the disk drive.

- Mounting parameters. An example is access permissions to the disk contents.

If we split the mount utility output into these columns, we get Table 2-2.

| Mounted partition | Mount point | FS type | Mounting parameters |
|---|---|---|---|
| C:/msys64 | / | ntfs | binary,noacl,auto |
| C:/msys64/usr/bin | /bin | ntfs | binary,noacl,auto |
| C: | /c | ntfs | binary,noacl,posix=0,user,noumount,auto |
| Z: | /z | hgfs | binary,noacl,posix=0,user,noumount,auto |

Table 2-2 confuses most Windows users. MSYS2 mounts C:/msys64 as the root directory. Then it mounts the C and Z disks into the root. Their mount points are /c and /z. It means that you can access the C drive via the C:/msys64/c Windows path in the Unix environment. However, C:/msys64 is the subdirectory of disk C in the Windows file system. This looks like a contradiction.

Actually, there is no contradiction. The /c path is the mount point that exists only in the Unix environment. It does not exist in the Windows file system. Therefore, Windows knows nothing about the C:/msys64/c path. It is just invalid if you try to open it via Explorer. You can imagine the mount point /c as the shortcut to drive C that exists in the MSYS2 environment only.
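The four columns of Table 2-2 can be extracted from a line of the mount output with Bash itself. The sketch below assumes the line layout "partition on mount-point type fs-type (parameters)". The sample line is typed in by hand to match the first row of the table, and all variable names are made up for the example. Paths with spaces would need more careful parsing.

```bash
# A sample line of the mount output, written by hand for this demo.
line='C:/msys64 on / type ntfs (binary,noacl,auto)'

# read splits the line on spaces. The words "on" and "type" go to the
# placeholder variable _ because they carry no data.
read -r device _ mount_point _ fs_type params <<< "$line"

# Strip the surrounding parentheses from the parameters.
params="${params#"("}"
params="${params%")"}"

printf 'Mounted partition:   %s\n' "$device"
printf 'Mount point:         %s\n' "$mount_point"
printf 'FS type:             %s\n' "$fs_type"
printf 'Mounting parameters: %s\n' "$params"
```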

The output of the mount utility took up a lot of screen space. You can clear the terminal window with the Ctrl+L keystroke.

Another useful keystroke is Ctrl+C. It interrupts the currently running command. Use it if the command hangs or you want to stop it.

cd

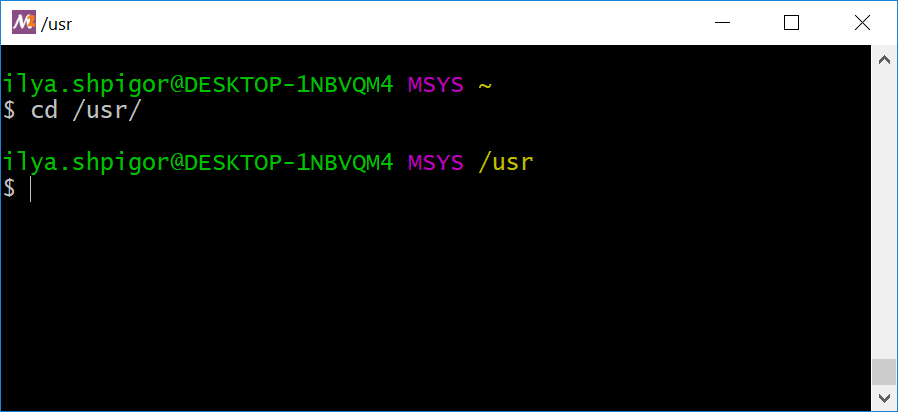

You have learned everything about the current directory. Now you can change it. Suppose that you are looking for the Bash documentation. You can find it in the /usr system directory. Installed applications store their non-executable files there. Call the cd command to go to the /usr path. Do it this way:

cd /usr

Do not forget about autocompletion. It works for both the command and its parameters. Just type “cd /u” and press the Tab key. Bash adds the directory name usr automatically. Figure 2-12 shows the result of the command.

Figure 2-12. cd command

The cd command does not output anything if it succeeds. It changes the current directory and that is it. You can read the new path in the line above the command prompt. This line shows the /usr path after our cd call.

The cd command accepts both absolute and relative paths. Relative paths are shorter. Therefore, you type them faster. Prefer them when navigating the file system using a command shell.

There is a simple rule to distinguish the type of path. An absolute path starts with a slash /. An example is /c/Windows/system32. A relative path starts with a directory name. An example is Windows/system32.

Now you are in the /usr directory. You can get a list of its subdirectories and go to one of them. Suppose that you want to go one level higher and reach the root directory. There are two ways to do that: go to the absolute path / or to the special relative path .. (two dots). The .. path always points to the parent directory of the current one. Use it in the cd call this way:

cd ..
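If you want to try absolute paths, relative paths and the .. path without touching system directories, a scratch directory is enough. The following sketch builds a small tree in a temporary location. The share/doc layout imitates the /usr contents, and the base and current variable names are chosen for the demo.

```bash
# Create a temporary directory and store its absolute path.
# The cd and pwd pair normalizes the path that mktemp prints.
base="$(cd "$(mktemp -d)" && pwd)"
mkdir -p "$base/share/doc"

cd "$base"        # absolute path: it starts with a slash
cd share/doc      # relative path: Bash resolves it from the current directory
cd ..             # go to the parent of the current directory

# Now the current directory is the share subdirectory.
current="$(pwd)"
echo "$current"

# Leave the tree before deleting it.
cd /
rm -r "$base"
```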

Go back to the /usr directory. Then run the ls utility there. It will show you the share subdirectory. Go to this directory and call ls again. You will find the doc directory there. It contains Bash documentation. Call the cd command this way to reach the documentation:

cd doc/bash

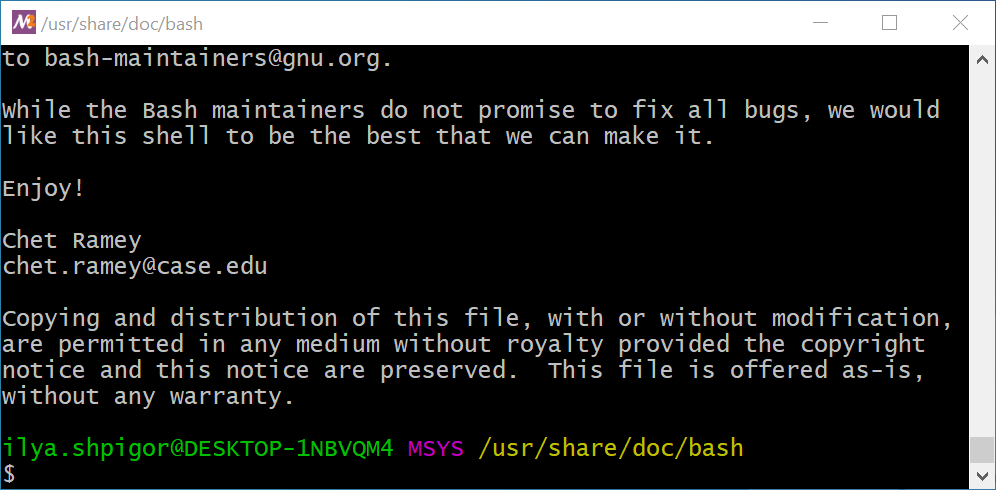

You are in the /usr/share/doc/bash directory now. Call the ls utility there. It will show you several files. One of them is README. It contains a brief description of the Bash interpreter.

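You found the documentation file. The next step is to print its contents. The cat utility does that. Here is an example of how to run it:

cat README

Figure 2-13 shows the terminal window after the cat call.

Figure 2-13. cat utility

Bash can also print the contents of a file without calling the cat utility. For a file named README.txt, the command looks like this:

echo "$(< README.txt)"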

The README file contents do not fit in the terminal window. Therefore, you see the tail of the file in Figure 2-13. Use the scroll bar on the window’s right side to check the head of the file. Also, use the Shift+PageUp and Shift+PageDown hotkeys to scroll pages up and down. The Shift+↑ and Shift+↓ keystrokes scroll the lines.

Command History

Whenever you call a command, Bash saves it in the command history. You can navigate the history with the up and down arrow keys. Bash automatically types the corresponding command. You just need to press Enter to launch it. For example, you have called the “cat README” command. Press the up arrow and Enter to repeat it.

The Ctrl+R shortcut brings up a search over all command history. Press Ctrl+R and start typing. Bash will show you the most recent command that contains these characters. Press Enter to execute it.

The history command shows you the whole history. Run it without parameters this way:

history

The history stores the commands that you have executed. It does not keep a command that you typed and then erased.

There is a trick to save a command to the history without executing it. Add the hash symbol # before the command and press Enter. Bash stores the typed line, but it does not execute it. This happens because the hash symbol starts a comment. When the interpreter meets a comment, it ignores this line. However, Bash adds the commented lines to the history because they are legal constructions of the language.

Here is an example of the trick with comment for our cat utility call:

#cat README

You have saved the commented command in the history. Now you can find it there by pressing the up arrow key. Remove the hash symbol at the beginning of the line and press Enter. Bash will execute your command.

You can do the comment trick with the Alt+Shift+3 shortcut. It works in most modern terminal emulators. Here are the steps for using the shortcut:

- Type a command, but do not press Enter.

- Press Alt+Shift+3.

- Bash saves the command in the history without executing it.

Sometimes you need to copy text from the terminal window. It can be a command or its output. Here is an example. Suppose that some document needs a part of the Bash README file. Use the clipboard to copy it. The clipboard is temporary storage for text data. When you select something in the terminal window with a mouse, the clipboard saves it automatically. Then you can paste this data to any other window.

These are the steps to copy text from the terminal window:

- Select the text with the mouse. Hold down the left mouse button and drag the cursor over the required text.

- Press the middle mouse button to paste the text from the clipboard into the same or another terminal window. You insert the text at the current cursor position.

- Right-click and select the “Paste” item to paste the text into an application other than the terminal.

find

It is inconvenient to search for a file or directory with cd and ls commands. The special find utility does it better.

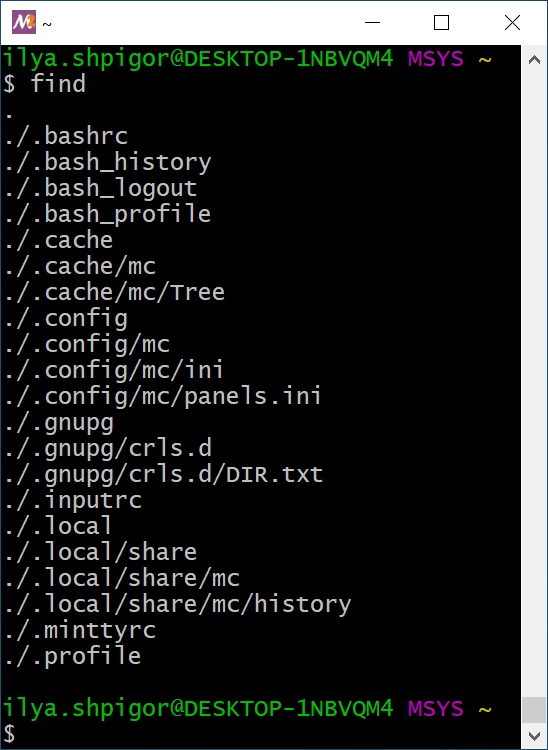

If you run the find utility without parameters, it traverses the contents of the current directory and prints it. The output includes hidden objects. Figure 2-14 shows the result of running find in the home directory.

The first parameter of find is the directory to search in. The utility accepts relative and absolute paths. For example, the following command shows the contents of the root directory:

find /

You can specify search conditions starting from the second parameter. If the found object does not meet these conditions, find does not print it. The conditions form a single expression. The utility has an embedded interpreter that processes this expression.

An example of the find condition is the specific filename. When you call the utility with such a condition, it prints the found files with this name only.

Table 2-3 shows the format of commonly used conditions for the find utility.

| Condition | Meaning | Example |
|---|---|---|
| -type f | Search files only. | find -type f |
| -type d | Search directories only. | find -type d |
| -name <pattern> | Search for a file or directory with the name that matches a glob pattern. The pattern is case-sensitive. | find -name README |
| | | find -name "READ*" |
| | | find -name "READ??" |
| -iname <pattern> | Search for a file or directory with the name that matches a glob pattern. The pattern is case-insensitive. | find -iname readme |
| -path <pattern> | Search for a file or directory with the path that matches a glob pattern. The pattern is case-sensitive. | find -path "*/doc/bash/*" |
| -ipath <pattern> | Search for a file or directory with the path that matches a glob pattern. The pattern is case-insensitive. | find . -ipath "*/DOC/BASH/*" |
| -a or -and | Combine several conditions using the logical AND. If the found object fits all conditions, the utility prints it. | find -name README -a -path "*/doc/bash/*" |
| -o or -or | Combine several conditions using the logical OR. If the found object fits at least one condition, the utility prints it. | find -name README -o -path "*/doc/bash/*" |
| ! or -not | The logical negation (NOT) of the condition. If the found object does not fit the condition, the utility prints it. | find -not -name README |
| | | find ! -name README |
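The conditions from Table 2-3 are easier to compare when you run them against a small, known directory tree. The following sketch builds a throwaway tree with mktemp and searches it. The directory layout and file names are made up for illustration, and the sketch assumes GNU find and sort.

```bash
# Build a throwaway directory tree so the searches have something to find.
sandbox=$(mktemp -d)
mkdir -p "$sandbox/doc/bash" "$sandbox/src"
touch "$sandbox/doc/bash/README" "$sandbox/doc/bash/readme.html" "$sandbox/src/main.c"
cd "$sandbox"

# Regular files only (sorted to make the output stable).
files=$(find . -type f | LC_ALL=C sort)

# Case-sensitive name: only README fits.
exact=$(find . -name "README")

# Case-insensitive glob pattern: README and readme.html both fit.
loose=$(find . -iname "readme*" | LC_ALL=C sort)

# Negation: every regular file that is not called README.
others=$(find . -type f -not -name "README" | LC_ALL=C sort)

printf '%s\n' "$files" "---" "$exact" "---" "$loose" "---" "$others"

# Leave and remove the sandbox.
cd - > /dev/null
rm -rf "$sandbox"
```

The quotes around the patterns keep Bash from touching them, so find receives them unchanged.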

A glob pattern is a search query that contains wildcard characters. Bash allows three wildcard characters: *, ? and [. The asterisk stands for any number of any characters. A question mark means a single character of any kind.

Here is an example of glob patterns. The string README matches all of the following patterns:

- *ME

- READM?

- *M?

- R*M?
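You can check such matches yourself. The sketch below relies on the Bash [[ ... == ... ]] construct, which compares a string with a glob pattern. This construct is not covered in this section, so treat it as an assumption of the example rather than a part of the find utility.

```bash
# [[ string == pattern ]] tests a string against a glob pattern.
# The pattern variable stays unquoted inside [[ ]] so that Bash treats it as a pattern.
name="README"
matched=0

# The patterns are quoted in the list so that Bash does not expand them to filenames.
for pattern in "*ME" "READM?" "*M?" "R*M?"; do
  if [[ $name == $pattern ]]; then
    echo "$name matches $pattern"
    matched=$((matched + 1))
  else
    echo "$name does not match $pattern"
  fi
done

echo "matched patterns: $matched"
```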

Square brackets indicate a set of characters at a specific position. For example, the pattern “[cb]at.txt” matches the cat.txt and bat.txt files. You can apply this pattern to the find call this way:

find . -name "[cb]at.txt"

Let’s put glob patterns into practice. Suppose that you do not know where the Bash README file is and you are looking for it. Then you should use the find utility.

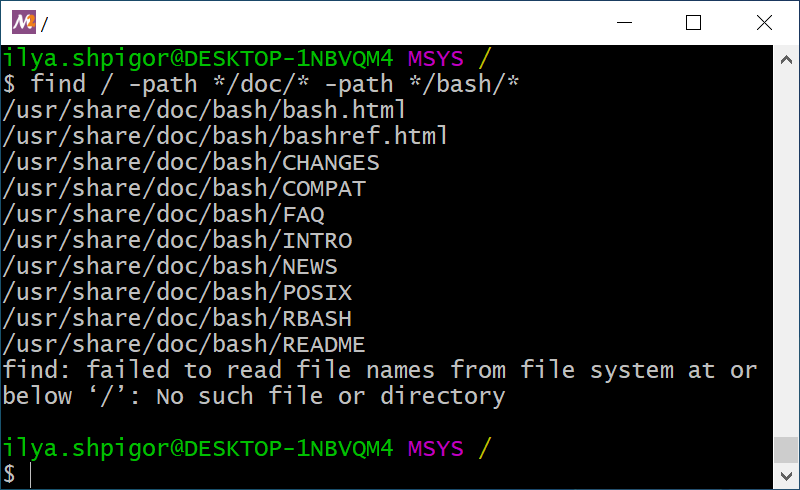

Start searching with the utility from the root directory. Now you need a search condition. It is common practice in Unix to store documentation in directories called doc. Therefore, you can search for files in these directories only. This way, you get the following find call:

find / -path "*/doc/*"

The command shows you all documentation files on all mounted disks. This is a huge list. You can shorten it with an extra search condition. The Bash documentation should have its own directory, which is called bash. Add this path as the second search condition. Then you get the following command:

find / -path "*/doc/*" -path "*/bash/*"

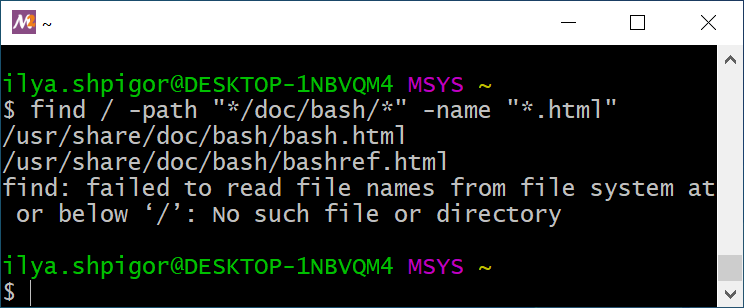

Figure 2-15 shows the result of this command.

The following find call provides the same result:

find / -path "*/doc/*" -a -path "*/bash/*"

Our find calls differ by the -a option between the conditions. The option means logical AND. If you do not specify any logical operator between conditions, find inserts AND by default. This is why both calls provide the same result.
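You can verify the default AND on a small scale. The sketch below compares both forms of the call in a temporary directory. The directory names imitate a Unix layout and are only an illustration.

```bash
sandbox=$(mktemp -d)
mkdir -p "$sandbox/usr/share/doc/bash" "$sandbox/usr/share/doc/xz" "$sandbox/opt/bash"
touch "$sandbox/usr/share/doc/bash/README" \
      "$sandbox/usr/share/doc/xz/README" \
      "$sandbox/opt/bash/notes"
cd "$sandbox"

# Two conditions with no operator between them.
implicit=$(find . -path "*/doc/*" -path "*/bash/*")

# The same conditions joined with an explicit -a.
explicit=$(find . -path "*/doc/*" -a -path "*/bash/*")

echo "$implicit"
if [ "$implicit" = "$explicit" ]; then
  echo "Both calls print the same list."
fi

cd - > /dev/null
rm -rf "$sandbox"
```

Only the file that sits below both a doc and a bash directory passes the two conditions.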

You can see that the find utility reports an error in Figure 2-15. The mount points of Windows disk drives cause it. The utility cannot access them when you start searching from the root directory. You can avoid the problem if you start searching from the /c mount point. Do it this way:

find /c -path "*/doc/*" -a -path "*/bash/*"

There is an alternative solution. You can exclude mount points from the search. The -mount option does this. Apply the option this way:

find / -mount -path "*/doc/*" -a -path "*/bash/*"

When you add the second search condition, the find utility shows a short list of documents. You can find the right README file easily there.

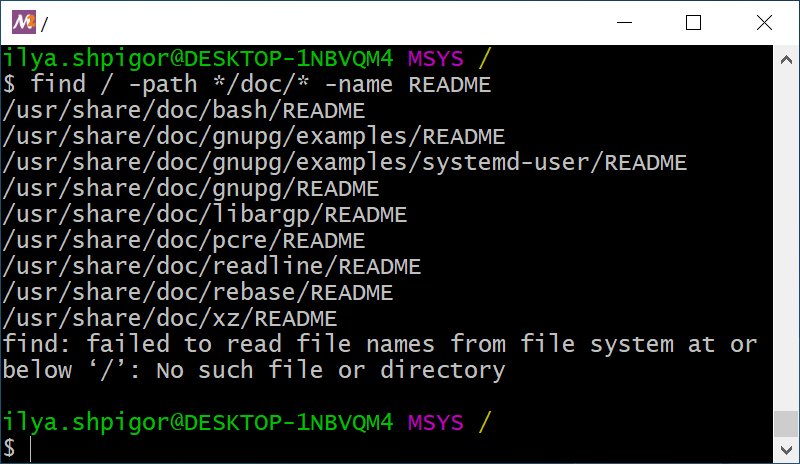

There are other ways to search for the documentation file. Suppose that you know its name. Then you can specify it together with an assumed path. You will get a find call like this:

find / -path "*/doc/*" -name README

Figure 2-16 shows the result of this command.

Again you got a short list of files. It is easy to locate the right file there.

You can group the conditions of the find utility. Do it using the escaped parentheses. Here is an example of using them. Let’s write the find call that searches README files with path */doc/* or LICENSE files with an arbitrary path. This call looks like this:

find / \( -path "*/doc/*" -name README \) -o -name LICENSE

Why do you need backslashes before the parentheses here? The parentheses are part of the Bash syntax. Therefore, Bash treats them as its own language constructs and interprets them itself instead of passing them to the utility. A common form of such interpretation is expansion, which is the replacement of a part of the command with something else. When you escape the parentheses, you force Bash to treat them as ordinary characters. Thus, Bash does not interpret them and passes all search conditions to the find utility as they are.
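The position of the parentheses changes the meaning of the expression. The following sketch runs two groupings of the same three conditions in a temporary directory. The file layout is hypothetical and chosen only to make the difference visible.

```bash
sandbox=$(mktemp -d)
mkdir -p "$sandbox/doc" "$sandbox/src"
touch "$sandbox/doc/README" "$sandbox/doc/LICENSE" \
      "$sandbox/src/README" "$sandbox/src/LICENSE"
cd "$sandbox"

# README files below doc, OR LICENSE files anywhere.
first=$(find . \( -path "*/doc/*" -name README \) -o -name LICENSE | LC_ALL=C sort)

# Objects below doc that are called README OR LICENSE.
second=$(find . -path "*/doc/*" \( -name README -o -name LICENSE \) | LC_ALL=C sort)

echo "First grouping:"
echo "$first"
echo "Second grouping:"
echo "$second"

cd - > /dev/null
rm -rf "$sandbox"
```

The first call also prints src/LICENSE, while the second call restricts both names to the doc directory.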

The find utility can process the found objects. You can specify an action to apply as an extra option. The utility will apply this action to each found object.

Table 2-4 shows the find options that specify actions.

| Option | Meaning | Example |
|---|---|---|
| -exec command {} \; | Execute the specified command on each found object. | find -name README -type f -exec cp {} ~ \; |
| -exec command {} + | Execute the specified command once over all found objects. The command receives all these objects on the input. | find -name "*.html" -type f -exec cp -t ~ {} + |
| -delete | Delete each of the found files. The utility deletes empty directories only. | find -name README -type f -delete |

Table 2-4 shows that there are two variants of the -exec action. They differ by the last symbol. It can be an escaped semicolon \; or a plus sign +. Use the plus sign only if the called command handles several input parameters. You will make a mistake if the command accepts one parameter only. It will process the first found object and skip the rest.
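The difference between the two variants becomes visible if the called command is echo, which prints one line per call. This is a minimal sketch with three made-up files in a temporary directory.

```bash
sandbox=$(mktemp -d)
touch "$sandbox/a.txt" "$sandbox/b.txt" "$sandbox/c.txt"

# \; runs the command once per found file: three echo calls, three lines.
per_file=$(find "$sandbox" -type f -exec echo {} \; | wc -l)

# + puts all found files on one command line: one echo call, one line.
batched=$(find "$sandbox" -type f -exec echo {} + | wc -l)

echo "lines printed with the semicolon: $per_file"
echo "lines printed with the plus sign: $batched"

rm -rf "$sandbox"
```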

Let’s apply the -exec action in practice. Suppose that you want to copy files with the Bash documentation into the home directory. You are interested in the HTML files only.

The first step is preparing the correct find call for searching the files. You should apply two conditions here. The first one checks the directory of the Bash documentation. The second condition checks the file extension. If you combine these conditions, you get the following find call:

find / -path "*/doc/bash/*" -name "*.html"

When you pass the glob pattern to the find utility, always enclose it in double quotes. The quotes do the same as the backslash before parentheses. They prevent Bash from expanding the patterns. Instead, Bash passes them to the find utility.

Figure 2-17 shows the result of our find call. You can see that it found HTML files correctly.

The second step for solving your task is adding the -exec action. The action should call the cp utility. This utility copies files and directories to the specified path. It takes two parameters. The first one is the source object to copy. The second parameter is the target path. When you apply the -exec action, you get the following find call:

find / -path "*/doc/bash/*" -name "*.html" -exec cp {} ~ \;

Run this command. It prints an error about the mount point. Despite the error, the command did its job. It copied the HTML files into the home directory.

How does the command work in detail? It calls the cp utility for each HTML file it found. When calling the utility, find inserts each found object in place of the curly braces {}. Therefore, two cp calls happen here. They look like this:

cp /usr/share/doc/bash/bash.html ~

cp /usr/share/doc/bash/bashref.html ~

Each cp call copies one HTML file to the home directory.
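If you want to try the copying without touching your real home directory, you can rehearse it in a sandbox. In the sketch below, the directory "$sandbox/home" is a stand-in for ~, and the documentation files are empty placeholders created for the example.

```bash
sandbox=$(mktemp -d)
mkdir -p "$sandbox/usr/share/doc/bash" "$sandbox/home"
touch "$sandbox/usr/share/doc/bash/bash.html" \
      "$sandbox/usr/share/doc/bash/bashref.html" \
      "$sandbox/usr/share/doc/bash/README"

# find calls cp once per HTML file and puts the file path in place of {}.
find "$sandbox/usr" -path "*/doc/bash/*" -name "*.html" -exec cp {} "$sandbox/home" \;

# Only the two HTML files arrive in the target directory.
copied=$(ls "$sandbox/home")
echo "$copied"

rm -rf "$sandbox"
```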

Good job! You just wrote your first program in the language of the find utility. The program works according to the following algorithm:

- Find HTML files starting from the root directory. Their paths match the */doc/bash/* pattern.

- Copy each found file into the home directory.

The program is quite simple and consists of two steps only. However, it is a scalable solution for finding and copying files. The same command works without changes whether it finds two HTML files or dozens of them.

You can combine the -exec actions in the same way as the search conditions. For example, let’s print the contents of each found HTML file and count the number of its lines. You should call the cat utility to print the file contents. The wc utility counts the lines. It takes the filename as an input parameter. If you combine cat and wc calls, you get the following find command:

find / -path "*/doc/bash/*" -name "*.html" -exec cat {} \; -exec wc -l {} \;

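There is no logical operator between the -exec actions, so the find utility inserts logical AND by default. An -exec action produces “true” when the called command succeeds. This has a consequence in our case. If the cat utility fails, find does not call the wc utility. It means that find executes the second action only if the first one succeeds.

You can apply the logical OR explicitly. Then find calls wc only if cat fails, because the utility skips the right operand of OR when the left one has already produced “true”. Keep in mind that AND binds tighter than OR. Without grouping, the OR would apply to the whole chain of conditions before it, and find would call wc for every object that does not match them. Therefore, group the -exec actions with escaped parentheses \( and \). It works the same way as grouping search conditions. Here is the command with logical OR:

find / -path "*/doc/bash/*" -name "*.html" \( -exec cat {} \; -o -exec wc -l {} \; \)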
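The standard true and false commands make it easy to observe how find chains actions, because true always succeeds and false always fails. The sketch below is an illustration on one placeholder file. It relies on the rule that an -exec action counts as “true” when the called command succeeds, and it groups the OR pair with escaped parentheses.

```bash
sandbox=$(mktemp -d)
touch "$sandbox/page.html"

# Implicit AND: the second action runs only if the first command succeeds.
and_after_failure=$(find "$sandbox" -name "*.html" -exec false \; -exec echo "second action" \;)
and_after_success=$(find "$sandbox" -name "*.html" -exec true \; -exec echo "second action" \;)

# Grouped OR: the second action runs only if the first command fails.
or_after_failure=$(find "$sandbox" -name "*.html" \( -exec false \; -o -exec echo "fallback" \; \))
or_after_success=$(find "$sandbox" -name "*.html" \( -exec true \; -o -exec echo "fallback" \; \))

echo "AND after failure: [$and_after_failure]"
echo "AND after success: [$and_after_success]"
echo "OR after failure:  [$or_after_failure]"
echo "OR after success:  [$or_after_success]"

rm -rf "$sandbox"
```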

Write a find call to search for text files in a Unix environment.

Extend the command to print the total number of lines in these files.

Boolean Expressions

The search conditions of the find utility are Boolean expressions. A Boolean expression is a programming language statement. It produces a Boolean value when evaluated. This value equals either “true” or “false”.

The find condition is a statement of the utility’s language. It produces the “true” value if the found object meets its requirement. Otherwise, the condition produces “false”. If there are several conditions in the find call, they make a single compound Boolean expression.

We already met Boolean algebra when we considered the binary numeral system. This branch of mathematics studies logical operators. They differ from the arithmetic operations: addition, subtraction, multiplication, and division.

You can apply a logical operator to Boolean values or expressions. Using an arithmetic operation does not make sense in this case, because adding or subtracting Boolean values yields nothing useful. When you apply a logical operator, you get a condition with strict evaluation rules. This is how you wrote search conditions for the find utility. When you combine several conditions, you get a program with complex behavior.

An operand is an object of a logical operator. Boolean values and expressions can be operands.

Let’s consider Boolean expressions using an example. The example is not related to the find utility or Bash for simplicity. Imagine that you are programming a robot for a warehouse. Its job is to move boxes from point A to point B. You can write the following straightforward algorithm for the robot:

- Move to point A.

- Pick up the box at point A.

- Move to point B.

- Put the box at point B.

This algorithm does not have any conditions. It means that the robot performs each step independently of external events.

Now imagine that an obstacle appears in the robot’s way. For example, another robot got stuck there. Executing your algorithm leads to a collision of the robots in this case. You should add a condition to the algorithm to prevent the collision. For example, it can look like this:

- Move to point A.

- Pick up the box at point A.

- If there is no obstacle, move to point B. Otherwise, stop.

- Put the box at point B.

The third step of the algorithm is called a conditional statement. All modern programming languages have such a statement.

The conditional statement works according to the following algorithm:

- Evaluate the Boolean expression in the condition.

- If the expression produces “true”, perform the first action.

- If the expression produces “false”, perform the second action.

The robot evaluates the value of the Boolean expression “there is no obstacle” in our example. If there is an obstacle, the expression produces “false” and the robot stops. Otherwise, the robot moves to point B.

When writing the conditional statement, you can combine several Boolean expressions using logical operators. Here is an example. Suppose that the robot tries to pick up a box at point A, but there is no box. Then there is no reason for it to move to point B. You can check this situation in the conditional statement. Add the new Boolean expression there using logical AND (conjunction). Then the robot’s algorithm becomes like this:

- Move to point A.

- Pick up the box at point A.

- If there is a box AND no obstacle, move to point B. Otherwise, stop.

- Put the box at point B.

Logical operators produce Boolean values when evaluated. The result of a logical AND equals “true” when both operands are “true”. In our example, it happens when the robot has a box and there is no obstacle on its way. Otherwise, the result of logical AND equals “false”. It forces the robot to stop.

You have used two more logical operators when learning the find utility. These operators are OR (disjunction) and NOT (negation).

Actually, you have already applied logical NOT in the robot’s algorithm. It stays implicitly in the expression “there is no obstacle”. It equals the following negation: “there is NOT an obstacle”. You can specify the logical NOT in the algorithm explicitly this way:

- Move to point A.

- Pick up the box at point A.

- If there is a box AND there is NOT an obstacle, move to point B. Otherwise, stop.

- Put the box at point B.

You can always replace logical AND with OR if you make some extra changes. Let’s do it for our example but keep the robot’s behavior the same. You should add the negation to the first Boolean expression and remove it from the second one. Also, you have to change the order of actions in the conditional statement. If the condition produces “true”, the robot stops. If it produces “false”, the robot moves to point B. The new algorithm looks this way:

- Move to point A.

- Pick up the box at point A.

- If there is NOT a box OR there is an obstacle, stop. Otherwise, move to point B.

- Put the box at point B.

Read the new conditional statement carefully. The robot makes the same decisions as before. It stops if it has no box or if there is an obstacle in its way. However, you have replaced the logical AND with OR. This trick helps you to keep your conditional statements clear. Choose between logical AND and OR depending on your Boolean expressions. Pick the one that fits your case better.
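You can convince yourself that both forms of the condition lead to the same decisions by checking all four combinations of the operands. The following sketch does it in Bash. The function names may_move_and and must_stop_or are hypothetical, and the [[ ... ]] construct with the && and || operators is used here ahead of its introduction in the book.

```bash
# AND form: the robot may move if there is a box AND there is NOT an obstacle.
may_move_and() {
  local box=$1 obstacle=$2
  [[ $box == true && $obstacle != true ]]
}

# OR form: the robot must stop if there is NOT a box OR there is an obstacle.
must_stop_or() {
  local box=$1 obstacle=$2
  [[ $box != true || $obstacle == true ]]
}

mismatches=0
for box in true false; do
  for obstacle in true false; do
    if may_move_and "$box" "$obstacle"; then first="move"; else first="stop"; fi
    if must_stop_or "$box" "$obstacle"; then second="stop"; else second="move"; fi
    echo "box=$box obstacle=$obstacle -> AND form: $first, OR form: $second"
    [[ $first == "$second" ]] || mismatches=$((mismatches + 1))
  done
done

echo "mismatches: $mismatches"
```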

You wrote the Boolean expressions as English sentences in our example. Such sentences sound unnatural. You have to read them several times to understand them. This happens because natural human language is not suitable for writing Boolean expressions. It is not precise enough. Boolean algebra uses mathematical notation for that reason.

We have considered logical AND, OR and NOT. You will often deal with three more operators in programming:

- Equivalence

- Non-equivalence

- Exclusive OR

Table 2-5 explains them.

| Operator | Evaluation Rule |
|---|---|
| AND | It produces “true” when both operands are “true”. |
| OR | It produces “true” when any of the operands is “true”. It produces “false” when all operands are “false”. |
| NOT | It produces “true” when the operand is “false” and vice versa. |
| Exclusive OR (XOR) | It produces “true” when the operands have different values (true-false or false-true). It produces “false” when the operands are the same (true-true, false-false). |
| Equivalence | It produces “true” when the operands have the same values. |
| Non-equivalence | It produces “true” when the values of the operands differ. |
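The last three rows of the table can be expressed as string comparisons. This is a minimal sketch in Bash. The helper names xor and equiv are made up for the example, and the operands are the plain strings "true" and "false". For two Boolean operands, non-equivalence gives the same results as XOR, so the sketch does not define it separately.

```bash
# Each helper takes two operands ("true" or "false") and prints the result.
xor() {
  if [[ $1 != "$2" ]]; then echo true; else echo false; fi
}

equiv() {
  if [[ $1 == "$2" ]]; then echo true; else echo false; fi
}

# Print the truth table for all four combinations of the operands.
for a in true false; do
  for b in true false; do
    echo "a=$a b=$b XOR=$(xor "$a" "$b") EQUIVALENCE=$(equiv "$a" "$b")"
  done
done
```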

Try to memorize this table. It becomes easy once you use logical operators often.

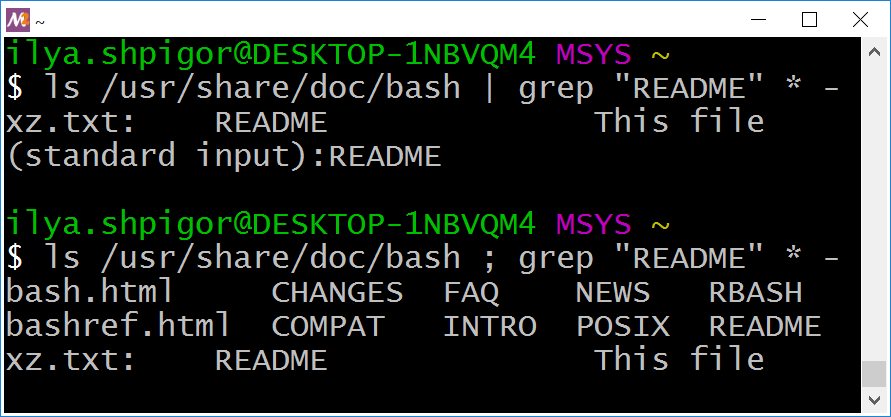

grep

The GNU utilities have one more searching tool besides find. It is called grep. This utility checks file contents when searching.

How do you choose the proper utility for searching? Use find to search for a file or directory by its name, path or metadata. Metadata is extra information about an object. Examples of file metadata are size, time of creation and last modification, and permissions. Use the grep utility to find a file when you know nothing about it except its contents.

Here is an example. It shows you how to choose the right utility for searching. Suppose that you are looking for a documentation file. You know that it contains the phrase “free software”. If you apply the find utility, the searching algorithm looks like this:

- Call find to list all the files with the README name.

- Open each file in a text editor and check if it has the phrase “free software”.

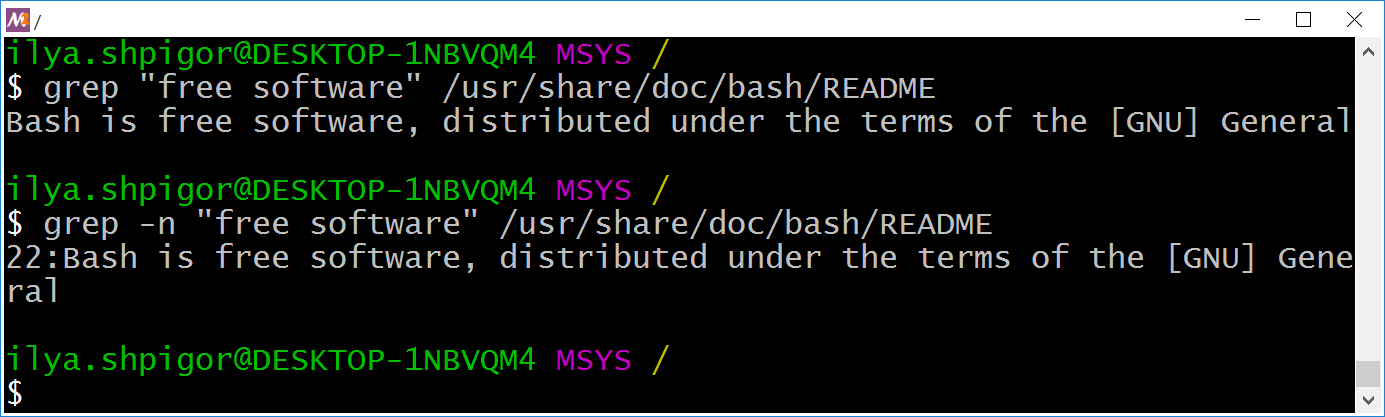

Using a text editor for checking dozens of files takes too much effort and time. You should perform several operations with each file manually: open it, activate the editor’s searching mode, type the “free software” phrase. The grep utility automates this task. For example, the following command finds all lines with the “free software” phrase in the specified README file:

grep "free software" /usr/share/doc/bash/README

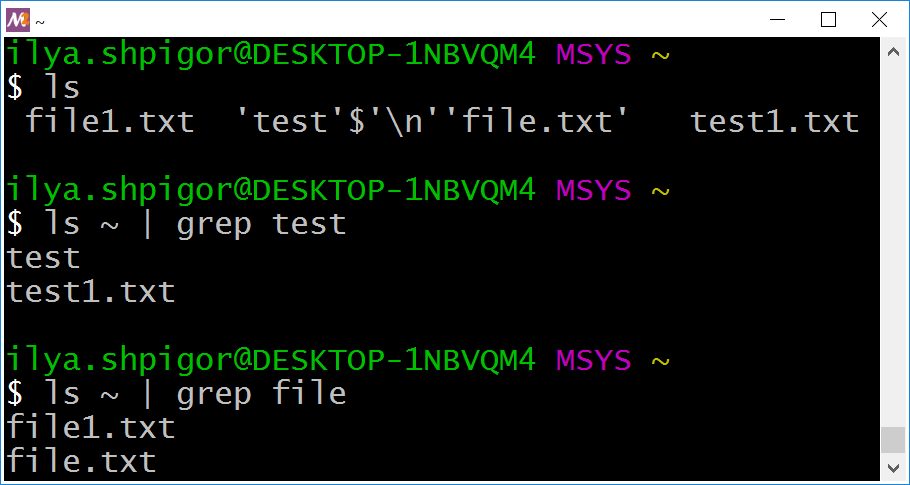

The first parameter of the utility is a string for searching. Always enclose it in double quotes. This way, you prevent Bash expansions and guarantee that the utility receives the string unchanged. Without the quotes, Bash splits the phrase into two separate parameters. This mechanism of splitting strings into words is called word splitting.

The second parameter of grep is a relative or absolute path to the file. If you specify a list of files separated by spaces, the utility processes them all. In the example, we passed the README file path only.

Figure 2-18 shows the result of the grep call.

You see all lines of the file where the utility found the specified phrase. The -n option adds line numbers to the grep output. It can help you when checking big text files. Add the option before the first parameter when calling the utility. Figure 2-18 shows the output in this case.
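Here is a small sketch of the -n option at work. It does not use the real README file. Instead, it writes three made-up lines into a temporary file and searches them.

```bash
sample=$(mktemp)
printf '%s\n' \
  "Bash is free software." \
  "It is distributed under the GPL." \
  "You can redistribute free software." > "$sample"

# Without -n, grep prints the matching lines only.
plain=$(grep "free software" "$sample")

# With -n, each matching line gets its line number as a prefix.
numbered=$(grep -n "free software" "$sample")

echo "$plain"
echo "---"
echo "$numbered"

rm -f "$sample"
```

The phrase occurs in the first and third lines, so these are the numbers that appear before the colons.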

We have learned how to use grep to find a string in the specified files. Now let’s apply the utility to solve our task. You are looking for the documentation files with the phrase “free software”. There are two ways to find them with the grep utility:

- Use Bash glob patterns.

- Use the file search mechanism of the grep utility.

The first method works well when you have all files for checking in the same directory. Suppose that you found two README files: one for Bash and one for the xz utility. You have copied them to the home directory with the names bash.txt and xz.txt. The following two commands find the file that contains the phrase “free software”:

cd ~

grep "free software" *

The first command changes the current directory to the user’s home. The second command calls the grep utility.

When calling grep, we have specified the asterisk for the target file path. This wildcard means any string. Bash expands all wildcards in the command before launching it. In our example, Bash replaces the asterisk with all files of the home directory. The resulting grep call looks like this:

grep "free software" bash.txt xz.txt

Launch both versions of the grep call: with the * pattern and with a list of two files. The utility prints the same result for both cases.
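The comparison can be rehearsed in a temporary directory instead of the real home directory. In this sketch, the contents of bash.txt and xz.txt are invented one-line placeholders.

```bash
sandbox=$(mktemp -d)
echo "Bash is free software." > "$sandbox/bash.txt"
echo "XZ Utils documentation." > "$sandbox/xz.txt"
cd "$sandbox"

# Bash expands the asterisk to "bash.txt xz.txt" before grep starts.
with_glob=$(grep "free software" *)

# The same call with the filenames written out by hand.
with_list=$(grep "free software" bash.txt xz.txt)

echo "$with_glob"
if [ "$with_glob" = "$with_list" ]; then
  echo "Both calls print the same result."
fi

cd - > /dev/null
rm -rf "$sandbox"
```

Because grep receives more than one file, it prefixes each found line with the name of the file.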

You can search for the phrase in a single command. Just exclude the cd call. Then add the home directory to the search pattern. You will get the following grep call:

grep "free software" ~/*

This command does not handle subdirectories. It means that the grep call does not check the files in the ~/tmp directory, for example.

There is a way to check how Bash expands a glob pattern. Use the echo command for that. Here are echo calls for checking our patterns:

echo *

echo ~/*

Run these commands. The first one lists files and subdirectories of the current directory. The second command does the same for the home directory.

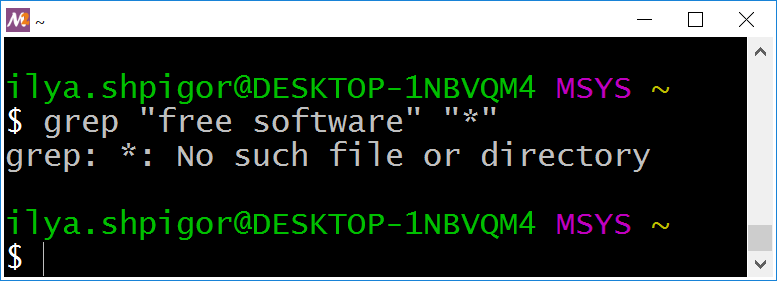

Do not enclose the glob patterns for file paths in double quotes. Here is an example of the wrong command:

grep "free software" "*"

Quotes prevent the Bash expansion. Therefore, Bash does not insert the filenames into the command but passes the asterisk to the grep utility. Unlike find, the utility does not process glob patterns itself. Thus, you will get an error like the one that Figure 2-19 shows.
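The effect of the quotes is easy to observe with echo and the exit status of grep. The sketch below uses a temporary directory with two empty placeholder files. It assumes GNU grep, which reports an error with the exit status 2.

```bash
sandbox=$(mktemp -d)
touch "$sandbox/bash.txt" "$sandbox/xz.txt"
cd "$sandbox"

# Without quotes, Bash replaces the asterisk with the filenames.
expanded=$(echo *)

# With quotes, the asterisk reaches the command unchanged.
literal=$(echo "*")

# grep receives a literal asterisk and looks for a file with that name.
if grep "free software" "*" 2> /dev/null; then
  status=0
else
  status=$?
fi

echo "unquoted: $expanded"
echo "quoted:   $literal"
echo "grep exit status with the quoted pattern: $status"

cd - > /dev/null
rm -rf "$sandbox"
```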

When expanding the * pattern, Bash ignores hidden files and directories. Therefore, the grep utility ignores them too in our example. Add the dot before the asterisk to get the glob pattern for hidden objects. It looks like .*. If you want to check all files at once, specify two patterns separated by a space. Here is an example grep call:

grep "free software" * .*

The second approach to searching files with grep is using its built-in mechanism. It traverses the directories recursively and checks all files there. The -r option enables this mechanism. When using this option, specify the search directory in the second parameter of the utility.

Here is an example of using the -r option:

grep -r "free software" .

This command finds the “free software” phrase in the files of the current directory. It processes the hidden objects too.

If you work on Linux or macOS, prefer the -R option instead of -r. It forces grep to follow symbolic links when searching. Here is an example:

grep -R "free software" .

You can specify the starting directory for searching by a relative or absolute path. Here are the examples for both cases:

grep -R "free software" ilya.shpigor/tmp

grep -R "free software" /home/ilya.shpigor/tmp
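The following sketch contrasts the glob approach with the recursive search. It creates a temporary directory with one file at the top level, one in a nested subdirectory and one hidden file. All names and contents are invented for the example, and the sketch assumes GNU grep.

```bash
sandbox=$(mktemp -d)
mkdir -p "$sandbox/tmp/deep"
echo "free software at the top" > "$sandbox/top.txt"
echo "free software in a subdirectory" > "$sandbox/tmp/deep/nested.txt"
echo "free software in a hidden file" > "$sandbox/.hidden"
cd "$sandbox"

# The glob pattern covers the top level only and skips hidden files.
# grep complains about the tmp directory, so the error output is discarded.
flat=$(grep "free software" * 2> /dev/null || true)

# The recursive search reaches the nested and hidden files too.
recursive=$(grep -r "free software" . | LC_ALL=C sort)

flat_count=$(printf '%s\n' "$flat" | wc -l)
recursive_count=$(printf '%s\n' "$recursive" | wc -l)

echo "$recursive"
echo "found with *: $flat_count, found with -r: $recursive_count"

cd - > /dev/null
rm -rf "$sandbox"
```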

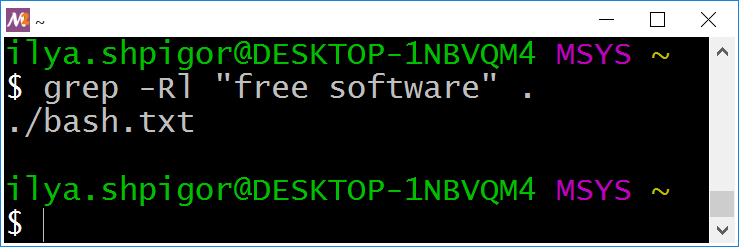

Suppose that you are interested in a list of files that contain a phrase. You do not need all occurrences of the phrase in each file. The -l option switches the grep utility into the mode you need. Here is an example of using it:

grep -Rl "free software" .

Figure 2-20 shows the result of this command.

You see a list of files where the phrase “free software” occurs at least once. Suppose that you need the opposite result: a list of files without the phrase. Use the -L option for finding them. Here is an example:

grep -RL "free software" .

The grep utility processes the text files only. Therefore, it deals well with the source code files. You can use the utility as an add-on to your code editor or IDE.
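A typical task with source code is to find out which files mention a phrase and which do not. The sketch below applies the -l and -L options to two tiny C files in a temporary directory. The file names and their contents are hypothetical, and the sketch assumes GNU grep.

```bash
sandbox=$(mktemp -d)
echo "/* This program is free software. */" > "$sandbox/main.c"
echo "int add(int a, int b) { return a + b; }" > "$sandbox/util.c"
cd "$sandbox"

# -l prints only the names of the files that contain the phrase.
with_phrase=$(grep -Rl "free software" . || true)

# -L prints only the names of the files that do not contain it.
without_phrase=$(grep -RL "free software" . || true)

echo "contains the phrase: $with_phrase"
echo "lacks the phrase:    $without_phrase"

cd - > /dev/null
rm -rf "$sandbox"
```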

You may like the grep utility so much that you want to process PDF and MS Office documents with it. Unfortunately, this approach does not work. The contents of these files are not plain text. They are stored in an encoded form. You need other utilities to process such files. Table 2-6 shows grep alternatives for non-text files.

| Utility | Features |
|---|---|
| pdftotext | It converts a PDF file into text format. |
| pdfgrep | It searches PDF files by their contents. |
| antiword | It converts an MS Office document into text format. |
| catdoc | It converts an MS Office document into text format. |
| xdoc2txt | It converts PDF and MS Office files into text format. |

Some of these utilities are available in the MSYS2 environment. Use the pacman package manager for installing them. The last chapter of the book describes how to use it.

Write a grep call to find system utilities with a free license.

Here are widespread licenses for open-source software:

1. GNU General Public License

2. MIT license

3. Apache license

4. BSD license

Command Information

We got acquainted with commands for navigating the file system. Each command has several options and parameters. We have covered the most common ones only. What if you need a rare feature that is missing in this book? You would need official documentation in this case.

All modern OSes and applications have documentation. However, you rarely need it when using the graphical interface. It happens because graphic elements are self-explanatory in most cases. Therefore, most PC users do not care about documentation.

When working with the CLI, the only way to learn about the available features of the software is by reading its documentation. Besides that, you do not have anything that gives you a quick hint. When using a CLI utility, it is crucial to know its basics. Negligence can lead to loss or corruption of your data.

The first versions of Unix had paper documentation. Using it was inconvenient and time-consuming. Soon it became even worse because the documentation volume grew rapidly. It exceeded the size of a single book. The Unix developers introduced a system called man page to solve the issue with documentation. Using this software, you can quickly find the required topic. It contains information about OS features and all installed applications.

The man page system is a centralized place to access documentation. Besides it, every program in Unix provides brief information about itself. For example, the Bash interpreter has its own documentation system. It is called help.

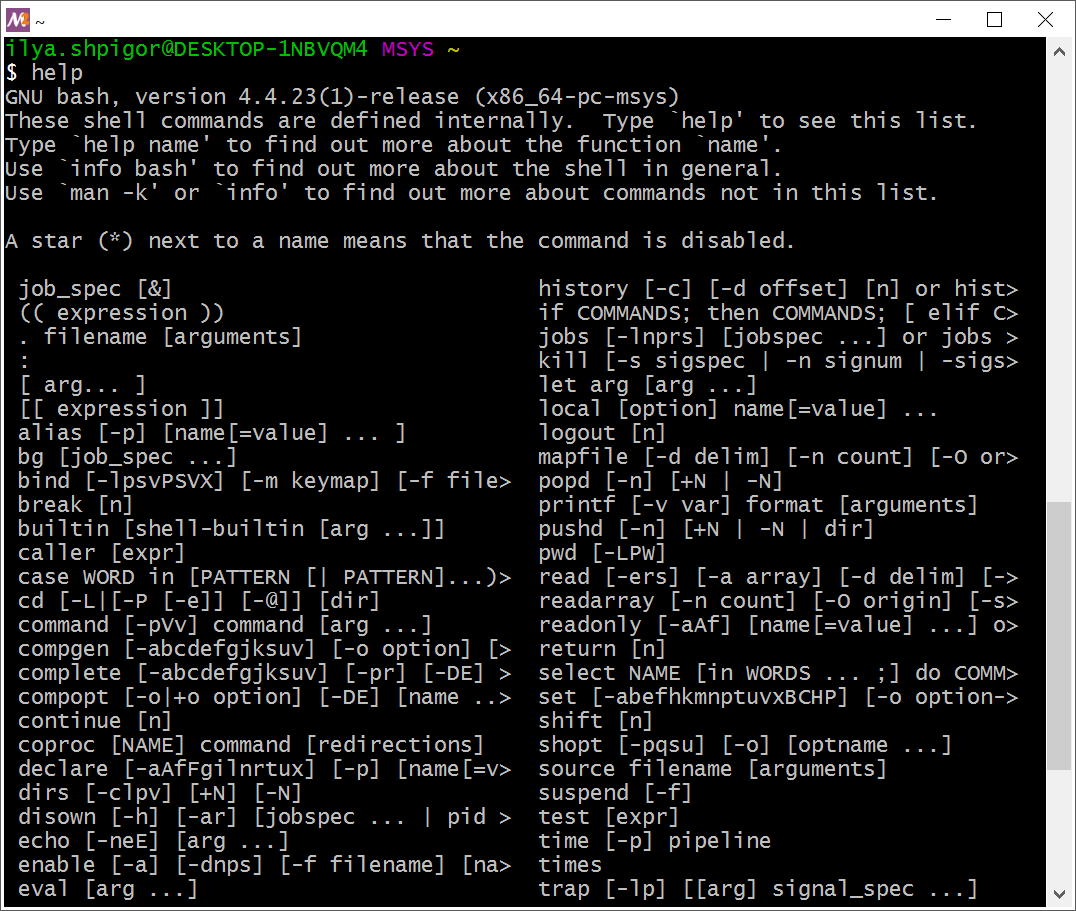

Suppose that you want to get a list of all Bash built-ins. Launch the help command without parameters. Figure 2-21 shows its output.

You see a list of all commands that Bash executes on its own. If some command is missing from this list, Bash calls a GNU utility or another program to execute it.

Here is an example. The cd command is present in the help list. It means that Bash executes it without calling another program. Now suppose you type the find command. It is missing from the help list. Therefore, Bash looks for an executable file with the find name on the disk drive. If it succeeds, Bash launches this file.

Where does Bash look for files that execute your commands? Bash has a list of paths where it searches for utilities and programs. The environment variable called PATH stores this list. A variable is a named area of memory. If you write a program in machine code and want to access a memory area, you should specify its address. A variable is a mechanism of a programming language. It allows you to use the variable name instead of the memory address. Therefore, you do not need to remember addresses, which are long numbers.

Bash stores about a hundred environment variables. They hold data that affect the interpreter’s behavior. Most of these data are system settings. We will consider Bash variables in the next chapter.

You can imagine the variable as a value that has a name. For example, you can say: “The time now is 12 hours”. “Time now” is the variable name. Its value equals “12 hours”. The computer stores it in memory at some address. You do not know the address. However, you can ask a computer the value of the “time now” variable. It returns you “12 hours”. This is how the variables work.

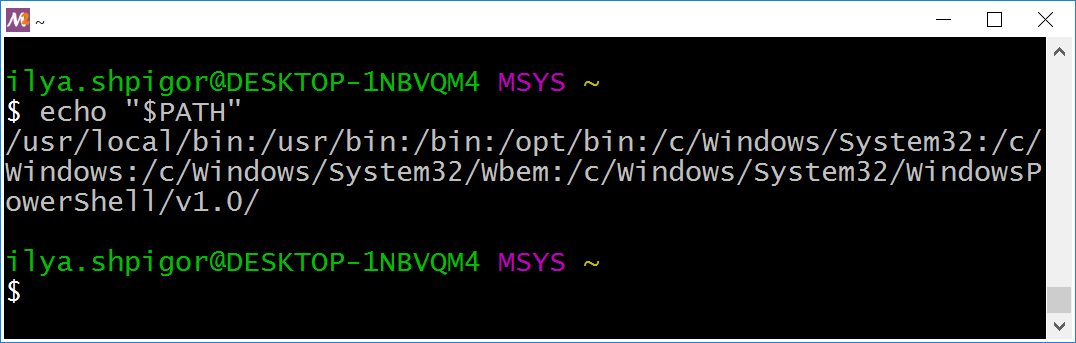

The echo command prints strings. It can also show you the value of a variable. For example, the following echo call prints the PATH variable:

echo "$PATH"

Why do we need the dollar sign $ before the variable name? The echo command receives the string on input and outputs it. For example, this echo call prints the text “123”:

echo 123

The dollar sign before a word tells Bash that it is a variable name. The interpreter handles it differently than a regular word. When Bash encounters a variable name in a command, it checks its variable list. If the name is present there, Bash inserts the variable value into the command. Otherwise, the interpreter places an empty string there.
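The following sketch shows this behavior on a variable that the example defines itself. The names greeting and no_such_variable are hypothetical, and the assignment syntax is used here ahead of its introduction in the next chapter.

```bash
# Define a variable for the demonstration.
greeting="hello"

# With the dollar sign, Bash inserts the value of the variable.
with_dollar=$(echo "$greeting")

# Without the dollar sign, echo receives a regular word.
without_dollar=$(echo "greeting")

# A name that is not in the variable list becomes an empty string.
unset no_such_variable
missing="[$no_such_variable]"

echo "$with_dollar"
echo "$without_dollar"
echo "$missing"
```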

Let’s come back to the echo command that prints the PATH variable. Figure 2-22 shows this output.

What does this line mean? It is a list of paths separated by colons. If you write each path on a new line, you get a list of directories.
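The exact list depends on your system. One way to print it yourself is to replace the colons with line breaks using the tr utility. This is a sketch, not the only approach. The sample value below is made up so that the result is predictable.

```bash
# Print the real PATH with one directory per line.
echo "$PATH" | tr ':' '\n'

# Count the entries of the real PATH.
entries=$(echo "$PATH" | tr ':' '\n' | wc -l)
echo "PATH has $entries entries"

# The same conversion applied to a made-up sample value.
sample="/usr/local/bin:/usr/bin:/bin"
split=$(echo "$sample" | tr ':' '\n')
echo "$split"
```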

The format of the PATH variable raises questions. Why does Bash use colons as delimiters instead of line breaks? Line breaks make it easy to read the list. The reason is the specific behavior of Bash and some utilities when handling line breaks. Colons allow developers to avoid potential problems.

Suppose that you want to locate an executable file of some program on the disk. The PATH variable gives you a hint of where to look. Then you can apply the find utility and locate the file. For example, the following command searches for the executable of the find utility:

find / -name find

The command shows you two locations of the find file:

/bin

/usr/bin

Both locations are present in the PATH variable.

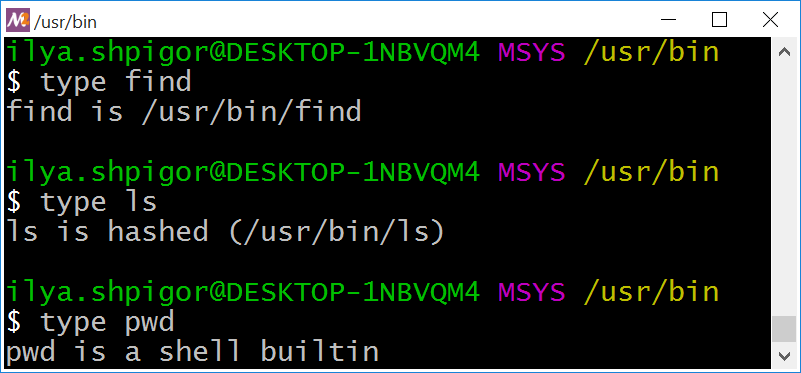

There is a much faster way to locate an executable on the disk. The type Bash built-in does it. Call the command and give it a program name. You will get the absolute path to the program’s executable. Figure 2-23 shows how it works.

You see that the /usr/bin directory stores the executables of find and ls utilities. The ls utility is marked as hashed. It means that Bash has remembered its path. When you call ls, the interpreter does not search the executable on the disk. Bash uses the stored path and calls the utility directly. If you move the hashed executable, Bash cannot find it anymore.

You can call the type command and pass a Bash built-in there. Then type tells you that Bash executes this command. Figure 2-23 shows an example of such output for the pwd command.
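The type built-in also has options that are convenient in scripts. The sketch below uses -t, which prints only the kind of the command, and -P, which prints only the path of the executable. It assumes that the find utility is installed and that no alias or function with the same name shadows it.

```bash
# The full report, as in the interactive examples.
type cd
type find

# -t prints a single word: "builtin" for built-ins, "file" for executables.
kind_cd=$(type -t cd)
kind_pwd=$(type -t pwd)
kind_find=$(type -t find)

# -P prints the path of the executable that Bash would launch.
path_find=$(type -P find)

echo "cd: $kind_cd, pwd: $kind_pwd, find: $kind_find"
echo "find executable: $path_find"
```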

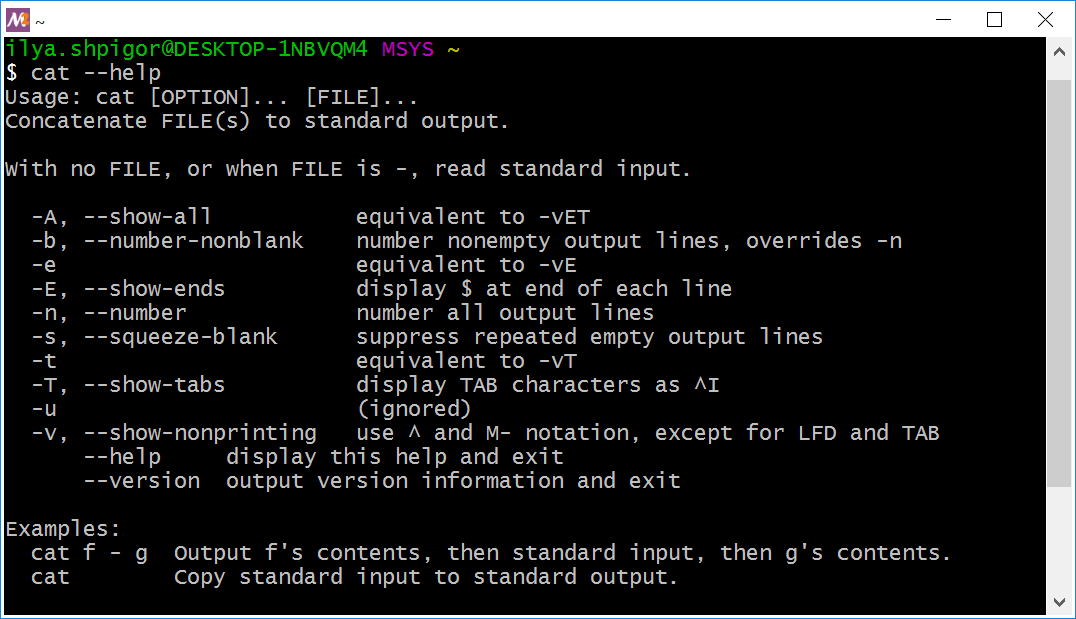

Suppose that you found the executable of the required utility. How do you know the parameters it accepts? Call the utility with the --help option. The option prints a brief help. Figure 2-24 shows this help for the cat utility.

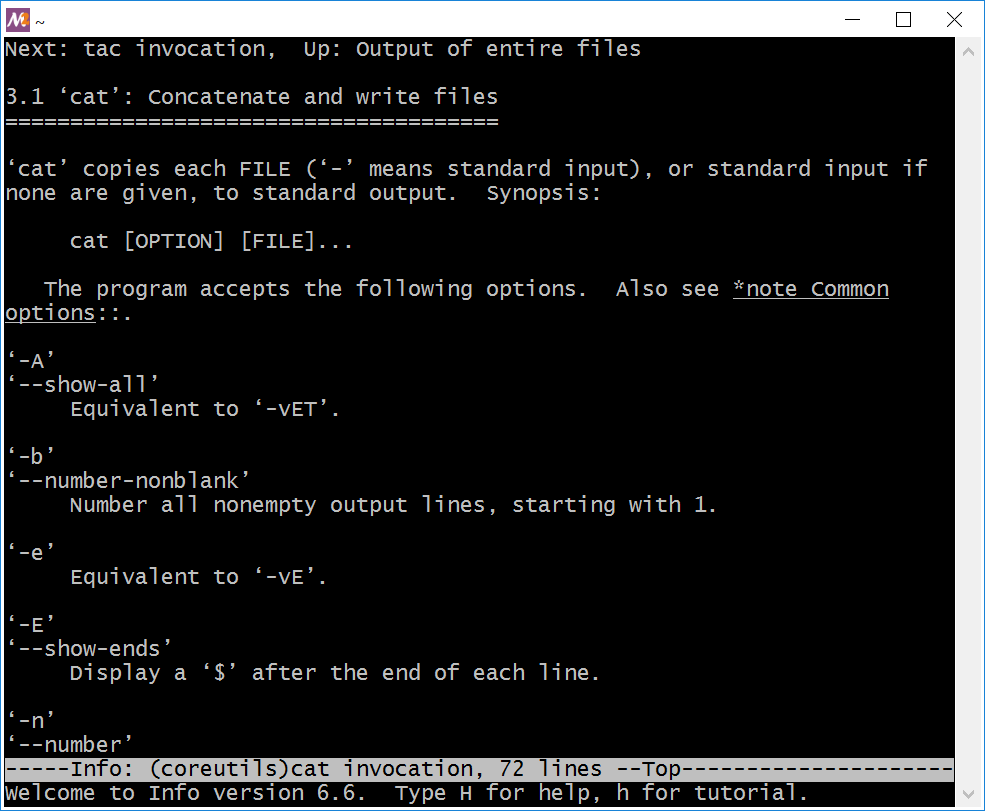

If the brief help is not enough, refer to the documentation system called info. Suppose you need examples of how to use the cat utility. The following command shows them:

info cat

Figure 2-25 shows the result of the command.

You see a program for reading text documents. Use the arrow keys, PageUp and PageDown to scroll the text. Press the Q key to end the program.

Developers of GNU utilities have created the info system. Before that, all Unix distributions used the man page system. It is also known as man. The capabilities of info and man are similar. The MSYS2 environment uses the info system, which is more modern.

Your Linux distribution may use man instead of info. Use it in the same way as info. For example, the following man call shows you help for the cat utility:

man cat

When you know which utility solves your task, it is easy to get help. What would you do if you don’t know how to solve the task? The best approach is to look for the answer on the Internet. You will find tips there. They are more concise than the manuals for GUI programs. You don’t need screenshots and videos that explain each action. Instead, you will find a couple of lines with command calls that do everything you need.

Actions on Files and Directories

You have learned how to find a file or directory on the disk. Now let’s discuss what you can do with it. If you have experience with the Windows GUI, you know the following actions on file system objects:

- Create

- Delete

- Copy

- Move or rename

Each of these actions has a corresponding GNU utility. Call them to manage the file system objects. Table 2-7 describes these utilities.

| Utility | Feature | Examples |
|---|---|---|
| mkdir | It creates the directory with the specified name and path. | mkdir ~/tmp/docs |
| | | mkdir -p tmp/docs/report |
| rm | It deletes the specified file or directory. | rm readme.txt |
| | | rm -rf ~/tmp |
| cp | It copies a file or directory. The first parameter is the current path. The second parameter is the target path. | cp readme.txt tmp/readme.txt |
| | | cp -r /tmp ~/tmp |
| mv | It moves or renames the file or directory specified by the first parameter. | mv readme.txt documentation.txt |
| | | mv ~/tmp ~/backup |

Each of these utilities has the --help option. It displays a brief help. Please read it before using a utility for the first time. You will find modes there that this book does not cover. Refer to the info or man system if you need more details.

It is time to consider the utilities of Table 2-7.

mkdir

The mkdir utility creates a new directory. Specify its target path in the first parameter of the command. Here is an example mkdir call for creating the docs directory:
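A minimal sketch of such a call. The text creates the docs directory in the home directory. Here a scratch directory (the hypothetical home variable) stands in for it, so running the sketch does not touch your real files:

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)

# Create the docs directory by its absolute path.
mkdir "$home/docs"
```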

We specified the absolute path to the docs directory. You can pass the relative path instead. It takes two steps:

- Navigate to the home directory.

- Call the mkdir utility there.

Here are the corresponding commands:

```shell
cd ~
mkdir docs
```

The utility has the -p option. It creates nested directories. Here is an example of when to use it. Suppose you want to move the documents into the ~/docs/reports/2019 path. However, the docs and reports directories do not exist yet. If you use mkdir in the default mode, you should call it three times to create each of the nested directories. Another option is to call mkdir once with the -p option like this:
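The call can be sketched as follows. A scratch directory (the hypothetical home variable) stands in for the home directory here:

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)

# Create docs, reports and 2019 with a single call.
mkdir -p "$home/docs/reports/2019"

# Calling it again is not an error: all directories already exist.
mkdir -p "$home/docs/reports/2019"
```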

This command succeeds even if the docs and reports directories already exist. It creates only the missing 2019 directory in this case.

rm

The rm utility deletes files and directories. Specify the object to delete by its absolute or relative path. Here are examples of rm calls:

```shell
rm report.txt
rm ~/docs/reports/2019/report.txt
```

The first call deletes the report.txt file in the current directory. The second one deletes it in the ~/docs/reports/2019 path.

The rm utility can remove several files at once. Specify a list of filenames separated by spaces in this case. Here is an example:
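A minimal sketch of such a call. The file names are hypothetical, and the sketch works in a scratch directory so that it does not touch your real files:

```shell
# Assumption: a scratch directory with two sample files.
cd "$(mktemp -d)"
touch report.txt notes.txt

# Remove both files with a single call.
rm report.txt notes.txt
```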

If you want to delete dozens of files, listing them all is inconvenient. Use a Bash glob pattern in this case. For example, you need to delete all text files whose names begin with the word “report”. The following rm call does it:
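The call presumably uses the report*.txt pattern. Here is a sketch with hypothetical sample files in a scratch directory:

```shell
# Assumption: a scratch directory with several sample files.
cd "$(mktemp -d)"
touch report.txt report-2019.txt report-2020.txt notes.txt

# The pattern matches every name that starts with "report"
# and ends with ".txt". The notes.txt file stays in place.
rm report*.txt
```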

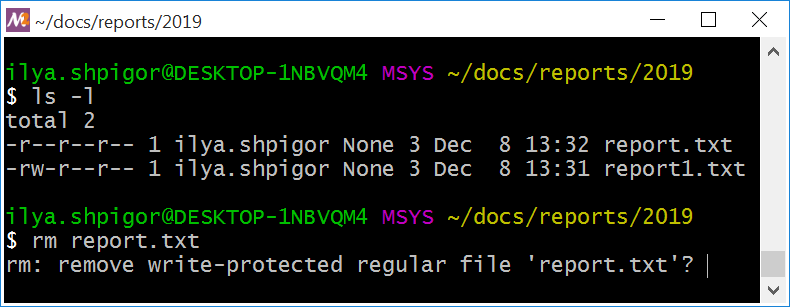

When removing a write-protected file, the rm utility shows you a warning. You can see how it looks like in Figure 2-26.

When you see such a warning, there are two options. You can press Y (short for yes) and Enter. Then the rm utility removes the file. Another option is to press N (no) and Enter. It cancels the operation.

If you want to suppress any rm warnings, use the -f or --force option. The utility removes files without confirmation in this case. Here is an example call:
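A sketch of such a call. It uses the chmod utility, which this section does not cover, to make a sample file write-protected first:

```shell
# Assumption: a scratch directory with a write-protected sample file.
cd "$(mktemp -d)"
touch report.txt
chmod a-w report.txt

# Remove the file without asking for confirmation.
rm -f report.txt
```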

The rm utility cannot remove a directory unless you pass one of two possible options there. The first option is -d or --dir. Use it for removing an empty directory. Here is an example:
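A minimal sketch, assuming an empty docs directory. A scratch directory (the hypothetical home variable) stands in for the home directory:

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)
mkdir "$home/docs"

# Remove the empty docs directory.
rm -d "$home/docs"
```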

If the directory contains files or subdirectories, use the -r or --recursive option to remove it. Such a call looks like this:
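A minimal sketch, assuming a docs directory with nested objects inside a scratch directory (the hypothetical home variable):

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)
mkdir -p "$home/docs/reports/2019"
touch "$home/docs/reports/2019/report.txt"

# Remove docs together with everything inside it.
rm -r "$home/docs"
```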

The -r option removes empty directories too. Therefore, you can always use the -r option when calling rm for a directory.

cp and mv

The cp and mv utilities copy and move file system objects. Their interfaces are almost the same. Specify the source file or directory in the first parameter. Pass the new path for the object in the second parameter.

Here is an example. You want to copy the report.txt file. First, navigate to its directory. Second, call the cp utility this way:
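A sketch of the call, run in a scratch directory with a sample report.txt file whose contents are made up:

```shell
# Assumption: a scratch directory with a sample file.
cd "$(mktemp -d)"
echo "Annual report" > report.txt

# Copy report.txt under a new name in the same directory.
cp report.txt report-2019.txt
```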

This command creates the new file report-2019.txt in the current directory. Both report-2019.txt and report.txt files have the same contents.

Suppose that you do not need the old file report.txt. You can remove it with the rm utility after copying. The second option is to combine copying and removing in a single command. The mv utility does that:
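A sketch of the call, again in a scratch directory with a made-up sample file:

```shell
# Assumption: a scratch directory with a sample file.
cd "$(mktemp -d)"
echo "Annual report" > report.txt

# Rename report.txt to report-2019.txt.
mv report.txt report-2019.txt
```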

This command does two things. First, it copies the report.txt file with the new name report-2019.txt. Second, it removes the old file report.txt.

Both cp and mv utilities accept relative and absolute paths. For example, let’s copy a file from the home directory to the ~/docs/reports/2019 path. Here is the command for that:
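A sketch with absolute paths. A scratch directory (the hypothetical home variable) stands in for the home directory:

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)
mkdir -p "$home/docs/reports/2019"
echo "Annual report" > "$home/report.txt"

# The second parameter is an existing directory.
cp "$home/report.txt" "$home/docs/reports/2019"
```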

This command copies the report.txt file into the ~/docs/reports/2019 directory. The copy has the same name as the original file.

You can repeat the copying command with relative paths. Navigate to the home directory and call the cp utility there. The following commands do it:

```shell
cd ~
cp report.txt docs/reports/2019
```

When copying a file between directories, you can specify the copy name. Here is an example:
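A sketch of such a call, with a scratch directory (the hypothetical home variable) in place of the home directory:

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)
mkdir -p "$home/docs/reports/2019"
echo "Annual report" > "$home/report.txt"

# The second parameter ends with the name of the copy.
cp "$home/report.txt" "$home/docs/reports/2019/report-2019.txt"
```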

This command creates a file copy with the report-2019.txt name.

Moving files works the same way as copying. For example, the following command moves the report.txt file:
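A sketch of the move, with a scratch directory (the hypothetical home variable) in place of the home directory:

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)
mkdir -p "$home/docs/reports/2019"
echo "Annual report" > "$home/report.txt"

# Move the file into the 2019 directory. It keeps its name.
mv "$home/report.txt" "$home/docs/reports/2019"
```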

The following command moves and renames the file at once:
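A sketch of moving and renaming in one call, with a scratch directory (the hypothetical home variable) in place of the home directory:

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)
mkdir -p "$home/docs/reports/2019"
echo "Annual report" > "$home/report.txt"

# Move the file and give it a new name at the same time.
mv "$home/report.txt" "$home/docs/reports/2019/report-2019.txt"
```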

You can rename a directory using the mv utility too. Here is an example:
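A sketch of such a call. The tmp directory and its notes.txt file are sample objects inside a scratch directory (the hypothetical home variable):

```shell
# Assumption: a scratch directory plays the role of the home directory (~).
home=$(mktemp -d)
mkdir "$home/tmp"
touch "$home/tmp/notes.txt"

# Rename the directory. Its contents stay inside.
mv "$home/tmp" "$home/backup"
```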

This command changes the name of the tmp directory to backup.

The cp utility cannot copy a directory when you call it in the default mode. Here is an example. Suppose you want to copy the directory /tmp with the temporary files to the home directory. You call cp this way:
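A sketch of such a call. Two scratch directories stand in for /tmp and the home directory (the hypothetical src and home variables):

```shell
# Assumption: src plays the role of /tmp, home plays the role of ~.
src=$(mktemp -d)
home=$(mktemp -d)
touch "$src/cache.dat"

# There is no -r option, so cp skips the directory
# and returns a non-zero exit status.
cp "$src" "$home" || echo "cp failed with exit status $?"
```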

This command fails.

You must add the -r or --recursive option when copying directories. Then the cp utility can handle them. This is the correct command for our example:
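A sketch of the recursive copy. Two scratch directories stand in for /tmp and the home directory (the hypothetical src and home variables):

```shell
# Assumption: src plays the role of /tmp, home plays the role of ~.
src=$(mktemp -d)
home=$(mktemp -d)
touch "$src/cache.dat"

# Copy the directory with all its contents.
# The copy appears inside the existing target directory.
cp -r "$src" "$home"
```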

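Suppose you copy or move a file, and the target directory already has a file with the same name. The cp and mv utilities overwrite it. Whether they ask you to confirm the operation first depends on your environment: they do so in the interactive mode, which the -i option enables. If you press Y and Enter in this case, the utilities overwrite the existing file.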

There is an option to suppress the confirmation when copying and moving files. Use the -f or --force option. It forces cp and mv utilities to overwrite the existing files. Here are examples:

```shell
cp -f ~/report.txt ~/tmp
mv -f ~/report.txt ~/tmp
```

Both commands overwrite the existing report.txt file in the tmp directory. You do not need to confirm these operations.

File System Permissions

Each utility of Table 2-7 checks the file system permissions before acting. These permissions define whether you are allowed to operate on the target object. Let’s consider this file system mechanism in detail.

The permissions restrict the user actions on the file system. The OS tracks these actions and checks whether they are allowed. Thanks to this feature, each user can access only their own files and directories. It also restricts access to the OS components.

The permissions allow several people to share one computer. This workflow was widespread from the 1960s until the advent of PCs. Hardware resources were expensive at that time. Therefore, several users had to share one computer.

Today most users have their own PC or laptop. However, the file system permissions are still relevant. They protect your Linux or macOS system from unauthorized access and malware.

Have a look at Figure 2-26 again. There you see the output of the ls utility with the -l option. It is a table. Each row corresponds to a file or directory. The columns have the following meaning:

- Permissions to the object.

- The number of hard links to the file or directory.

- Owner.

- Owner’s group.

- The object’s size in bytes.

- Date and time of the last change.

- File or directory name.

The permissions to the file report.txt equal the “-r--r--r--” string. What does it mean?

Unix stores permissions to a file object as a bitmask. The bitmask is a positive integer. When you store it in computer memory, the integer becomes a sequence of zeros and ones. Each bit of the mask keeps a value that is independent of the other bits. Therefore, you can pack several values into a single bitmask.

What values can you store in a bitmask? A set of an object’s properties is one example. Each bit of the mask corresponds to one property. If the property is present, the corresponding bit equals one. Otherwise, the bit equals zero.

Let’s come back to the file access rights. We can represent these rights as the following three attributes:

- Read permission.

- Write permission.

- Permission to execute.

If you apply a mask of three bits, you can encode these attributes there. Suppose a user has full access to the file. They can read, change, copy, remove or execute it. It means that the user has read, write, and execute permissions to the file. The writing permission allows changing the file and removing it. Therefore, the file permissions mask looks like this:

111

Suppose the user cannot read or execute the file. The first bit of the mask corresponds to the read access. The third bit is execution permission. When you set both these bits to zero, you restrict the file access. Then you get the following mask:

010

You should know the meaning of each bit in the mask if you want to operate it properly. The mask itself does not provide this information.
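Here is a sketch of reading such a mask with the shell arithmetic. It assumes the bit order from the text: read, write, execute. The variable names are hypothetical:

```shell
# The mask 010 from the text equals 2 in decimal.
mask=2

# Shift the wanted bit to the rightmost position and cut off the rest.
read_bit=$(( (mask >> 2) & 1 ))
write_bit=$(( (mask >> 1) & 1 ))
exec_bit=$(( mask & 1 ))

echo "read=$read_bit write=$write_bit execute=$exec_bit"
# prints: read=0 write=1 execute=0
```

The code works only because it knows which position holds which permission.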

Our mask with three bits is a simplified example of file permissions. The permissions in Unix follow the same idea. However, bitmasks there have more bits. The ls utility prints these access rights to the report.txt file:

-r--r--r--

This string is the bitmask. Here dashes correspond to zeroed bits. Latin letters match the set bits. If you follow this notation, you can convert the “-r--r--r--” string to the 0100100100 mask. If all bits of the mask equal ones, ls prints it as the “drwxrwxrwx” string.
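You can sketch this conversion with the sed utility, which this section does not cover. The variable names are hypothetical:

```shell
permissions="-r--r--r--"

# Replace every character except the dash with 1.
# Then replace every dash with 0.
mask=$(printf '%s\n' "$permissions" | sed 's/[^-]/1/g; s/-/0/g')

echo "$mask"    # prints: 0100100100
```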

The Unix permissions string has four parts. Table 2-8 explains their meaning.

| d | rwx | rwx | rwx |
|---|---|---|---|
| The directory attribute. | The permissions of the object’s owner. The owner is a user who has created the object. | The permissions of the user group that is attached to the object. By default, it is the group to which the owner belongs. | The permissions of all other users except the owner and the group attached to the object. |

You can imagine the Unix permissions as four separate bitmasks. Each of them corresponds to one part of Table 2-8. All bitmasks have a size of four bits. Using this approach, you can represent the “-r--r--r--” string this way:

0000 0100 0100 0100

The Latin letters in the Unix permissions have special meaning. First of all, they match bits that are set to one. The position of each bit defines the allowed action to the object. You do not need to remember the meaning of each position. The Latin letters give you a hint. For example, “r” means read access. Table 2-9 explains the remaining letters.

| Letter | Meaning for a file | Meaning for a directory |
|---|---|---|
| d | If the first character is a dash instead of d, the permissions correspond to a file. | The permissions correspond to a directory. |
| r | Access for reading. | Access for listing the directory contents. |
| w | Access for writing. | Access for creating, renaming or deleting objects in the directory. |
| x | Access for executing. | Access for navigating to the directory and accessing its nested objects. |
| - | The corresponding action is prohibited. | The corresponding action is prohibited. |

Suppose that all users of the system have full access to the file. Then its permissions look like this:

-rwxrwxrwx

If all users have full access to a directory, the permissions look this way:

drwxrwxrwx

The only difference is the first character. It is d instead of the dash.

Now you know everything you need to read the permissions in Figure 2-26. It shows two files: report.txt and report1.txt. All users can read the first one. Nobody can modify or execute it. All users can read the report1.txt file. Only the owner can change it. Nobody can execute it.
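You can reproduce a similar listing yourself. The sketch below relies on the chmod utility, which this section does not cover, to set the permissions of two sample files in a scratch directory:

```shell
# Assumption: a scratch directory with two empty sample files.
cd "$(mktemp -d)"
touch report.txt report1.txt

chmod a=r report.txt           # all users can only read the file
chmod u=rw,go=r report1.txt    # the owner can also change the file

# The first column of the output shows the permissions strings.
ls -l
```

The first column starts with -r--r--r-- for report.txt and with -rw-r--r-- for report1.txt.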

We have considered commands and utilities for operating the file system. When you call each of them, you specify the target object. You should have appropriate permissions to the object. Otherwise, your command fails. Table 2-10 shows the required permissions.

| Command | Required Bitmask | Required Permissions | Comment |
|---|---|---|---|
| ls | r-- | Reading | Applied for directories only. |
| cd | --x | Executing | Applied for directories only. |
| mkdir | -wx | Writing and executing | Applied for directories only. |
| rm | -w- | Writing | Specify the -r option for the directories. |
| cp | r-- | Reading | The target directory should have writing and executing permissions. |
| mv | r-- | Reading | The target directory should have writing and executing permissions. |
| Execution | r-x | Reading and executing | Applied for files only. |

Files Execution

Windows has strict rules for executable files. The file extension defines its type. The Windows loader runs only files with the EXE and COM extensions. These are compiled executables of programs. Besides them, you can run scripts. The script’s extension defines the interpreter that launches it. Windows cannot run the script if there is no installed interpreter for it. The possible extensions of the scripts are BAT, JS, PY, RB, etc.

Unix rules for executing files differ from Windows ones. Here you can run any file if it has permissions for reading and executing. Its extension does not matter, unlike Windows. For example, the file called report.txt can be executable.
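Here is a sketch that makes a report.txt file executable. It assumes that /bin/sh exists and that the scratch directory allows execution. The first line of the file names the interpreter, and the chmod utility sets the permissions. This section covers neither of these topics:

```shell
# Assumption: a scratch directory where running files is allowed.
cd "$(mktemp -d)"

# Write a tiny script into a file with the .txt extension.
printf '#!/bin/sh\necho "Hello from report.txt"\n' > report.txt

# Grant the owner read and execute permissions, then run the file.
chmod u+rx report.txt
./report.txt    # prints: Hello from report.txt
```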

There is no convention for extensions of the executable files in Unix. Therefore, you cannot deduce the file type from its name. Use the file utility to get it. The command receives the file path on input and prints its type. Here is an example of calling file: