S3 Recipes

Signing up for Amazon S3

Signing up for any of the Amazon Web Services is a two-step process. First, sign up for an Amazon Web Services account. Second, sign up for the specific service you want to use.

Signing up for Amazon Web Services

Note: If you have already signed up for Amazon Elastic Compute Cloud or any other Amazon Web Services, you can skip this step.

Go to http://aws.amazon.com. In the right sidebar, there is a link that says ‘sign up today’. Click on it.

You can use an already existing Amazon account, or sign up for a new account. If you use an existing account, you won’t have to enter your address or credit card information. Once you have signed up, Amazon will send you an e-mail, which you can safely ignore for now. You will also be taken to a page with a set of links to all of the different Amazon Web Services. Click on the ‘Amazon Simple Storage Service’ link.

Signing up for Amazon Simple Storage Service

If you have just followed the directions above, you will be looking at the correct web page. If not, go to http://s3.amazonaws.com.

On the right-hand side of the page, you will see a button labeled ‘Sign up for this web service’. Click on it, scroll to the bottom, and enter your credit card information. On the next page, enter your billing address. (If you are using an existing Amazon account, you won’t have to enter this information.) Once you are done, click on ‘complete sign up’.

Your access id and secret

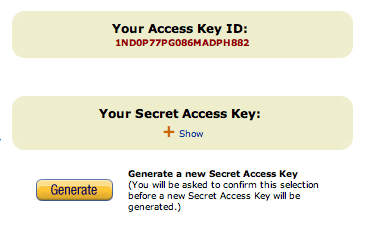

You will get a second e-mail from Amazon with directions on getting your account information and your access key. Click on the second link in the e-mail or go to http://aws-portal.amazon.com/gp/aws/developer/account/index.html?action=access-key.

On the right-hand side of the page, you will see your access key and secret access key. You will need to click on ‘show’ to see your secret access key.

Your access key is

Next Steps

Once you have signed up, you will want to install Ruby and the AWS/S3 gem (“Installing Ruby and the AWS/S3 Gem”) and set up s3sh (“Setting up the S3SH command line tool”) and s3lib (“Installing the S3Lib library”). These tools are used in almost all of the rest of the recipes.

Installing Ruby and the AWS/S3 Gem

The Problem

You want to use the AWS/S3 library to use the examples in this book. You will need to install Ruby first as well.

The Solution

The AWS/S3 gem is a Ruby library for talking to Amazon S3, written by Marcel Molina. It wraps the S3 REST interface in an elegant Ruby library. Full documentation for the library can be found at amazon.rubyforge.org. It also comes with s3sh, a command line tool for interacting with S3. We will be using s3sh and the AWS/S3 library in many of the S3 recipes, so it is worth your while to install them.

There are three steps to this process:

- Install Ruby

- Install RubyGems

- Install the AWS/S3 Gem

Installing Ruby

First, check that you don’t already have Ruby installed. Try typing

    $> ruby

at the command prompt. If it’s not installed, read the section for your operating system for installation directions. If none of those options work for you, you can download the source code or pre-compiled packages at http://www.ruby-lang.org/en/downloads/.

On Windows

On Windows, the easiest way to install Ruby and RubyGems is via the ‘One-click Ruby Installer’. Go to http://rubyinstaller.rubyforge.org/wiki/wiki.pl, download the latest version and run the executable. This will install Ruby and RubyGems.

On OS X

If you are using OS X 10.5 (Leopard) or later, Ruby and RubyGems will be installed when you install the Xcode developer tools that came with your computer. On earlier versions of OS X, Ruby will be installed, but you will have to install RubyGems yourself.

On Unix

You most likely have Ruby installed on your Unix machine. If not, use your package manager to get it.

On Redhat, yum install ruby

On Debian, apt-get install ruby

If you want to roll your own or are using a more esoteric version of Unix, download the source code or pre-compiled packages at http://www.ruby-lang.org/en/downloads/.

Installing RubyGems

RubyGems is the package manager for Ruby. It allows you to easily install, uninstall and upgrade packages of Ruby code. Before trying to install it, check that you don’t already have RubyGems installed. Try typing

    $> gem

at the command prompt. If it’s not installed, then do the following:

- Download the latest version of RubyGems from RubyForge at http://rubyforge.org/frs/?group_id=126

- Uncompress the package you downloaded into a directory

- Change into that directory and run the setup program

    $> ruby setup.rb

Installing the AWS/S3 gem

Once you have Ruby and RubyGems installed, installing the Amazon Web Services S3 gem is simple. Just type

    $> gem install aws-s3

or

    $> sudo gem install aws-s3

at the command prompt. You should see something similar to this:

    $> sudo gem install aws-s3
    Successfully installed aws-s3-0.4.0
    1 gem installed
    Installing ri documentation for aws-s3-0.4.0...
    Installing RDoc documentation for aws-s3-0.4.0...

Setting up the S3SH command line tool

One of the great tools that comes with the AWS/S3 gem is the s3sh command line tool. You need to have Ruby and the AWS/S3 gem installed (“Installing Ruby and the AWS/S3 Gem”) before going any further with this recipe.

Once you have installed the AWS/S3 gem, you should be able to start up s3sh by typing s3sh at the command prompt. After a few seconds, you will see a new prompt that looks like ‘>>’. You can use the Base.connected? command from the AWS/S3 library to see if you are connected to S3.

    $> s3sh
    >> Base.connected?
    => false
    >>

The Base.connected? command is returning false, telling us that you are not connected to S3. To connect to S3, you need to provide your authentication information: your AWS ID and your AWS secret. There are two ways to do this: the hard way and the easy way. Let’s do the hard way first.

The hard way isn’t all that hard. You use the Base.establish_connection! command from the AWS/S3 library to connect to S3.

    >> Base.establish_connection!(:access_key_id => 'your AWS ID',
                                  :secret_access_key => 'your AWS secret')
    >> Base.connected?
    => true
    >>

The hard part is that you’ll have to do that every time you start up s3sh. If you’re lazy like me, you can avoid this by setting two environment variables. AMAZON_ACCESS_KEY_ID should be set to your AWS ID, and AMAZON_SECRET_ACCESS_KEY should be set to your AWS secret. I’m not going to go into the gory details of how you do this. If you have them set correctly, you will automatically be authenticated with S3 when you start up s3sh.

    $> env | grep AMAZON
    AMAZON_ACCESS_KEY_ID=my_aws_id
    AMAZON_SECRET_ACCESS_KEY=my_aws_secret
    $> s3sh
    >> Base.connected?
    => true
    >>
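If s3sh still reports that you are not connected, it can help to check that Ruby actually sees both variables. The following is a minimal sketch that uses only Ruby’s built-in ENV object; the helper name missing_s3_credentials is made up for this illustration and is not part of the AWS/S3 library.

```ruby
# Names of the two environment variables described above.
REQUIRED_S3_VARIABLES = %w[AMAZON_ACCESS_KEY_ID AMAZON_SECRET_ACCESS_KEY]

# Hypothetical helper (not part of AWS/S3): returns the names of the
# required variables that are unset or empty in the given environment.
def missing_s3_credentials(env = ENV)
  REQUIRED_S3_VARIABLES.select { |name| env[name].nil? || env[name].empty? }
end

missing = missing_s3_credentials
if missing.empty?
  puts "Both AWS credentials are set."
else
  puts "Not set: #{missing.join(', ')}"
end
```

Run it from the same terminal session you plan to start s3sh from, since environment variables are inherited from the shell.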

Now that you are connected, you can play around a little. Try the following recipes for some inspiration:

Installing the S3Lib library

The Problem

You want to use S3Lib to follow along with the recipes or to fool around with S3 requests.

The Solution

Install the S3Lib gem with one of the following commands. Use the sudo version if you’re on a Unix or OS X system, the non-sudo version if you’re on Windows or using rvm or rbenv.

    $> sudo gem install s3lib

    C:\> gem install s3lib

Once you have the gem installed, follow the directions in “Setting up the S3SH command line tool” to set up your environment variables.

Discussion

Test out your setup by opening up an s3lib session and trying the following:

    $> s3lib
    >> S3Lib.request(:get, '').read
    => "<?xml version=\"1.0\" encoding=\"UTF-8\"?>
    <ListAllMyBucketsResult xmlns=\"http://s3.amazonaws.com/doc/2006-03-01/\">
    ...
    </ListAllMyBucketsResult>"

If you get a nice XML response showing a list of all of your buckets, everything is working properly.

If you get something that looks like this, then you haven’t set up the environment variables correctly:

    $> s3lib
    >> S3Lib.request(:get, '')
    S3Lib::S3ResponseError: 403 Forbidden
    amazon error type: SignatureDoesNotMatch
            from /Library/Ruby/Gems/1.8/gems/s3-lib-0.1.3/lib/s3_authenticator.rb:39:in `request'
            from (irb):1

Make sure you’ve followed the directions in “Setting up the S3SH command line tool”, then try again.

Making a request using S3Lib

The Problem

You want to make requests to S3 and receive back unprocessed XML results. You might just be experimenting, or you might be using S3Lib as the basis for an S3 library.

The Solution

Make sure you’ve installed S3Lib as described in “Installing the S3Lib library”. Then, require the S3Lib library and use S3Lib.request to make your request.

Here’s an example script:

    #!/usr/bin/env ruby

    require 'rubygems'
    require 's3lib'

    puts S3Lib.request(:get, '').read

To use S3Lib in an interactive shell, use irb, requiring s3lib when you invoke it:

    $> irb -r s3lib
    >> puts S3Lib.request(:get, '').read
    <?xml version="1.0" encoding="UTF-8"?>
    <ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
    ...
    </ListAllMyBucketsResult>

Discussion

The S3Lib.request method takes three arguments, the first two of which are required. The first is the HTTP verb that will be used to make the request. It can be :get, :put, :post, :delete or :head. The second is the URL that you will be making the request to. The final argument is the params hash, which is used to add headers or a body to the request.

If you want to create an object on S3, you make a PUT request to the object’s URL. You will need to use the params hash to add a body (the content of the object you are creating) to the request. You will also need to add a content-type header to the request. Here’s a request that creates an object with a key of new.txt in the bucket spatten_test_bucket with a body of ‘this is a new text file’ and a content type of ‘text/plain’.

    S3Lib.request(:put, 'spatten_test_bucket/new.txt',
                  :body => "this is a new text file",
                  'content-type' => 'text/plain')

The response you get back from an S3Lib.request is a Ruby IO object. If you want to see the actual response, use .read on the response. If you want to read it more than once, you’ll need to rewind between reads:

    $> irb -r s3lib
    >> response = S3Lib.request(:get, '')
    => #<StringIO:0x11c7edc>
    >> puts response.read
    <?xml version="1.0" encoding="UTF-8"?>
    <ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
    ...
    </ListAllMyBucketsResult>
    >> puts response.read

    >> response.rewind
    >> puts response.read
    <?xml version="1.0" encoding="UTF-8"?>
    <ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
    ...
    </ListAllMyBucketsResult>
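The read-then-rewind behavior comes from StringIO itself, not from S3Lib, so you can try it without touching S3. This small sketch uses a hand-made StringIO as a stand-in for a real response; the XML string in it is an arbitrary placeholder.

```ruby
require 'stringio'

# Stand-in for an S3 response body; a real one would come from S3Lib.request.
response = StringIO.new("<ListAllMyBucketsResult/>")

first  = response.read   # reads to the end of the stream
second = response.read   # nothing left to read, so this is an empty string
response.rewind          # move back to the start of the stream
third  = response.read   # the full body again

puts first
puts second.empty?
puts third == first
```

If you need the body more than once, it is often simpler to read it once into a variable and reuse the string.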

Getting the response with AWS/S3

The Problem

You have made a request to S3 using the AWS/S3 library, and you want to see the response status and/or the raw XML response.

The Solution

Use Service.response to get both:

    $> s3sh
    >> Bucket.find('spattentemp')
    >> Service.response
    => #<AWS::S3::Bucket::Response:0x9759530 200 OK>
    >> Service.response.code
    => 200
    >> Service.response.body
    => "<?xml version=\"1.0\" encoding=\"UTF-8\"?>
    <ListBucketResult xmlns=\"http://s3.amazonaws.com/doc/2006-03-01/\">
      <Name>spattentemp</Name>
      <Prefix></Prefix>
      <Marker></Marker>
      <MaxKeys>1000</MaxKeys>
      <IsTruncated>false</IsTruncated>
      <Contents>
        <Key>acl.rb</Key>
        <LastModified>2008-09-12T18:45:27.000Z</LastModified>
        <ETag>"87e54e8253f2be98ec8f65111f16980d"</ETag>
        <Size>4141</Size>
        <Owner>
          <ID>9d92623ba6dd9d7cc06a7b8bcc46381e7c646f72d769214012f7e91b50c0de0f</ID>
          <DisplayName>scottpatten</DisplayName>
        </Owner>
        <StorageClass>STANDARD</StorageClass>
      </Contents>

      ....

      <Contents>
        <Key>service.rb</Key>
        <LastModified>2008-09-12T18:45:22.000Z</LastModified>
        <ETag>"98b9dce82771bbfec960711235c2d445"</ETag>
        <Size>455</Size>
        <Owner>
          <ID>9d92623ba6dd9d7cc06a7b8bcc46381e7c646f72d769214012f7e91b50c0de0f</ID>
          <DisplayName>scottpatten</DisplayName>
        </Owner>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
    </ListBucketResult>"

Discussion

There are a lot of other useful methods that Service.response responds to. Two you might use are Service.response.parsed, which returns a hash obtained from parsing the XML, and Service.response.server_error?, which returns true if the response was an error and false otherwise.

    >> Service.response.parsed
    => {"prefix"=>nil, "name"=>"spattentemp", "marker"=>nil, "max_keys"=>1000,
        "is_truncated"=>false}
    >> Service.response.server_error?
    => false

Installing the Firefox S3 Organizer

The Problem

You want a GUI for your S3 account, and you’ve heard the S3 Firefox Organizer is pretty good.

The Solution

In Firefox, go to http://addons.mozilla.org and search for ‘amazon s3 organizer’. Click on the ‘Add to Firefox’ button for the ‘Amazon S3 Firefox Organizer (S3Fox)’. Follow the installation instructions, and then restart Firefox.

There will now be a ‘S3 Organizer’ entry in the Tools menu. Click on that, and you’ll see something like this:

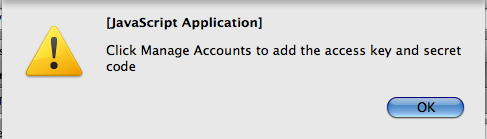

Figure 3.1. S3Fox alert box

Click on the ‘Manage Accounts’ button and then enter a name for your account along with your Access Key and Secret Key. After clicking on ‘Close’, you should see a list of your buckets.

Discussion

For a list of tools that work with S3, including some other GUI applications, see this blog post at elastic8.com: http://www.elastic8.com/blog/tools_for_accessing_using_to_backup_your_data_to_and_from_s3.html

Working with multiple S3 accounts

The Problem

If you’re like me, you have a number of clients, all with different S3 accounts. Using your command line tools to work with their accounts can be annoying, as you have to copy and paste their access_key and amazon_secret_key into the correct environment variables every time you change accounts. This recipe provides a quick way of switching accounts.

The Solution

The first thing you need to do is create a file called .s3_keys.yml in your home directory. This is a file in the YAML format (YAML stands for “YAML Ain’t Markup Language”; the official web site for YAML is http://www.yaml.org/). Make an entry in the file for each S3 account you have. It should look something like this:

Example 3.4. .s3_keys.yml <<(code/working_with_multiple_s3_accounts_recipe/.s3_keys.yml)

The s3sh_as program

Now we need a program that will read the .s3_keys.yml file, grab the correct set of keys, set them in the environment and then open up an s3sh shell. Here’s something that does the trick:

Example 3.5. s3sh_as <<(code/working_with_multiple_s3_accounts_recipe/s3sh_as)
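To make the idea concrete, here is a rough sketch of the lookup step such a program needs. It is not the s3sh_as listing referred to above. The YAML layout, the key names amazon_access_key_id and amazon_secret_access_key, and the helper s3_environment_for are all assumptions made for this illustration, so adjust them to match your own .s3_keys.yml.

```ruby
require 'yaml'

# Assumed layout for ~/.s3_keys.yml: one top-level entry per account,
# each holding the two keys. The key names are hypothetical.
SAMPLE_KEYS_YAML = <<YAML
personal:
  amazon_access_key_id: PERSONAL_ID
  amazon_secret_access_key: PERSONAL_SECRET
client_1:
  amazon_access_key_id: CLIENT_1_ID
  amazon_secret_access_key: CLIENT_1_SECRET
YAML

# Hypothetical helper: returns the environment variables for one account.
def s3_environment_for(account, yaml_text)
  accounts = YAML.load(yaml_text)
  keys = accounts[account] or raise ArgumentError, "no such account: #{account}"
  {
    'AMAZON_ACCESS_KEY_ID'     => keys['amazon_access_key_id'],
    'AMAZON_SECRET_ACCESS_KEY' => keys['amazon_secret_access_key']
  }
end

env = s3_environment_for('client_1', SAMPLE_KEYS_YAML)
puts env['AMAZON_ACCESS_KEY_ID']
```

A wrapper script could read the real file with File.read, copy the returned hash into ENV with ENV.update, and then start s3sh with exec.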

Discussion

To use s3sh_as, put s3sh_as somewhere in your path, and then call it like this:

    $> s3sh_as <name of your S3 account>

For example, if I wanted to use my personal account, I would type

    $> s3sh_as personal

If I wanted to do some work on client_1’s account, I would type

    $> s3sh_as client_1

If you don’t want to type the code in yourself, then just install the S3Lib gem.

    $> sudo gem install s3lib

When you install the S3Lib gem, a version of s3sh_as is automatically installed. The s3lib program is also installed when you install the S3Lib gem. This program, which provides a shell to play around with the S3Lib library, will read the .s3_keys.yml file just like s3sh_as, so you can use it to access multiple accounts as well.

Accessing your buckets through virtual hosting

The Problem

You want to access your buckets as either bucketname.s3.amazonaws.com or as some.other.hostname.com.

The Solution

If you make a request with the hostname s3.amazonaws.com, the bucket is taken as everything before the first slash in the path of the URI you pass in. The object key is everything after the first slash. For example, take a GET request to http://s3.amazonaws.com/somebucket/object/name/goes/here. The host is s3.amazonaws.com and the path is somebucket/object/name/goes/here. Since the host is s3.amazonaws.com, S3 parses the path and finds that the bucket is somebucket and the object key is object/name/goes/here.

If the hostname is not s3.amazonaws.com, then S3 will parse the hostname to find the bucket and use the full path as the object key. This is called virtual hosting. There are two ways to use virtual hosting. The first is to use a sub-domain of s3.amazonaws.com. If I make a request to http://somebucket.s3.amazonaws.com, the bucket name will be set to somebucket. If you include a path in the URL, this will be the object key. http://somebucket.s3.amazonaws.com/some.key is the URL for the object with a key of some.key in the bucket somebucket.

The second method of doing virtual hosting uses DNS aliases. You name a bucket some domain or sub-domain that you own, and then point the DNS for that domain or subdomain to the proper subdomain of s3.amazonaws.com. For example, I have a bucket called assets0.plotomatic.com, which has its DNS aliased to assets0.plotomatic.com.s3.amazonaws.com. Any requests to http://assets0.plotomatic.com will automagically be pointed at my bucket on S3.
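The three cases can be summarized in a few lines of Ruby. This is only a sketch of the rules as described in this recipe, not code from S3 or from any library; the helper name bucket_and_key is made up for the illustration.

```ruby
S3_HOST = 's3.amazonaws.com'

# Hypothetical helper that mirrors the rules described above.
# Returns [bucket, key]; either may be nil when it is absent.
def bucket_and_key(host, path)
  path = path.sub(%r{\A/}, '')                # drop the leading slash
  if host == S3_HOST
    bucket, key = path.split('/', 2)          # bucket is everything before the first slash
  elsif host.end_with?(".#{S3_HOST}")
    bucket = host[0...-(S3_HOST.length + 1)]  # sub-domain of s3.amazonaws.com
    key = path
  else
    bucket = host                             # DNS alias: the bucket is named after the host
    key = path
  end
  key = nil if key.nil? || key.empty?
  [bucket, key]
end

p bucket_and_key('s3.amazonaws.com', '/somebucket/object/name/goes/here')
p bucket_and_key('somebucket.s3.amazonaws.com', '/some.key')
p bucket_and_key('assets0.plotomatic.com', '/robots.txt')
```

In the last call the whole hostname is the bucket name, which is why a bucket used with a DNS alias has to be named after the domain.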

Discussion

The ability to do virtual hosting is really useful in a lot of cases. It’s used for hosting static assets for a website (see “Using S3 as an asset host”) or whenever you want to obscure the fact that you are using S3.

One other benefit is that it allows you to put things in the root directory of a site you are serving. Things like robots.txt and crossdomain.xml are expected to be in the root, and there’s no way to do that without using virtual hosting.

There’s not room here to explain how to set up DNS aliasing for every domain registrar out there. Look for help on setting up DNS aliases or CNAME records. This help page from Blogger.com gives instructions for a few common registrars: http://help.blogger.com/bin/answer.py?hl=en-ca&answer=58317

Creating a bucket

The Problem

You want to create a new bucket.

The Solution

To create a bucket, you make a PUT request to the bucket’s name, like this:

    PUT /my_new_bucket
    Host: s3.amazonaws.com
    Content-Length: 0
    Date: Wed, 13 Feb 2008 12:00:00 GMT
    Authorization: AWS some_id:some_authentication_string

To make the authenticated request using the s3lib library:

    #!/usr/bin/env ruby
    require 'rubygems'
    require 's3lib'

    response = S3Lib.request(:put, '/my_new_bucket')

To create a bucket in s3sh, you use the Bucket.create command:

    $> s3sh
    >> Bucket.create('my_new_bucket')
    => true

Creating buckets virtual hosted style

You can also make the request using a virtual hosted bucket by setting the Host header to the virtual hosted bucket’s hostname:

    PUT /
    Host: mynewbucket.s3.amazonaws.com
    Content-Length: 0
    Date: Wed, 13 Feb 2008 12:00:00 GMT
    Authorization: AWS some_id:some_authentication_string

There’s no way to do this using s3sh, but there’s no real reason why you need to create a bucket using virtual hosting. Here’s how you make the PUT request to a virtual hosted bucket using the s3lib library:

    #!/usr/bin/env ruby
    require 's3lib'

    response = S3Lib.request(:put, '/',
                             {'host' => 'mynewbucket.s3.amazonaws.com'})

Remember that hostnames cannot contain underscores (‘_’), so you won’t be able to create or use a bucket named ‘my_new_bucket’ using virtual hosting.
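If you want to catch this kind of problem before making a request, a rough check along the following lines can help. The rules encoded here are a simplified assumption (3 to 63 characters, dot-separated labels of lowercase letters, digits and hyphens); they are not taken from this recipe, and the helper name is hypothetical.

```ruby
# Rough check: can this bucket name be used as part of a hostname?
# Simplified assumption: 3 to 63 characters, made of dot-separated labels
# that contain only lowercase letters, digits and hyphens, and that
# start and end with a letter or digit.
def virtual_hostable_bucket_name?(name)
  return false unless (3..63).include?(name.length)
  # The -1 keeps empty labels, so names like "a..b" or "abc." are rejected.
  name.split('.', -1).all? do |label|
    label =~ /\A[a-z0-9]([a-z0-9-]*[a-z0-9])?\z/
  end
end

puts virtual_hostable_bucket_name?('mynewbucket')
puts virtual_hostable_bucket_name?('my_new_bucket')
```

The first call prints true and the second prints false, because the underscore is not allowed in a hostname label.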

Errors

If you try to create a bucket that is already owned by someone else, Amazon will return a 409 Conflict error. In s3sh, an AWS::S3::BucketAlreadyExists error will be raised.

    $> s3sh
    >> Bucket.create('not_my_bucket')
    AWS::S3::BucketAlreadyExists: The requested bucket name is not available.
    The bucket namespace is shared by all users of the system. Please select a different name and try again.
            from /opt/local/lib/ruby/gems/1.8/gems/aws-s3-0.4.0/bin/../lib/aws/s3/error.rb:38:in `raise'
            from /opt/local/lib/ruby/gems/1.8/gems/aws-s3-0.4.0/bin/../lib/aws/s3/base.rb:72:in `request'
            from /opt/local/lib/ruby/gems/1.8/gems/aws-s3-0.4.0/bin/../lib/aws/s3/base.rb:83:in `put'
            from /opt/local/lib/ruby/gems/1.8/gems/aws-s3-0.4.0/bin/../lib/aws/s3/bucket.rb:79:in `create'
            from (irb):1

Discussion

Since a bucket is created by a PUT request, the request is idempotent: you can issue the same PUT request multiple times and it has the same effect each time. In other words, Bucket.create won’t complain if you try to create one of your buckets again:

    >> Bucket.create('some_bucket_that_does_not_exist')
    => true
    >> Bucket.create('some_bucket_that_does_not_exist') # It exists now, but that's okay
    => true

This is useful if you are not sure that a bucket exists. There’s no need to write something like this:

    def function_that_requires_a_bucket
      begin
        Bucket.find('some_bucket_that_may_or_may_not_exist')
      rescue AWS::S3::NoSuchBucket
        Bucket.create('some_bucket_that_may_or_may_not_exist')
      end
      # ... rest of method ...
    end

You can just use Bucket.create:

    def function_that_requires_a_bucket
      Bucket.create('some_bucket_that_may_or_may_not_exist')
      # ... rest of method ...
    end

One last thing to note is that Bucket.create returns true if it is successful and raises an error otherwise. Bucket.create does not return the newly created bucket. If you want to create a bucket and then assign it to a variable, you need to use Bucket.find to do the assignment:

    def function_that_requires_a_bucket
      Bucket.create('my_bucket')
      my_bucket = Bucket.find('my_bucket')
      # ... rest of method ...
    end

Creating a European bucket

The Problem

For either throughput or legal reasons, you want to create a bucket that is physically located in Europe.

The Solution

To create a bucket that is located in Europe rather than North America, you add some XML to the body of the PUT request when creating the bucket. The XML looks like this:

    <CreateBucketConfiguration>
      <LocationConstraint>EU</LocationConstraint>
    </CreateBucketConfiguration>

The following code will create a European bucket named spatteneurobucket:

    $> s3lib
    >> euro_xml = <<XML
    <CreateBucketConfiguration>
      <LocationConstraint>EU</LocationConstraint>
    </CreateBucketConfiguration>
    XML
    >> S3Lib.request(:put, 'spatteneurobucket', :body => euro_xml, 'content-type' => 'text/xml')
    => #<StringIO:0x1675858>
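If you create buckets from a script rather than from the shell, you may prefer to build the request body in a small method. The following is just one way to sketch it; the helper create_bucket_configuration is hypothetical, and the REXML lines at the end only confirm that the generated body is well-formed XML.

```ruby
require 'rexml/document'

# Hypothetical helper: builds the request body for a bucket in the
# given location. 'EU' is the only value used in this recipe.
def create_bucket_configuration(location)
  <<XML
<CreateBucketConfiguration>
  <LocationConstraint>#{location}</LocationConstraint>
</CreateBucketConfiguration>
XML
end

body = create_bucket_configuration('EU')

# Parse the body back to check that it is well-formed.
doc = REXML::Document.new(body)
puts doc.root.elements['LocationConstraint'].text
```

The resulting string can be passed as the :body parameter in the same way as euro_xml.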

Discussion

There are a few things worth noting here. First, as usual, I had to add the content-type to the PUT request. Second, European buckets must be read using virtual hosting. The GET request using virtual hosting will look like this:

    >> S3Lib.request(:get, '', 'host' => 'spatteneurobucket.s3.amazonaws.com').read
    => "<?xml version=\"1.0\" encoding=\"UTF-8\"?>
    <ListBucketResult xmlns=\"http://s3.amazonaws.com/doc/2006-03-01/\">
      <Name>spatteneurobucket</Name>
      <Prefix></Prefix>
      <Marker></Marker>
      <MaxKeys>1000</MaxKeys>
      <IsTruncated>false</IsTruncated>
    </ListBucketResult>"

There are no objects in this bucket, so there are no Contents elements.

If I try to do a standard GET request, an error is raised:

    >> S3Lib.request(:get, 'spatteneurobucket')
    URI::InvalidURIError: bad URI(is not URI?):
            from /opt/local/lib/ruby/1.8/uri/common.rb:436:in `split'
            from /opt/local/lib/ruby/1.8/uri/common.rb:485:in `parse'
            ...
            from (irb):27

The requirement to use virtual hosting also means that there are extra constraints on the bucket name, as discussed in “Bucket Names”. Because these constraints are required, Amazon enforces them. If you try to create, for example, a European bucket with underscores in its name, Amazon will complain:

    >> S3Lib.request(:put, 'spatten_euro_bucket', :body => euro_xml, 'content-type' => 'text/xml')
    S3Lib::S3ResponseError: 400 Bad Request
    amazon error type: InvalidBucketName
            from /Users/Scott/versioned/s3_and_ec2_cookbook/code/s3_code/library/s3_authenticator.rb:39:in `request'
            from (irb):17

Finally, if you try to create a European bucket multiple times, an error is raised by Amazon:

    >> S3Lib.request(:put, 'spatteneurobucket', :body => euro_xml, 'content-type' => 'text/xml').read
    S3Lib::S3ResponseError: 409 Conflict
    amazon error type: BucketAlreadyOwnedByYou
            from /Users/Scott/versioned/s3_and_ec2_cookbook/code/s3_code/library/s3_authenticator.rb:39:in `request'
            from (irb):22

This is different behavior from standard buckets, where you are able to create a bucket again and again with no problems (or effects, either).

Finding a bucket’s location

The Problem

You have a bucket, and you aren’t sure if it is located in Europe or North America.

The Solution

Make an authenticated GET request to the bucket’s location URL, which is the bucket’s URL with ?location appended to it. If your bucket is located in North America, then the response will look like this:

    <?xml version="1.0" encoding="UTF-8"?>
    <LocationConstraint xmlns="http://s3.amazonaws.com/doc/2006-03-01/"/>

If the bucket is located in Europe, then the response will look like this:

    <?xml version="1.0" encoding="UTF-8"?>
    <LocationConstraint xmlns="http://s3.amazonaws.com/doc/2006-03-01/">EU</LocationConstraint>

Both requests return a LocationConstraint element. If it’s a North American bucket, the element will be empty. If it’s a European bucket, then it will contain EU. Presumably, if and when further locations become available they will follow the same pattern.

Discussion

At the time of writing, the AWS/S3 library didn’t have support for location creation or reading. You can make the request yourself, however, using the S3Lib library. Here’s an example:

Example 3.6. getting the bucket location using S3Lib

    $> irb -r s3lib
    >> S3Lib.request(:get, 'spatteneurobucket?location').read
    => "<?xml version=\"1.0\" encoding=\"UTF-8\"?>
    <LocationConstraint xmlns=\"http://s3.amazonaws.com/doc/2006-03-01/\">EU</LocationConstraint>"
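Once you have the XML, turning it into a readable answer takes only a few lines of REXML. This is a minimal sketch that works on the two response bodies shown in this recipe; the helper name bucket_location and the label 'North America' for an empty element are choices made for this illustration.

```ruby
require 'rexml/document'

# Hypothetical helper: maps a ?location response body to a readable name.
# An empty LocationConstraint element means a North American bucket.
def bucket_location(xml)
  constraint = REXML::Document.new(xml).root.text
  (constraint.nil? || constraint.strip.empty?) ? 'North America' : constraint.strip
end

us_xml = '<?xml version="1.0" encoding="UTF-8"?>' \
         '<LocationConstraint xmlns="http://s3.amazonaws.com/doc/2006-03-01/"/>'
eu_xml = '<?xml version="1.0" encoding="UTF-8"?>' \
         '<LocationConstraint xmlns="http://s3.amazonaws.com/doc/2006-03-01/">EU</LocationConstraint>'

puts bucket_location(us_xml)
puts bucket_location(eu_xml)
```

With a live connection, you would pass in the string returned by reading the ?location response instead of the sample strings.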

Deleting a bucket

The Problem

You have a bucket that you want to delete.

The Solution

Using the AWS/S3 library, use Bucket.delete:

    $> s3sh
    >> Bucket.delete('spatten_test_bucket')

If the bucket is not empty, you will get an AWS::S3::BucketNotEmpty error. You can force the deletion of the bucket by adding a :force => true parameter:

    $> s3sh
    >> Bucket.delete('spatten_test_bucket')
    AWS::S3::BucketNotEmpty: The bucket you tried to delete is not empty
            from /Library/Ruby/Gems/1.8/gems/aws-s3-0.5.1/bin/../lib/aws/s3/error.rb:38:in `raise'
            from /Library/Ruby/Gems/1.8/gems/aws-s3-0.5.1/bin/../lib/aws/s3/base.rb:72:in `request'
            from /Library/Ruby/Gems/1.8/gems/aws-s3-0.5.1/bin/../lib/aws/s3/base.rb:83:in `delete'
            from /Library/Ruby/Gems/1.8/gems/aws-s3-0.5.1/bin/../lib/aws/s3/bucket.rb:163:in `delete'
            from (irb):1
    >> Bucket.delete('spatten_test_bucket', :force => true)

This will delete all objects in the bucket before deleting the bucket, so it may take a while.

To delete a bucket by hand, first delete all objects in the bucket (see “Deleting an object”) and then make a DELETE request to the bucket’s URL:

    $> s3lib
    >> S3Lib.request(:delete, 'spatten_test_bucket')

Discussion

If you try to delete a bucket that doesn’t exist, you will get a 404 Not Found response:

    >> S3Lib.request(:delete, 'nonexistent_bucket')
    S3Lib::S3ResponseError: 404 Not Found
    amazon error type: NoSuchBucket
            from /Library/Ruby/Gems/1.8/gems/s3-lib-0.1.6/lib/s3_authenticator.rb:38:in `request'
            from (irb):4

Synchronizing two buckets

The Problem

You have two buckets that you want to keep exactly the same (you are probably using them for hosting assets, as in “Using S3 as an asset host”).

The Solution

Use conditional object copying to copy all files from one bucket to another. The following code goes through every object in the source bucket and copies it to the target bucket if either the object doesn’t exist in the target bucket or if the target bucket’s version of the object is different than the source bucket’s version.

Example 3.7. synchronize_buckets

    #!/usr/bin/env ruby
    require 'rubygems'
    require 'aws/s3'
    include AWS::S3


    module AWS
      module S3

        class Bucket

          # copies all files from current bucket to the target bucket.
          # target_bucket can be either a bucket instance or a string
          # containing the name of the bucket.
          def synchronize_to(target_bucket)
            objects.each do |object|
              object.copy_to_bucket_if_etags_dont_match(target_bucket)
            end
          end

        end

        class S3Object

          # Copies the current object to the target bucket.
          # target_bucket can be a bucket instance or a string containing
          # the name of the bucket.
          def copy_to_bucket(target_bucket, params = {})
            if target_bucket.is_a?(AWS::S3::Bucket)
              target_bucket = target_bucket.name
            end
            puts "#{key} => #{target_bucket}"
            begin
              S3Object.store(key, nil, target_bucket,
                             params.merge('x-amz-copy-source' => path))
            rescue AWS::S3::PreconditionFailed
            end
          end

          # Copies the current object to the target bucket
          # unless the object already exists in the target bucket
          # and they are identical.
          # target_bucket can be a bucket instance or a string containing
          # the name of the bucket.
          def copy_to_bucket_if_etags_dont_match(target_bucket, params = {})
            unless target_bucket.is_a?(AWS::S3::Bucket)
              target_bucket = AWS::S3::Bucket.find(target_bucket)
            end
            if target_bucket[key]
              params.merge!(
                'x-amz-copy-source-if-none-match' => target_bucket[key].etag)
            end
            copy_to_bucket(target_bucket, params)
          end

        end

      end
    end

    USAGE = "Usage: synchronize_buckets <source_bucket> <target_bucket>"
    (puts USAGE;exit(0)) unless ARGV.length == 2
    source_bucket_name, target_bucket_name = ARGV

    AWS::S3::Base.establish_connection!(
      :access_key_id => ENV['AMAZON_ACCESS_KEY_ID'],
      :secret_access_key => ENV['AMAZON_SECRET_ACCESS_KEY']
    )

    Bucket.create(target_bucket_name)
    Bucket.find(source_bucket_name).synchronize_to(target_bucket_name)

You run the script like this:

    $> ./synchronize_buckets spatten_s3demo spatten_s3demo_clone
    eventbrite_com_errors.jpg => spatten_s3demo_clone
    test.txt => spatten_s3demo_clone
    vampire.jpg => spatten_s3demo_clone

Discussion

This script just screamed out for the addition of methods to the Bucket and S3Object classes. I borrowed the copy_to_bucket and copy_to_bucket_if_etags_dont_match methods from “Copying an object”, and added the Bucket.synchronize_to method.

If you want to maintain the permissions on the newly created objects, you’ll have to add functionality to copy grants or to add an :access parameter to the params hash passed to S3Object.store.

This script will never delete objects from the target bucket. I’ll leave it as an exercise for the reader to add this functionality.
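As a starting point for that exercise, it helps to separate deciding what to do from actually doing it. The sketch below works on plain hashes that map object keys to ETags, so it runs without an S3 connection; the method name synchronization_plan and the sample keys and ETags are invented for this illustration.

```ruby
# Hypothetical planning step, independent of any S3 library.
# source and target map object keys to ETags, e.g. {'test.txt' => 'abc123'}.
def synchronization_plan(source, target)
  # Copy anything that is missing from the target or has a different ETag.
  to_copy = source.keys.select { |key| target[key] != source[key] }
  # Delete anything in the target that no longer exists in the source.
  to_delete = target.keys - source.keys
  { :copy => to_copy.sort, :delete => to_delete.sort }
end

source = { 'test.txt' => 'aaa', 'vampire.jpg' => 'bbb', 'new.txt' => 'ccc' }
target = { 'test.txt' => 'aaa', 'vampire.jpg' => 'old', 'stale.txt' => 'ddd' }

plan = synchronization_plan(source, target)
puts "copy:   #{plan[:copy].join(', ')}"
puts "delete: #{plan[:delete].join(', ')}"
```

In a real script, you would build the two hashes from the key and etag of each object in the source and target buckets, then remove each key in the delete list as described in “Deleting an object”.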

Using REXML and XPath to parse an XML response from S3

The Problem

You have received an XML response from S3, and you want to extract some information from it.

The Solution

There are many ways to do this, but the one that I have used throughout the book for parsing responses from S3 is the Ruby REXML library. REXML has a few ways of finding nodes in an XML document. I’ll be using XPath throughout the book.

Finding XML nodes with XPath

Let’s start with a sample document, and figure out how we can get the information we want from it. Here’s a purely imaginary example of a book written in XML format:

    <?xml version="1.0" encoding="UTF-8"?>
    <book>
      <chapter title="S3's Architecture">
        <section href="s3_architecture/intro.xml"/>
        <section href="s3_architecture/buckets.xml"/>
        <section href="s3_architecture/objects.xml"/>
        <section href="s3_architecture/acls.xml"/>
      </chapter>
      <chapter title="S3 Recipes">
        <section href="s3_recipes/signing_up_for_s3.xml"/>
        <section href="s3_recipes/installing_ruby_and_awss3gem.xml"/>
        <section href="s3_recipes/setting_up_s3sh.xml"/>
        <section href="s3_recipes/installing_the_firefox_s3_organizer.xml"/>
        <section href="s3_recipes/dealing_with_multiple_s3_accounts.xml"/>
        <section href="s3_recipes/creating_a_bucket.xml"/>
      </chapter>
      <chapter title="Authenticating S3 Requests">
        <section href="s3_authentication/authenticating_s3_requests.xml"/>
        <section href="s3_authentication/s3_authentication_intro.xml"/>
        <section href="s3_authentication/the_http_verb.xml"/>
        <section href="s3_authentication/the_canonicalized_positional_headers.xml"/>
      </chapter>
    </book>

First, let’s use XPath to find all of the chapters in the response. XPath is a language that allows you to select nodes from an XML document. I’m not going to fully explain how XPath works. That’s a good chunk of a book in and of itself. See O’Reilly’s XPath and XPointer for an example (http://oreilly.com/catalog/9780596002916/).

Here are some quick, cookbook style examples that show you how it works:

An XPath of //chapter will find all chapter nodes, no matter where they are in the document. The following code finds all of the chapter elements in the sample XML and prints them out:

Example 3.8. xpath_example.rb <<(code/using_rexml_and_xpath_to_parse_an_xml_response_from_s3_recipe/xpath_example.rb)

Let’s look at the code and figure out what’s going on. First, we take a string representing an XML document and load it into the xml variable. Next, we make a new instance of REXML::Document with that string. Next, and most interestingly, we find all of the chapter elements of the XML document using the XPath expression //chapter. We then print the chapter elements out for posterity.
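The steps just described can be sketched in a few lines. This is only an illustration, not necessarily what xpath_example.rb contains: it uses a trimmed-down copy of the sample document, and the BOOK_XML constant and chapter_titles method are names made up for this sketch.

```ruby
require 'rexml/document'

# A trimmed-down copy of the sample book document (assumption: shortened
# for illustration).
BOOK_XML = <<~XML
  <?xml version="1.0" encoding="UTF-8"?>
  <book>
    <chapter title="S3's Architecture">
      <section href="s3_architecture/intro.xml"/>
    </chapter>
    <chapter title="S3 Recipes">
      <section href="s3_recipes/signing_up_for_s3.xml"/>
      <section href="s3_recipes/creating_a_bucket.xml"/>
    </chapter>
  </book>
XML

# Hypothetical helper: load the string into a REXML::Document, find every
# chapter element with the //chapter XPath, and return the title attributes.
def chapter_titles(xml)
  doc = REXML::Document.new(xml)
  REXML::XPath.match(doc, '//chapter').map do |chapter|
    chapter.attributes['title']
  end
end

puts chapter_titles(BOOK_XML)
```

REXML::XPath.match returns an array of elements, so anything you can do with an array (each, map, size) works on the result.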

Okay, so that lets us find all chapter elements. What if the book also had an appendix containing chapters of its own, and you wanted only the chapters that weren’t in the appendix? You can do this using a nested XPath expression that looks like this: //book/chapter. This means ‘find me all of the chapter elements that are children of a book element’. If you wanted to make sure that the book element you were referring to was the root element of the document, you would use a single forward slash at the beginning of your XPath expression: /book/chapter. If you wanted all of the chapters in the appendix, you could use an XPath expression like //book/appendix/chapter or //appendix/chapter. In a document where every appendix is a child of the book element, they will find exactly the same thing. If you had an XML document with appendix elements that were not children of book elements, then //appendix/chapter would find the chapters in those appendix elements, while //book/appendix/chapter would not.

If you wanted to get only the chapter with a title of ‘Authenticating S3 Requests’, then you could use an XPath expression like //chapter[@title="Authenticating S3 Requests"]. If you wanted a list of all sections in that chapter, then your XPath expression would be //chapter[@title="Authenticating S3 Requests"]/section.
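Here is a small sketch that tries out the nested and attribute-based expressions from the last two paragraphs. The document is a cut-down version of the sample with a hypothetical appendix element added (the original sample has none), and attribute_values is a helper name invented for this example.

```ruby
require 'rexml/document'

# Cut-down sample document. The <appendix> element is an assumption added
# purely so the appendix expressions have something to match.
SAMPLE_XML = <<~XML
  <book>
    <chapter title="S3 Recipes">
      <section href="s3_recipes/creating_a_bucket.xml"/>
    </chapter>
    <chapter title="Authenticating S3 Requests">
      <section href="s3_authentication/s3_authentication_intro.xml"/>
      <section href="s3_authentication/the_http_verb.xml"/>
    </chapter>
    <appendix>
      <chapter title="Glossary"/>
    </appendix>
  </book>
XML

# Hypothetical helper: run an XPath expression and return the named
# attribute of every element it matches.
def attribute_values(xml, xpath, attribute)
  doc = REXML::Document.new(xml)
  REXML::XPath.match(doc, xpath).map { |element| element.attributes[attribute] }
end

# Chapters that are direct children of the root book element.
p attribute_values(SAMPLE_XML, '/book/chapter', 'title')

# Chapters inside any appendix element.
p attribute_values(SAMPLE_XML, '//appendix/chapter', 'title')

# Sections of one particular chapter, selected by its title attribute.
p attribute_values(SAMPLE_XML,
                   '//chapter[@title="Authenticating S3 Requests"]/section',
                   'href')
```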

Extracting information from an XML tree

One other thing we’ll be doing a lot is extracting a single element from another XML element. For example, if I ask S3 for a listing of all my buckets, I’ll get an XML response back that looks like this:

    <ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
      <Owner>
        <ID>9d92623ba6dd9d7cc06a7b8bcc46381e7c646f72d769214012f7e91b50c0de0f</ID>
        <DisplayName>scottpatten</DisplayName>
      </Owner>
      <Buckets>
        <Bucket>
          <Name>amazon_s3_and_ec2_cookbook</Name>
          <CreationDate>2008-08-03T22:41:56.000Z</CreationDate>
        </Bucket>
        <Bucket>
          <Name>spatten_music</Name>
          <CreationDate>2008-02-19T22:07:24.000Z</CreationDate>
        </Bucket>
        <Bucket>
          <Name>assets.plotomatic.com</Name>
          <CreationDate>2007-11-05T23:34:56.000Z</CreationDate>
        </Bucket>
      </Buckets>
    </ListAllMyBucketsResult>

I can get all of the Bucket elements from this XML by using an XPath expression like //Bucket or /ListAllMyBucketsResult/Buckets/Bucket. What if I now want to get the name and creation date for each bucket? Here’s some example code that does just that:

Example 3.9. get_bucket_info.rb <<(code/using_rexml_and_xpath_to_parse_an_xml_response_from_s3_recipe/get_bucket_info.rb)

The XPath::match method returns an Array of REXML::Element objects. For each element of the array, call elements['element_name'].text to get the value of the sub-element called element_name.
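As a rough sketch of that pattern (not necessarily the contents of get_bucket_info.rb), the following parses a shortened copy of the bucket listing and pulls the Name and CreationDate out of each Bucket element. The BUCKETS_XML constant and the bucket_info method are names assumed for this illustration.

```ruby
require 'rexml/document'

# A shortened copy of the ListAllMyBucketsResult response shown earlier.
BUCKETS_XML = <<~XML
  <ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
    <Buckets>
      <Bucket>
        <Name>amazon_s3_and_ec2_cookbook</Name>
        <CreationDate>2008-08-03T22:41:56.000Z</CreationDate>
      </Bucket>
      <Bucket>
        <Name>spatten_music</Name>
        <CreationDate>2008-02-19T22:07:24.000Z</CreationDate>
      </Bucket>
    </Buckets>
  </ListAllMyBucketsResult>
XML

# Hypothetical helper: one hash per Bucket element, built by asking each
# element for the text of its Name and CreationDate sub-elements.
def bucket_info(xml)
  doc = REXML::Document.new(xml)
  REXML::XPath.match(doc, '//Bucket').map do |bucket|
    {
      :name => bucket.elements['Name'].text,
      :creation_date => bucket.elements['CreationDate'].text
    }
  end
end

bucket_info(BUCKETS_XML).each do |info|
  puts "#{info[:name]} was created at #{info[:creation_date]}"
end
```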

Discussion

If you want to read more about XPath, there is a freely available chapter from O’Reilly’s XML in a Nutshell available at http://oreilly.com/catalog/xmlnut/chapter/ch09.html.

The REXML library ships with Ruby (it was part of the standard library until Ruby 3.0, and has been a bundled gem since), so no installation should be required if you have Ruby installed. The documentation for the library is at http://www.germane-software.com/software/rexml/. I highly recommend starting with the tutorial rather than diving into the documentation. It is found at http://www.germane-software.com/software/rexml/docs/tutorial.html.

If you’re not using Ruby, almost any language will have an XPath implementation. For a list of them, see http://en.wikipedia.org/wiki/XPath#Implementations

Listing All Of Your Buckets

The Problem

You want to know the names of all of your buckets.

The Solution

Use the Service::buckets method from the AWS/S3 library. This will return an array of Bucket objects, sorted by creation date. If you want just the names of the buckets, then you can use collect on the array:

    $> s3sh
    >> Service.buckets
    => [#<AWS::S3::Bucket:0x11baf84 @object_cache=[], @attributes={"name"=>"assets0.plotomatic.com", "creation_date"=>Thu Sep 06 16:25:25 UTC 2007}>,
    #<AWS::S3::Bucket:0x11bada4 @object_cache=[], @attributes={"name"=>"assets1.plotomatic.com", "creation_date"=>Thu Sep 06 16:53:18 UTC 2007}>,
    #<AWS::S3::Bucket:0x11babc4 @object_cache=[], @attributes={"name"=>"assets2.plotomatic.com", "creation_date"=>Thu Sep 06 17:18:47 UTC 2007}>,

    ....

    #<AWS::S3::Bucket:0x11b8018 @object_cache=[], @attributes={"name"=>"zunior_bucket", "creation_date"=>Sun Jul 27 18:31:07 UTC 2008}>]
    >> Service.buckets.collect {|bucket| bucket.name}
    => ["assets0.plotomatic.com", "assets1.plotomatic.com", "assets2.plotomatic.com", ..., "zunior_bucket"]

Discussion

You get the listing of all of the buckets you own by making an authenticated GET request to the root URL of the Amazon S3 service: http://s3.amazonaws.com. See “Listing All of Your Buckets” in the API section for more information.

Listing All Objects in a Bucket

The Problem

You have a bucket on S3, and you want to know what objects are in it.

The Solution

If you’re using the AWS::S3 library, you can use the objects method of the Bucket class.

Example 3.10. listing all of the objects in a bucket

    $> s3sh
    >> Bucket.find('spatten_test_bucket').objects
    => [#<AWS::S3::S3Object:0x2650740 '/spatten_test_bucket/book.xml'>,
    #<AWS::S3::S3Object:0x2650430 '/spatten_test_bucket/cantreadme.txt'>,
    #<AWS::S3::S3Object:0x2650130 '/spatten_test_bucket/delete_by_index'>,
    #<AWS::S3::S3Object:0x2649860 '/spatten_test_bucket/execer'>,
    #<AWS::S3::S3Object:0x2649470 '/spatten_test_bucket/kill_firefox'>,
    #<AWS::S3::S3Object:0x2649080 '/spatten_test_bucket/mounting_commands'>,
    #<AWS::S3::S3Object:0x2648580 '/spatten_test_bucket/new.txt'>,
    #<AWS::S3::S3Object:0x2648060 '/spatten_test_bucket/s3_backup'>,
    #<AWS::S3::S3Object:0x2647740 '/spatten_test_bucket/s3lib'>,
    #<AWS::S3::S3Object:0x2647460 '/spatten_test_bucket/shoes'>,
    #<AWS::S3::S3Object:0x2647190 '/spatten_test_bucket/t'>,
    #<AWS::S3::S3Object:0x2646880 '/spatten_test_bucket/test1.txt'>,
    #<AWS::S3::S3Object:0x2646530 '/spatten_test_bucket/viral_marketing.txt'>]

If you just want the keys of the objects, then you can collect them all into an array like this:

    >> Bucket.find('spatten_test_bucket').objects.collect {|object| object.key}
    => ["book.xml", "cantreadme.txt", "delete_by_index", "execer",
    "kill_firefox", "mounting_commands", "new.txt", "s3_backup",
    "s3lib", "shoes", "t", "test1.txt", "viral_marketing.txt"]

If you want to get a list of the objects in a bucket by hand, you need to make an authenticated GET request to the bucket’s URL and then parse the XML. Each object will be represented by a Contents element in the XML, which will look something like this:

    <Contents>
      <Key>shoes</Key>
      <LastModified>2008-05-26T06:01:11.000Z</LastModified>
      <ETag>"4e949f634e17e26cbdeed0db686fb276"</ETag>
      <Size>46</Size>
      <Owner>
        <ID>9d92623ba6dd9d7cc06a7b8bcc46381e7c646f72d769214012f7e91b50c0de0f</ID>
        <DisplayName>scottpatten</DisplayName>
      </Owner>
      <StorageClass>STANDARD</StorageClass>
    </Contents>

If you want the name of the object, then extract the Key element. For the object’s size, extract the Size element. Here’s a script that will output the size and key of all the objects in a bucket.

Example 3.11. list_objects_s3lib.rb <<(code/listing_all_objects_in_a_bucket_recipe/list_objects_s3lib.rb)

Here it is in action:

    $> ruby list_objects.rb spatten_test_bucket
    1347 book.xml
    7 cantreadme.txt
    42 delete_by_index
    68 execer
    216 kill_firefox
    113 mounting_commands
    11 new.txt
    7601 s3_backup
    640 s3lib
    46 shoes
    45 t
    6 test1.txt
    30 viral_marketing.txt
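The Key and Size extraction described above can be tried without touching S3 at all. The following is a minimal sketch that parses a hand-written, shortened ListBucketResult document rather than a live response. The LIST_XML constant and the sizes_and_keys method are hypothetical names, and the real script may be organized quite differently.

```ruby
require 'rexml/document'

# A shortened bucket listing, with only the elements this sketch needs.
LIST_XML = <<~XML
  <ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
    <Name>spatten_test_bucket</Name>
    <Contents>
      <Key>shoes</Key>
      <Size>46</Size>
    </Contents>
    <Contents>
      <Key>test1.txt</Key>
      <Size>6</Size>
    </Contents>
  </ListBucketResult>
XML

# Hypothetical helper: return a [size, key] pair for every Contents element.
def sizes_and_keys(xml)
  doc = REXML::Document.new(xml)
  REXML::XPath.match(doc, '//Contents').map do |contents|
    [contents.elements['Size'].text.to_i, contents.elements['Key'].text]
  end
end

sizes_and_keys(LIST_XML).each do |size, key|
  puts "#{size} #{key}"
end
```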

Discussion

The max-keys parameter limits the number of objects that are returned when you get information about a bucket. By default, max-keys is 1000. If you set max-keys to more than 1000, S3 will ignore you and return a maximum of 1000 objects.

The following script will get around this limitation using the max-keys and marker parameters to get all of the objects in the bucket 1000 objects at a time:

Example 3.12. list_objects_s3sh.rb <<(code/listing_all_objects_in_a_bucket_recipe/list_objects_s3sh.rb)

    $> ./code/s3_code/list_all_objects assets0.plotomatic.com
    [".htaccess", "404.html", "500.html", "FILES_TO_UPLOAD", "REVISION",

    ....

    "stylesheets/themes/spread/right-top.gif",
    "stylesheets/themes/spread/top-middle.gif"]
    # files: 3490

For more information on paginating the list of objects in a bucket, see “Paginating the list of objects in a bucket”

Finding the Total Size of a Bucket

The Problem

You have a bucket on S3, and you want to know the total amount of data it has in it.

The Solution

The Bucket::size method returns the number of objects in a bucket, so that doesn’t work. To find the total amount of data stored in a bucket, you will need to find the size of each of the objects and then add them up. The size of an object can be found with the S3Object#size method, which returns the size of the object in bytes. Unfortunately, the size method returns the string "0", rather than the integer 0, if the object is empty. Like this:

    $> s3sh
    >> Bucket.find('spatten_test_bucket').objects.collect {|object| object.size}
    => [1347, 7, 42, 68, 216, 113, 11, 7601, 640, 46, 45, 6, 30]
    >> Bucket.find('spatten_music').objects.collect {|object| object.size}
    => [8135932, 10074218, "0", 2393173, 12264324, 11153597, 11073140, 8315654,
    10118103, 2355557, 10031378, 9780602, 11787851, 8974986, 12412700, 1063019,
    "0", "0", 7148472, 11761697, 8960325, 11837974, 12031280, 8468178, 12970644,
    10159868, 9854758, 8622823, 8581027, 14487835, 28388113, 10117027, 8728359,
    8785828, 8393992, 8925845, 9552783, 9488000, 5872653, 9099298, 9428441,
    9226775, 8500571, 11085649]

In this case, the objects with a size of zero are directories inserted by the Firefox S3 Organizer plugin (see “Installing The FireFox S3 Organizer”). Perhaps the best way to work around this is to filter out all of the strings before summing the sizes. Let’s give that a shot:

    >> Bucket.find('spatten_music').objects.reject {|object| object.size == "0"}.collect {|object| object.size}
    => [8135932, 10074218, 2393173, 12264324, 11153597, 11073140, 8315654,
    10118103, 2355557, 10031378, 9780602, 11787851, 8974986, 12412700, 1063019,
    7148472, 11761697, 8960325, 11837974, 12031280, 8468178, 12970644, 10159868,
    9854758, 8622823, 8581027, 14487835, 28388113, 10117027, 8728359, 8785828,
    8393992, 8925845, 9552783, 9488000, 5872653, 9099298, 9428441, 9226775,
    8500571, 11085649]

Now that the data is cleaned up, we can sum it.

    >> sum = 0
    >> Bucket.find('spatten_music').objects.reject {|object| object.size == "0"}.each {|object| sum += object.size}
    >> sum
    => 400412449

Note

If you’re a Ruby purist, the above summation code probably made you cringe. Here’s how you’d do that in idiomatic Ruby:

    >> Bucket.find('spatten_music').objects.reject {|object| object.size == "0"}.inject(0) {|sum, object| sum + object.size}
    => 400412449
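Another way to deal with the stray "0" strings is to convert every size with to_i instead of rejecting them, since to_i leaves integers alone and turns "0" into 0. Here is a small sketch that works on a plain array of sizes like the ones returned above. The total_size method is a name invented for this example and is not part of the AWS/S3 library.

```ruby
# Hypothetical helper: sum a list of sizes in which empty objects show up
# as the string "0" rather than the integer 0.
def total_size(sizes)
  sizes.inject(0) { |sum, size| sum + size.to_i }
end

# The first few sizes from the spatten_music listing above.
sizes = [8135932, 10074218, "0", 2393173]
puts total_size(sizes)
```

With the AWS/S3 library, the array of sizes would come from something like Bucket.find(bucket_name).objects.collect {|object| object.size}, as shown earlier in this recipe.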

Discussion

Listing only objects with keys starting with some prefix

The Problem

You have a bucket with a large number of files in it, and you only want to list files starting with a given string.

The Solution

Use the prefix parameter when you are requesting the list of objects in the bucket. This will limit the objects to those with keys starting with the given prefix. If you are doing this by hand, then you add the prefix by including it as a query parameter on the bucket’s URL:

    /bucket_name?prefix=<some_prefix>

If you are using the AWS-S3 library, then you set the prefix parameter like this:

    $> s3sh
    >> b = Bucket.find('spatten_test_bucket', :prefix => 'test')
    >> b.objects.collect {|object| object.key}
    => ["test.mp3", "test1.txt"]

The interface for S3Lib.request is the same: add a :prefix key to the params hash.

    $> s3lib
    >> S3Lib.request(:get, 'spatten_test_bucket', :prefix => 'test').read
    <?xml version="1.0" encoding="UTF-8"?>
    <ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
      <Name>spatten_test_bucket</Name>
      <Prefix>test</Prefix>
      <Marker></Marker>
      <MaxKeys>1000</MaxKeys>
      <IsTruncated>false</IsTruncated>
      <Contents>
        <Key>test.mp3</Key>
        <LastModified>2008-08-14T22:24:58.000Z</LastModified>
        <ETag>"80a03d7ed8658fe3869d70d10999e4ff"</ETag>
        <Size>7182955</Size>
        <Owner>
          <ID>9d92623ba6dd9d7cc06a7b8bcc46381e7c646f72d769214012f7e91b50c0de0f</ID>
          <DisplayName>scottpatten</DisplayName>
        </Owner>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
      <Contents>
        <Key>test1.txt</Key>
        <LastModified>2008-04-29T04:47:03.000Z</LastModified>
        <ETag>"fd2f80fc0ef8c6cc6378d260182229be"</ETag>
        <Size>6</Size>
        <Owner>
          <ID>9d92623ba6dd9d7cc06a7b8bcc46381e7c646f72d769214012f7e91b50c0de0f</ID>
          <DisplayName>scottpatten</DisplayName>
        </Owner>
        <StorageClass>STANDARD</StorageClass>
      </Contents>
    </ListBucketResult>

In both cases, only the two files starting with test are returned in the object list.

Discussion

In the example above, the URL for the GET request that is actually made to S3 is http://s3.amazonaws.com/spatten_test_bucket?prefix=test. If you try doing this directly, you’ll get an error:

    >> S3Lib.request(:get, 'spatten_test_bucket?prefix=test')
    S3Lib::S3ResponseError: 403 Forbidden
    amazon error type: SignatureDoesNotMatch
    from /Library/Ruby/Gems/1.8/gems/s3-lib-0.1.3/lib/s3_authenticator.rb:39:in `request'
    from (irb):6

The ?prefix=test has to be omitted from the URL when it is being used to sign the request. Both the AWS-S3 and the S3Lib libraries opt to add the prefix after the signature has been calculated, rather than allowing you to add it directly and stripping it out during the signature calculation.
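To make the distinction concrete, here is a rough sketch of the two strings involved: the path that is fed into the signature, and the URL that is actually requested. It does not compute a signature, and it is not taken from either library. The path_to_sign and request_url methods are hypothetical names.

```ruby
require 'uri'

# The path used when signing the request: the bucket only, with no
# ?prefix=... on the end.
def path_to_sign(bucket)
  "/#{bucket}"
end

# The URL that is actually requested: list parameters such as prefix are
# appended to the path that was signed.
def request_url(bucket, params = {})
  url = "http://s3.amazonaws.com#{path_to_sign(bucket)}"
  url += "?#{URI.encode_www_form(params)}" unless params.empty?
  url
end

puts path_to_sign('spatten_test_bucket')
puts request_url('spatten_test_bucket', :prefix => 'test')
```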

Paginating the list of objects in a bucket

The Problem

You want to get the list of objects in a bucket, N objects at a time.

The Solution

Use the max-keys and marker parameters to page through the list of objects. The following code will go through all of the objects in a bucket and place them into a list of lists.

Example 3.13. paginate_bucket.rb <<(code/paginating_the_list_of_objects_in_a_bucket_recipe/paginate_bucket.rb)

A call like this:

    ruby paginate_bucket.rb assets0.plotomatic.com 10

will return an array of arrays containing the keys of all of the objects in a bucket, 10 objects per array.

    [[".htaccess", "404.html", "500.html",
    "FILES_TO_UPLOAD", "REVISION", "blank.gif", "dispatch.cgi",
    "dispatch.fcgi", "dispatch.rb", "favicon.ico"],
    ["fit_logs", "gnuplot.log", "google0fbc3439960e8dee.html", "graphs",
    "iepngfix.htc", "images", "images/ScottFlorenciaTrail_4web.jpg",
    "images/black_2x1.png", "images/blank.gif", "images/graphs"], ... ]

Discussion

The marker parameter means “return all objects with keys that are lexicographically greater than this”.

The default value for max-keys is 1000. You cannot set max-keys to larger than 1000. If you try to do this, S3 will ignore you and return at most 1000 objects. So, if you do this:

    ruby paginate_bucket.rb assets0.plotomatic.com 2000

it’s equivalent to doing this:

    ruby paginate_bucket.rb assets0.plotomatic.com 1000

The discussion section in “Listing All Objects in a Bucket” tells you how to make sure you have listed all of the files in a bucket, even if there are more than 1000 objects in the bucket.
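The way max-keys and marker work together can be simulated entirely in memory. In the sketch below, list_page is a hypothetical stand-in for a single list request to S3: it returns at most max-keys keys that sort after the marker, and caps max-keys at 1000. The paginate method then keeps asking for the next page, using the last key of the previous page as the new marker. This is an illustration of the idea under those assumptions, not the code from paginate_bucket.rb.

```ruby
# Stand-in for one list request (assumption: a real version would make an
# authenticated GET request with max-keys and marker as parameters).
def list_page(all_keys, marker, max_keys)
  max_keys = [max_keys, 1000].min # S3 never returns more than 1000 keys
  all_keys.sort.select { |key| marker.nil? || key > marker }.first(max_keys)
end

# Collect every page. Each request starts after the last key already seen.
def paginate(all_keys, max_keys)
  pages = []
  marker = nil
  loop do
    page = list_page(all_keys, marker, max_keys)
    break if page.empty?
    pages << page
    marker = page.last
  end
  pages
end

p paginate(%w[e a c b d], 2)
```

This version stops when it gets an empty page back, which costs one extra request. A real implementation could instead check the IsTruncated element of the response.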

Listing objects in folders

The Problem

You have your objects stored in a hierarchical, directory-like structure and you want to see only a single level of that structure.

The Solution

Use the prefix and delimiter parameters to roll up ‘directories’ into a single listing.

Let’s say that I have a bucket containing my music. Each song has a key of the form “artist/album/song_name”. Here’s an example listing of the keys in the bucket:

    Arcade Fire/Funeral/Crown Of Love.mp3
    Arcade Fire/Funeral/Haiti.mp3
    Arcade Fire/Funeral/In The Backseat.mp3

    ...

    Arcade Fire/Neon Bible/The Well and the Lighthouse.mp3
    Arcade Fire/Neon Bible/Windowsill.mp3
    The Besnard Lakes/The Besnard Lakes Are The Dark Horse/And You Lied To Me.mp3
    The Besnard Lakes/The Besnard Lakes Are The Dark Horse/Because Tonight.mp3

    ...

    The Besnard Lakes/Volume I/Thomasina.mp3
    The Besnard Lakes/Volume I/You've Got To Want To Be A Star.mp3

To get a list of all of the artists in my collection, I would do a Bucket.find with a prefix of "" and a delimiter of /:

    >> b = Bucket.find('spatten_music_test', :delimiter => '/', :prefix => '')
    >> b.common_prefixes
    => [{"prefix"=>"Arcade Fire/"}, {"prefix"=>"The Besnard Lakes/"}]

To find all of the albums by the Arcade Fire, I would do a Bucket.find with a prefix of Arcade Fire/ and a delimiter of /. Note that the trailing slash on the prefix is necessary. Without it, you won’t see the albums.

    >> b = Bucket.find('spatten_music_test', :delimiter => '/', :prefix => 'Arcade Fire/')
    >> b.common_prefixes
    => [{"prefix"=>"Arcade Fire/Funeral/"},
    {"prefix"=>"Arcade Fire/Neon Bible/"}]

Finally, to get all of the songs in Arcade Fire’s Funeral, I would do a Bucket.find with a prefix of Arcade Fire/Funeral/ and a delimiter of /:

    >> b = Bucket.find('spatten_music_test',
                       :delimiter => '/',
                       :prefix => 'Arcade Fire/Funeral/')
    >> b.common_prefixes
    >> b.objects.collect {|obj| obj.key}
    => ["Arcade Fire/Funeral/Crown Of Love.mp3",
    "Arcade Fire/Funeral/Haiti.mp3",
    "Arcade Fire/Funeral/In The Backseat.mp3",
    "Arcade Fire/Funeral/Neighborhood 1 - Tunnels.mp3",
    "Arcade Fire/Funeral/Neighborhood 2 - Laika.mp3",
    "Arcade Fire/Funeral/Neighborhood 3 - Power Out.mp3",
    "Arcade Fire/Funeral/Neighborhood 4 - 7 Kettles.mp3",
    "Arcade Fire/Funeral/Rebellion (Lies).mp3",
    "Arcade Fire/Funeral/Une Annee Sans Lumiere.mp3",
    "Arcade Fire/Funeral/Wake Up.mp3"]

Note that in this case, there are no common prefixes, so you just need to get the objects instead.
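If the way prefix and delimiter interact still feels a bit magical, the following sketch imitates the roll-up on a plain array of keys. It is a simulation of the behaviour described above, not code from S3 or from the AWS/S3 library, and list_level is a made-up name. Keys that contain the delimiter after the prefix are rolled up into a common prefix, and everything else is returned as an object.

```ruby
# Hypothetical simulation of a listing with a prefix and a delimiter.
# Returns [objects, common_prefixes].
def list_level(keys, prefix, delimiter)
  objects = []
  common_prefixes = []
  keys.sort.each do |key|
    next unless key.start_with?(prefix)
    rest = key[prefix.length..-1]
    index = rest.index(delimiter)
    if index
      # Roll up everything up to and including the first delimiter.
      common_prefixes << prefix + rest[0, index + delimiter.length]
    else
      objects << key
    end
  end
  [objects, common_prefixes.uniq]
end

MUSIC_KEYS = [
  'Arcade Fire/Funeral/Haiti.mp3',
  'Arcade Fire/Neon Bible/Windowsill.mp3',
  'The Besnard Lakes/Volume I/Thomasina.mp3'
]

p list_level(MUSIC_KEYS, '', '/')
p list_level(MUSIC_KEYS, 'Arcade Fire/', '/')
p list_level(MUSIC_KEYS, 'Arcade Fire/Funeral/', '/')
```

Trying a prefix of Arcade Fire without the trailing slash shows why the slash matters: the first delimiter found is the one right after the artist name, so everything is rolled back up into Arcade Fire/ and the albums never appear.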

Discussion

Uploading a file to S3

The Problem

You want to upload a file to S3.

The Solution

To upload a file to S3, you make an authenticated PUT request to an object’s URL, with the file’s contents as the request body. Here’s a script using S3Lib.request that will upload a file:

Example 3.14. s3lib_upload_file <<(code/uploading_a_file_to_s3_recipe/s3lib_upload_file.rb)

Here’s the same thing using S3SH:

Example 3.15. s3sh_upload_file <<(code/uploading_a_file_to_s3_recipe/s3sh_upload_file.rb)

Discussion

Neither of these examples will work unless the bucket that you are uploading to already exists. This is easily rectified by creating the bucket before uploading, but I decided to keep the examples as simple as possible. Here’s a fix for the S3SH version that works whether or not the bucket already exists:

Example 3.16. s3sh_upload_file_v2 <<(code/uploading_a_file_to_s3_recipe/s3sh_upload_file_v2.rb)

The S3Lib example defaults to a content-type of text/plain unless you set it by hand. The AWS-S3 library will make a guess at the content type.

    $> ruby s3sh_upload_file recipe_list.txt spatten_test_bucket recipe_list.jpg
    /Users/spatten/book
    $> s3sh
    >> S3Object.find('recipe_list.jpg', 'spatten_test_bucket').content_type
    => "image/jpeg"

Note that the file I actually uploaded was a text file: S3SH just looks at the extension of the key of the object you are creating. In this case, it saw .jpg and assumed it was of type image/jpeg.

Also note that a PUT request replaces the object completely: nothing from the previous version is carried over. This means that if the object already existed, any metadata or ACLs will be overwritten with default values. If you want to preserve the metadata, see “Copying an object”. If you want to preserve the permissions on the file given in the ACL, see “Keeping the Current ACL When You Change an Object”.

Doing a streaming upload to S3

The Problem

You’re uploading a file to S3, and it’s big enough that you don’t want to load it all into memory before uploading it. In other words, you want to stream it up to S3.

The Solution

If you give an IO object as the data argument of AWS::S3::S3Object::store, it will stream the data up to S3. This is most easily done using File::open. Here’s an example:

    #!/usr/bin/env ruby

    require 'rubygems'
    require 'aws/s3'
    include AWS::S3

    # Usage: streaming_upload.rb <filename> <bucket> [<key>]

    file = ARGV[0]
    bucket = ARGV[1]
    key = ARGV[2] || file

    AWS::S3::Base.establish_connection!(
      :access_key_id => ENV['AMAZON_ACCESS_KEY_ID'],
      :secret_access_key => ENV['AMAZON_SECRET_ACCESS_KEY']
    )

    puts "uploading #{file} to #{File.join(bucket, key)}"
    # Passing an open File (an IO object) rather than a String makes the
    # library stream the data. The block form closes the file afterwards.
    File.open(file, 'rb') do |io|
      S3Object.store(key, io, bucket)
    end

Discussion

The only difference between doing a streaming and non-streaming upload is that you provide an IO object rather than the data you are uploading. In many cases, this just means replacing File.read with File.open in your code. Yet another example of the elegance of Marcel Molina’s AWS-S3 library.
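To see why handing over an IO object makes streaming possible, here is a sketch of how a consumer can read from any IO object one chunk at a time, so that only a single chunk is held in memory. This is an illustration of the general idea only. It is not the AWS-S3 library’s actual code, and each_chunk is a made-up helper name.

```ruby
require 'stringio'

# Hypothetical helper: yield the contents of an IO object in chunks of at
# most chunk_size bytes. IO#read returns nil once the end is reached.
def each_chunk(io, chunk_size = 1024 * 1024)
  while (chunk = io.read(chunk_size))
    yield chunk
  end
end

# StringIO stands in for an open File here; both respond to read.
each_chunk(StringIO.new('abcdefgh'), 3) do |chunk|
  puts chunk
end
```

A String, by contrast, is already entirely in memory by the time it is passed along, which is what File.read gives you.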

Making streaming uploads work with S3Lib is left as an exercise for the reader. If you seriously want to do this, take a look at the AWS::S3::Connection#request method in the AWS-S3 library for inspiration. Also, let me know if you get it working and I’ll put it in the next version of the book.

Deleting an object

The Problem

You have an object that you want to delete.

The Solution

If you are using the AWS/S3 library, use S3Object.delete(object_key, bucket_name):

    $> s3sh
    >> S3Object.delete('object.rb', 'spattentemp')

To do this by hand, make a DELETE request to the object’s URL:

    $> s3lib
    >> S3Lib.request(:delete, 'spattentemp/object.rb')

Discussion

A delete request should be idempotent, so you can delete an object over and over without raising an error. This also means that S3 will not tell you that the object you tried to delete does not exist. This is not true for buckets.

Copying an object

The Problem

You have an object in one bucket, and you want to copy it, either to another object in the same bucket or to another bucket. You don’t want to spend the time and/or money downloading the object and uploading it again.

The Solution

Use the object copy functionality of S3. This allows you to copy an object without downloading the original file and re-uploading it to S3. To make a copy of an object, you make a PUT request to the new object, just as if you were creating it normally. Instead of uploading the contents of the object in the body of the request, you add an x-amz-copy-source header to the request, with the URL of the object you want to copy.

The following command will copy the code/sync_directory.rb object in the amazon_s3_and_ec2_cookbook bucket to the sync_directory_copy.rb object in the spatten_test_bucket bucket.

    $> s3lib
    >> S3Lib.request(:put, 'spatten_test_bucket/sync_directory_copy.rb',
         'x-amz-copy-source' => 'amazon_s3_and_ec2_cookbook/code/sync_directory.rb')

When you copy an object, the object’s metadata is copied by default (see below for information on changing that). The grants on the object, however, are not. They are set to private unless you include a canned ACL along with the PUT request:

    >> S3Lib.request(:put, 'spatten_test_bucket/sync_directory_copy.rb',
         'x-amz-copy-source' => 'amazon_s3_and_ec2_cookbook/code/sync_directory.rb',
         'x-amz-acl' => 'public-read')

The x-amz-metadata-directive header determines whether or not metadata is copied to the new object. The two legal values are COPY and REPLACE. The default is COPY.

Conditional Copying

There are four additional headers that can be used to copy an object conditionally.

x-amz-copy-source-if-match

You provide an etag, and the copy will only happen if the etag matches the etag of the source object.

x-amz-copy-source-if-none-match

You provide an etag, and the copy will only happen if the etag does not match the etag of the source object.

x-amz-copy-source-if-unmodified-since

You provide a date in the correct format (see below) and the copy will only happen if the object has not been modified since the given date.

x-amz-copy-source-if-modified-since

You provide a date in the correct format (see below) and the copy will only happen if the object has been modified since the given date.

Some of the conditional copy headers can be used in pairs. The valid pairs are x-amz-copy-source-if-match and x-amz-copy-source-if-unmodified-since or x-amz-copy-source-if-none-match and x-amz-copy-source-if-modified-since.

If any of the conditions is not met, S3 will return a 412 Precondition Failed response code.
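The pairing rule is easy to get wrong, so here is a small sketch that checks a hash of request headers against it before you send anything. It assumes that any combination other than a single conditional header or one of the two pairs named above is not allowed. The constants and the valid_conditional_headers? method are names invented for this example.

```ruby
# The two pairs of conditional copy headers that may be used together.
VALID_PAIRS = [
  %w[x-amz-copy-source-if-match x-amz-copy-source-if-unmodified-since],
  %w[x-amz-copy-source-if-none-match x-amz-copy-source-if-modified-since]
].freeze

CONDITIONAL_HEADERS = VALID_PAIRS.flatten.freeze

# Hypothetical check: true if the headers hash uses no conditional header,
# exactly one, or one of the valid pairs. Other headers are ignored.
def valid_conditional_headers?(headers)
  used = headers.keys & CONDITIONAL_HEADERS
  return true if used.size <= 1
  VALID_PAIRS.any? { |pair| pair.sort == used.sort }
end

headers = {
  'x-amz-copy-source' => 'amazon_s3_and_ec2_cookbook/code/sync_directory.rb',
  'x-amz-copy-source-if-match' => '"4e949f634e17e26cbdeed0db686fb276"',
  'x-amz-copy-source-if-unmodified-since' => '2002-10-09T19:00:00Z'
}
puts valid_conditional_headers?(headers)
```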

Note

The dates for the x-amz-copy-source-if-unmodified-since and x-amz-copy-source-if-modified-since headers must be in the format specified in http://www.w3.org/TR/xmlschema-2/#dateTime.

If you keep things simple and use Coordinated Universal Time (UTC), then the format is yyyy-mm-ddThh:mm:ssZ. For example, October 9th, 2002 at 7:00 PM UTC is represented as 2002-10-09T19:00:00Z.
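In Ruby, a Time object can be turned into that form with strftime. The sketch below converts to UTC first so that the trailing Z is truthful. The copy_source_timestamp method is just an illustrative name.

```ruby
# Hypothetical helper: format a Time as yyyy-mm-ddThh:mm:ssZ in UTC.
# getutc returns a new Time, so the argument itself is left untouched.
def copy_source_timestamp(time)
  time.getutc.strftime('%Y-%m-%dT%H:%M:%SZ')
end

# October 9th, 2002 at 7:00 PM UTC
puts copy_source_timestamp(Time.utc(2002, 10, 9, 19, 0, 0))

# The same instant, expressed as noon in a zone seven hours behind UTC
puts copy_source_timestamp(Time.new(2002, 10, 9, 12, 0, 0, '-07:00'))
```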

Discussion

If you are using the AWS/S3 library, then you might have noticed the S3Object.copy method. This, at the time of writing, doesn’t use the copy functionality, but does a full download and upload instead (the copy object functionality is pretty new, so this may change by the time you read this). Here are two methods that you can add to the S3Object class to take care of copying to another bucket:

Example 3.17. s3object.rb - additions to AWS::S3::S3Object <<(code/copying_an_object_recipe/s3object.rb)

These two methods are used to synchronize two buckets in “Synchronizing two buckets”.

You can also use the copy functionality to rename objects. Just copy the object to another object in the same bucket, and then delete the original object.
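A rename along those lines is a copy followed by a delete of the original object. Here is a sketch of that sequence. So that it can be tried without talking to S3, it takes the thing that makes requests as an argument: anything that responds to call(verb, path, headers). Both rename_object and that calling convention are assumptions made for this example, modelled on the S3Lib.request calls shown in this book.

```ruby
# Hypothetical helper: rename an object by copying it to the new key and
# then deleting the original.
def rename_object(requester, bucket, old_key, new_key)
  source = "#{bucket}/#{old_key}"
  destination = "#{bucket}/#{new_key}"
  requester.call(:put, destination, { 'x-amz-copy-source' => source })
  requester.call(:delete, source, {})
end

# A fake requester that just records what it was asked to do.
recorded = []
recorder = lambda { |verb, path, headers| recorded << [verb, path, headers] }

rename_object(recorder, 'spatten_test_bucket', 'old_name.rb', 'new_name.rb')
recorded.each { |request| p request }
```

Remember that the grants on the original object are not copied, so a renamed object ends up private unless you add a canned ACL to the PUT request.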

Downloading a File From S3

The Problem

You have a file stored on S3. You want it on your hard drive. Stat!

The Solution

To get the value of an object on S3, you make an authenticated GET request to that object’s URL. Using the AWS::S3 library, you can use the S3Object::value class method to get the value of an object. The S3Object::value method takes as its arguments the key and bucket of the object:

    S3Object.value(key, bucket)

Once you have read an object’s value, you can write the value to disk. Here’s a script to download an object and write its value to a file:

Example 3.18. download_object <<(code/downloading_a_file_from_s3_recipe/download_object.rb)

Here it is in action:

    $> ./code/s3_code/download_object spatten_test_bucket viral_marketing.txt ~/viral_marketing.txt
    $> more ~/viral_marketing.txt
    Feel free to pass this around!

Here’s the same thing, making the GET request by hand using S3Lib

Example 3.19. download_object_by_hand <<(code/downloading_a_file_from_s3_recipe/download_object_by_hand.rb)

Discussion

A more ‘Unixy’ way of doing this would be to have the download_object script output the value of the object to STDOUT. You could then redirect the output to wherever you want. This makes the script simpler, too, so it’s all good.

Example 3.20. download_object_unixy

    #!/usr/bin/env ruby

    require 'rubygems'
    require 'aws/s3'
    include AWS::S3

    # Usage: download_object <bucket> <key>
    # Downloads the object with a key of key in the bucket named bucket and
    # writes its value to STDOUT.
    bucket, key = ARGV

    AWS::S3::Base.establish_connection!(
      :access_key_id => ENV['AMAZON_ACCESS_KEY_ID'],
      :secret_access_key => ENV['AMAZON_SECRET_ACCESS_KEY']
    )

    # print, unlike puts, never appends a newline, so binary objects
    # come through unchanged.
    print S3Object.value(key, bucket)

Without redirection, it will just output the contents of the object:

    $> download_object spatten_test_bucket viral_marketing.txt
    Feel free to pass this around!
    /Users/spatten/book

You can also redirect the output to a file:

    $> download_object spatten_test_bucket viral_marketing.txt > ~/viral_marketing.txt
    $> more ~/viral_marketing.txt
    Feel free to pass this around!

The solutions in this recipe will fail for large files, as you’re loading the whole file into memory before doing anything with it. This is solved in the next recipe, “Streaming a File From S3”.

Streaming a File From S3

The Problem

You have a large file stored on S3, and you need to get it on your hard drive. The file is too large to just download in one big chunk.

The Solution

If you are using the AWS-S3 library, use the S3Object::stream method to stream the file down to your computer in chunks. Output each chunk to STDOUT, and then redirect the output to a file.

Example 3.21. stream_object <<(code/streaming_a_file_from_s3_recipe/stream_object.rb)

Here it is in action:

    $> ./stream_object spatten_music 'children of the CPU/firefly.mp3' > ~/firefly.mp3
    $> open ~/firefly.mp3
    $> commence_grooving

(The last two commands will only work if you are on a Mac.)

Discussion

Note that the Ruby print command is used here, rather than puts. Unlike puts, print doesn’t append a newline to its output. An extra newline after each chunk would wreak havoc on any binary file you were streaming down.
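The difference is easy to check for yourself. This little sketch writes the same data to an in-memory StringIO with each method and looks at what actually got written. The written_by method is a helper made up for the demonstration.

```ruby
require 'stringio'

# Hypothetical helper: write data to an in-memory IO using the given
# method (:puts or :print) and return exactly what was written.
def written_by(method_name, data)
  out = StringIO.new
  out.public_send(method_name, data)
  out.string
end

p written_by(:puts, 'abc')
p written_by(:print, 'abc')
```

With puts, every chunk that doesn’t already end in a newline gains one extra byte, which is enough to corrupt an MP3 or any other binary file.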

Firefly, by Children of the CPU, is available online for free, and well worth the download if you like mellow, poppy, electronica: http://www.childrenofthecpu.com/music/firefly-rm.mp3

If you are not using the AWS/S3 library, you can download a file in chunks using a Ranged Get, as explained in “Streaming a file from S3 by hand”

Streaming a file from S3 by hand

The Problem

You have a large file stored on S3, and you need to get it on your hard drive. The file is too large to just download in one big chunk. You aren’t using the AWS/S3 library, so you’ll have to do it the hard way.

The Solution

If you are not using the AWS-S3 library, then you can do it by hand by making a Ranged GET request. This allows you to download only part of a file. To use it, make a normal GET request to download a file, and add a Range header to it of the form:

    Range: bytes=<lower_byte>-<upper_byte>

For example, the following command will download bytes 1024 to 2048 (inclusive) of the object with a key of clapyourhandssayyeah/underwater.mp3 in the spatten_music bucket:

    $> irb -r s3lib
    >> S3Lib.request(:get, "/spatten_music/clapyourhandssayyeah/underwater.mp3",
         "Range" => "bytes=1024-2048")

The response will contain a content-range header that tells you which bytes have been downloaded and what the total size of the file is. Both ends of the range are inclusive, so a request for bytes 1024 to 2048 returns 1025 bytes:

    $> irb -r s3lib
    >> request = S3Lib.request(:get, "/spatten_music/clapyourhandssayyeah/underwater.mp3",
                               "Range" => "bytes=1024-2048")
    => #<StringIO:0x11be0d0>
    >> puts request.meta['content-range']
    bytes 1024-2048/5099633
    >> puts request.meta['content-length']
    1025

If you make a request where the upper_byte is larger than the file size, then the response will be truncated to the actual file size.

    >> request = S3Lib.request(:get,
                               "/spatten_music/clapyourhandssayyeah/underwater.mp3",
                               "Range" => "bytes=0-10000000")
    => #<File:/var/folders/MQ/MQLzTKxvF0S+-qIlPJ4yxE+++TM/-Tmp-/open-uri.2861.0>
    >> puts request.meta['content-range']
    bytes 0-5099632/5099633
    => nil
    >> puts request.meta['content-length']
    5099633

If the lower_byte is larger than the file size, then you will get an InvalidRange error:

    >> request = S3Lib.request(:get,
                               "/spatten_music/clapyourhandssayyeah/underwater.mp3",
                               "Range" => "bytes=10000000-10000001")
    S3Lib::S3ResponseError: 416 Requested Range Not Satisfiable
    amazon error type: InvalidRange

Let’s put that together into a script that will stream a file down in megabyte-sized chunks:

Example 3.22. streaming_download_by_hand

    #!/usr/bin/env ruby

    require 'rubygems'
    require 's3lib'

    CHUNK_SIZE = 1024 * 1024 # size of a chunk in bytes

    # Usage: streaming_download_by_hand bucket key [file]
    # If file is omitted, it will be the same as key

    bucket, key, file = ARGV
    file ||= key
    url = File.join(bucket, key)

    # Ask S3 for the object's size up front with a HEAD request
    file_size = S3Lib.request(:head, url).meta['content-length'].to_i

    # Open in binary mode so the bytes are written exactly as received
    File.open(file, 'wb') do |output|
      chunk_start = chunk_end = 0
      # The last valid byte is file_size - 1, so stop once chunk_start reaches file_size
      while chunk_start < file_size
        # Range ends are inclusive; S3 truncates a range that runs past the end
        chunk_end = chunk_start + CHUNK_SIZE
        chunk_end = file_size if chunk_end > file_size
        puts "Getting bytes #{chunk_start} - #{chunk_end} of #{file_size} " +
             "(#{"%.0f" % (100.0 * chunk_end / file_size) }%)"
        request = S3Lib.request(:get, url,
                                'Range' => "bytes=#{chunk_start}-#{chunk_end}")
        output.write request.read
        chunk_start = chunk_end + 1
      end
    end

Here it is downloading a file:

    $> ruby streaming_download_by_hand spatten_music \
         clapyourhandssayyeah/underwater.mp3 underwater.mp3
    Getting bytes 0 - 1048576 of 5099633 (21%)
    Getting bytes 1048577 - 2097153 of 5099633 (41%)
    Getting bytes 2097154 - 3145730 of 5099633 (62%)
    Getting bytes 3145731 - 4194307 of 5099633 (82%)
    Getting bytes 4194308 - 5099633 of 5099633 (100%)
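Because both ends of a Range header are inclusive, the script above fetches CHUNK_SIZE + 1 bytes per request and relies on S3 to truncate the final range. If you would rather ask for exactly CHUNK_SIZE bytes at a time, the ranges can be worked out up front. This is a minimal sketch of that variation; chunk_ranges is a hypothetical helper, not part of s3lib.

```ruby
# Split a file of file_size bytes into inclusive [first, last] byte ranges
# that each cover at most chunk_size bytes.
def chunk_ranges(file_size, chunk_size)
  ranges = []
  first = 0
  while first < file_size
    # The last valid byte index is file_size - 1.
    last = [first + chunk_size - 1, file_size - 1].min
    ranges << [first, last]
    first = last + 1
  end
  ranges
end

chunk_ranges(5_099_633, 1024 * 1024).each do |first, last|
  puts "bytes=#{first}-#{last} (#{last - first + 1} bytes)"
end
```

Each pair can be dropped straight into a Range header, and the sizes of all the ranges add up to the size of the file.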

Discussion

I thought it would be easier to get the file size from the file’s metadata with a HEAD request, rather than grabbing it from the content-range header in the response. If you want to avoid the extra call to S3, you could parse the content-range header of the first chunk to get the file size instead.
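Parsing the header is not much work. Here is a minimal sketch that handles values of the form shown earlier in this recipe (bytes first-last/total); parse_content_range is a hypothetical helper name, and any value that does not have that shape returns nil.

```ruby
# Parse a content-range value such as "bytes 1024-2048/5099633".
# Returns [first_byte, last_byte, total_size] as integers, or nil if the
# value does not have that shape.
def parse_content_range(value)
  match = /\Abytes (\d+)-(\d+)\/(\d+)\z/.match(value.to_s.strip)
  return nil unless match
  match.captures.map(&:to_i)
end

first, last, total = parse_content_range('bytes 1024-2048/5099633')
puts "got bytes #{first}-#{last}; the whole file is #{total} bytes"
```

In the download script, you would call this on the content-range header of the first chunk and use the third value as the file size.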

I wrote directly to a file in this example, but you could just as easily write to STDOUT and redirect the results to a file. If you do this, make sure to print the diagnostic output to STDERR.

Note that the streaming download not only allows you to download large files without loading them into memory, it also lets you pause and resume downloads, since every request asks for an explicit range of bytes.
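To resume a download, all you need to know is how many bytes have already been written locally. The following is a sketch under that assumption; resume_range is a hypothetical helper, and the partial file is simulated with a temporary file rather than a real interrupted download.

```ruby
require 'tempfile'

# Build the Range header value needed to resume a download into path.
# Returns nil when the local file is already complete.
def resume_range(path, file_size)
  offset = File.size?(path) || 0 # File.size? is nil for missing or empty files
  return nil if offset >= file_size
  "bytes=#{offset}-#{file_size - 1}"
end

# Simulate an interrupted download: 1,000 bytes of a 5,099,633 byte file.
Tempfile.create('partial') do |partial|
  partial.binmode
  partial.write("\0" * 1000)
  partial.flush
  puts resume_range(partial.path, 5_099_633) # bytes=1000-5099632
end
```

When resuming for real, open the local file in append mode ('ab') so the new bytes land after the ones you already have.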

Adding metadata to an object

The Problem

You have an object that you want to add metadata to.

The Solution

Let’s say you have a picture and you want to record who took the picture and who is in it. You can do this by adding metadata to the object.

Using AWS/S3, you do this with the S3Object.metadata method.

    >> b = Bucket.find('spatten_s3demo')
    >> vamp = b['vampire.jpg']
    >> vamp.metadata
    => {}
    >> vamp.metadata['subject'] = 'Claire'
    => "Claire"
    >> vamp.metadata['photographer'] = 'Nadine Inkster'
    => "Nadine Inkster"
    >> vamp.store

To do it by hand, add x-amz-meta headers to the PUT request when you are creating (or re-creating) the object. For example, to add ‘photographer’ metadata, add an x-amz-meta-photographer header.

    $> s3lib
    >> S3Lib.request(:put, 'spatten_s3demo/vampire.jpg',
                     'x-amz-meta-photographer' => 'Nadine Inkster',
                     'x-amz-meta-subject' => 'Claire',
                     :body => File.read('vampire.jpg'),
                     'x-amz-acl' => 'public-read',
                     'content-type' => 'image/png')
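If you have more than a couple of metadata values, you may prefer to build the headers from a plain hash. This is a minimal sketch; metadata_headers is a hypothetical helper and is not part of s3lib.

```ruby
# Turn a hash of user-defined metadata into x-amz-meta-* request headers.
def metadata_headers(metadata)
  metadata.each_with_object({}) do |(name, value), headers|
    headers["x-amz-meta-#{name.to_s.downcase}"] = value.to_s
  end
end

headers = metadata_headers(photographer: 'Nadine Inkster', subject: 'Claire')
headers.each { |name, value| puts "#{name}: #{value}" }
```

The resulting hash can then be merged with the :body, x-amz-acl and content-type parameters before making the PUT request.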

Warning

Note that you are actually re-creating the object on S3. In the AWS/S3 example, you have to actually store the object after adding the metadata. When doing it by hand, you have to make sure to maintain any permissions and the content type while PUTting it. This can be quite annoying if it’s a large file, but there’s no way around it.

This is not a limitation of a RESTful architecture; it’s a limitation of how S3’s REST interface is designed. One way to fix this would be to add a metadata sub-resource that you could make PUT requests to without having to upload the whole darn object again.

Discussion

To learn how to read the metadata of an object, see “Reading an object’s metadata”.

Reading an object’s metadata

The Problem

You want to read an object’s metadata.

The Solution

Using AWS/S3, you get the object and then use S3Object.metadata:

    >> vamp = S3Object.find('vampire.jpg', 'spatten_s3demo')
    >> vamp.metadata
    => {"x-amz-meta-subject"=>"Claire", "x-amz-meta-photographer"=>"Nadine Inkster"}

This will return the user-defined metadata. If you are interested in other headers like the content-type or last-modified, then you can either use S3Object.about or request those parameters directly:

    >> vamp.about
    => {"last-modified"=>"Sat, 13 Sep 2008 19:23:14 GMT",
        "x-amz-id-2"=>"r4zDmi3tEfeKLPWGjFvHGp1fQAJaGrugBy+Drti9sOwyDcsCuCC/DRLExWtqK4DC",
        "content-type"=>"image/png",
        "etag"=>"\"8e1644a01eb323d2c5d65f6749008dae\"",
        "date"=>"Sat, 13 Sep 2008 19:32:55 GMT",
        "x-amz-request-id"=>"B71878D7DF70FA7F",
        "server"=>"AmazonS3",
        "content-length"=>"10817"}
    >> vamp.last_modified
    => Sat Sep 13 19:23:14 UTC 2008
    >> vamp.content_type
    => "image/png"

To get the metadata by hand, make a HEAD request to the object’s URL, and then call .meta on the response:

    $> s3lib
    >> response = S3Lib.request(:head, 'spatten_s3demo/vampire.jpg')
    >> response.meta
    => {"last-modified"=>"Sat, 13 Sep 2008 19:23:14 GMT",
        "x-amz-id-2"=>"Kv8TJVXxkof6Wg7O6tiBSIRfgxnaX02oEBUVUhDGx3MUnKySewU4DdNXXJt3LzIF",
        "date"=>"Sat, 13 Sep 2008 19:36:28 GMT",
        "etag"=>"\"8e1644a01eb323d2c5d65f6749008dae\"",
        "content-type"=>"image/png",
        "x-amz-request-id"=>"9B2C2AC6D5F59F79",
        "x-amz-meta-subject"=>"Claire",
        "x-amz-meta-photographer"=>"Nadine Inkster",
        "server"=>"AmazonS3",
        "content-length"=>"10817"}

The user-defined metadata is all metadata with headers that start with x-amz-meta-.
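Picking the user-defined metadata out of a set of response headers is then just a matter of filtering on that prefix. Here is a minimal sketch; user_metadata is a hypothetical helper, and the hash is a trimmed copy of the HEAD response for vampire.jpg.

```ruby
# Pick the user-defined metadata out of a hash of response headers and
# strip the x-amz-meta- prefix from each name.
def user_metadata(headers)
  prefix = 'x-amz-meta-'
  headers.each_with_object({}) do |(name, value), metadata|
    next unless name.downcase.start_with?(prefix)
    metadata[name[prefix.length..-1]] = value
  end
end

# A trimmed copy of the HEAD response for vampire.jpg.
response_meta = {
  'content-type'            => 'image/png',
  'x-amz-request-id'        => '9B2C2AC6D5F59F79',
  'x-amz-meta-subject'      => 'Claire',
  'x-amz-meta-photographer' => 'Nadine Inkster',
  'content-length'          => '10817'
}

user_metadata(response_meta).each { |name, value| puts "#{name}: #{value}" }
```

Note that x-amz-request-id is left out: it starts with x-amz- but not with x-amz-meta-, so it is not user-defined metadata.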

Discussion

To find out how to set your own metadata, see “Adding metadata to an object”.

Understanding access control policies

The Problem

You want to understand how giving and removing permissions to read and write your objects and buckets works.

The Solution

You need to learn all about the wonderful world of Access Control Policies (ACPs), Access Control Lists (ACLs) and Grants.

Both buckets and objects have Access Control Policies (ACPs). An Access Control Policy defines who can do what to a given bucket or object. ACPs are built from a list of grants on that object or bucket. Each grant gives a specific user or group of users (the grantee) a permission on that bucket or object. Grants can only give access; they cannot take it away. An object or bucket without any grants on it can be neither read nor written.

Warning

The nomenclature is a bit confusing here. You’ll see references to both Access Control Policies (ACPs) and Access Control Lists (ACLs). They’re pretty much synonymous. If it helps, you can think of the Access Control Policy as being a more over-arching concept, and the Access Control List as the implementation of that concept. Really, though, they’re interchangeable.

To avoid writing ‘bucket or object’ over and over in this recipe, I’m going to use resource to refer to both buckets and objects.

Grants

An Access Control List is made up of one or more Grants. A grant gives a user or group of users a specific permission. It looks like this:

    <Grant>
      <Grantee xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
               xsi:type='grant_type'>
        ... info on the grantee ...
      </Grantee>
      <Permission>permission_type</Permission>
    </Grant>

The permission_type, grant_type and the information on the grantee are explained in detail below.

Grant Permissions

A grant can give one of five different permissions to a resource. The permissions are READ, WRITE, READ_ACP, WRITE_ACP and FULL_CONTROL.

Table 3.1. Grant Permission Types

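| Type | Bucket | Object |
|------|--------|--------|
| READ | List bucket contents | Read an object’s value and metadata |
| WRITE | Create, overwrite or delete an object in the bucket | Not supported for objects |
| READ_ACP | Read the ACL for a bucket or object. The owner of a resource has this permission without needing a grant. | Read the ACL for a bucket or object. The owner of a resource has this permission without needing a grant. |
| WRITE_ACP | Write the ACL for a bucket or object. The owner of a resource has this permission without needing a grant. | Write the ACL for a bucket or object. The owner of a resource has this permission without needing a grant. |
| FULL_CONTROL | Equivalent to giving READ, WRITE, READ_ACP and WRITE_ACP grants on this resource. | Equivalent to giving READ, WRITE, READ_ACP and WRITE_ACP grants on this resource. |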

The XML for a permission looks like this:

    <Permission>READ</Permission>

READ is replaced by whatever permission type you are granting.
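Putting the grant template and the five permission types together, here is a minimal sketch of a helper that fills in the XML for a single grant. The grant_xml name is hypothetical, and the grantee arguments are left as the same placeholders used in the template, since the grantee types are explained later in this recipe.

```ruby
# Build the XML for a single grant. grantee_xml is the already-formatted
# "info on the grantee" that goes inside the <Grantee> element.
def grant_xml(grant_type, grantee_xml, permission)
  permissions = %w[READ WRITE READ_ACP WRITE_ACP FULL_CONTROL]
  unless permissions.include?(permission)
    raise ArgumentError, "unknown permission: #{permission}"
  end

  <<~XML
    <Grant>
      <Grantee xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance'
               xsi:type='#{grant_type}'>
        #{grantee_xml}
      </Grantee>
      <Permission>#{permission}</Permission>
    </Grant>
  XML
end

puts grant_xml('grant_type', '... info on the grantee ...', 'READ')
```

Checking the permission before building the XML means a typo such as ‘READ_ACL’ fails on your machine rather than in a request to S3.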

Grantees

When you create a grant, you must specify who you are granting the permission to. There are currently six different types of Grantees.

Owner

The owner of a resource will always have READ_ACP and WRITE_ACP permissions on that resource. When a resource is created, the owner is given FULL_CONTROL access on the resource using a ‘User by Canonical Representation’ grant (see below). You will never actually create an ‘OWNER’ grant directly; to change the grant of the owner of a resource, create a grant by Canonical Representation.
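The rules so far can be summed up in a few lines of code. This is a simplified model for illustration only, not how S3 implements access control: it identifies users by plain strings, ignores group grantees, and treats FULL_CONTROL as matching every permission. The permitted? method is a hypothetical name.

```ruby
# Does user hold permission on a resource, given its owner and its grants?
# grants is a list of [grantee, permission] pairs.
def permitted?(user, permission, owner, grants)
  # The owner can always read and write the ACL, grant or no grant.
  return true if user == owner && %w[READ_ACP WRITE_ACP].include?(permission)

  # Otherwise access exists only if some grant gives it.
  grants.any? do |grantee, granted|
    grantee == user && (granted == permission || granted == 'FULL_CONTROL')
  end
end

grants = [['claire', 'READ'], ['nadine', 'FULL_CONTROL']]

puts permitted?('claire', 'READ', 'spatten', grants)      # true
puts permitted?('claire', 'WRITE', 'spatten', grants)     # false
puts permitted?('nadine', 'WRITE_ACP', 'spatten', grants) # true
puts permitted?('spatten', 'READ_ACP', 'spatten', [])     # true
puts permitted?('spatten', 'READ', 'spatten', [])         # false
```

The last two lines show the owner’s special case: with no grants at all, the owner can still read the ACL, but cannot read the resource itself.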

User by Email