Table of Contents

- Introduction

- The History of the Raspberry Pi

- Raspberry Pi Versions

- Raspberry Pi Peripherals

- Operating Systems

- Power Up the Pi

- About Prometheus

- About Grafana

- Installation

- Exporters

- Prometheus Collector Configuration

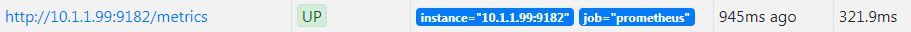

- Adding a monitoring node to Prometheus

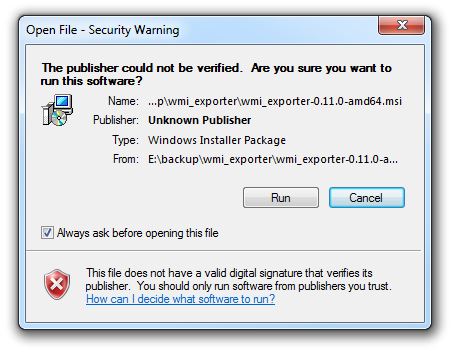

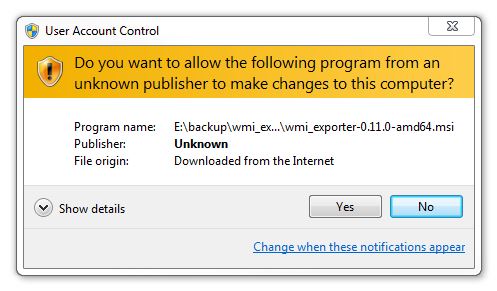

- WMI exporter

- Custom Exporters

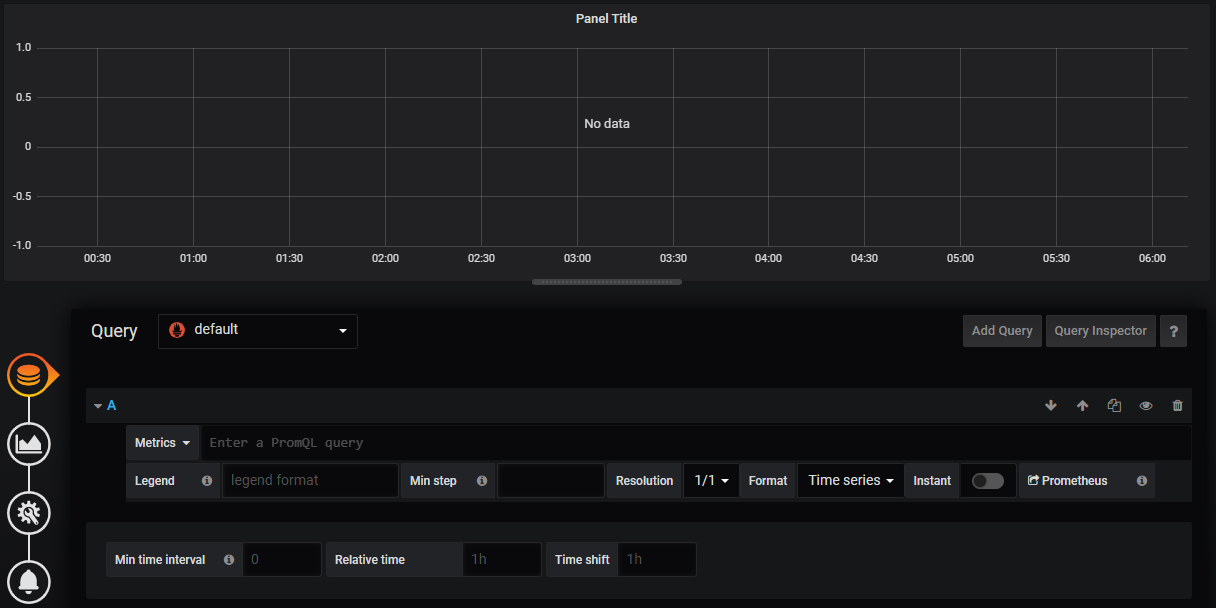

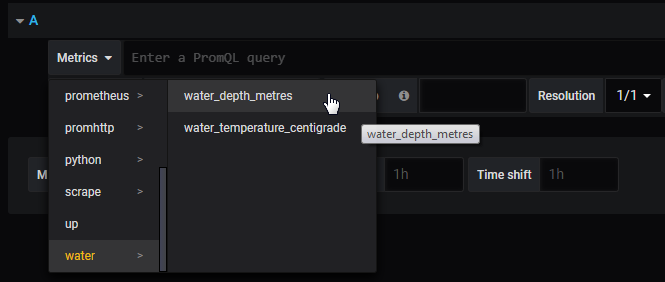

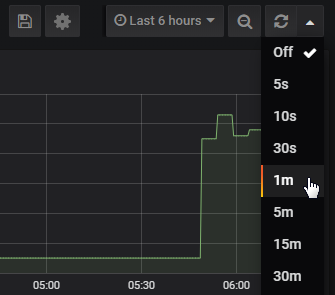

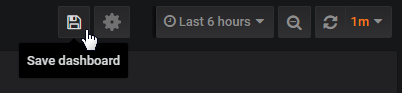

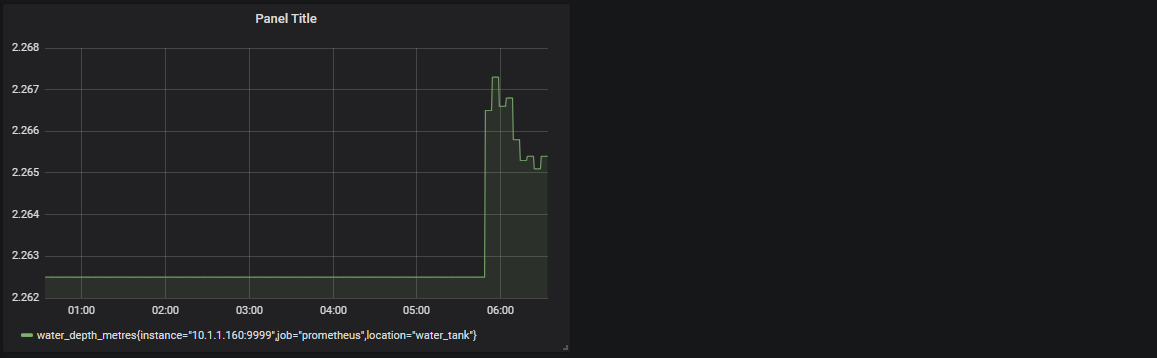

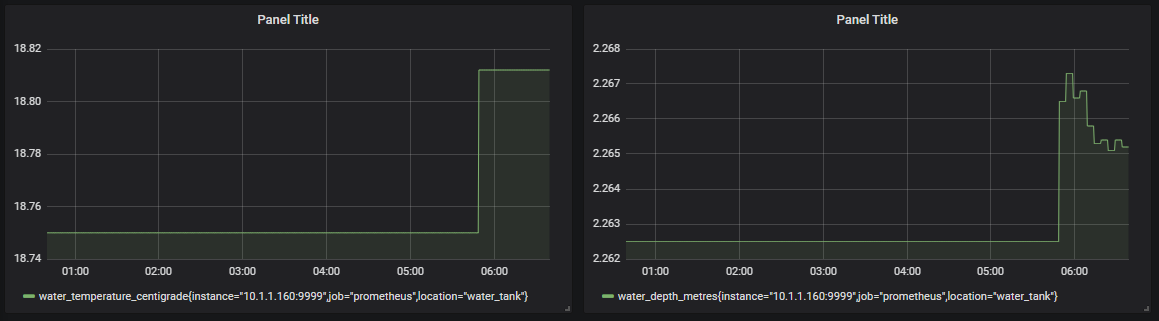

- Dashboards

- Upgrading Prometheus

- Upgrading Grafana

- Prometheus and Grafana Tips and Tricks

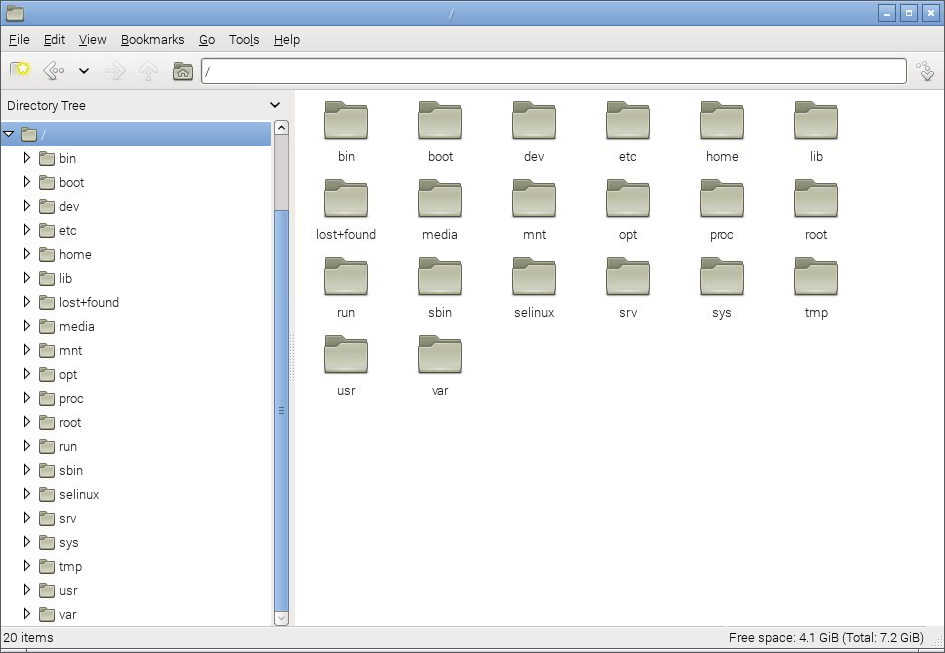

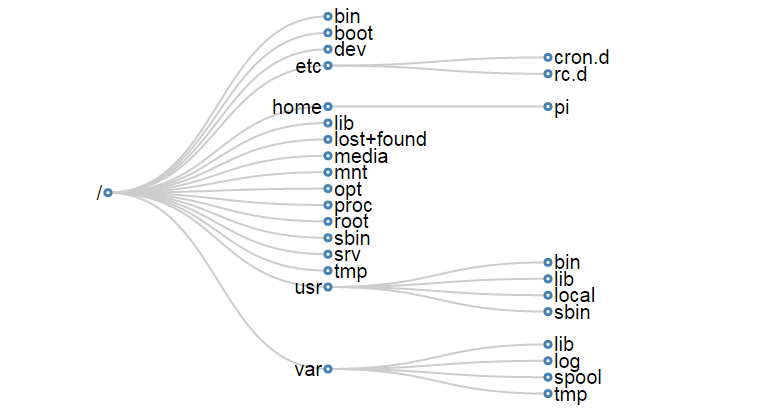

- Linux Concepts

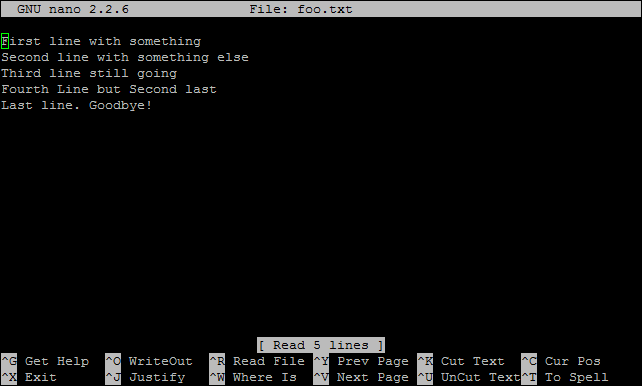

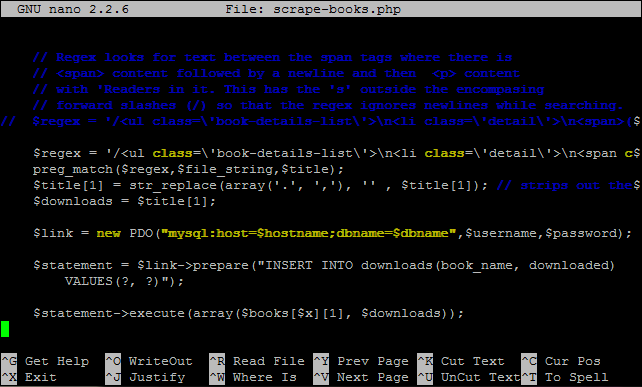

- File Editing

- Linux Commands

- Directory Structure Cheat Sheet

Introduction

Welcome!

Hi there. Congratulations on getting your hands on this book. I hope that you’re excited to learn about installing, configuring and using Prometheus and Grafana on a Raspberry Pi.

This will be a journey of discovery for both of us. By experimenting with computers we will be learning about what is happening on and in your collection of IT devices that you have in your home or business. Others have written many fine words about doing this sort of thing, but I have an ulterior motive. I write books to learn and document what I’ve done. The hope is that by sharing the journey others can learn something from my efforts :-).

Am I ambitious? Maybe :-). But if you’re reading this, I managed to make some headway. I dare say that, like other books I have written (or am currently writing), it will remain a work in progress. They are living documents, open to feedback, comment, expansion, change and improvement. Please feel free to provide your thoughts on ways that I can improve things. Your input would be much appreciated.

You will find that I eschew a simple ‘do this’ approach in favour of more of a storytelling exercise. Some explanations are longer and more flowery than might be to everyone’s liking, but there you go, that’s my way :-).

There’s a lot of information in the book. There’s ‘stuff’ that people with a reasonable understanding of computers will find excessive. Sorry about that. I have gathered a lot of the content from other books I’ve written to create this guide. As a result, it is as full of usable information as possible to help people who may be using the Pi and coding for the first time. Please bear in mind, this is the description of ONE project. I could describe it in 5 pages but I have stretched it out into a lot more. If we need to recreate the project from scratch, this guide will leave nothing out. It will also form a basis for other derivative books (as books before this one have done). As Raspberry Pis and software improve, the descriptions will evolve.

I’m sure most authors try to be as accessible as possible. I’d like to do the same, but be warned… There’s a good chance that if you ask me a technical question I may not know the answer. So please be gentle with your emails :-).

Email: d3noobmail+monitor@gmail.com

What are we trying to do?

Put simply, we are going to examine the wonder that is the Raspberry Pi computer and use it to accomplish something.

In this specific case we will be installing the software ‘stack’ of Prometheus and Grafana so that we can measure and record metrics from a range of devices, sources and services and present them in a really cool and interesting way. I have done something similar to this in the past with an effort at building my own monitoring stack. This is captured in the book ‘PiMetric: Monitoring using a Raspberry Pi’. That was (and in fact still is) a really interesting process for me. But when I started to look at Prometheus and Grafana, I got this uncomfortable feeling that I had been trying to re-invent the wheel. I’m very much looking forward to exploring the range of possibilities of Prometheus and Grafana and ultimately supplanting the function I was searching for with PiMetric!

Along the way we’ll;

- Look at the Raspberry Pi and its history.

- Work out how to get software loaded onto the Pi.

- Learn about networking and configure the Pi accordingly.

- Install and configure our applications.

- Write some code to interface with our monitoring stack.

- Explore just what our system can do for us.

Who is this book for?

You!

By getting hold of a copy of this book you have demonstrated a desire to learn, to explore and to challenge yourself. That’s the most important criterion you will want to have when trying something new. Your experience level comes second to a desire to learn.

It may be useful to be comfortable using the Windows operating system (I’ll be using Windows 7 for the set-up of the devices. Yes, I know that it’s out of support; I’m in the process of changing to a full-time Linux desktop, but I’m not the only person who uses the main computer in the house). You should be aware of Linux as an alternative operating system, but you needn’t have tried it before. Before you learn anything new, it pretty much always appears indistinguishable from magic, but once you start having a play, the mystery falls away.

What will we need?

Well, you could just read the book and learn a bit. By itself that’s not a bad thing, but trust me when I say that actually experimenting with computers is fun and rewarding.

The list below is flexible in most cases and will depend on how you want to measure the values.

- A Raspberry Pi (I’m using a Raspberry Pi 3 Model B+ and a Raspberry Pi 4)

- Probably a case for the Pi

- A MicroSD card

- A power supply for the Pi

- A keyboard and monitor that you can plug into the Pi (there are a few options here, read on for details)

- A remote computer (like your normal desktop PC) that you can use to connect to the Pi. This isn’t strictly necessary, but it makes the experience way cooler.

- An Internet connection for getting and updating the software.

As we work through the book we will be covering off the different aspects required and you should get a good overview of what your options are in different circumstances.

Why on earth did I write this rambling tome?

That’s a really good question. Writing the previous books in this series was an enjoyable process, so I thought that I’d carry on and continue to adapt the book for subsequent projects. This is book five (?, I lose track) in this series, so I suppose it’s a ‘thing’. Will this continue? Who knows, stay tuned…

Included is a bunch of information from my books on the Raspberry Pi and Linux. I hope you find it useful.

Where can you get more information?

The Raspberry Pi as a concept has provided an extensible and practical framework for introducing people to the wonders of computing in the real world. At the same time there has been a boom in the information available to help people use them. The following is a far from exhaustive list of sources, but from my own experience it represents a useful subset of knowledge.

The History of the Raspberry Pi

The story of the Raspberry Pi starts in 2006 at the University of Cambridge’s Computer Laboratory. Eben Upton, Rob Mullins, Jack Lang and Alan Mycroft became concerned at the decline in the volume and skills of students applying to study Computer Science. Typical student applicants did not have a history of hobby programming and tinkering with hardware. Instead they were starting with some web design experience, but little else.

They established that the way that children were interacting with computers had changed. There was more of a focus on working with Word and Excel and building web pages. Games consoles were replacing the traditional hobbyist computer platforms. The era of the Amiga, Apple II, ZX Spectrum and the ‘build your own’ approach was gone. In 2006, Eben and the team began to design and prototype a platform that was cheap, simple and booted into a programming environment. Most of all, the aim was to inspire the next generation of computer enthusiasts to recover the joy of experimenting with computers.

Between 2006 and 2008, they developed prototypes based on the Atmel ATmega644 microcontroller. By 2008, processors designed for mobile devices were becoming affordable and powerful. This allowed the boards to support a graphical environment. They believed this would make the board more attractive for children looking for a programming-oriented device.

Eben, Rob, Jack and Alan then teamed up with Pete Lomas and David Braben to form the Raspberry Pi Foundation. The Foundation’s goal was to offer two versions of the board, priced at US$25 and US$35.

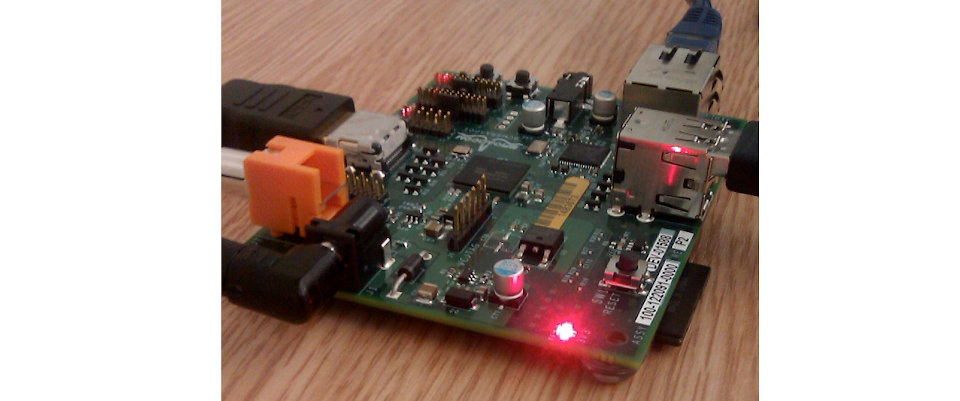

Fifty alpha boards were manufactured in August 2011. These were identical in function to what would become the model B. Assembly of twenty-five model B beta boards occurred in December 2011. These used the same component layout as the eventual production boards.

Interest in the project increased. The beta boards were demonstrated booting Linux, playing a 1080p movie trailer and running benchmarking programs. During the first week of 2012, the first 10 boards were put up for auction on eBay. One was bought anonymously and donated to the museum at The Centre for Computing History in Suffolk, England. While the ten boards together raised over 16,000 Pounds (about $25,000 USD), the last to be auctioned (serial number 01) raised 3,500 Pounds by itself.

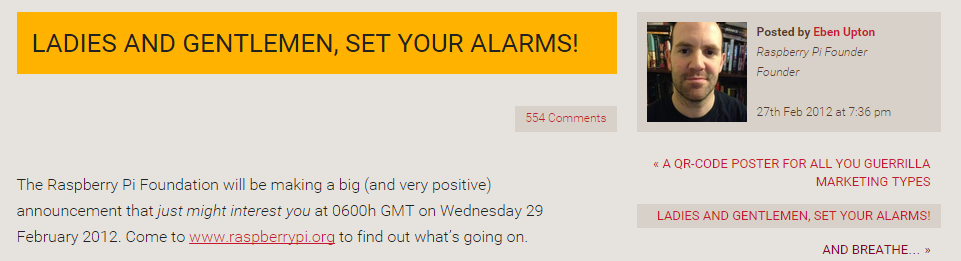

The Raspberry Pi Model B entered mass production with licensed manufacturing deals through element 14/Premier Farnell and RS Components. They started accepting orders for the model B on the 29th of February 2012. It was quickly apparent that they had identified a need in the marketplace. Servers struggled to cope with the load placed by watchers repeatedly refreshing their browsers. The official Raspberry Pi Twitter account reported that Premier Farnell sold out within a few minutes of the initial launch. RS Components took over 100,000 pre-orders on the first day of sales.

Within two years they had sold over two million units.

The lower cost model A went on sale for $25 on 4 February 2013. By that stage the Raspberry Pi was already a hit. Manufacturing of the model B hit 4000 units per day and the amount of on-board RAM had increased to 512MB.

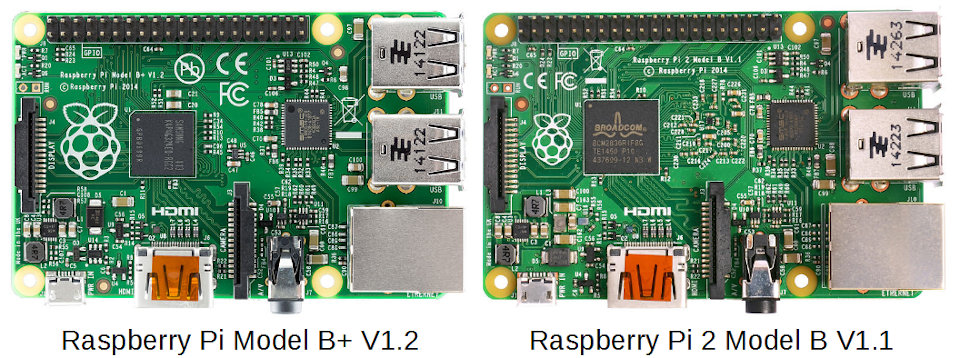

The official Raspberry Pi blog reported that the three millionth Pi shipped in early May 2014. In July of that year they announced the Raspberry Pi Model B+, “the final evolution of the original Raspberry Pi. For the same price as the original Raspberry Pi model B, but incorporating numerous small improvements”. In November of the same year the even lower cost (US$20) A+ was announced. Like the A, it would have no Ethernet port, and just one USB port. But, like the B+, it would have lower power requirements, a micro-SD-card slot and 40-pin HAT compatible GPIO.

On 2 February 2015 the official Raspberry Pi blog announced that the Raspberry Pi 2 was available. It had the same form factor and connector layout as the Model B+. It had a 900 MHz quad-core ARMv7 Cortex-A7 CPU, twice the memory (for a total of 1 GB) and complete compatibility with the original generation of Raspberry Pis.

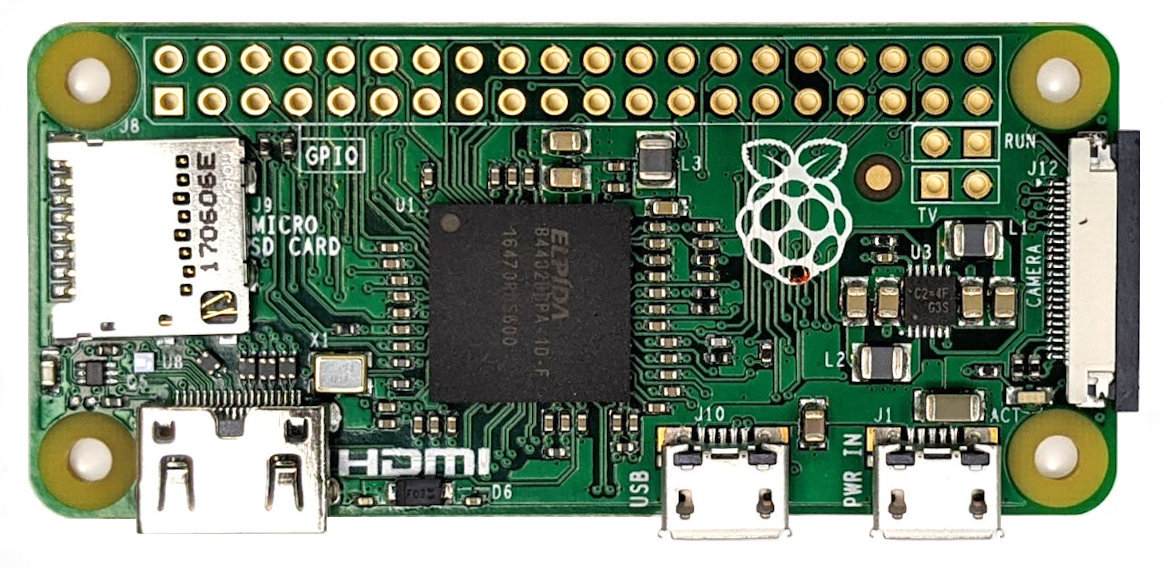

Following a meeting with Eric Schmidt (of Google fame) in 2013, Eben embarked on the design of a new form factor for the Pi. On the 26th of November 2015 the Pi Zero was released.

The Pi Zero is a significantly smaller version of a Pi with similar functionality but with a retail cost of $5. On release it sold out (20,000 units) worldwide in 24 hours and a free copy was affixed to the cover of the MagPi magazine.

The Raspberry Pi 3 was released in February 2016. The most notable change was the inclusion of on-board WiFi and Bluetooth.

In February 2017 the Raspberry Pi Zero W was announced. This device had the same small form factor of the Pi Zero, but included the WiFi and Bluetooth functionality of the Raspberry Pi 3.

On Pi day (the 14th of March (Get it? 3-14?)) in 2018 the Raspberry Pi 3 Model B+ was announced. It included dual band WiFi, upgraded Bluetooth, Gigabit Ethernet and support for a future PoE card. The Ethernet speed was actually limited to 300Mbps since it still needed to operate on a USB 2.0 bus. By this stage there had been over 9 million Raspberry Pi 3s sold and 19 million Pis in total.

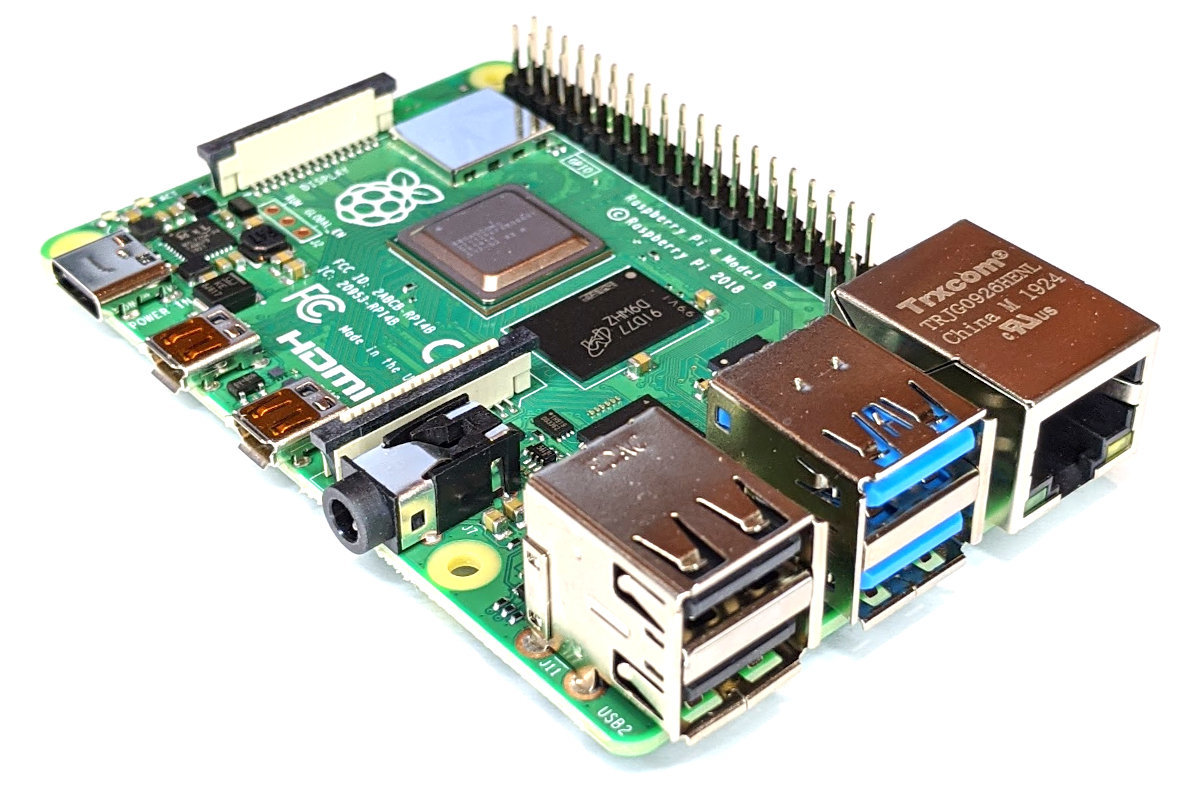

On the 24th of June 2019, the Raspberry Pi 4 was released.

This realised a true Gigabit Ethernet port and a combination of USB 2 and 3 ports. There was also a change in layout of the board with some ports being moved and it also included dual micro HDMI connectors. As well as this, the RPi 4 is available with a wide range of on-board RAM options. Power was now supplied via a USB C port.

A new Raspberry Pi Zero 2 W was released in October 2021. This included a system in a package designed by Raspberry Pi and is capable of running a 64 bit operating system.

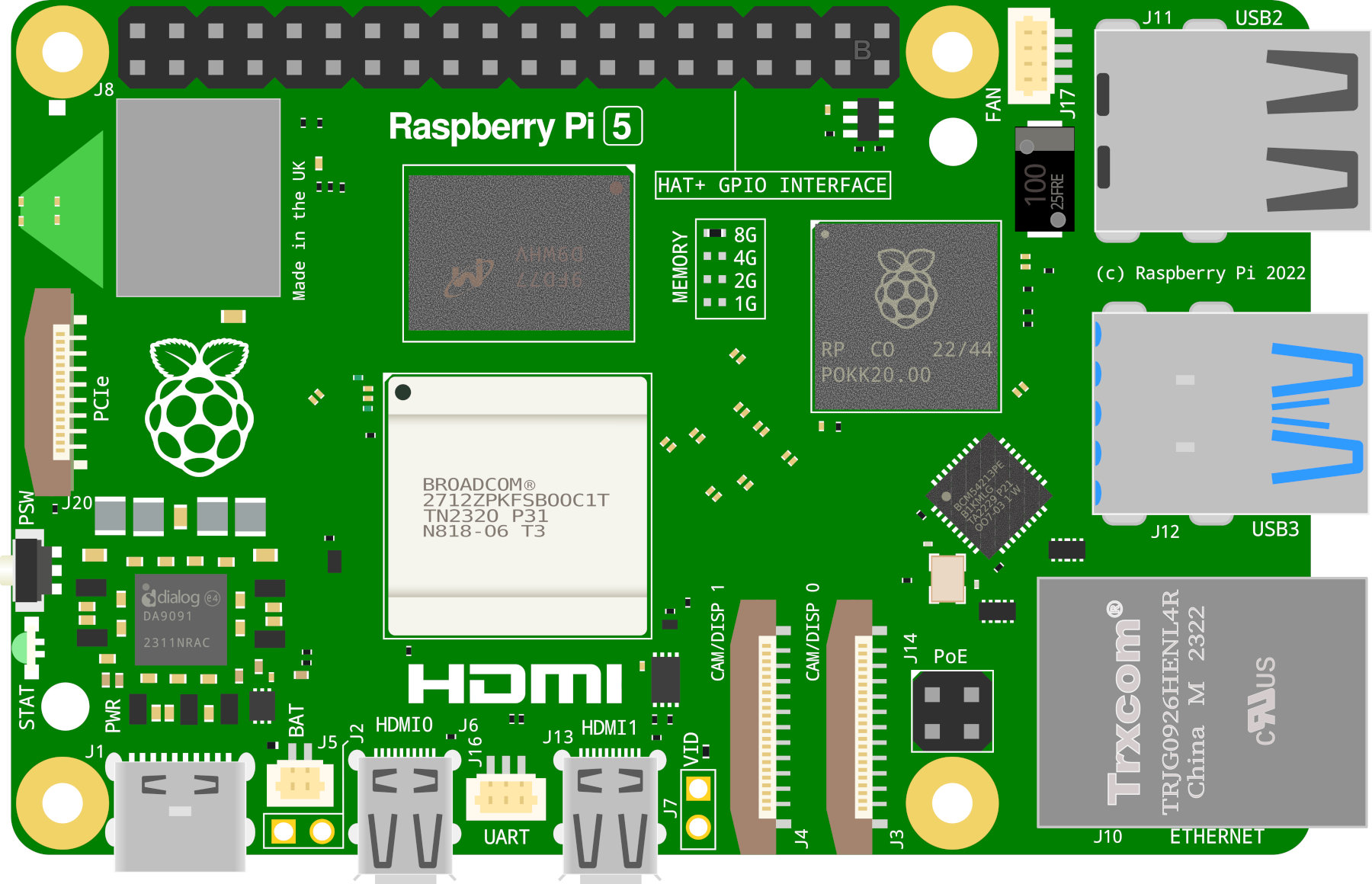

The Raspberry Pi 5 was announced on the 28th of September 2023. It features a custom input / output controller designed by Raspberry Pi and includes a clock speed of 2.4GHz for the 64-bit Cortex-A76 CPU. The dual USB 3.0 ports can now transfer up to 5 Gbps and we can connect two independent 4K 60Hz displays via the micro HDMI ports. There is now an on-board real-time clock and <gasp> a power button!

As of the 28th of February 2022 there had been over 46 million Raspberry Pis (combined) sold.

It would be easy to measure the success of the Raspberry Pi by the number of computer boards sold. Yet this would most likely not be the view of the visionaries who began the journey to develop the boards. Their stated aim was to re-invigorate the desire of young people to experiment with computers and to have fun doing it. We can thus measure their success by the many projects, blogs and updated school curricula that their efforts have produced.

Raspberry Pi Versions

In the words of the totally awesome Raspberry Pi Foundation;

The Raspberry Pi is a low cost, credit-card sized computer that plugs into a computer monitor or TV, and uses a standard keyboard and mouse. It’s capable of doing everything you’d expect a desktop computer to do, from browsing the internet and playing high-definition video, to making spreadsheets, word-processing, playing games and learning how to program in languages like Scratch and Python.

There are (at time of writing) fourteen different models on the market. The A, B, A+, B+, ‘model B 2’, ‘model B 3’, ‘model B 3+’, ‘model B 4’, ‘5’ (which I’m just going to call the B2, B3, B3+, 4 and 5 respectively), ‘model A+’, ‘model A+ 3’, the Zero, Zero W and Zero 2 W. A lot of projects will typically use either the B2, B3, B3+, 4 or 5 for no reason other than they offer a good range of USB ports (4), 1 - 8 GB of RAM, an HDMI video connection (or two) and an Ethernet connection. For all intents and purposes the B2, B3, B3+, 4 and 5 can be used interchangeably for the projects, depending on connectivity requirements, since the B3, B3+, 4 and 5 have WiFi and Bluetooth built in. For size-limited situations or where lower power is an advantage, the Zero, Zero W or Zero 2 W is useful, but we need to cope with reduced connectivity options (a single micro USB connection). The Zero W and Zero 2 W do at least have WiFi and Bluetooth built in. Always aim to use the latest version of the Raspberry Pi OS operating system (or at least one released on or after the 14th of March 2018). For best results browse the ‘Downloads’ page of raspberrypi.com.

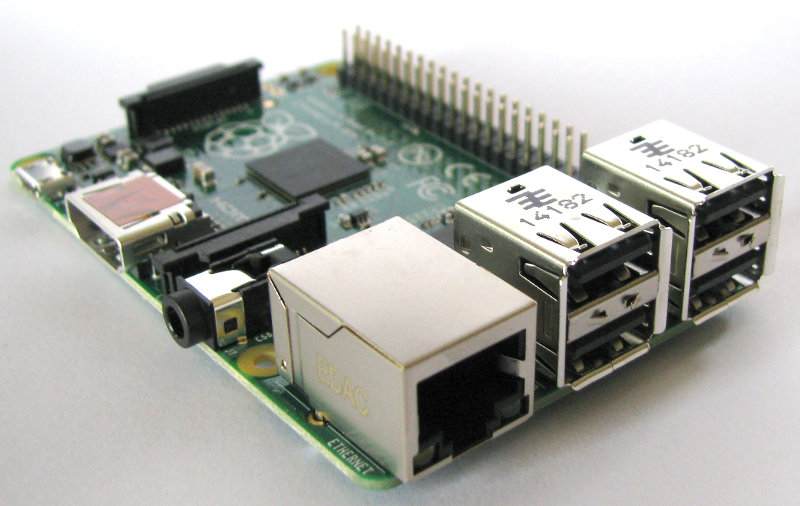

Raspberry Pi B+, B2, B3 and B3+

The model B+, B2, B3 and B3+ all share the same form factor and have been a consistent standard for the layout of connectors since the release of the B+ in July 2014. They measure 85 x 56 x 17mm, weigh 45g and are powered by Broadcom chipsets of varying speeds, numbers of cores and architectures.

USB Ports

They include 4 x USB Ports (with a maximum output of 1.2A)

Video Out

Integrated Videocore 4 graphics GPU capable of playing full 1080p HD video via an HDMI video output connector. HDMI standards rev 1.3 & 1.4 are supported with 14 HDMI resolutions from 640×350 to 1920×1200 plus various PAL and NTSC standards.

Ethernet Network Connection

There is an integrated Ethernet port for network access. On the B+, B2 and B3 the connection speed is Fast Ethernet (10/100 Mbps). The B3+ introduced a Gigabit Ethernet controller, but because it sits on the USB 2.0 bus its throughput is limited to about 300 Mbps.

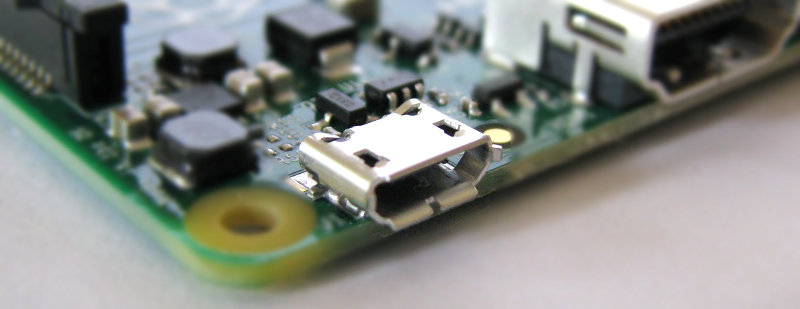

USB Power Input Jack

The boards include a 5V 2A Micro USB Power Input Jack.

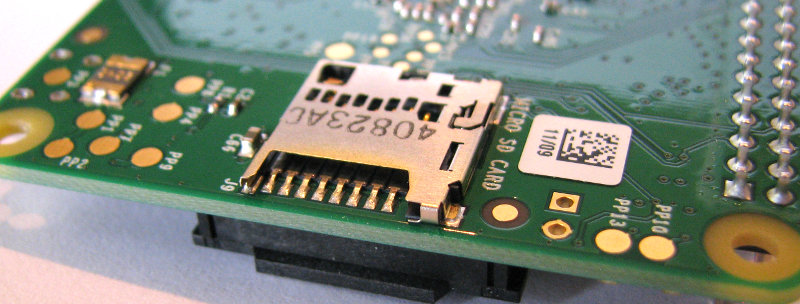

MicroSD Flash Memory Card Slot

There is a microSD card socket on the ‘underside’ of the board. On the Model B2 this is a ‘push-push’ socket. On the B3 and later this is a simple friction fit.

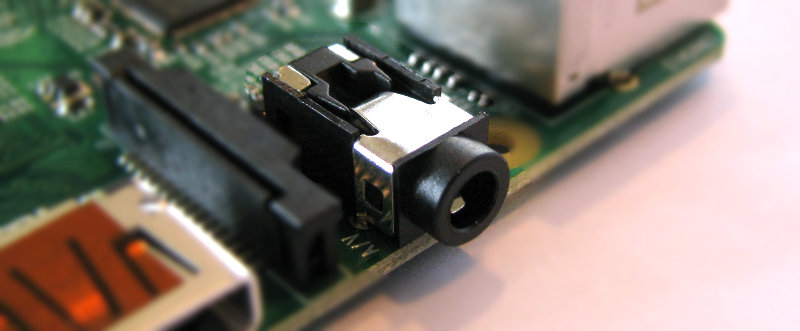

Stereo and Composite Video Output

The B+, B2, B3 and B3+ include a 4-pole (TRRS) type connector that can provide stereo sound if you plug in a standard headphone plug, and composite video output with stereo audio if you use a TRRS adapter.

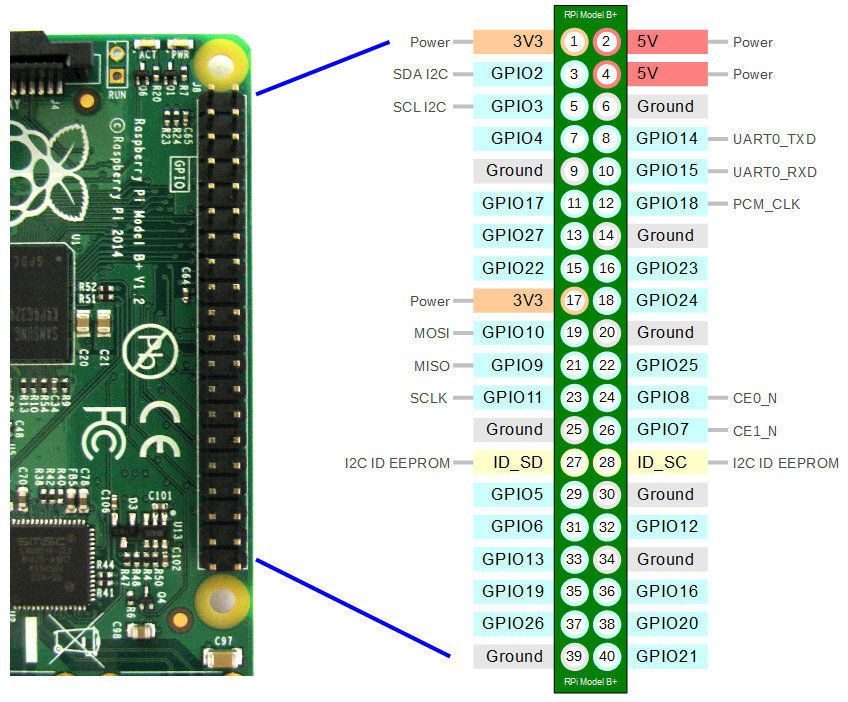

40 Pin Header

The Raspberry Pi B+, B2, B3 and B3+ include a 40-pin, 2.54mm header expansion slot (which allows for peripheral connection and expansion boards).

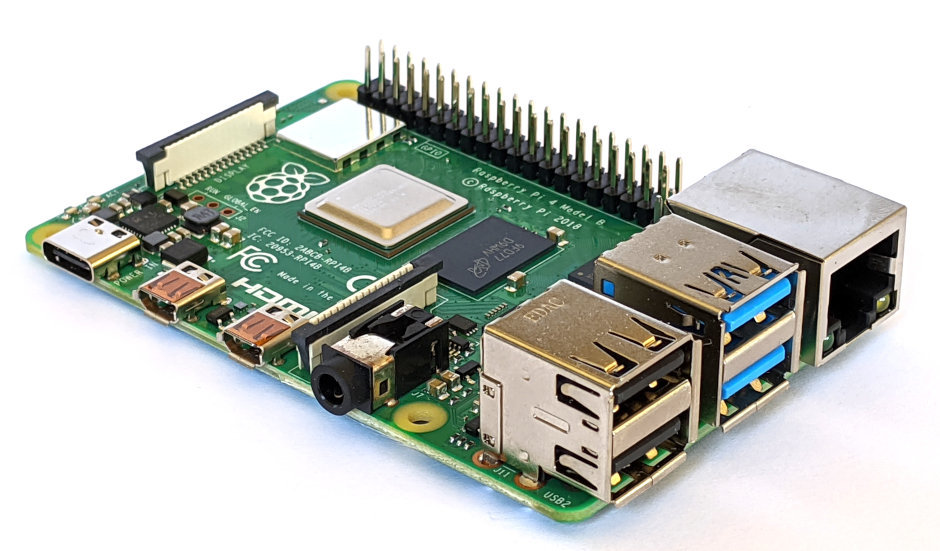

Raspberry Pi 4

The Raspberry Pi 4 kept the same main board footprint, but some of the ports were re-arranged or changed. This means that cases for the RPi 4 will not be suitable for the B+, B2, B3 or B3+ (and vice versa).

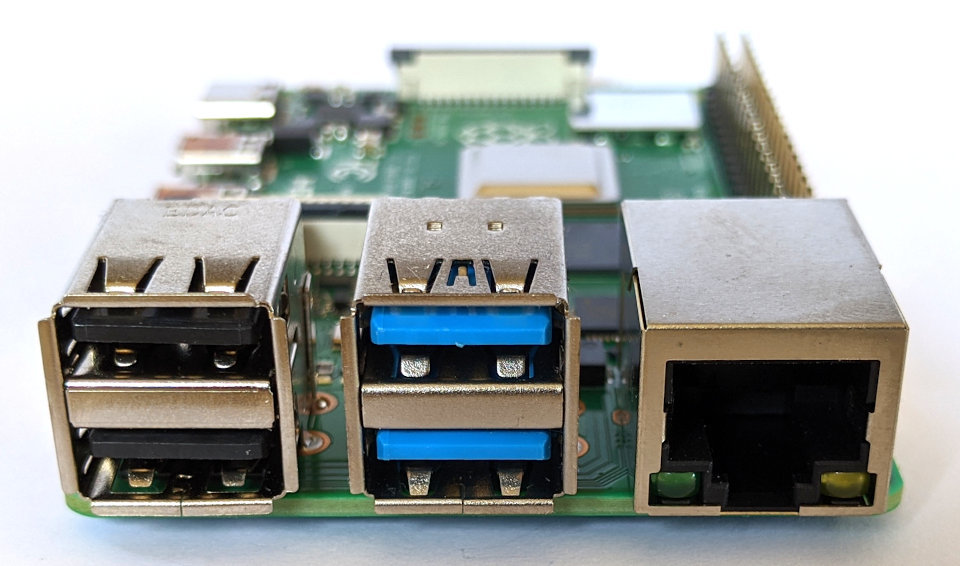

Pi 4 USB ports and Ethernet Ports

The Pi 4 includes 2 x USB 2 ports and 2 x USB 3 ports. The on-board network now supports true Gigabit speed. The positions of the USB and network ports have been swapped compared with those on the B+, B2, B3 and B3+.

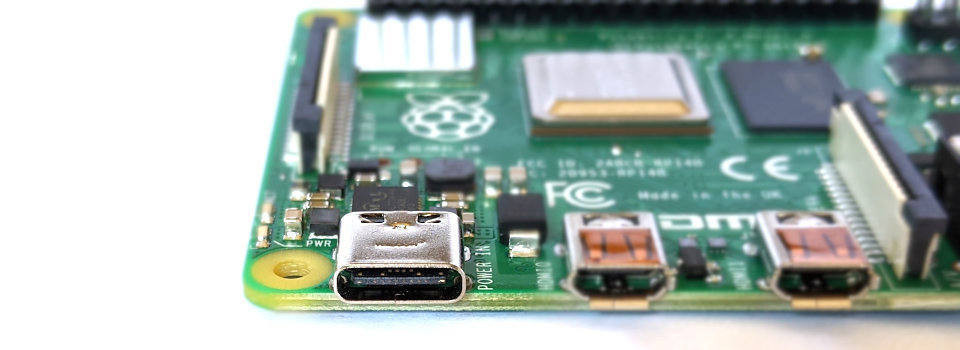

Pi 4 USB C Power Input

Power is now applied to the board via a USB C connector which is in the same location as the Micro USB power input jack on the B+, B2, B3 and B3+.

Pi 4 Dual Video Out

Video output is now provided via an integrated Videocore VI graphics GPU capable of displaying full 4K video via two micro-HDMI video output connectors. HDMI standard rev 2.0 is supported.

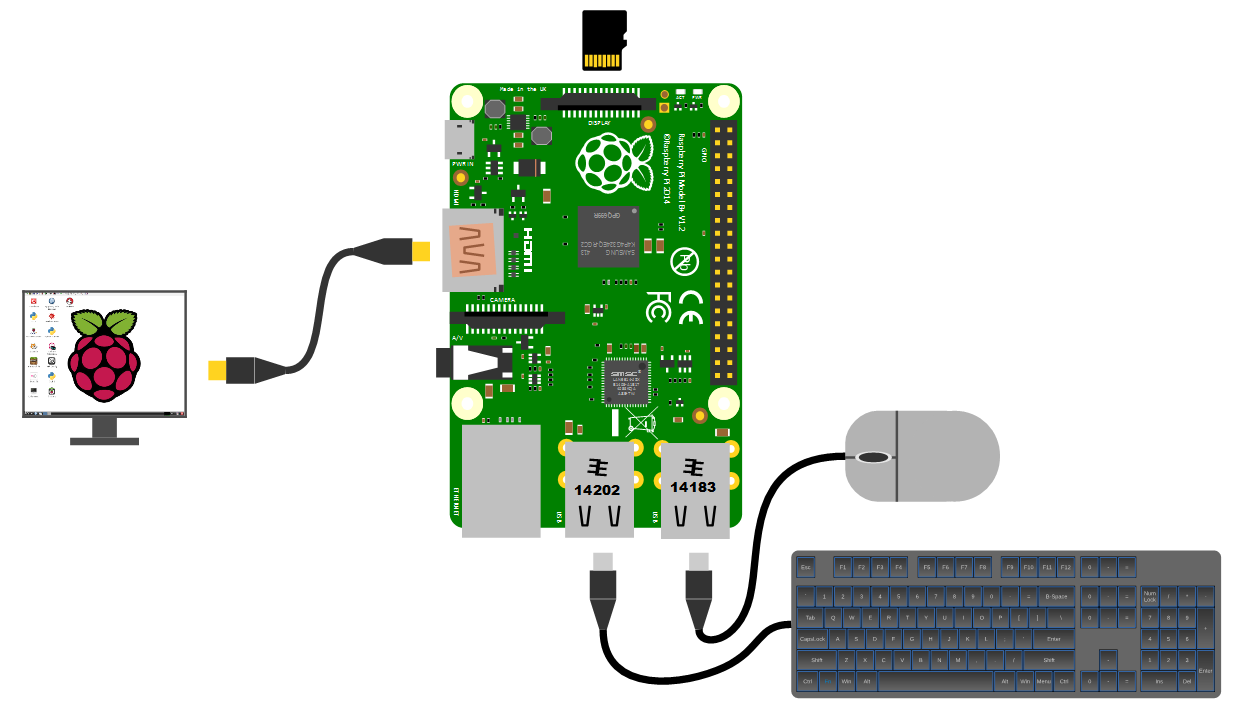

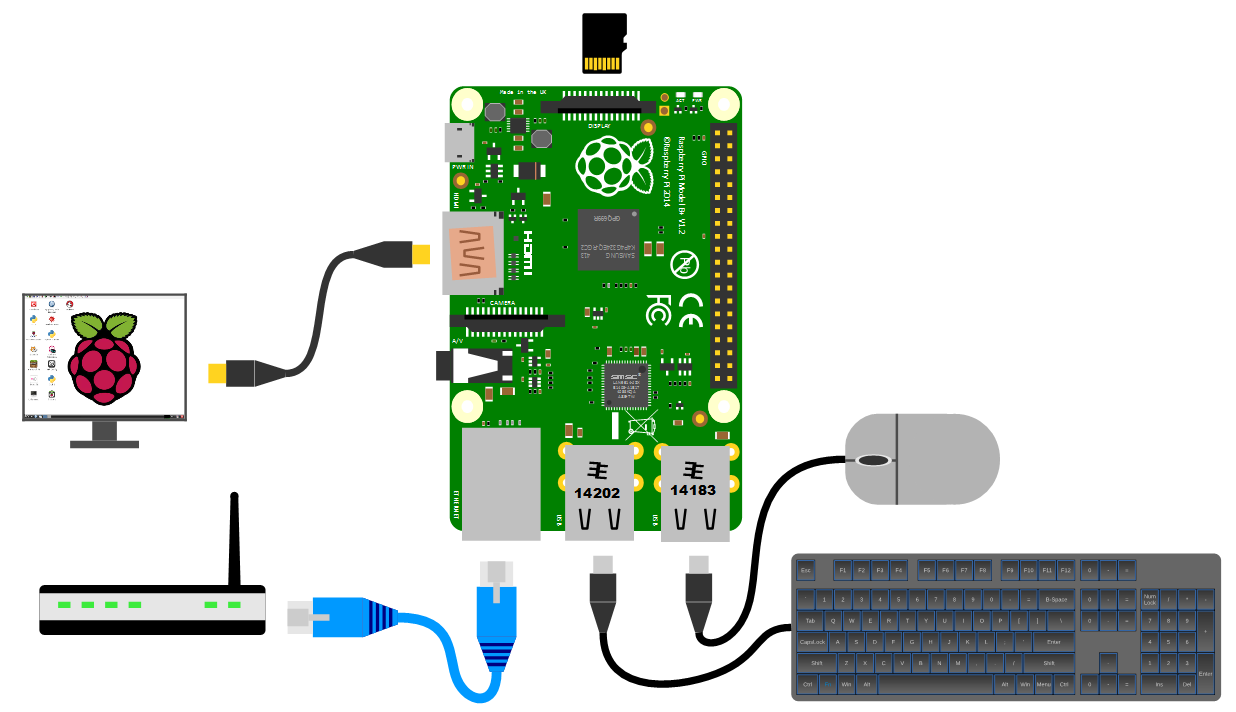

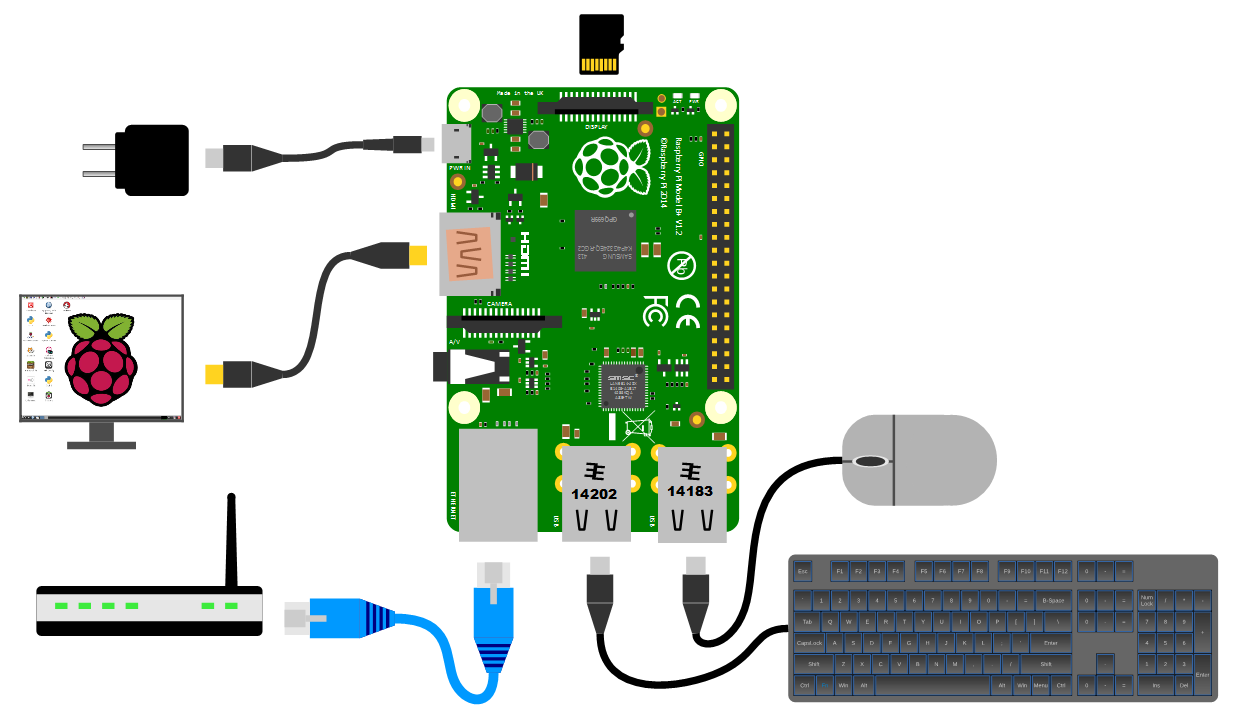

Raspberry Pi Peripherals

To make a start using the Raspberry Pi we will need to have some additional hardware to allow us to configure it.

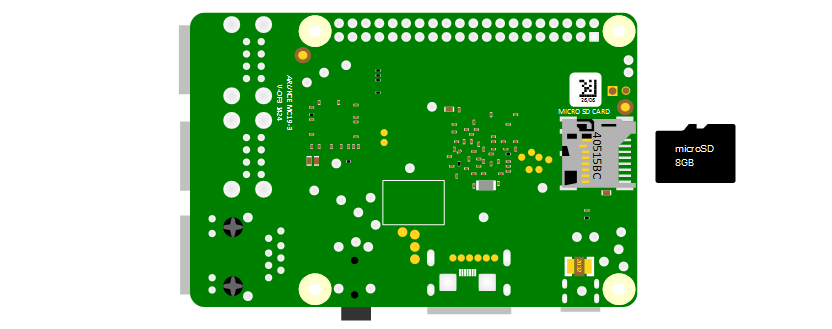

SD Card

Traditionally the Raspberry Pi needs to store the Operating System and working files on a MicroSD card (all models use a MicroSD card except the older A and B models, which use a full-size SD card). There is the ability to boot from a mass storage device or the network, but it is slightly ‘tricky’, so we won’t cover it.

The MicroSD card receptacle is on the rear of the board and on the Model B2 it is a ‘push-push’ type which means that you push the card in to insert it and then to remove it, give it a small push and it will spring out.

This is the equivalent of a hard drive for a regular computer, but we’re going for a minimal effect. We will want to use a minimum of an 8GB card (smaller is possible, but 8 is the realistic minimum). Also try to select a higher speed card if possible (class 10 or similar) as this will speed things up a bit.

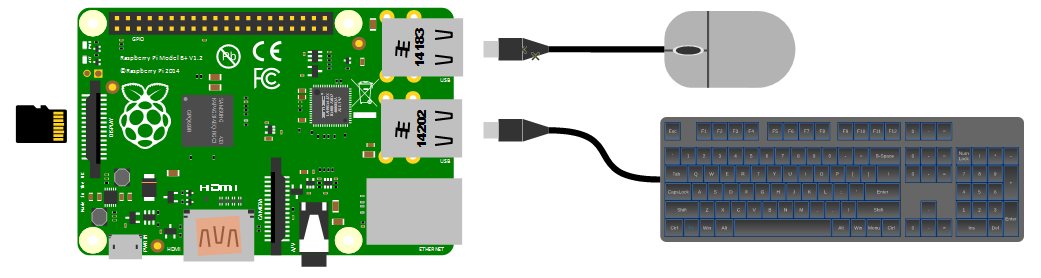

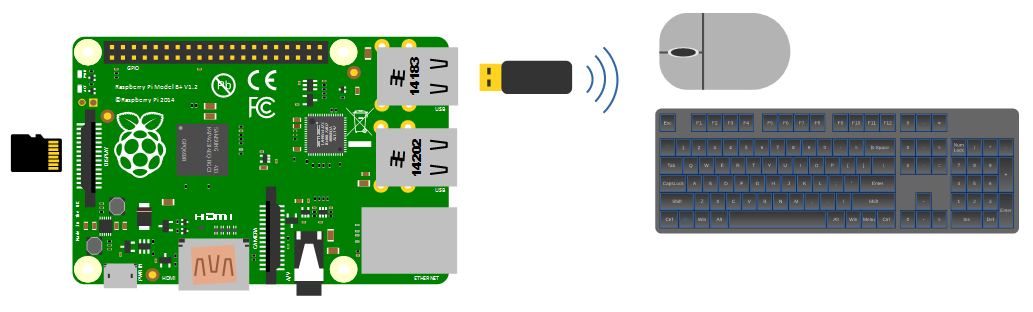

Keyboard / Mouse

While we will be making the effort to access our system via a remote computer, we will need a keyboard and a mouse for the initial set-up. Because the B+, B2, B3, B3+ and 4 models of the Pi have 4 x USB ports, there is plenty of space for us to connect wired USB devices.

An external wireless combination would most likely be recognised without any problem and would only take up a single USB port, but if we build towards a remote capacity for using the Pi (using it headless, without a keyboard / mouse / display), the nicety of a wireless connection is not strictly required.

Video

The Raspberry Pi comes with an HDMI port ready to go which means that any monitor or TV with an HDMI connection should be able to connect easily.

Because this is kind of a hobby thing you might want to consider utilising an older computer monitor with a DVI or 15 pin ‘D’ connector. If you want to go this way you will need an adapter to convert the connection.

Likewise, if you are using a Pi 4 or 5, the standard connectors on the board are micro HDMI and you may therefore require an adaptor.

Network

The B+, B2, B3, B3+, 4 and 5 models of the Raspberry Pi have a standard RJ45 network connector on the board ready to go. In a domestic installation this is most likely easiest to connect into a home ADSL modem or router.

This ‘hard-wired’ connection is great for getting started, but we will work through using a wireless solution later in the book.

Power supply

The Pi can be powered up in a few ways. The simplest is to use the micro USB port to connect from a standard USB charging cable for models B+, B2, B3 and B3+. You probably have a few around the house already for phones or tablets. If you are using a Pi 4 or 5 you will need a USB C power supply or an adaptor to convert between USB A and C.

However, it’s worth thinking about the application that we use our Pi for. Depending on how much we ask of the unit, we might want to pay attention to the amount of current that our power supply can deliver. The A+, B+ and Zero models will function adequately with a 700mA supply, but the B2, B3, B3+ and 4 models draw more current. If we want to use multiple wireless devices or supply sensors that demand increased power, we will need to consider a supply that is capable of an output of up to 2.5A (the official Raspberry Pi 4 supply is rated at 3A). If you are thinking of including some power hungry peripherals with the Raspberry Pi 5 (because you can) you could consider a supply that can feed up to 5A.
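Those current figures lend themselves to a quick back-of-the-envelope sum. The minimal Python sketch below adds up the draw of the board and its peripherals and compares the total with a supply rating. The board figure and the peripheral names and milliamp values are hypothetical placeholders rather than measured numbers, so swap in the figures from your own hardware’s documentation.

```python
from __future__ import annotations

# Hypothetical power budget check. All currents are in milliamps (mA)
# and the example values are placeholders, not measured figures.


def total_draw_ma(board_ma: int, peripherals_ma: dict[str, int]) -> int:
    """Combined current draw of the board plus every attached peripheral."""
    return board_ma + sum(peripherals_ma.values())


def headroom_ma(supply_ma: int, board_ma: int, peripherals_ma: dict[str, int]) -> int:
    """Current left over from the supply; a negative result means it is overloaded."""
    return supply_ma - total_draw_ma(board_ma, peripherals_ma)


if __name__ == "__main__":
    peripherals = {"usb_wifi_dongle": 300, "sensor_board": 150}  # hypothetical devices
    for supply_ma in (700, 2500):
        spare = headroom_ma(supply_ma, 800, peripherals)  # 800 mA board draw is assumed
        verdict = "OK" if spare >= 0 else "not enough"
        print(f"{supply_ma} mA supply: {spare} mA spare ({verdict})")
```

With those made-up numbers the total is 1,250mA, which overloads a 700mA supply by 550mA but leaves a comfortable margin on a 2.5A one.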

Cases

We should get ourselves a simple case to keep the Pi reasonably secure. There are a wide range of options to select from. These range from cheap but effective to more costly than the Pi itself (not hard) and looking fancy. The most important thing to consider here is to make sure you get a case appropriate to the model of Pi that you are using. Be aware that while the B+, B2, B3 and B3+ Pis share the same dimensions as the Model 4, there are differences in the port layout that mean that the cases are not interchangeable.

You could use a simple plastic case that can be bought for a few dollars;

For a very practical design and a warm glow from knowing that you’re supporting a worthy cause, you need go no further than the official Raspberry Pi case that includes removable side-plates and loads of different types of access. All for the paltry sum of about $9.

Operating Systems

An operating system is software that manages computer hardware and software resources for computer applications. For example Microsoft Windows could be the operating system that will allow the browser application Firefox to run on our desktop computer.

Variations on the Linux operating system are the most popular on our Raspberry Pi. Often they are designed to work in different ways depending on the function of the computer.

Linux is a computer operating system that can be distributed as free and open-source software. The defining component of Linux is the Linux kernel which was first released on 5 October 1991 by Linus Torvalds.

Linux was originally developed as a free operating system for Intel x86-based personal computers. It has since been made available to a wide range of computer hardware platforms and is one of the most popular operating systems on servers, mainframe computers and supercomputers. Linux also runs on embedded systems, which are devices whose operating system is typically built into the firmware and is highly tailored to the system; this includes mobile phones, tablet computers, network routers, facility automation controls, televisions and video game consoles. Android, the most widely used operating system for tablets and smart-phones, is built on top of the Linux kernel. In our case we will be using a version of Linux that is assembled to run on the ARM CPU architecture used in the Raspberry Pi.

The development of Linux is one of the most prominent examples of free and open-source software collaboration. Typically, Linux is packaged in a form known as a Linux ‘distribution’, for both desktop and server use. Popular mainstream Linux distributions include Debian, Ubuntu and the commercial Red Hat Enterprise Linux. Linux distributions include the Linux kernel, supporting utilities and libraries and usually a large amount of application software to carry out the distribution’s intended use.

A distribution intended to run as a server may omit all graphical desktop environments from the standard install, and instead include other software to set up and operate a solution ‘stack’ such as LAMP (Linux, Apache, MySQL and PHP). Because Linux is freely re-distributable, anyone may create a distribution for any intended use.

Welcome to Raspberry Pi OS

The Raspberry Pi OS Linux distribution is based on Debian Linux. This is the official operating system for the Raspberry Pi.

Raspberry Pi OS and Raspbian

Up until the end of May 2020 the official operating system was called ‘Raspbian’ and there will be many references to Raspbian in online and print media. With the advent of an evolution to a 64 bit architecture, the maintainers of the Raspbian code (which is 32 bit) didn’t want to have the confusion of the new 64 bit version being called Raspbian when it didn’t actually contain any of their code. So the Raspberry Pi Foundation took the opportunity to opt for a name change to simplify future operating system releases by changing the name of the official Raspberry Pi operating system to ‘Raspberry Pi OS’. The 32 bit version of Raspberry Pi OS will no doubt continue to draw from the Raspbian project, but the 64 bit version will be all new code.

Operating System Evolution

At the time of writing there have been six different operating system releases published based on the Debian Linux distribution. Those six releases are called ‘Wheezy’, ‘Jessie’, ‘Stretch’, ‘Buster’, ‘Bullseye’ and ‘Bookworm’. Debian is a widely used Linux distribution that allows Raspberry Pi OS users to leverage a huge quantity of community based experience in using and configuring software. The Wheezy edition is the earliest and was the stock edition from the inception of the Raspberry Pi till the end of 2015. From that point there were new distribution releases roughly every two years, with the latest, ‘Bookworm’, being released in late 2023. A great deal of effort goes into maintaining the ability for new operating systems to support the older Raspberry Pi boards. This means that you can download and install the most recent 32 bit version and it will still work on a Pi 1. However, older boards which don’t support a 64 bit architecture will not be able to run the newer 64 bit operating systems.
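If you are not sure which flavour you ended up with on a running Pi, a quick check is possible from Python (assuming Python 3 is installed, which it normally is on Raspberry Pi OS). This minimal sketch reports the kernel architecture via `platform.machine()` and the bitness of user-space programs via the size of a pointer. The two can differ, for example when a 64 bit kernel runs a 32 bit operating system image, so treat the output as an indication rather than a definitive test.

```python
import platform
import struct


def describe_machine(machine: str) -> str:
    """Translate a uname-style machine string into a short description."""
    if machine in ("aarch64", "arm64"):
        return "64-bit ARM"
    if machine.startswith("armv"):
        return "32-bit ARM"
    return f"other ({machine or 'unknown'})"


def userland_bits() -> int:
    """Pointer size of this Python interpreter: 32 or 64 bit user space."""
    return struct.calcsize("P") * 8


if __name__ == "__main__":
    # The kernel and the user-space programs can differ, so report both.
    print("Kernel:", describe_machine(platform.machine()))
    print("User space:", userland_bits(), "bit")
```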

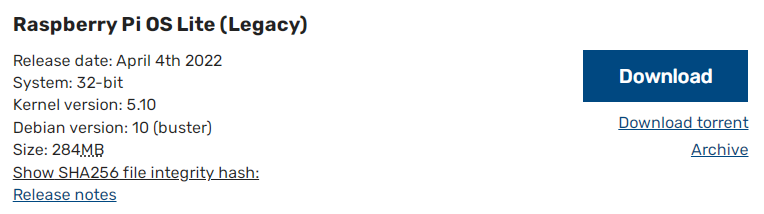

Downloading

The best place to source the latest version of the Raspberry Pi OS is to go to the raspberrypi.com page; https://www.raspberrypi.com/software/operating-systems/. We will download the ‘Lite’ version (which doesn’t use a desktop GUI). If you’ve never used a command line environment, then good news! You’re about to enter the World of ‘real’ computer users :-).

You can download via BitTorrent or directly as a zip file, but whatever the method you should eventually be left with an ‘img’ file for Raspberry Pi OS.

To ensure that the projects we work on can be used with versions of the Pi from the B+ onwards we need to make sure that the version of Raspberry Pi OS we use is from 2015-01-13 or later. Earlier downloads will not support the more modern CPU of later models. To support the newer CPU of the B3+ and later (and all the previous CPUs) we will need a version of Raspberry Pi OS from 2018-03-13 or later.
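Raspberry Pi OS image files are usually named with their release date at the front, so this date check can be automated. The minimal sketch below assumes a `YYYY-MM-DD` prefix on the file name; the example file names are illustrative only, and a file without that prefix is treated as unknown rather than guessed at.

```python
from __future__ import annotations

import re
from datetime import date

# Earliest image date given in the text for B3+ (and earlier) support.
MIN_B3PLUS_DATE = date(2018, 3, 13)

# Assumption: the file name starts with the release date as YYYY-MM-DD.
DATE_PREFIX = re.compile(r"^(\d{4})-(\d{2})-(\d{2})")


def image_date(filename: str) -> date | None:
    """Return the release date encoded at the start of an image file name, if any."""
    match = DATE_PREFIX.match(filename)
    if match is None:
        return None
    year, month, day = (int(part) for part in match.groups())
    return date(year, month, day)


def is_recent_enough(filename: str, minimum: date = MIN_B3PLUS_DATE) -> bool:
    """True when the file name carries a release date on or after `minimum`."""
    released = image_date(filename)
    return released is not None and released >= minimum


if __name__ == "__main__":
    # Illustrative file names only.
    for name in ("2017-11-29-raspbian-stretch-lite.zip",
                 "2023-12-05-raspios-bookworm-arm64-lite.img.xz"):
        print(name, "->", "OK" if is_recent_enough(name) else "too old or unknown")
```

For the B+ onwards threshold, pass `date(2015, 1, 13)` as the `minimum` argument instead.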

We should always try to download our image files from the authoritative source!
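As a belt-and-braces check, you can compare the SHA-256 hash of the downloaded file with the value published alongside it, assuming the download page lists one for the image you chose. The minimal sketch below uses Python’s standard `hashlib` module; the function names are my own, not part of any Raspberry Pi tool.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size: int = 1024 * 1024) -> str:
    """Hex SHA-256 digest of a file, read in 1 MiB chunks to keep memory use low."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, published: str) -> bool:
    """Compare a file's digest with a published hex string, ignoring case and whitespace."""
    return sha256_of(path) == published.strip().lower()
```

Hash the file exactly as downloaded (before unzipping), since that is presumably what the published value covers, for example `matches_published_hash("downloaded-image.zip", "paste-the-published-hash-here")` where both arguments are placeholders.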

Writing the Operating System image to the SD Card

Once we have an image file we need to get it onto our SD card.

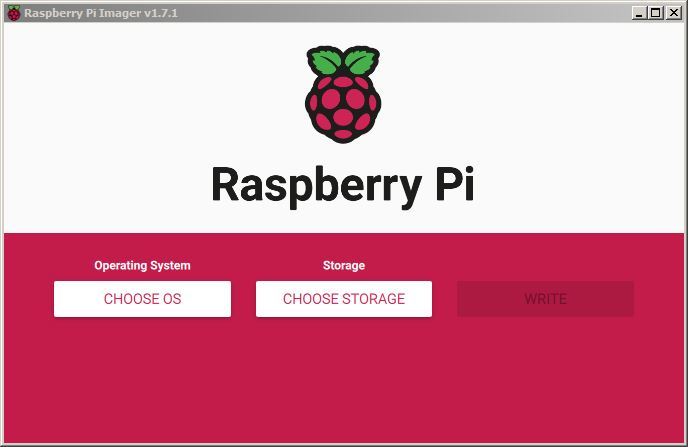

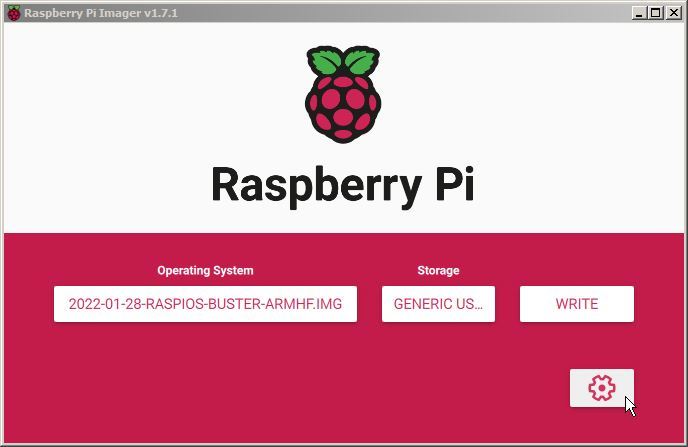

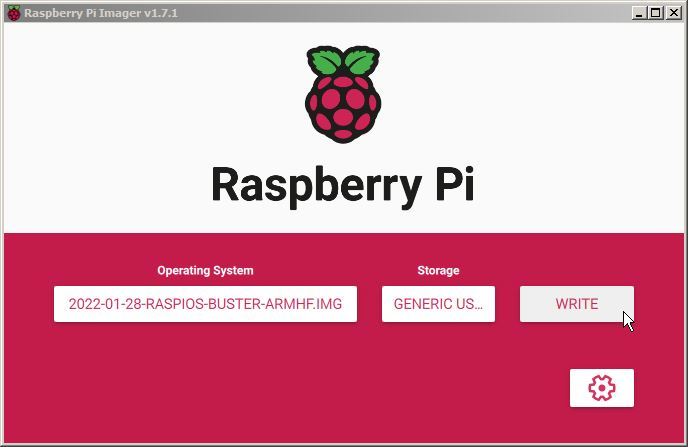

We will work through an example using Windows 7 but the process should be very similar for other operating systems as we will be using the excellent software Raspberry Pi Imager which is available for Windows, Linux and macOS.

Download and install Raspberry Pi Imager and start it up.

Select the ‘CHOOSE OS’ button.

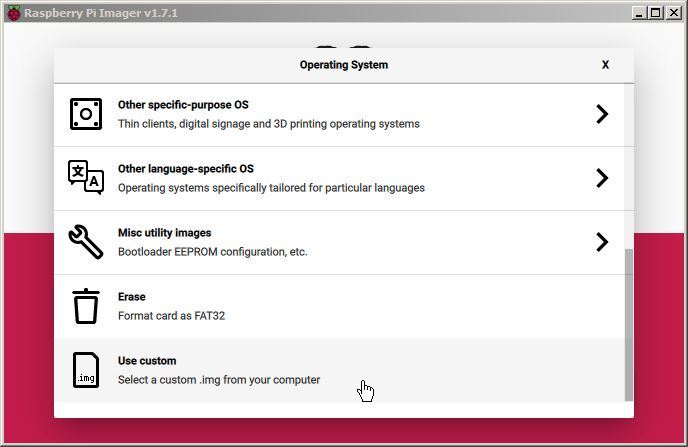

Scroll to the ‘Use custom’ option. This will allow us to have some finer degree of control over which OS we are installing.

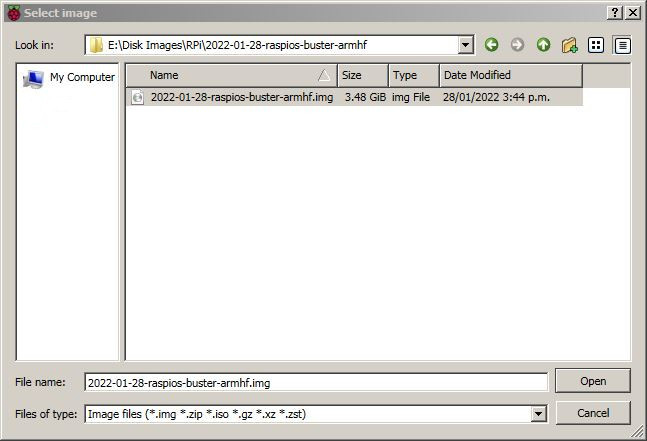

Navigate to the location of the image file that we downloaded earlier. Select that and press the ‘Open’ button.

You will need an SD card reader capable of accepting your MicroSD card (you may require an adapter or have a reader built into your desktop or laptop).

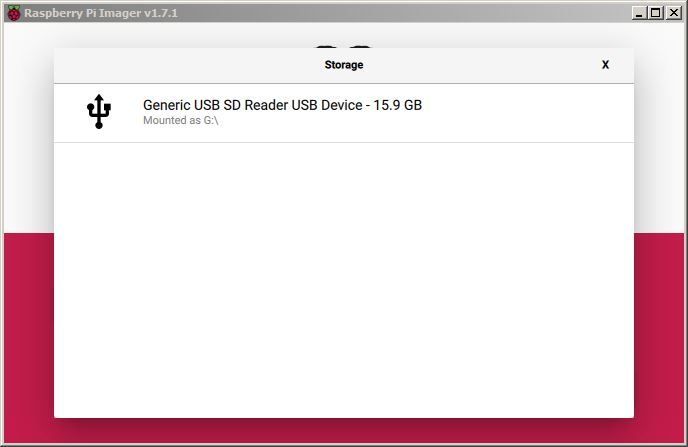

Now select the ‘CHOOSE STORAGE’ button and we will be presented with the storage devices available for writing.

Assuming that your SD card is in the reader, you should see Raspberry Pi Imager offer it for writing (Raspberry Pi Imager is very good at only presenting devices that are suitable for installing to, such as SD cards).

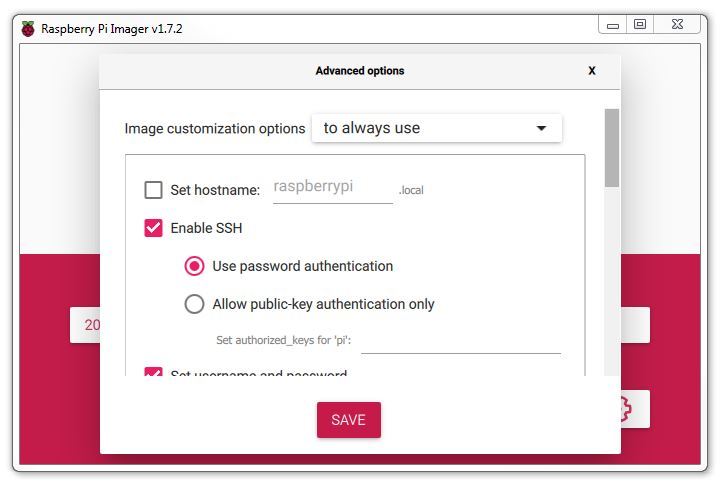

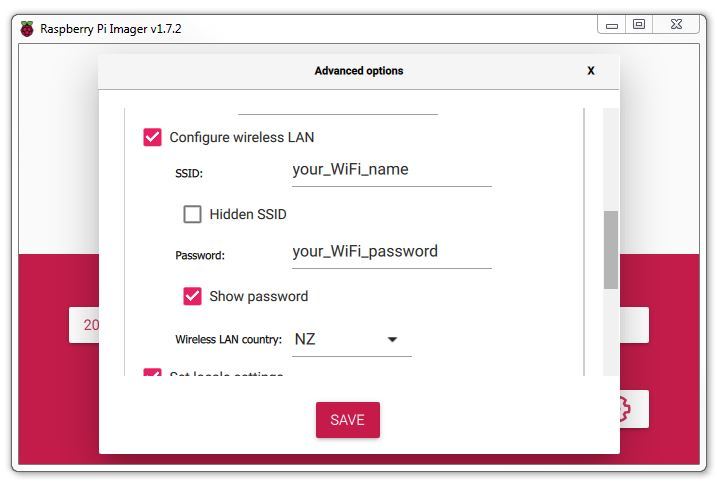

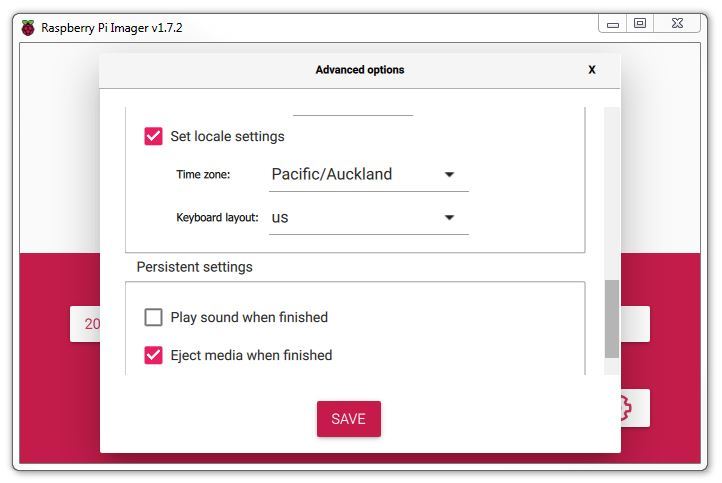

Before we write our SD card we will configure some of its initial settings (this is a super useful step that will save us time and effort later).

To do this click on the gear icon.

Presuming that we will want to use these options again in future, set the image customisation options to ‘to always use’.

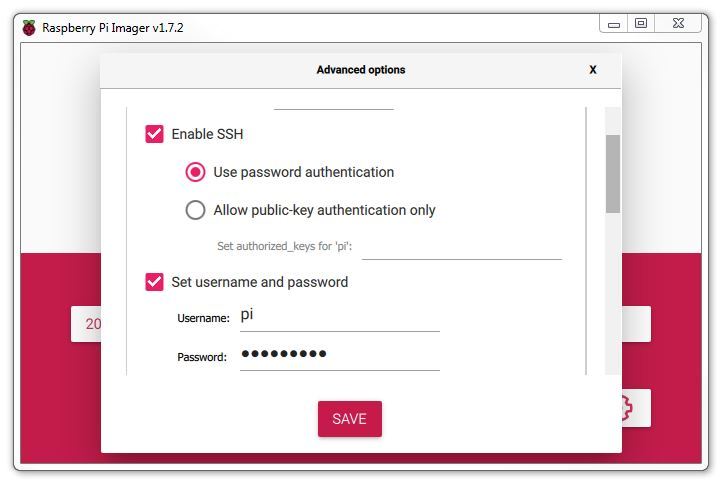

We will enable SSH so that we can remotely access the Pi, and set a username and password. Here I am setting the username to ‘pi’ with the password ‘raspberry’ (the traditional defaults), but you should definitely use your own username and password.

One of the awesome things when learning to use a Raspberry Pi comes when you begin to access it remotely from another computer. This is a bit of an ‘Ah Ha!’ moment for some people as they begin to appreciate just how networks and the Internet are built. We are going to enable and use remote access via what is called ‘SSH’ (this is shorthand for Secure SHell). We’ll start using it later in the book, but for now we can take the opportunity to enable it for later use.
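If you want a hand coming up with something better than ‘raspberry’, Python’s standard `secrets` module (intended for security-sensitive random values) can generate a password. This is a minimal sketch; the 16 character length and the letters-and-digits alphabet are arbitrary choices for this example, picked so the password types the same regardless of keyboard layout.

```python
import secrets
import string

# Letters and digits only, so the password is easy to type on any keyboard layout.
ALPHABET = string.ascii_letters + string.digits


def make_password(length: int = 16) -> str:
    """Return a random password drawn from ALPHABET using a cryptographically secure source."""
    if length < 1:
        raise ValueError("length must be at least 1")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(make_password())
```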

SSH used to be enabled by default, but doing so presents a potential security concern, so it has been disabled by default as of the end of 2016. In our case it’s a feature that we want to use.

If your Pi has WiFi, you can choose to configure the wireless LAN and enter its password. Likewise you will want to select the Wireless LAN Country for the country that you are in.

Lastly, we should set the locale settings for our location and select the keyboard layout that matches our keyboard.

Once we are happy with our settings, click on ‘SAVE’.

With everything ready, click on the ‘WRITE’ button.

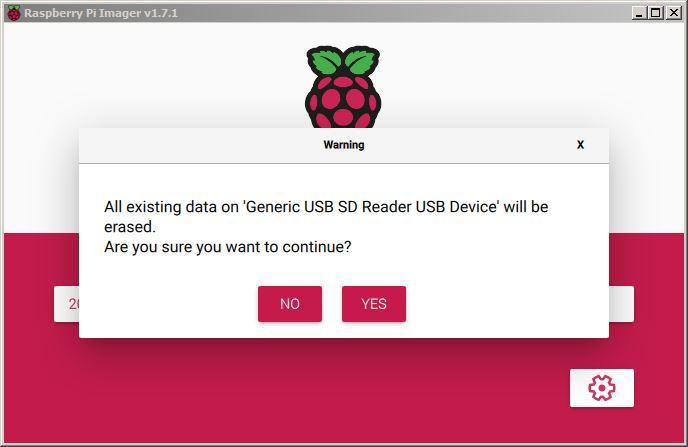

A friendly warning will let us know that if we proceed, the SD card will be erased. Press ‘YES’ if you are sure that you want to continue.

The writing process will start and progress. The time taken can vary a little, but it should only take about 3-4 minutes with a class 10 SD card.
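That estimate is easy to sanity-check. An SD card with a class 10 speed rating promises a minimum sustained write speed of 10 MB/s, so the write time is roughly the image size divided by that speed. The 2,000 MB size below is an assumed round figure rather than the size of any particular image, and any verification pass after the write will add time.

```python
def write_minutes(image_mb: float, speed_mb_per_s: float = 10.0) -> float:
    """Estimated minutes to write `image_mb` megabytes at a sustained speed (class 10: 10 MB/s minimum)."""
    if speed_mb_per_s <= 0:
        raise ValueError("speed_mb_per_s must be positive")
    return image_mb / speed_mb_per_s / 60


if __name__ == "__main__":
    # Assumed 2,000 MB image: 2000 / 10 = 200 seconds, or about 3.3 minutes.
    print(f"About {write_minutes(2000):.1f} minutes")  # prints: About 3.3 minutes
```

That lands comfortably within the 3-4 minutes mentioned, before any verification step.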

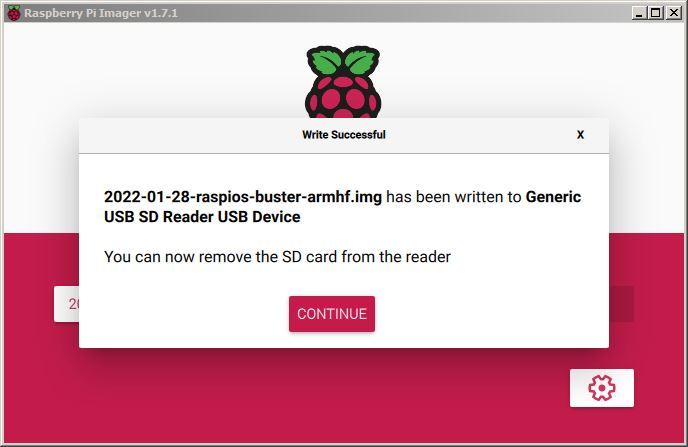

Once done, we should be told that the process has completed successfully and that we can remove our SD card.

Powering On

Insert the card into the slot on the Raspberry Pi and turn on the power.

You will see a range of information scrolling up the screen before eventually being presented with a login prompt.

The Command Line interface

Because we have installed the ‘Lite’ version of Raspberry Pi OS, we will be working from the command line rather than a desktop. When we first boot up, the process should automatically re-size the root file system to make full use of the space available on your SD card. If this isn’t the case, the facility to do it can be accessed from the Raspberry Pi configuration tool (raspi-config) that we will look at in a moment.
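Once you are logged in, one way to confirm that the resize happened is to look at the size of the root file system. This minimal sketch uses Python’s `shutil.disk_usage`; if the total is far smaller than your card’s capacity, the automatic resize probably did not run. Expect the figure to be a little under the nominal card size, since the boot partition and file system overhead take a share.

```python
from __future__ import annotations

import shutil


def fs_size_gb(path: str = "/") -> tuple[float, float]:
    """Return (total, used) for the file system holding `path`, in GB of 10**9 bytes."""
    usage = shutil.disk_usage(path)
    return usage.total / 1e9, usage.used / 1e9


if __name__ == "__main__":
    total, used = fs_size_gb("/")
    print(f"Root file system: {total:.1f} GB total, {used:.1f} GB used")
```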

Once the reboot is complete (if it occurs) you will be presented with the console prompt to log on;

The example username and password (that we set earlier, but yours may be different) are:

Username: pi

Password: raspberry

Enter the username and password.

Congratulations, you have a working Raspberry Pi and are ready to start getting into the thick of things!

If you didn’t take the opportunity to set some of the advanced options with the Raspberry Pi Imager earlier, you might want to do some housekeeping as described below.

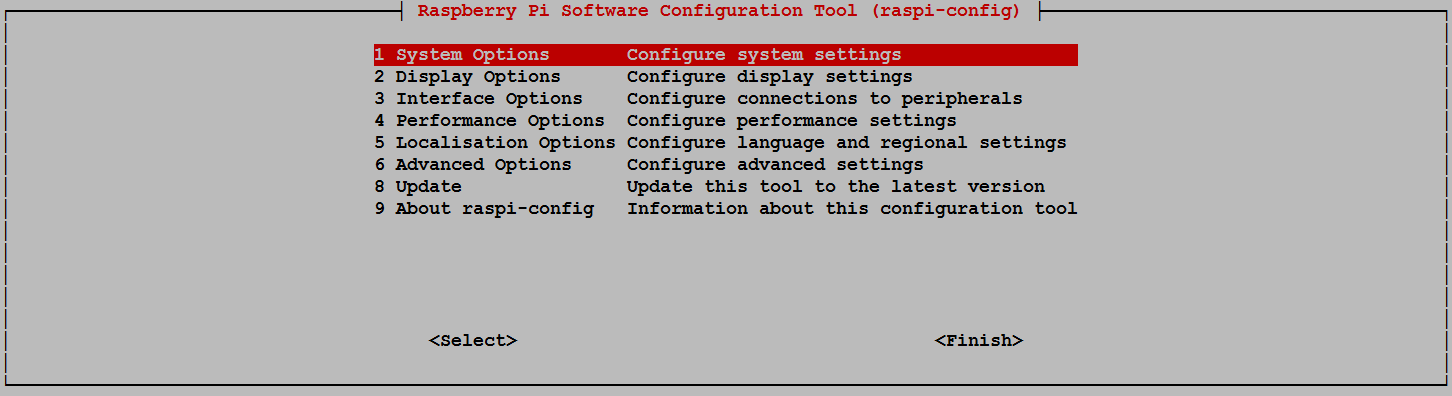

Raspberry Pi Software Configuration Tool

The steps in this section will only be required if you did not set them with the Raspberry Pi Imager.

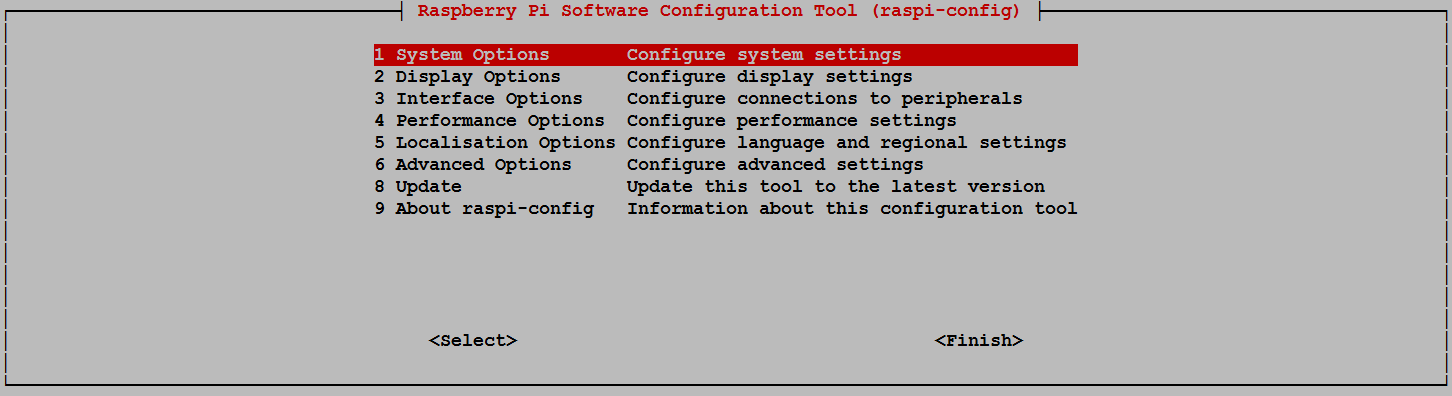

We will use the Raspberry Pi Software Configuration Tool to change the locale and keyboard configuration to suit us. This can be done by running the following command;
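Assuming a standard Raspberry Pi OS install, the configuration tool is started with raspi-config, which needs root privileges:

```shell
# Start the Raspberry Pi Software Configuration Tool (needs sudo)
sudo raspi-config
```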

Use the up and down arrow keys to move the highlighted section to the selection you want to make, then press Tab to highlight the <Select> option (or <Finish> if you’ve finished).

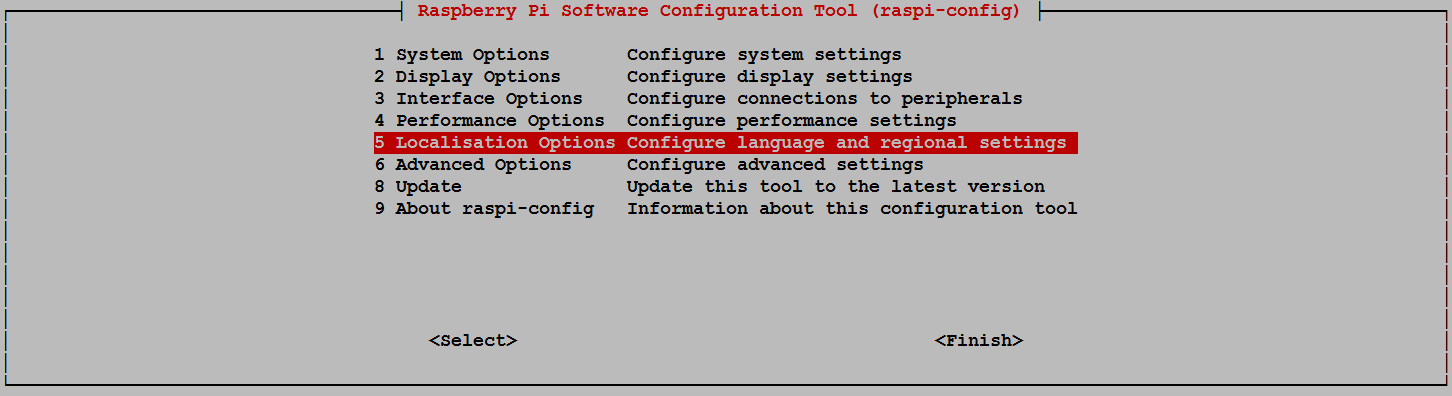

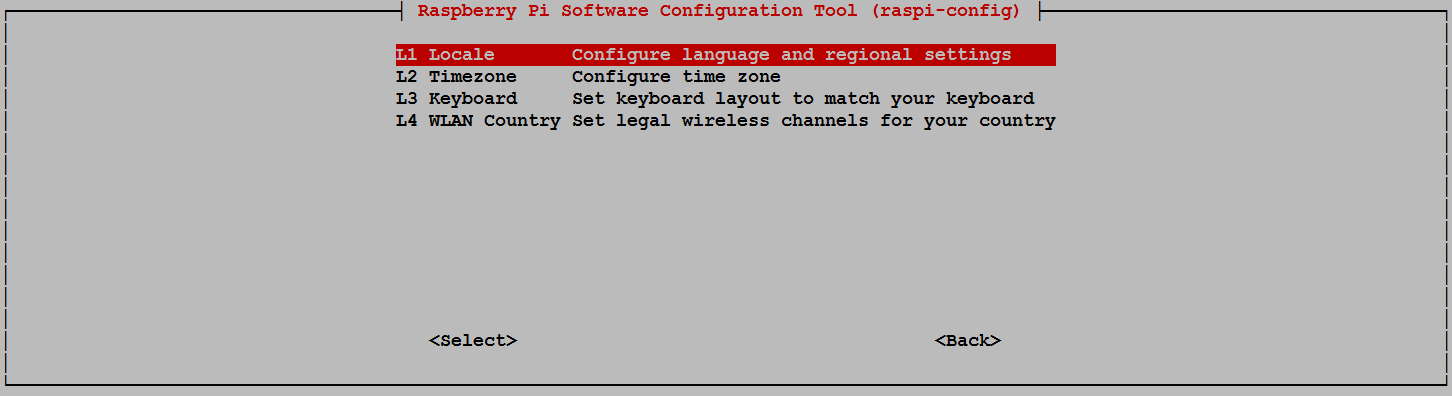

Let’s change the settings for our operating system to reflect our location for the purposes of having the correct time, language and WiFi regulations. These can all be found under ‘5 Localisation Options’ on the main menu.

Select this and work through any changes that are required for your installation based on geography.

Once you exit the raspi-config menu system, if you have made a few changes, you may be asked if you want to reboot the Pi. That’s just fine. Even if you aren’t asked, a reboot can be useful, since some locales can introduce different characters on the screen.

Once the reboot is complete you will be presented with the console prompt to log on again;

Software Updates

After configuring our Pi we’ll want to make sure that we have the latest software for our system. This is worth doing, as it picks up any improvements to the software we will be using as well as fixes that improve the security of the operating system. This is probably a good time to mention that we will need an Internet connection available.

Type in the following line which will find the latest lists of available software;
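On Raspberry Pi OS (a Debian derivative) this is most likely the standard apt command:

```shell
# Refresh the lists of available packages from the configured repositories
sudo apt update
```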

You should see a list of text scroll up while the Pi is downloading the latest information.

If sudo apt update warns that a key is stored in the legacy trusted.gpg keyring, use sudo apt-key list and find the entry that is in /etc/apt/trusted.gpg.

Then we convert this entry to a .gpg file, using the last 8 characters of the key fingerprint from above (90FDDD2E in this example). The characters that you have will most likely be different!

sudo apt-key export 90FDDD2E | sudo gpg --dearmour -o /etc/apt/trusted.gpg.d/raspbian.gpg

If sudo apt update generates more than one warning message, we can repeat the above commands for each one.

Then we want to upgrade our software to the latest versions from those lists using;
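Again assuming the standard apt tooling, the upgrade step would be:

```shell
# Download and install newer versions of the installed packages (asks Y/n first)
sudo apt upgrade
```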

The Pi should tell you the lists of packages that it has identified as suitable for an upgrade along with the amount of data that will be downloaded and the space that will be used on the system. It will then ask you to confirm that you want to go ahead. Tell it ‘Y’ and we will see another list of details as it heads off downloading software and installing it.

Power Up the Pi

To configure the Raspberry Pi for our purpose we will extend our Pi a little. This makes configuring and using the device easier and to be perfectly honest, making life hard for ourselves is so exhausting! Let’s not do that.

Static IP Address

As we mentioned earlier, enabling remote access is a really useful thing. This will allow us to configure and operate our Raspberry Pi from a separate computer. To do so we will want to assign our Raspberry Pi a static IP address.

The method by which a static address is assigned changed with the introduction of the operating system ‘bookworm’ (around the end of 2023). The older method used dhcpcd and the newer method uses nmcli. For the sake of completeness I will include both methods here.

An Internet Protocol address (IP address) is a numerical label assigned to each device (e.g., computer, printer) participating in a computer network that uses the Internet Protocol for communication.

There is a strong likelihood that our Raspberry Pi already has an IP address and it should appear a few lines above the ‘login’ prompt when you first boot up;

The My IP address... part should appear just above or around 15 lines above the login line, depending on the version of the Raspberry Pi OS we’re using. In this example the IP address 10.1.1.25 belongs to the Raspberry Pi.

This address will probably be a ‘dynamic’ IP address and could change each time the Pi is booted. For the purposes of using the Raspberry Pi with a degree of certainty when logging in to it remotely it’s easier to set a fixed IP address.

This description of setting up a static IP address makes the assumption that we have a device running on our network that is assigning IP addresses as required. This sounds complicated, but in fact it is a very common service to be running on even a small home network and most likely on an ADSL modem/router or similar. This function is run as a service called DHCP (Dynamic Host Configuration Protocol). You will need to have access to this device for the purposes of knowing what the allowable ranges are for a static IP address.

The Netmask

A common feature of home modems and routers that run DHCP servers is to allow the user to set up the range of allowable network addresses that can exist on the network. At a higher level we should be able to set a ‘netmask’ which will do the job for us. A netmask looks similar to an IP address, but it allows you to specify the range of addresses for ‘hosts’ (in our case computers) that can be connected to the network.

A very common netmask is 255.255.255.0, which means that the network in question can have any one of the combinations where the final number in the IP address varies. In other words, with a netmask of 255.255.255.0, the IP addresses available on the network ‘10.1.1.x’ range from 10.1.1.0 to 10.1.1.255, 256 unique addresses in all. (In practice the first of these, 10.1.1.0, identifies the network itself and the last, 10.1.1.255, is the broadcast address, so 254 are left for devices.)
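The 256 figure comes from counting the 0 bits in the mask: 255.255.255.0 leaves 8 host bits, and 2 to the power of 8 is 256. A small bash sketch of that arithmetic (mask_to_count is a hypothetical helper name, not a standard command) looks like this:

```shell
#!/usr/bin/env bash
# Count the addresses a dotted netmask allows.
# Every 1 bit in the mask is a network bit and every 0 bit is a host bit,
# so the count is 2 raised to the number of host bits.
mask_to_count() {
  local mask="$1" octet n set_bits host_bits=0
  local IFS=.
  for octet in $mask; do            # IFS=. splits the mask into its four octets
    set_bits=0
    n=$octet
    while (( n > 0 )); do           # count the 1 bits in this octet
      set_bits=$(( set_bits + (n & 1) ))
      n=$(( n >> 1 ))
    done
    host_bits=$(( host_bits + 8 - set_bits ))
  done
  echo "$(( 1 << host_bits ))"
}

mask_to_count 255.255.255.0   # prints 256
```

The same function gives 65536 for 255.255.0.0, which shows how quickly a shorter mask grows the network.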

CIDR Notation

An alternative to specifying a netmask in the format of ‘255.255.255.0’ is to use a system called Classless Inter-Domain Routing, or CIDR. The idea is to add a specification in the IP address itself that indicates the number of significant bits that make up the netmask.

For example, we could designate the IP address 10.1.1.17 as associated with the netmask 255.255.255.0 by using the CIDR notation of 10.1.1.17/24. This means that the first 24 bits of the IP address given are considered significant for the network routing.

Using CIDR notation allows us to do some very clever things to organise our network, but at the same time it can have the effect of confusing people by introducing a pretty complex topic when all they want to do is get their network going :-). So for the sake of this explanation we can assume that if we wanted to specify an IP address and a netmask, it could be accomplished by either specifying each separately (IP address = 10.1.1.17 and netmask = 255.255.255.0) or in CIDR format (10.1.1.17/24).
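To see how the two forms relate, here is a small bash sketch that turns the number after the ‘/’ into a dotted netmask (cidr_to_netmask is an illustrative name, not a standard command). It builds a 32-bit number whose first prefix bits are 1 and prints it as four octets:

```shell
#!/usr/bin/env bash
# Convert a CIDR prefix length (the number after the '/') to a dotted netmask.
cidr_to_netmask() {
  local prefix="$1" mask pos
  local -a octets=()
  # Build a 32-bit value whose top 'prefix' bits are 1 (bash uses 64-bit
  # arithmetic, so mask off anything above bit 31).
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  for pos in 24 16 8 0; do          # pull out each octet, most significant first
    octets+=( "$(( (mask >> pos) & 0xFF ))" )
  done
  local IFS=.
  echo "${octets[*]}"               # "${arr[*]}" joins with the first IFS char
}

cidr="10.1.1.17/24"
# ${cidr%/*} keeps the address part, ${cidr#*/} keeps the prefix length
echo "address: ${cidr%/*}  netmask: $(cidr_to_netmask "${cidr#*/}")"
```

Run as-is, the last line prints `address: 10.1.1.17  netmask: 255.255.255.0`, matching the two equivalent forms described above.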

Distinguish Dynamic from Static

The other service that our DHCP server will allow is the setting of a range of addresses that can be assigned dynamically. In other words we will be able to declare that the range from 10.1.1.20 to 10.1.1.255 can be dynamically assigned which leaves 10.1.1.0 to 10.1.1.19 which can be set as static addresses.
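Checking whether a candidate static address falls inside the dynamic pool is a simple comparison once each address is turned into a single 32-bit number. A bash sketch using the example range above (ip_to_int and in_range are hypothetical helper names):

```shell
#!/usr/bin/env bash
# Turn a dotted IPv4 address into a single number so addresses can be compared.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# in_range ADDR START END: succeeds (exit status 0) if START <= ADDR <= END.
in_range() {
  local ip start end
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "$2")
  end=$(ip_to_int "$3")
  (( ip >= start && ip <= end ))
}

# Example: the router hands out 10.1.1.20 to 10.1.1.255 dynamically.
if in_range 10.1.1.17 10.1.1.20 10.1.1.255; then
  echo "10.1.1.17 is in the dynamic range; choose another static address"
else
  echo "10.1.1.17 is outside the dynamic range and can be used as a static address"
fi
```

Whatever address you settle on can be checked against your own router’s dynamic range in the same way.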

You might also be able to reserve an IP address on your modem / router. To do this you will need to know the MAC (or hardware) address of the Raspberry Pi. To find the hardware address on the Raspberry Pi type;
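The command in question is most likely ifconfig. A sketch, assuming the wired interface is called eth0 (the MAC address is the value after ‘ether’):

```shell
# Show the settings for the wired interface; the MAC address follows 'ether'
ifconfig eth0
# On systems without ifconfig, this reports the same hardware address
ip link show eth0
```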

(For more information on the ifconfig command check out the Linux commands section)

This will produce an output which will look a little like the following;

The figures b8:27:eb:b6:2e:da are the Hardware or MAC address.

Because there is a huge range of different DHCP servers being run on different home networks, I will have to leave you with those descriptions and the advice to consult your device’s manual to help you find an IP address that can be assigned as a static address. Make sure that the assigned number has not already been taken by another device. In a perfect world we would hold a list of any devices which have static addresses so that our Pi’s address does not clash with any other device.

For the sake of the upcoming project we will assume that the address 10.1.1.110 is available.

Default Gateway

Before we start configuring we will need to find out what the default gateway is for our network. A default gateway is the IP address of a device (typically a router) that a computer will send traffic to when it is asked to reach an address that isn’t on the local network. This most commonly occurs when a computer on a home network wants to contact a computer on the Internet. The default gateway is therefore typically the address of the modem / router on your home network.

We can check to find out what our default gateway is from Windows by going to the command prompt (Start > Accessories > Command Prompt) and typing;
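On Windows the command is most likely ipconfig; the gateway appears on the ‘Default Gateway’ line of its output:

```
ipconfig
```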

This should present a range of information including a section that looks a little like the following;

The default gateway is therefore ‘10.1.1.1’.

For OS versions prior to bookworm

Let’s edit the dhcpcd.conf file

On the Raspberry Pi at the command line we are going to start up a text editor and edit the file that holds the configuration details for the network connections.

The file is /etc/dhcpcd.conf. That is to say it’s the dhcpcd.conf file which is in the etc directory which is in the root (/) directory.

To edit this file we are going to type in the following command;
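Because /etc/dhcpcd.conf belongs to root, the editor needs to be run with sudo; the command is most likely:

```shell
# Open the dhcpcd configuration file in the nano editor with root privileges
sudo nano /etc/dhcpcd.conf
```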

The nano file editor will start and show the contents of the dhcpcd.conf file which should look a little like the following;

for dhcpcd.

# See dhcpcd.conf(5) for details.

# Allow users of this group to interact with dhcpcd via the control socket.

#controlgroup wheel

# Inform the DHCP server of our hostname for DDNS.

hostname

# Use the hardware address of the interface for the Client ID.

clientid

# or

# Use the same DUID + IAID as set in DHCPv6 for DHCPv4 ClientID per RFC4361.

#duid

# Persist interface configuration when dhcpcd exits.

persistent

# Rapid commit support.

# Safe to enable by default because it requires the equivalent option set

# on the server to actually work.

option rapid_commit

# A list of options to request from the DHCP server.

option domain_name_servers, domain_name, domain_search, host_name

option classless_static_routes

# Most distributions have NTP support.

option ntp_servers

# Respect the network MTU. This is applied to DHCP routes.

option interface_mtu

# A ServerID is required by RFC2131.

require dhcp_server_identifier

# Generate Stable Private IPv6 Addresses instead of hardware based ones

slaac private

# Example static IP configuration:

#interface eth0

#static ip_address=192.168.0.10/24

#static ip6_address=fd51:42f8:caae:d92e::ff/64

#static routers=192.168.0.1

#static domain_name_servers=192.168.0.1 8.8.8.8 fd51:42f8:caae:d92e::1

# It is possible to fall back to a static IP if DHCP fails:

# define static profile

#profile static_eth0

#static ip_address=192.168.1.23/24

#static routers=192.168.1.1

#static domain_name_servers=192.168.1.1

# fallback to static profile on eth0

#interface eth0

#fallback static_eth0

The file actually contains some commented out sections that provide guidance on entering the correct configuration.

We are going to add the information that tells the eth0 network interface to use the static address that we decided on earlier (10.1.1.110), along with the netmask to use (in CIDR format) and the default gateway of our router. To do this we will add the following lines to the end of the dhcpcd.conf file;
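Based on the values described in the next paragraph, the lines to add would look something like this (10.1.1.110 and 10.1.1.1 are this book’s example addresses; substitute your own):

```
# Static IP configuration for the wired interface (example addresses)
interface eth0
static ip_address=10.1.1.110/24
static routers=10.1.1.1
static domain_name_servers=10.1.1.1
```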

Here we can see the IP address and netmask (static ip_address=10.1.1.110/24), the gateway address for our router (static routers=10.1.1.1) and the address where the computer can also find DNS information (static domain_name_servers=10.1.1.1).

Once you have finished press ctrl-x to tell nano you’re finished and it will prompt you to confirm saving the file. Check your changes over and then press ‘y’ to save the file (if it’s correct). It will then prompt you for the file-name to save the file as. Press return to accept the default of the current name and you’re done!

To allow the changes to become operative we can type in;
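The usual way to do this is to reboot the Pi:

```shell
# Restart the Raspberry Pi so the new network settings are applied
sudo reboot
```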

This will reboot the Raspberry Pi and we should see the (by now familiar) scroll of text and when it finishes rebooting you should see;

Which tells us that the changes have been successful (bearing in mind that the IP address above should be the one you have chosen, not necessarily the one we have been using as an example).

For OS versions from bookworm onward

From late 2023 on (assuming that you’re using the latest available operating system), the default command line tool for setting a static IP address changed to nmcli (nmcli is the command line interface tool for the NetworkManager package).

Using this tool means that there is no editing of configuration files required.

To find the name of the connection that we are going to change, we run the nmcli con show command (this is basically shorthand for saying “Show the connections nmcli!”).

This will produce something like the following;

From our checking we know that;

- Wired connection 1 is the name of the connection that we will be setting to a static IP address

- 10.1.1.110/24 is the static IP address and the netmask that we want (in CIDR notation)

- 10.1.1.1 is our gateway

- 10.1.1.1 is also the address where the computer can go to find DNS information.

Now we can run the commands that will set the static IP address with all our desired parameters as follows;
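A sketch of those commands, assuming the connection name and addresses in the list above (substitute the values for your own network):

```shell
# Set the static address (CIDR notation) and switch IPv4 from DHCP to manual
sudo nmcli con mod "Wired connection 1" ipv4.addresses 10.1.1.110/24 ipv4.method manual
# Set the default gateway (our router)
sudo nmcli con mod "Wired connection 1" ipv4.gateway 10.1.1.1
# Set the DNS server
sudo nmcli con mod "Wired connection 1" ipv4.dns 10.1.1.1
```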

We could have actually strung all those commands together as a single command, but for the sake of formatting in the book, the above avoids confusion with line breaks.

To save the changes and reload the network manager we run the following command;

All that remains is to reboot the Pi for the changes to take effect;
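Assuming the standard command, that is:

```shell
# Reboot so the Pi comes back up on its new static address
sudo reboot
```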

This will reboot the Raspberry Pi and we should see the (by now familiar) scroll of text and when it finishes rebooting you should see;

Which tells us that the changes have been successful (bearing in mind that the IP address above should be the one you have chosen, not necessarily the one we have been using as an example).

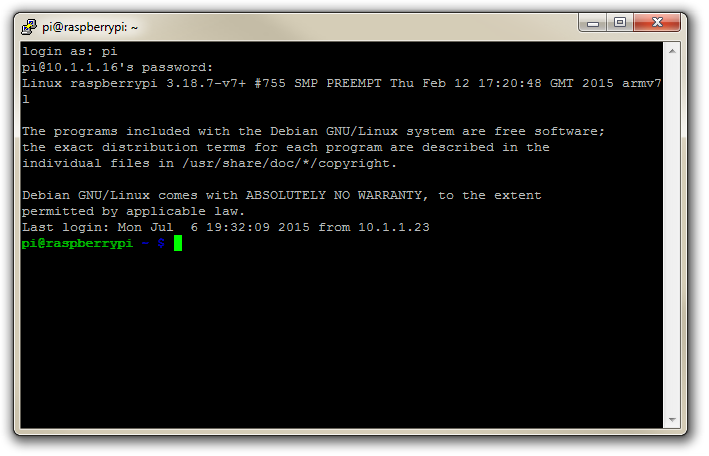

Remote access

To allow us to work on our Raspberry Pi from our normal desktop we can give ourselves the ability to connect to the Pi from another computer. This will mean that we don’t need to have the keyboard / mouse or video connected to the Raspberry Pi and we can physically place it somewhere else and still work on it without problem. This process is called ‘remotely accessing’ our computer.

To do this we need to install an application on our Windows desktop which will act as a ‘client’ in the process, and have software on our Raspberry Pi to act as the ‘server’. There are a couple of different ways that we can accomplish this task, but because we will be working at the command line (where all we do is type in our commands, like when we first log into the Pi) we will use what’s called SSH access in a ‘shell’.

Remote access via SSH

Secure SHell (SSH) is a network protocol that allows secure data communication, remote command-line login, remote command execution, and other secure network services between two networked computers. It connects, via a secure channel over an insecure network, a server and a client running SSH server and SSH client programs, respectively (there’s the client-server model again).

In our case the SSH server program running on the Raspberry Pi is sshd, and on the Windows machine we will use a client program called ‘PuTTY’.

Setting up the Server (Raspberry Pi)

SSH should already be installed and running (assuming that we enabled it with the Raspberry Pi Imager), but to check that it is there and working type the following from the command line;
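Assuming the systemd service name shown in the output below (ssh.service), the status check would be:

```shell
# Ask systemd whether the SSH server (sshd) is active
systemctl status ssh
```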

The Pi should respond with the message that the program sshd is active (running).

(/lib/systemd/system/ssh.service; enabled)

Active: active (running) since Tue 2017-04-25 03:30:16 UTC; 1h 28min ago

Main PID: 2135 (sshd)

CGroup: /system.slice/ssh.service

└─2135 /usr/sbin/sshd -D

If it isn’t, run the following command;
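This is most likely the same configuration tool that we used earlier:

```shell
# Open the configuration tool and enable SSH from its menus
sudo raspi-config
# Alternatively, without the menus: enable sshd at boot and start it now
sudo systemctl enable --now ssh
```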

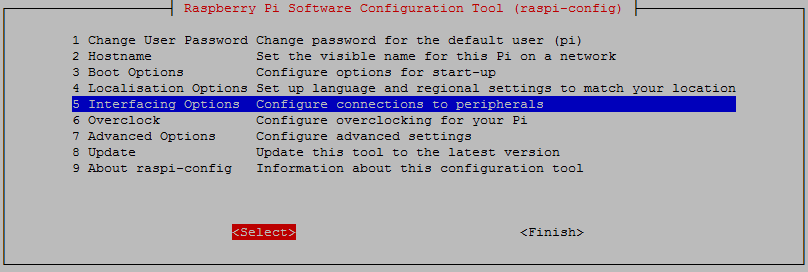

Use the up and down arrow keys to move the highlighted section to the selection you want to make, then press Tab to highlight the <Select> option (or <Finish> if you’ve finished).

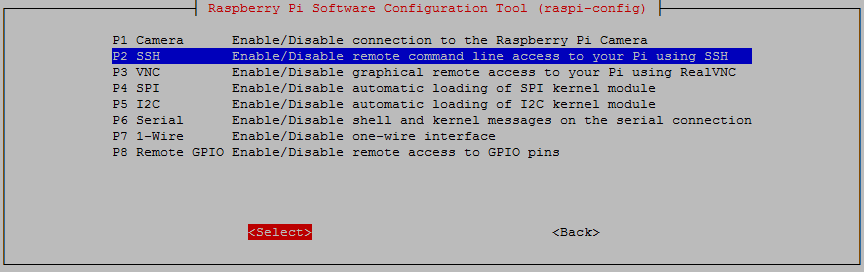

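To enable SSH select ‘5 Interfacing Options’ from the main menu (in more recent versions of raspi-config this is ‘3 Interface Options’).

From here we select ‘P2 SSH’ (the numbering of this entry can also differ between versions).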

And we should be done!

Setting up the Client (Windows)

The client software we will use is called ‘PuTTY’. It is open source and available for download from its official website.

On the download page there are a range of options available for use. The best option for us is most likely under the ‘For Windows on Intel x86’ heading and we should just download the ‘putty.exe’ program.

Save the file somewhere logical as it is a stand-alone program that will run when you double click on it (you can make life easier by placing a short-cut on the desktop).

Once we have the file saved, run the program by double clicking on it and it will start without problem.

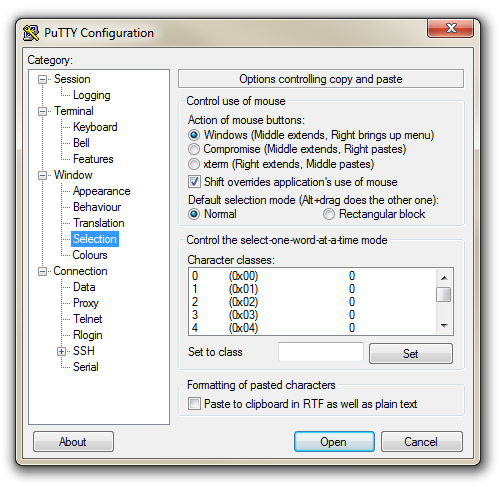

The first thing we will set-up for our connection is the way that the program recognises how the mouse works. In the ‘Window’ Category on the left of the PuTTY Configuration box, click on the ‘Selection’ option. On this page we want to change the ‘Action of mouse’ option from the default of ‘Compromise (Middle extends, Right paste)’ to ‘Windows (Middle extends, Right brings up menu)’. This keeps the standard Windows mouse actions the same when you use PuTTY.

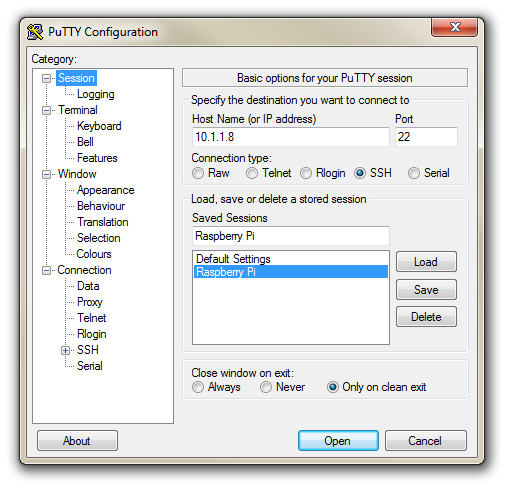

Now select the ‘Session’ Category on the left hand menu. Here we want to enter the static IP address that we set up earlier (10.1.1.110 in the example that we have been following, but use your own), and because we would like to access this connection on a frequent basis we can enter a name for it as a saved session (in the screen-shot below it is imaginatively called ‘Raspberry Pi’). Then click on ‘Save’.

Now we can select our Raspberry Pi session (per the screen-shot above) and click on the ‘Open’ button.

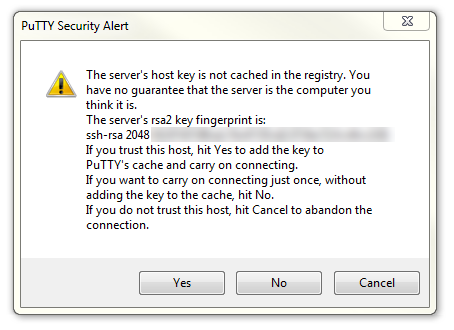

The first thing you will be greeted with is a window asking if you trust the host that you’re trying to connect to.

In this case it is a pretty safe bet to click on the ‘Yes’ button to confirm that we know and trust the connection.

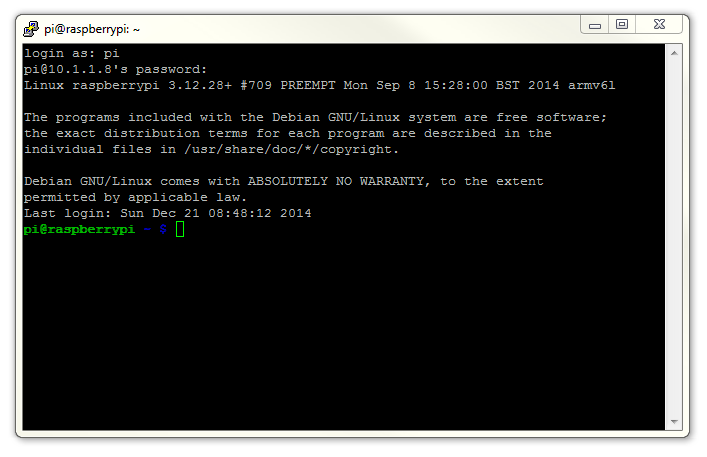

Once this is done, a new terminal window will be shown with a ‘login as:’ prompt. Here we can enter our user name (‘pi’) and then our password (if it’s still the default, the password is ‘raspberry’).

There you have it. A command line connection via SSH. Well done.

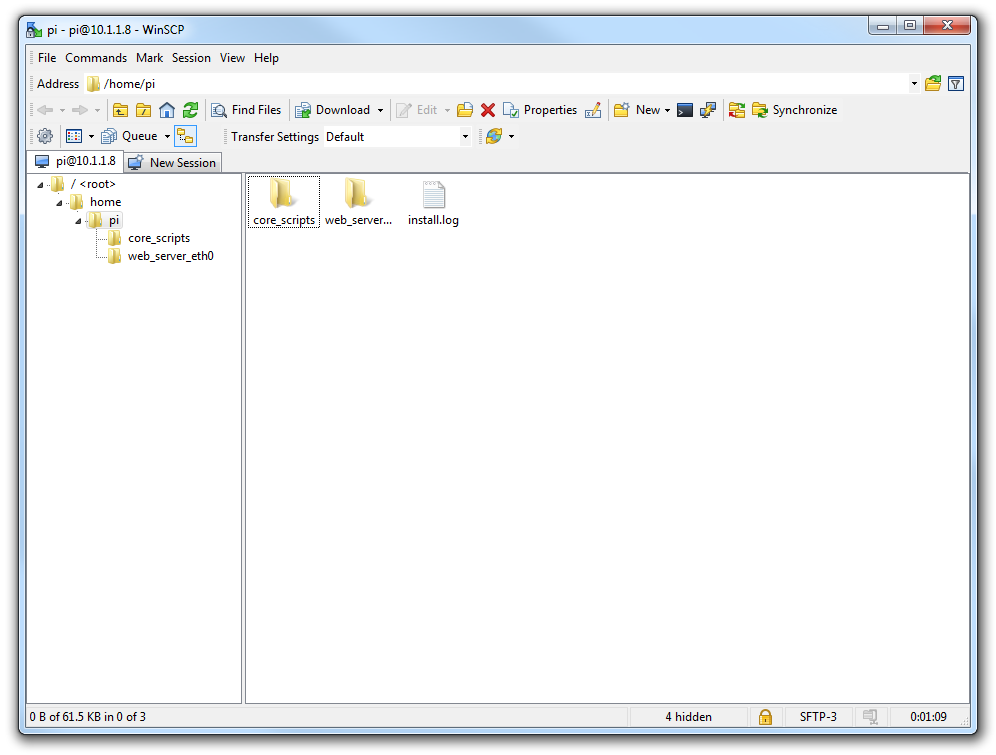

WinSCP

To make the process of transferring files from Windows easier I would recommend the program WinSCP. (If you’re using Linux I will make the assumption that you know how to do the equivalent using SCP.)
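For completeness, a typical scp command from a Linux desktop might look like this; the file name is hypothetical and 10.1.1.110 is the example address used in this book:

```shell
# Copy a local file (hypothetical name) to the pi user's home directory on the Pi
scp myfile.txt pi@10.1.1.110:/home/pi/
```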

This provides a very intuitive way to copy files between your desktop and the Pi.

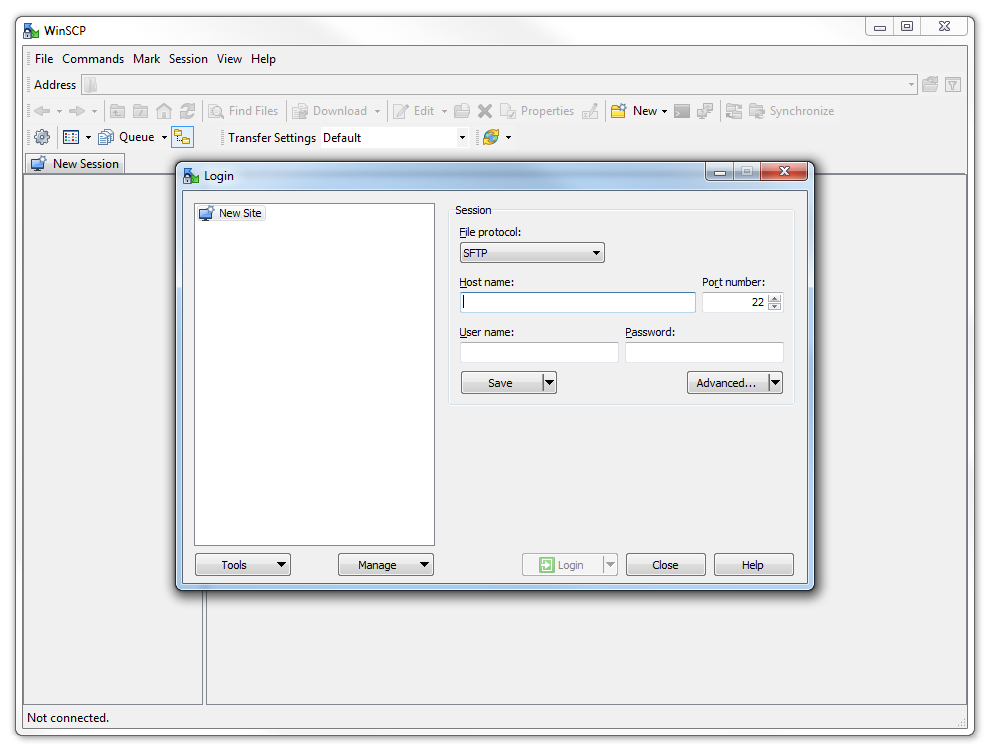

Download and install the program. Once installed, click on the desktop icon.

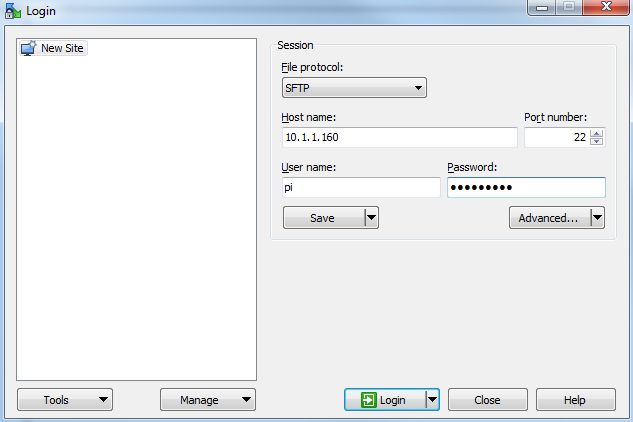

The program opens with the default login page. Fill in the ‘Host name’ field with the IP address of the Pi, and enter the username and password for the Pi.

Click on ‘Save’ to save the login details for ease of future access.

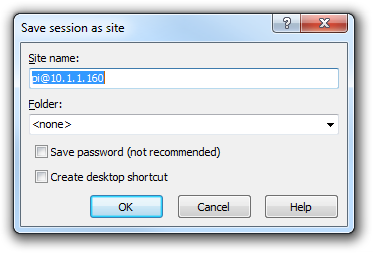

Enter the ‘Site name’ as a name for the Pi, or leave it as the default (the user and IP address). Tick ‘Save password’ for a convenient but insecure way to avoid typing in the username and password in the future. Then press OK.

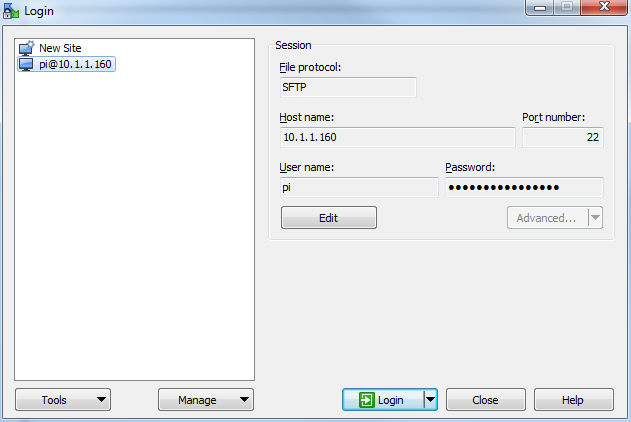

The saved login details now appear on the left hand pane. Click on ‘Login’ to log in to the Pi.

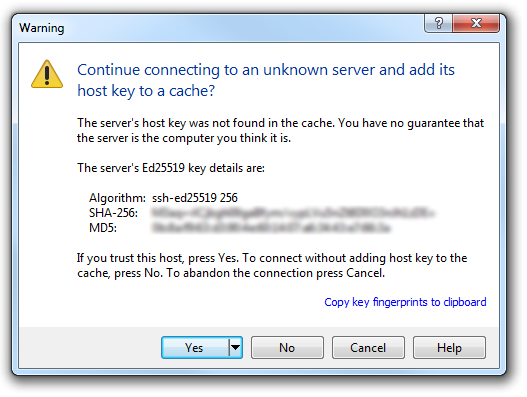

We will receive a warning about connecting to an unknown server for the first time. Assuming that we are comfortable doing this (i.e. that we know that we are connecting to the Pi correctly) we can click on ‘Yes’.

There is a possibility that it might fail on its first attempt, but tell it to reconnect if it does and we should be in!

Here we can see a familiar tree structure for file management and we have the ability to copy files via dragging and dropping them into place.

Assuming that we already have PuTTY installed we should be able to click on the ‘Open Session in PuTTY’ icon and we will get access to the command line.

If this is the first time that you’ve done something like this (remotely accessing a computer) it can be a very liberating feeling. Nice job.

Setting up a WiFi Network Connection

Our set-up of the Raspberry Pi will allow us to carry out all the (computer interface) interactions via a remote connection. However, the Raspberry Pi is currently making that remote connection via a fixed network cable. It could be argued that the fewer connections we need to run to our machine, the better. The most obvious solution to this conundrum is to enable a wireless connection.

It should be noted that enabling a wireless network will not be a requirement for everyone, and as such, I would only recommend it if you need to. If you’re using a Model 3B, 3B+, 4, 5, Zero W or Zero 2 W you have WiFi built in; otherwise you will need to purchase a USB WiFi dongle and correctly configure it.

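We should also note that the configuration of your WiFi connection can be set using the Raspberry Pi Imager as mentioned earlier in the book. The methods below (editing wpa_supplicant.conf and dhcpcd.conf) apply to OS versions prior to bookworm; from bookworm onward, WiFi is managed by NetworkManager (nmcli).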

Built in WiFi Enabling

We need to edit the file wpa_supplicant.conf at /etc/wpa_supplicant/wpa_supplicant.conf. This looks like the following;

Use the nano command as follows;
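Since the file is owned by root, the command is most likely:

```shell
# Edit the WiFi client configuration with root privileges
sudo nano /etc/wpa_supplicant/wpa_supplicant.conf
```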

We need to add the ssid (the wireless network name) and the password for the WiFi network here so that the file looks as follows (using your ssid and password of course);
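A sketch of the entry to add at the end of the file, assuming a WPA/WPA2 network; "MyNetwork" and "MyPassword" are placeholders for your own ssid and password:

```
# Placeholder network name and password; replace with your own
network={
    ssid="MyNetwork"
    psk="MyPassword"
}
```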

Make the changes operative

To allow the changes to become operative we can type in;
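As with the wired connection, this is most likely a reboot:

```shell
sudo reboot
```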

Once we have rebooted, we can check the status of our network interfaces by typing in;
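The command here is most likely ifconfig, which lists every network interface:

```shell
# List all network interfaces (eth0, lo and wlan0) with their addresses
ifconfig
```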

This will display the configuration for our wired Ethernet port, our ‘Local Loopback’ (a fancy way of saying a network connection for the machine that you’re using that doesn’t require an actual network; ignore it for the moment) and the wlan0 connection, which should look a little like this;

flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.1.1.99 netmask 255.255.255.0 broadcast 10.1.1.255

inet6 fe80::8b9f:3e4f:dcf0:12a9 prefixlen 64 scopeid 0x20<link>

ether b8:27:eb:e3:b7:f2 txqueuelen 1000 (Ethernet)

RX packets 51 bytes 9384 (9.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 35 bytes 6078 (5.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

This would indicate that our wireless connection has been assigned the dynamic IP address 10.1.1.99.

We should be able to test our connection by connecting to the Pi via SSH and ‘PuTTY’ on the Windows desktop using the address 10.1.1.99.

In theory you are now the proud owner of a computer that can be operated entirely separately from all connections except power!

Make the built in WiFi IP address static

In the same way that we would edit the /etc/dhcpcd.conf file to set up a static IP address for our physical connection (eth0) we will now edit it with the command…
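As for the wired connection, the command is most likely:

```shell
sudo nano /etc/dhcpcd.conf
```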

This time we will add the details for the wlan0 connection to the end of the file. Those details (assuming we will use the 10.1.1.110 IP address) should look like the following;
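A sketch of those lines, assuming the address previously assigned to the wired connection (10.1.1.110) and 10.1.1.1 for the router and DNS server; substitute the addresses you chose:

```
# Static IP configuration for the built-in wireless interface (example addresses)
interface wlan0
static ip_address=10.1.1.110/24
static routers=10.1.1.1
static domain_name_servers=10.1.1.1
```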

Our wireless LAN (wlan0) is now designated to use a static IP address (with the details that we had previously assigned to our wired connection). The ‘ssid’ (the network name) and the password for the network were already set in wpa_supplicant.conf.

Make the changes operative

To allow the changes to become operative we can type in;

We’re done!

WiFi Via USB Dongle

Using an external USB WiFi dongle can be something of an exercise if not done right. In my own experience, I found that choosing the right wireless adapter was the key to making the job simple enough to be able to recommend it to new users. Not all WiFi adapters are well supported and if you are unfamiliar with the process of installing drivers or compiling code, then I would recommend that you opt for an adapter that is supported and will work ‘out of the box’. There is an excellent page on elinux.org which lists different adapters and their requirements. I eventually opted for the Edimax EW-7811Un which literally ‘just worked’ and I would recommend it to others for its ease of use and relatively low cost (approximately $15 US).

To install the wireless adapter we should start with the Pi powered off and plug the adapter into a convenient USB port. When we turn the power on we will see the normal range of messages scroll by, but if we’re observant we will note that there are a few additional lines concerning a USB device. These lines will most likely scroll past, but once the device has finished powering up and we have logged in we can type in…

… which will show us a range of messages about drivers that are loaded to support discovered hardware.
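The command being described is most likely dmesg, which prints the kernel’s message buffer. Depending on the release it may need sudo:

```shell
# Print the kernel message buffer, including messages from USB device detection
sudo dmesg
# Optionally narrow the output to USB-related lines
sudo dmesg | grep -i usb
```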

Somewhere in that list (hopefully towards the end) will be a series of messages that describe the USB connectors and what is connected to them. In particular we should see a group that looks a little like the following;

That is our USB adapter which is plugged into USB slot 2 (which is the ‘2’ in usb 1-1.2:). The manufacturer is listed as ‘Realtek’ as this is the manufacturer of the chip-set in the adapter that Edimax uses.

Editing files

We need to edit two files. The first is the file wpa_supplicant.conf at /etc/wpa_supplicant/wpa_supplicant.conf. This looks like the following;

Use the nano command as follows;

We need to add the ssid (the wireless network name) and the password for the WiFi network here so that the file looks as follows (using your ssid and password of course);

Make the changes operative

To allow the changes to become operative we can type in;

Once we have rebooted, we can check the status of our network interfaces by typing in;

This will display the configuration for our wired Ethernet port, our ‘Local Loopback’ (a fancy way of saying a network connection for the machine that you’re using that doesn’t require an actual network; ignore it for the moment) and the wlan1 connection, which should look a little like this;

flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.1.1.97 netmask 255.255.255.0 broadcast 10.1.1.255

inet6 fe80::c4e4:a6e5:9788:d2c2 prefixlen 64 scopeid 0x20<link>

ether 00:ec:0b:4c:6b:99 txqueuelen 1000 (Ethernet)

RX packets 106 bytes 18616 (18.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 34 bytes 5681 (5.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

This would indicate that our wireless connection has been assigned the dynamic IP address 10.1.1.97.

We should be able to test our connection by connecting to the Pi via SSH and ‘PuTTY’ on the Windows desktop using the address 10.1.1.97.

Make USB WiFi IP address static

In the same way that we would edit the /etc/dhcpcd.conf file to set up a static IP address for our physical connection (eth0) we will now edit it with the command…

This time we will add the details for the wlan1 connection to the end of the file. Those details (assuming we will use the 10.1.1.110 IP address) should look like the following;
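A sketch of the wlan1 lines, using the 10.1.1.110 example address and 10.1.1.1 for the router and DNS server (adjust for your network):

```
# Static IP configuration for the USB wireless adapter (example addresses)
interface wlan1
static ip_address=10.1.1.110/24
static routers=10.1.1.1
static domain_name_servers=10.1.1.1
```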

Make the changes operative

To allow the changes to become operative we can type in;

We’re done!

About Prometheus

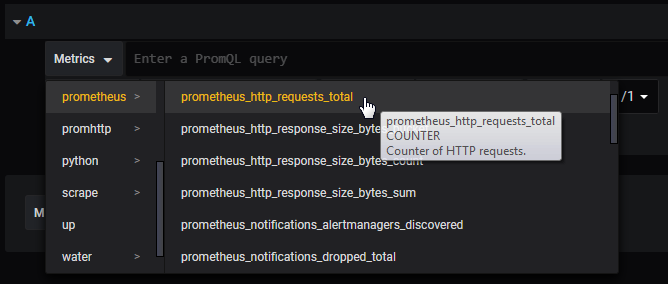

Prometheus is an open source application used for monitoring and alerting. It records real-time metrics in a time series database built using an HTTP pull model.

It is licensed under the Apache 2 License, with source code available on GitHub.

It was created because of the need to monitor multiple microservices that might be running in a system. It employs a modular architecture and uses modules called exporters, which allow the capture of metrics from a range of platforms, IT hardware and software. Prometheus is written with easily distributed binaries which allow it to run standalone with no external dependencies.

Prometheus’s ‘pull model’ of metrics gathering means that it will actively request information for recording. The alternative (and both have strengths) is a ‘push model’ which occurs when information is pushed to a recording service without being asked. InfluxDB is a popular piece of software that employs that model.

Prometheus collects metrics at regular intervals and stores them locally. These metrics are pulled from nodes that run ‘exporters’. An exporter can be defined as a module that extracts information and translates it into the Prometheus format.

Prometheus has an alert manager that can notify a follow-on endpoint if something is awry, and it has a visualisation component that is useful for testing. However, it is commonly used in combination with the Grafana platform, which has a very powerful visualisation capability.

Prometheus data is stored as metrics, with each having a name that is used for referencing and querying. This is what makes it very good at recording time series data. To add dimensionality, each metric can be drilled down by an arbitrary number of key=value pairs (labels). Labels can include information on the data source and other application-specific information.
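To make this concrete, here is roughly what an exporter exposes for Prometheus to pull, in Prometheus’s text format. The metric and label names are ones the Node Exporter uses, but the values are made up for illustration:

```
# HELP node_cpu_seconds_total Seconds the CPUs spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 12345.67
node_cpu_seconds_total{cpu="0",mode="user"} 234.5
```

Here node_cpu_seconds_total is the metric name, and the cpu and mode labels are the key=value pairs that let us drill down into a single CPU or mode.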

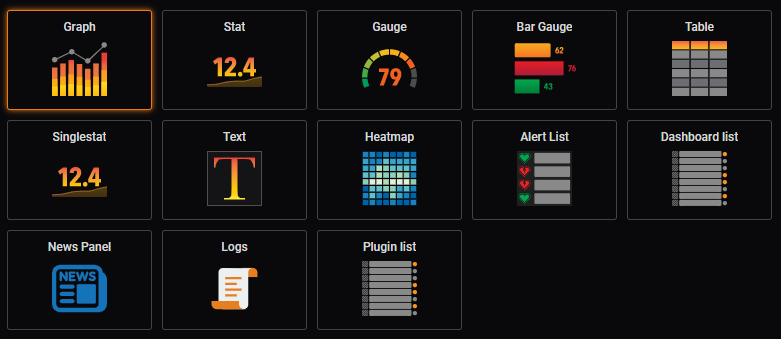

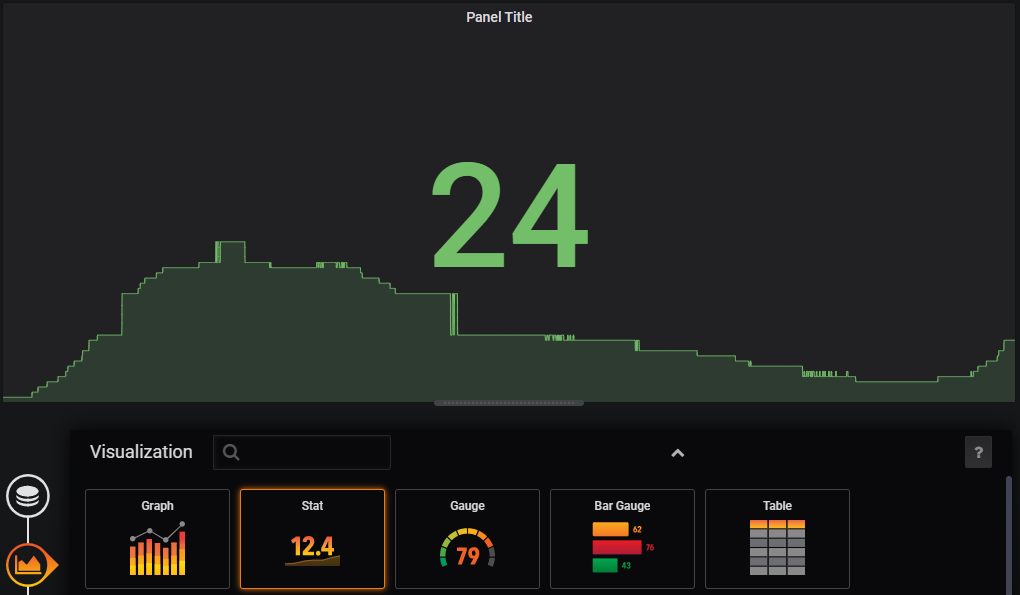

About Grafana

Grafana is an open-source, general purpose dashboard and visualisation tool, which runs as a web application. It supports a range of data inputs such as InfluxDB or Prometheus.

It allows you to visualise and alert on your metrics, as well as allowing for the creation of dynamic and reusable dashboards.

Grafana is open source and covered by the Apache 2.0 license and its source code is available on GitHub.

Three of the primary strengths of Grafana are;

- A powerful engine for the building of dashboards that can contain a wide range of different visualisation techniques.

- The ability to display dynamic data from multiple sources in a way that allows for multi-dimensional integration.

- An alerting engine that provides the ability to attach rules to dashboard panels. These rules provide the facility to trigger alerts and notifications.

Installation

While the Raspberry Pi comes with a range of software already installed on the Raspberry Pi OS distribution (even the Lite version), we will need to download and install Prometheus and Grafana separately.

If you’re sneakily starting reading from this point, make sure that you update and upgrade the Raspberry Pi OS before continuing.

Installing Prometheus

The first thing that we will want to do before installing Prometheus is to determine what the latest version is. To do this browse to the download page here - https://prometheus.io/download/. There are a number of different software packages available, but before looking at any of them it’s important that we select the correct architecture for our Raspberry Pi. The Pi 4 (which I’m using for this install) has a 64-bit Arm CPU, but the 32-bit version of Raspberry Pi OS runs software built for the Arm v7 architecture, so use the drop-down box to show armv7 options (on the 64-bit version of the OS, arm64 would be the one to choose).

Note the name or copy the URL for the file that is presented. On the 7th of January 2024, the version that was available was 2.48.1. The full URL was something like;

https://github.com/prometheus/prometheus/releases/download/v2.48.1/prometheus-2.48.1.linux-armv7.tar.gz

We can see that ‘armv7’ is even in the name. That’s a great way to confirm that we’re on the right track.
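Since the file name follows a fixed pattern (version plus architecture), a small bash sketch can build it for us, which may help when upgrading later. The helper names prom_arch and prom_url are hypothetical, and the mapping assumes the architecture names reported by dpkg --print-architecture:

```shell
#!/usr/bin/env bash
# Map the architecture reported by 'dpkg --print-architecture' to the suffix
# used in Prometheus release file names. Assumption: armhf means an ARMv7-capable
# Pi (2, 3, 4, 5 or Zero 2 W); a Pi 1 or original Zero would need the armv6 build.
prom_arch() {
  case "$1" in
    armhf) echo armv7 ;;
    arm64) echo arm64 ;;
    amd64) echo amd64 ;;
    *) echo "unknown architecture: $1" >&2; return 1 ;;
  esac
}

# Build the download URL following the pattern of the 2.48.1 URL above.
prom_url() {
  local version="$1" arch="$2"
  echo "https://github.com/prometheus/prometheus/releases/download/v${version}/prometheus-${version}.linux-${arch}.tar.gz"
}

prom_url 2.48.1 "$(prom_arch armhf)"
```

On the Pi itself the pieces could be combined as `wget "$(prom_url 2.48.1 "$(prom_arch "$(dpkg --print-architecture)")")"`, though it is still worth checking the download page for the current version number.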

On our Pi we will start the process in the pi user’s home directory (/home/pi/). We will initiate the download process with the wget command as follows (the command below will break across lines, don’t just copy - paste it);
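Using the URL noted above, the download would look like this (shown on a single line):

```shell
# Change to the home directory (/home/pi when logged in as pi)
cd ~
# Download the Prometheus release archive
wget https://github.com/prometheus/prometheus/releases/download/v2.48.1/prometheus-2.48.1.linux-armv7.tar.gz
```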

The file that is downloaded is compressed, so once the download is finished we will want to expand it. For this we use the tar command:

Let’s do some housekeeping and remove the original compressed file with the rm (remove) command:
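Again assuming the file name used above:

```shell
# The archive is no longer needed once it has been extracted.
rm prometheus-2.48.1.linux-armv7.tar.gz
```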

We now have a directory called prometheus-2.48.1.linux-armv7. While that’s nice and descriptive, for the purposes of simplicity it will be easier to deal with a directory with a simpler name. We will therefore use the mv (move) command to rename the directory to just ‘prometheus’ thusly:

Believe it or not, that is as hard as the installation gets. Everything from here is configuration in one form or another. However, the first part of that involves making sure that Prometheus starts up automatically at boot. We will do this by setting it up as a service so that it can be easily managed and started.

The first step in this process is to create a service file which we will call prometheus.service. We will put this in the /etc/systemd/system/ directory.

Paste the following text into the file and save and exit.
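A minimal unit file along these lines should work, assuming the install lives in /home/pi/prometheus and runs as the pi user. The data directory passed to `--storage.tsdb.path` is my own choice and can be anywhere the pi user can write to:

```ini
[Unit]
Description=Prometheus Server
Documentation=https://prometheus.io/docs/introduction/overview/
Wants=network-online.target
After=network-online.target

[Service]
# Run as the unprivileged pi user rather than root.
User=pi
Restart=on-failure
# The binary, its configuration file and where it stores its time-series data.
ExecStart=/home/pi/prometheus/prometheus \
  --config.file=/home/pi/prometheus/prometheus.yml \
  --storage.tsdb.path=/home/pi/prometheus/data

[Install]
WantedBy=multi-user.target
```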

The service file can contain a wide range of configuration information, and in our case there are only a few details. The most interesting are the ‘ExecStart’ details, which describe where to find the prometheus executable and what options it should use when starting. In particular, we should note the location of the prometheus.yml file, which we will use in the future when adding things for Prometheus to monitor.

Before starting our new service we will need to reload the systemd manager configuration. This essentially takes changed configurations from our file system and makes them ready to be used. We have added a service, so systemd needs to know about it before it can start it.

Now we can start the Prometheus service.

The terminal won’t give any indication that things have gone well, so it’s a good idea to check Prometheus’s status as follows:

We should see a report back that indicates (amongst other things) that Prometheus is active and running.

Now we will enable it to start on boot.

To check that this is all working well we can use a browser to verify that Prometheus is serving metrics about itself by navigating to its own metrics endpoint at http://10.1.1.110:9090/metrics (or at least at the IP address of your installation).

A long list of information should be presented in the browser that will look a little like the following;

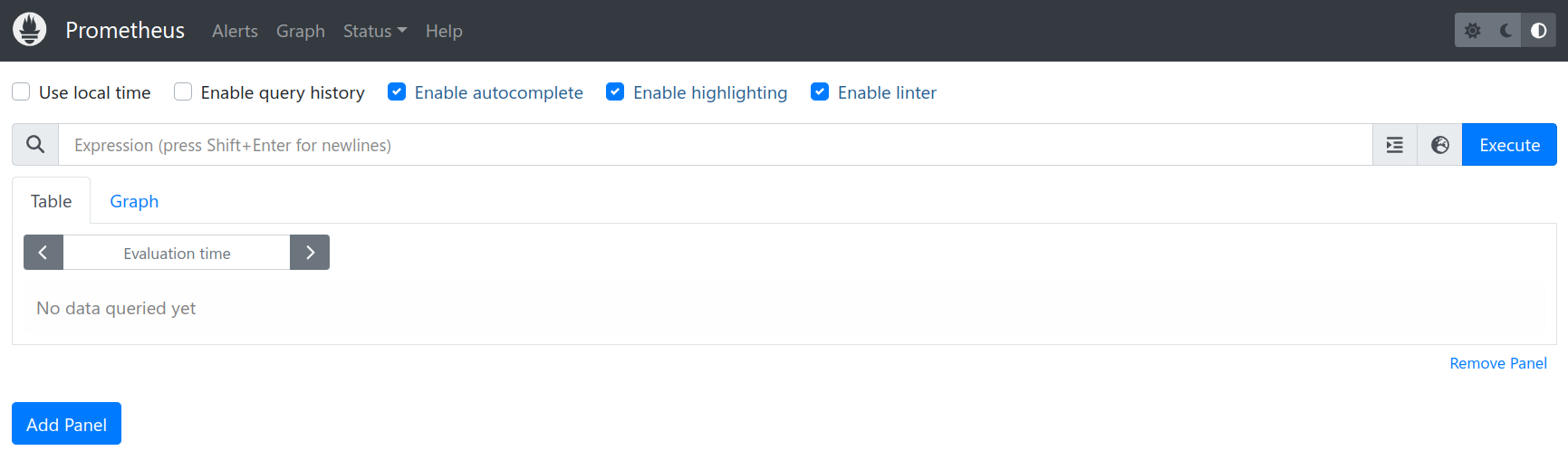

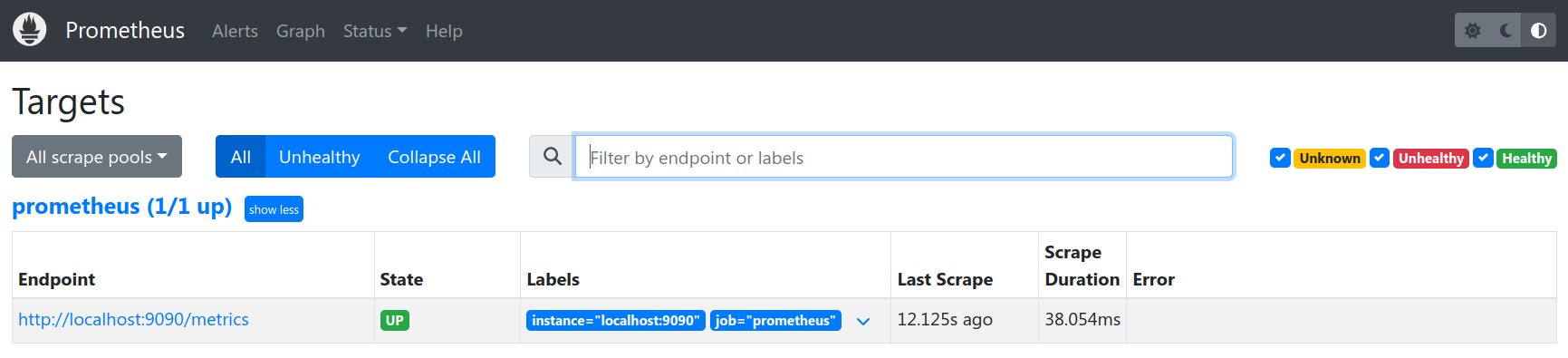

We can now go to a browser and enter the IP address of our installation with the port :9090 to confirm that Prometheus is operating. In our case that’s http://10.1.1.110:9090.

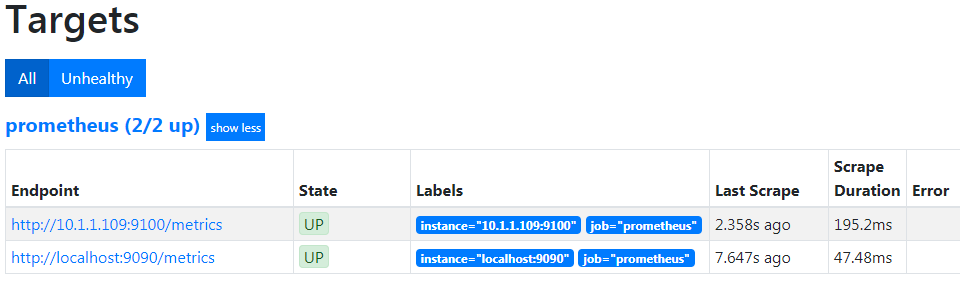

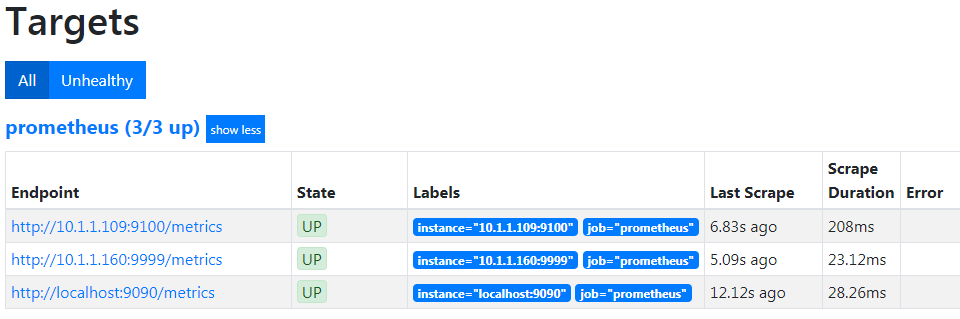

If we now go to the ‘Status’ drop down menu and select ‘Targets’ we can see the list of targets that Prometheus is currently scraping metrics from.

From the information above, we can see that Prometheus is already monitoring itself as a target!

There’s still plenty more to do on configuring Prometheus (specifically setting up exporters), but for the meantime we will leave the process here and set up Grafana.

Installing Grafana

There are two different ways that we can go about installing Grafana: manually, in a way very much like the one that we used for Prometheus, or via the package management tool ‘apt-get’. Both methods are described below, but I recommend the ‘apt-get’ method as it is simpler and allows for easier updating.

Installing Grafana Manually

In much the same way that we installed Prometheus, the first thing we need to do is to find the right version of Grafana to download. To do this browse to the download page here - https://grafana.com/grafana/download?platform=arm. There are a number of different ways to install Grafana. We are going to opt for the simple method of installing a standalone binary in much the same way that we installed Prometheus.

The download page noted above goes straight to the ARM download page. We will be looking for the ‘Standalone Linux Binaries’ for ARMv7. Note the name or copy the URL for the file that is presented. On the 7th of January 2024, the version that was available was 10.2.3. The full URL was something like https://dl.grafana.com/oss/release/grafana-10.2.3.linux-armv7.tar.gz.

On our Pi we will start the process in the pi user’s home directory (/home/pi/). We will initiate the download process with the wget command as follows (the command below may wrap across lines, so don’t just copy and paste it):

The file that is downloaded is compressed, so once the download is finished we will want to expand it. For this we use the tar command:

Housekeeping time again. Remove the original compressed file with the rm (remove) command:
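Again assuming the same file name:

```shell
rm grafana-10.2.3.linux-armv7.tar.gz
```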

We now have a directory called grafana-10.2.3. While that’s nice and descriptive, for the purposes of simplicity it will be easier to deal with a directory with a simpler name. We will therefore use the mv (move) command to rename the directory to just ‘grafana’ thusly:

Again, now we need to make sure that Grafana starts up automatically at boot. We will do this by setting it up as a service so that it can be easily managed and started.

The first step in this process is to create a service file which we will call grafana.service. We will put this in the /etc/systemd/system/ directory.

Paste the following text into the file and save and exit.
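A minimal unit file along these lines should work, assuming Grafana was renamed to /home/pi/grafana and runs as the pi user. In Grafana 10 the server is started with the `grafana server` subcommand (older releases used `bin/grafana-server`, which 10.x still ships as a deprecated wrapper):

```ini
[Unit]
Description=Grafana Server
After=network.target

[Service]
Type=simple
User=pi
# Run from the install directory so Grafana finds its conf, data and public folders.
WorkingDirectory=/home/pi/grafana
ExecStart=/home/pi/grafana/bin/grafana server --homepath=/home/pi/grafana
# Restart after a crash, waiting 10 seconds between attempts.
Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target
```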

The service file can contain a wide range of configuration information, and in our case there are only a few details. The most interesting are the ‘ExecStart’ details, which describe where to find the Grafana executable.

Before starting our new service we will need to reload the systemd manager configuration again.

Now we can start the Grafana service.

The terminal won’t give any indication that things have gone well, so it’s a good idea to check Grafana’s status as follows:

We should see a report back that indicates (amongst other things) that Grafana is active and running.

Now we will enable it to start on boot.

Installing Grafana Automatically Using ‘apt-get’

So while we call this an automatic method, there is still some manual work to set things up at the start.

First we need to add the APT key used to authenticate the packages:

If we’re using one of the more modern versions of Raspberry Pi OS we may get a warning that apt-key is deprecated. There will come a time in the future when we will need to move to trusted.gpg.d, but that will be a job for our future selves.

Now we need to add the Grafana APT repository (the command below may wrap across lines, so don’t just copy and paste it):

With those pieces of set-up out of the way, we can install Grafana:

Grafana is now installed, but to make sure that it starts up when our Pi is restarted, we need to enable and start the grafana-server systemd service.

First enable the Grafana server:

Then start the Grafana server:

Using Grafana

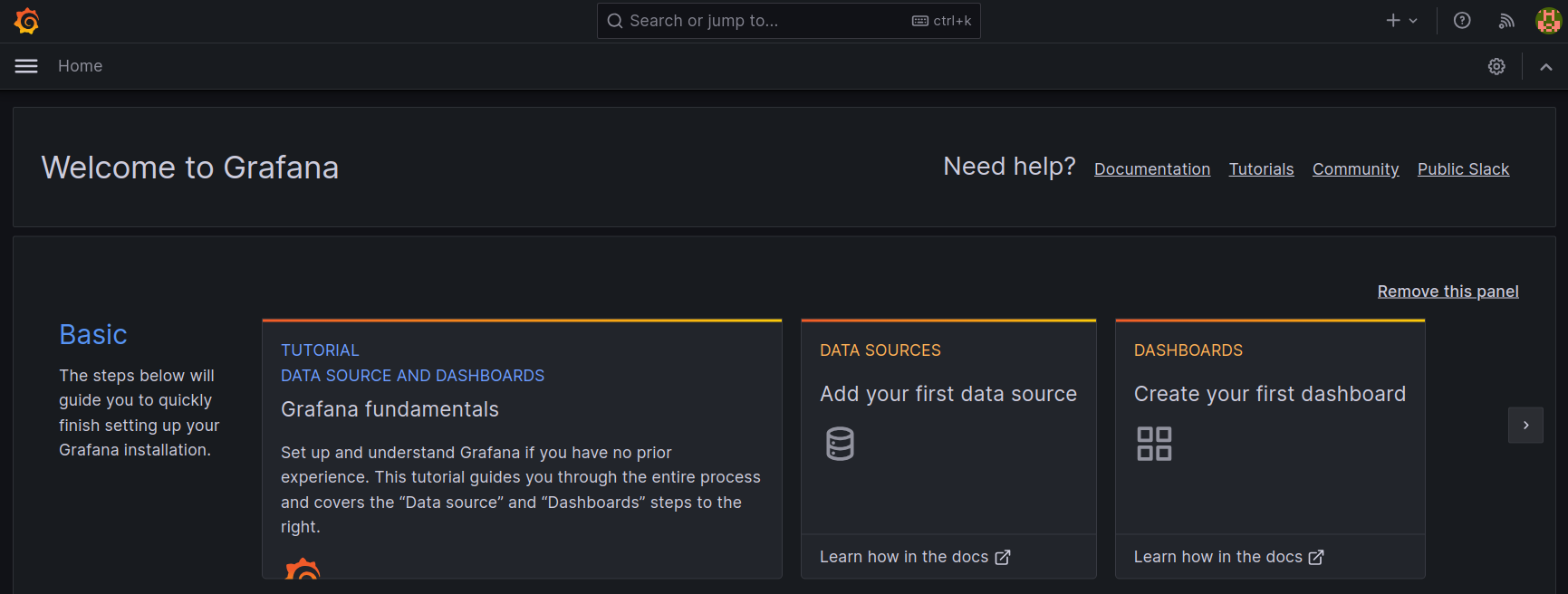

At this point we have Grafana installed and configured to start on boot. Let’s start exploring!

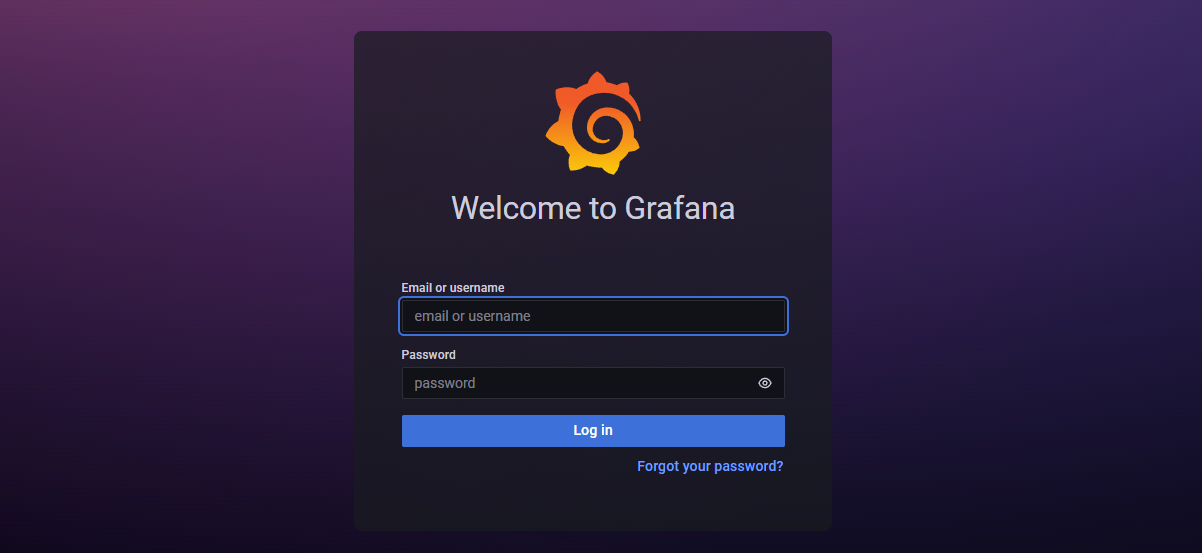

Using a browser, connect to the Grafana server: http://10.1.1.110:3000.

The default username and password are admin/admin. Grafana will ask you to change the password when you first log in.

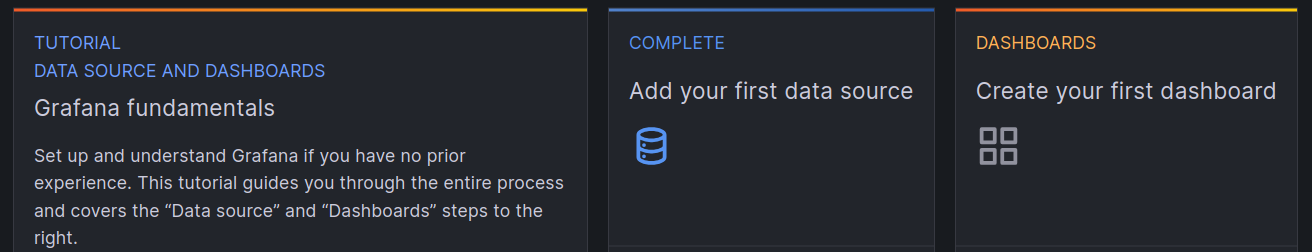

The first configuration to be made will be to create a data source that Grafana will use to collect metrics. Of course, this will be our Prometheus instance.

From the main page select the panel to add our first data source.

Then select ‘Add Data Source’ and then select Prometheus as that data source.

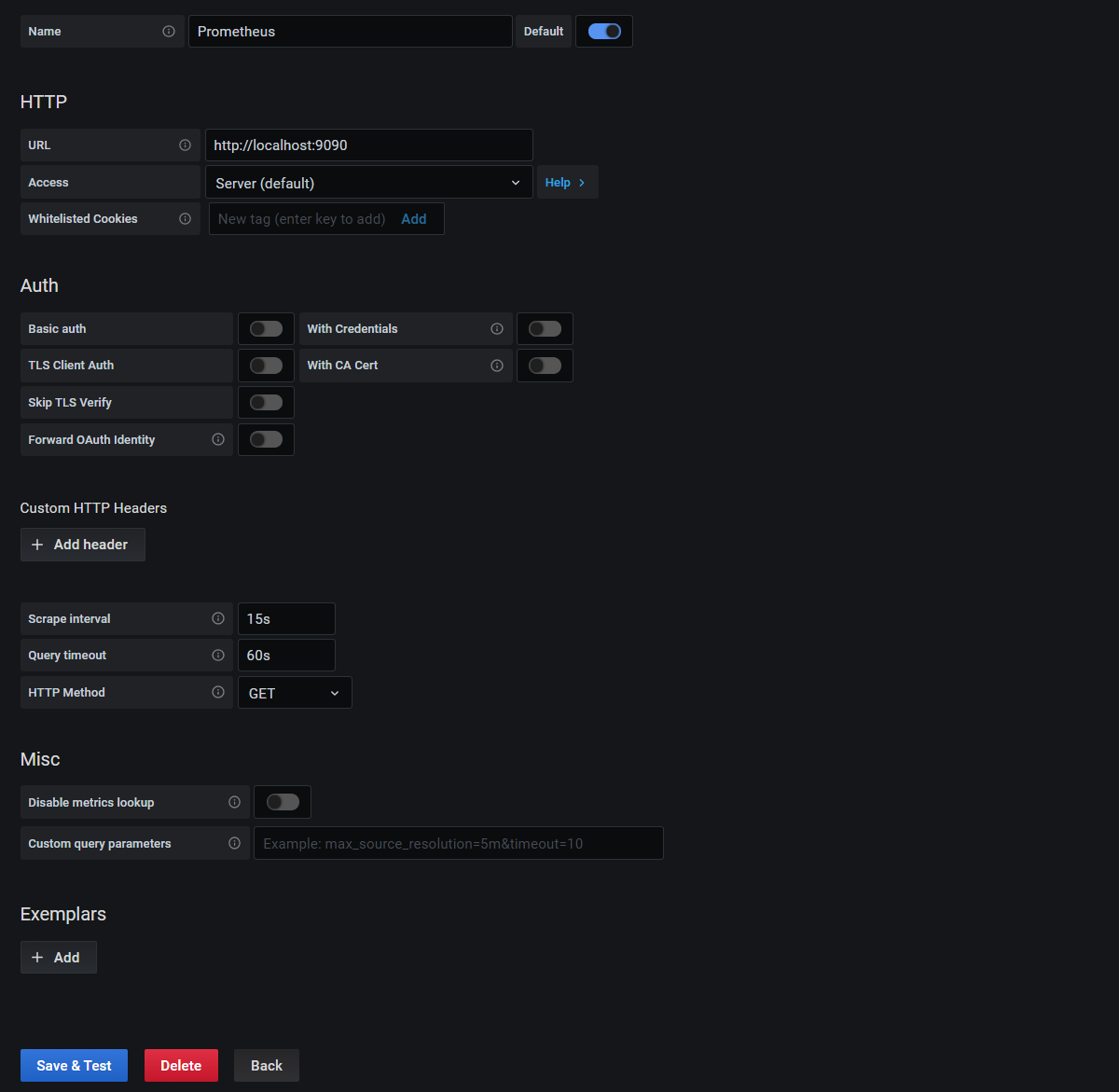

Now we get to configure some of the settings for our connection to Prometheus.

In this case we can set the URL as ‘http://localhost:9090’ (since both Prometheus and Grafana are installed on the same server), leave the scrape interval as ‘15s’, the query timeout as ‘60s’ and the HTTP method as ‘GET’. Be aware that on some versions of Grafana you will need to explicitly type in the URL ‘http://localhost:9090’ (even though it appears in the field as greyed-out placeholder text), or you may receive an error when you test in the next step.

Then click on the ‘Save & Test’ button.

We should get a nice tick to indicate that the data source is working.

Great!

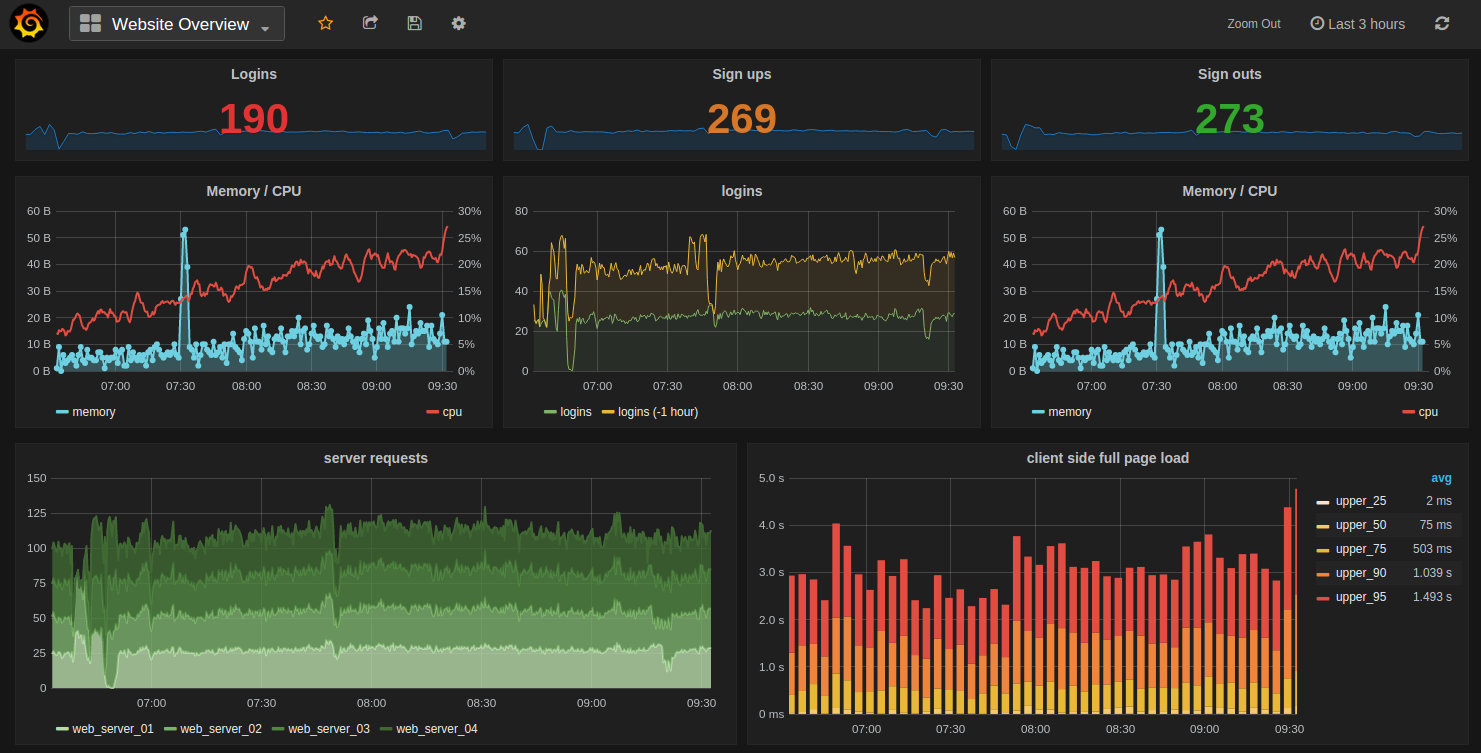

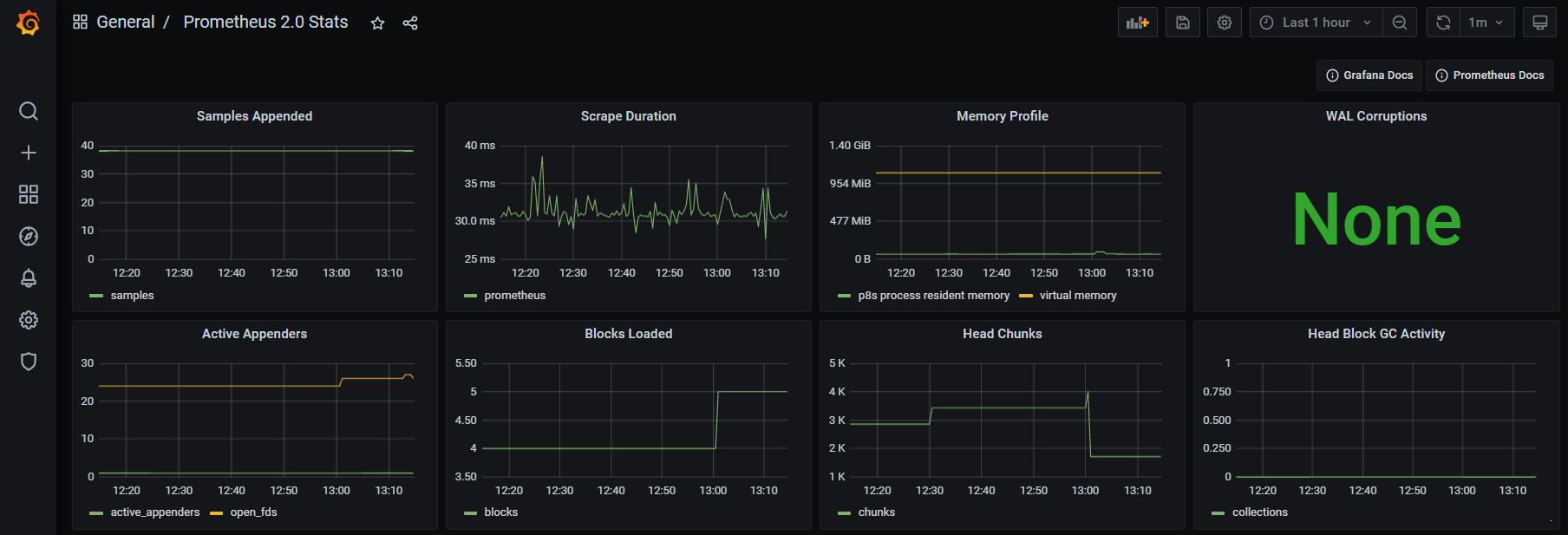

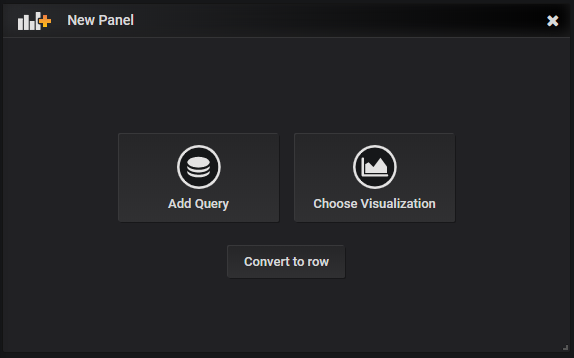

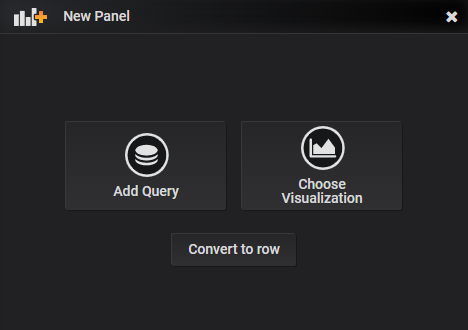

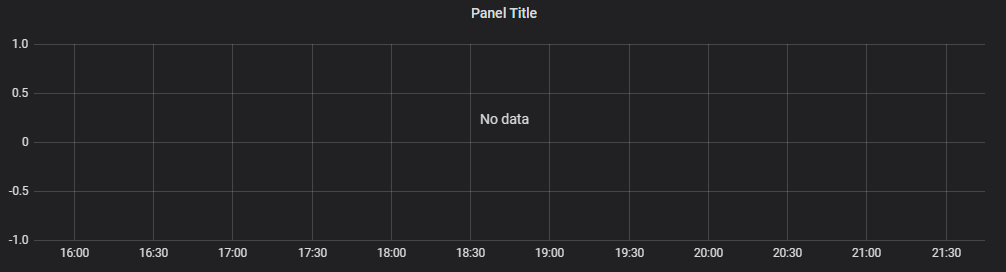

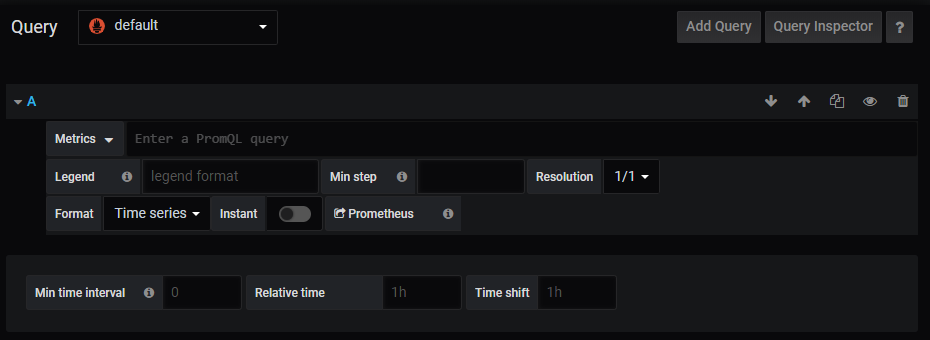

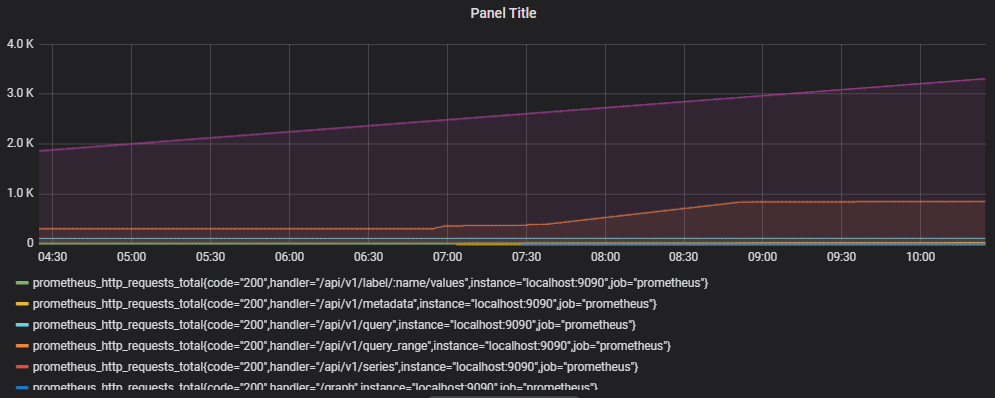

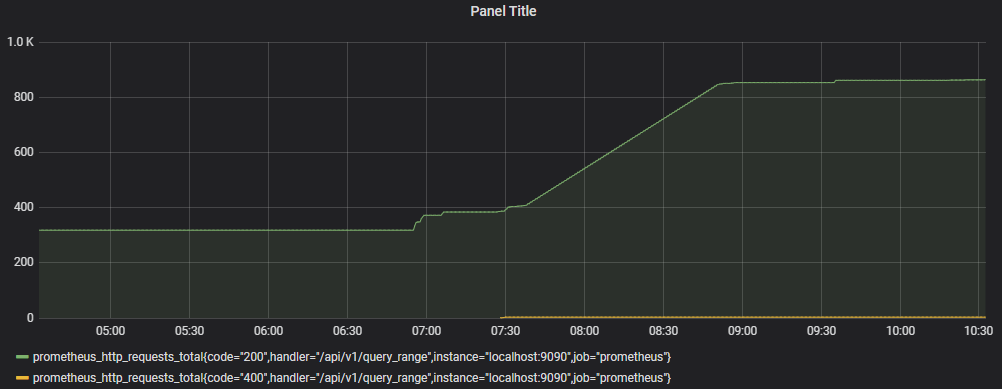

Now things start to get just a little bit exciting. Remember the metrics that Prometheus was exposing on its /metrics page? Those were the metrics that report on how Prometheus is operating. In other words, it’s the monitoring system being monitored. We are going to use them for our first dashboard.

Here lies another strength of Grafana. Dashboards can be built and shared by users so that everyone benefits. We will import one such dashboard to show us how Prometheus is operating.

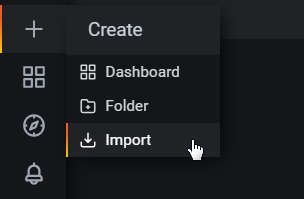

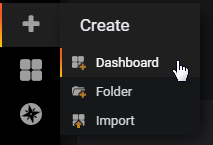

At the top left of our page, click on the icon to return to the home screen.

There is a ‘Dashboards’ panel to create our first dashboard. Let’s click on that to show some available dashboards for our Prometheus instance:

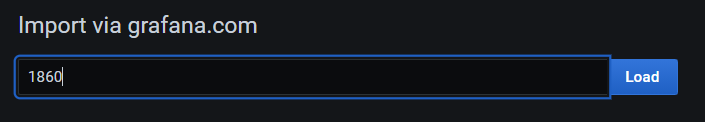

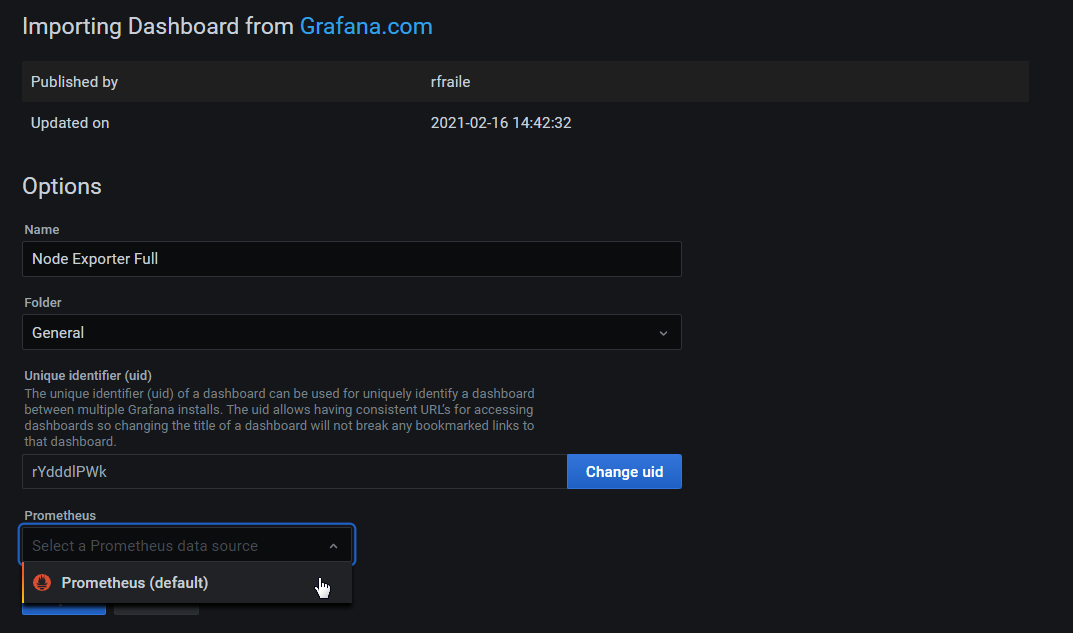

From here we can enter the Grafana dashboard ID number 3662 and click on the ‘Load’ button and then the ‘Import’ button.

And the dashboard will open.

How about that?

We should now be looking at a range of metrics that Grafana is querying from Prometheus and presenting in a nice fashion.

Trust me, this is just the start of our journey. As simple as the process of getting up and running and looking at a pretty graph is, there are plenty more pleasant surprises to come as we look at the flexibility and power of these two services.

Exporters

Gathering metrics in Prometheus involves pulling data from providers via agents called ‘exporters’. There is a wide range of pre-built exporters, and a range of client libraries for writing custom exporters. Exporters collect data from your infrastructure and expose it over HTTP for Prometheus to scrape.

Pre-built exporter examples include:

- Node Exporter: Which exposes a wide variety of hardware- and kernel-related metrics like disk usage, CPU performance, memory state, etcetera for Linux systems.

- SNMP Exporter: Which exposes information gathered via SNMP. Its most common use is to get metrics from network devices like firewalls and switches, and from devices that only support SNMP.

- Database Exporters: These are a range of exporters that can retrieve performance data from databases such as MySQL, MSSQL, PostgreSQL, MongoDB and more.

- Hardware Exporters: Exporters are available for a number of hardware platforms, including Dell, IBM, Netgear and Ubiquiti.

- Storage Platforms: Such as Ceph, Gluster and Hadoop.

For custom exporters, client libraries are available for languages including Go, Python, Java and JavaScript.

Node Exporter

As node_exporter is an official exporter available from the Prometheus site, and as the binary can be installed standalone, the installation process is very similar to the one we used for Prometheus. We’ll download it, decompress it and run it as a service.

In the installation below, node_exporter will be installed onto another Raspberry Pi operating on the local network at the IP address 10.1.1.109. It will expose that Pi’s system metrics, such as RAM, disk and CPU utilization, network and I/O.

First, browse to the download page here - https://github.com/prometheus/node_exporter/releases/. Remember that it’s important to select the correct architecture for our Raspberry Pi.

As the Pi that I’m going to monitor in this case is running a 32-bit OS that uses ARMv7 software, use the drop-down box to show the armv7 options.

Note the name or copy the URL for the node_exporter file that is presented. The full URL in this case is something like https://github.com/prometheus/node_exporter/releases/download/v1.3.1/node_exporter-1.3.1.linux-armv7.tar.gz.

Using a terminal, ssh into the node to be monitored. In our case, this will be as the pi user again on 10.1.1.109.

Once safely logged in and in the pi user’s home directory, we can start the download (remember, the command below may wrap across lines, so don’t just copy and paste it):

The file that is downloaded is compressed, so once the download is finished we will want to expand it. For this we use the tar command:

Housekeeping time again. Remove the original compressed file with the rm (remove) command:
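Again assuming the same file name:

```shell
rm node_exporter-1.3.1.linux-armv7.tar.gz
```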

We now have a directory called node_exporter-1.3.1.linux-armv7. Again, for the purposes of simplicity it will be easier to deal with a directory with a simpler name. We will therefore use the mv (move) command to rename the directory to just ‘node_exporter’ thusly:

Again, now we need to make sure that node_exporter starts up automatically at boot. We will do this by setting it up as a service so that it can be easily managed and started.

The first step in this process is to create a service file which we will call node_exporter.service. We will put this in the /etc/systemd/system/ directory.

Paste the following text into the file and save and exit.
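A minimal unit file along these lines should work, assuming node_exporter lives in /home/pi/node_exporter and runs as the pi user. With no extra flags it listens on its default port, 9100:

```ini
[Unit]
Description=Node Exporter
Wants=network-online.target
After=network-online.target

[Service]
# Run as the unprivileged pi user rather than root.
User=pi
Restart=on-failure
ExecStart=/home/pi/node_exporter/node_exporter

[Install]
WantedBy=multi-user.target
```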

The service file can contain a wide range of configuration information, and in our case there are only a few details. The most interesting are the ‘ExecStart’ details, which describe where to find the node_exporter executable.

Before starting our new service we will need to reload the systemd manager configuration again.

Now we can start the node_exporter service.

The terminal won’t give any indication that things have gone well, so it’s a good idea to check node_exporter’s status as follows:

We should see a report back that indicates (amongst other things) that node_exporter is active and running.

Now we will enable it to start on boot.

The exporter is now working and listening on port 9100.

To test that the service is working properly, use a browser with the URL http://10.1.1.109:9100/metrics

This should return a lot of statistics. They will look a little like this:

Now that we have a computer exporting metrics, we will want Prometheus to gather them.

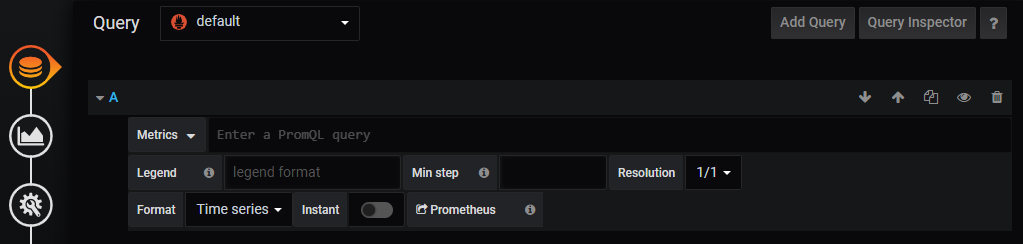

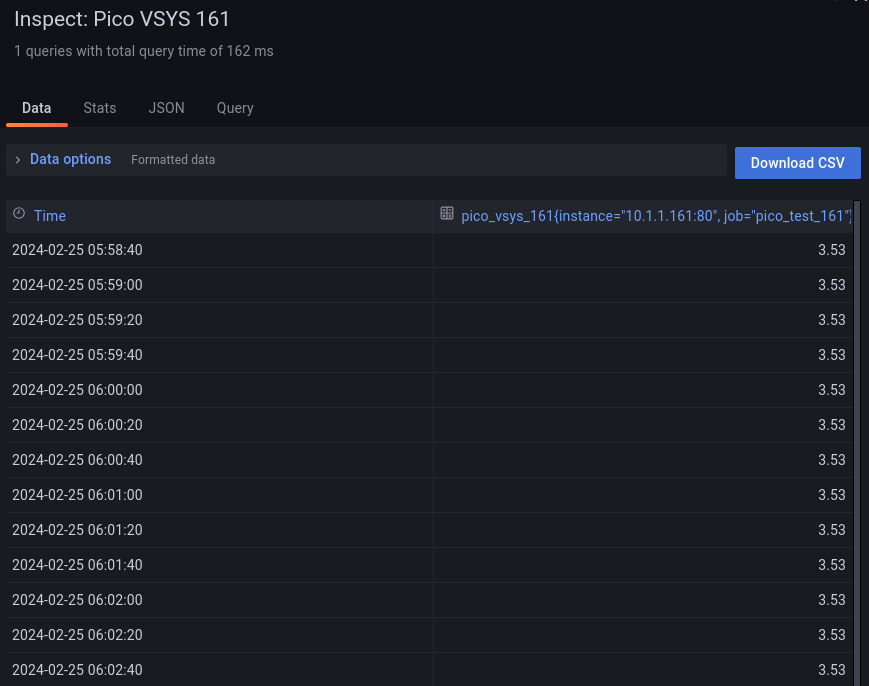

Prometheus Collector Configuration

Prometheus is configured via a YAML (YAML Ain’t Markup Language) file. The Prometheus installation comes with a sample configuration in a file called prometheus.yml (in our case in /home/pi/prometheus/).

The default file contains the following:
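For reference, the sample file shipped with the 2.48 releases should look much like this; the exact comments can vary a little between versions, so treat your own copy as authoritative:

```yaml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
      - targets: ["localhost:9090"]
```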

There are four blocks of configuration in the example configuration file: global, alerting, rule_files, and scrape_configs.

global

The global block controls the Prometheus server’s global configuration. In the default example there are two options present. The first, scrape_interval, controls how often Prometheus will scrape targets. We can still override this for individual targets. In this case the global setting is to scrape every 15 seconds. The evaluation_interval option controls how often Prometheus will evaluate rules. Prometheus uses rules to create new time series and to generate alerts. The global settings also serve as defaults for other configuration sections.
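To illustrate how a job can override the global setting, here is a trimmed-down configuration in which the ‘prometheus’ job is scraped every 5 seconds while any other jobs keep the 15 second default. The 5s value is purely illustrative:

```yaml
global:
  scrape_interval: 15s      # default for every scrape job
  evaluation_interval: 15s  # how often rules are evaluated

scrape_configs:
  - job_name: "prometheus"
    # Overrides the global scrape_interval for this job only.
    scrape_interval: 5s
    static_configs:
      - targets: ["localhost:9090"]
```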

The global options are:

- scrape_interval: How frequently to scrape targets. The default is 1m.